Dual-Slot NVMe Card With Eight E1.S SSDs Achieves 55 GB/s Performance

All eight drives fit in the dual-slot card, making for a compact storage solution with bleeding-edge performance.

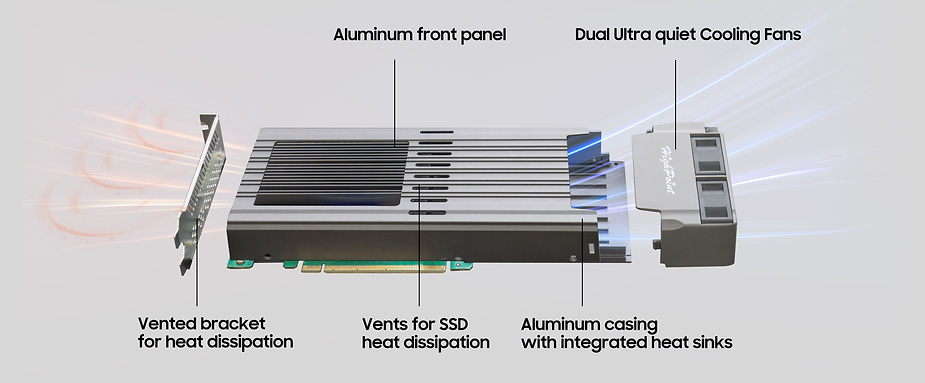

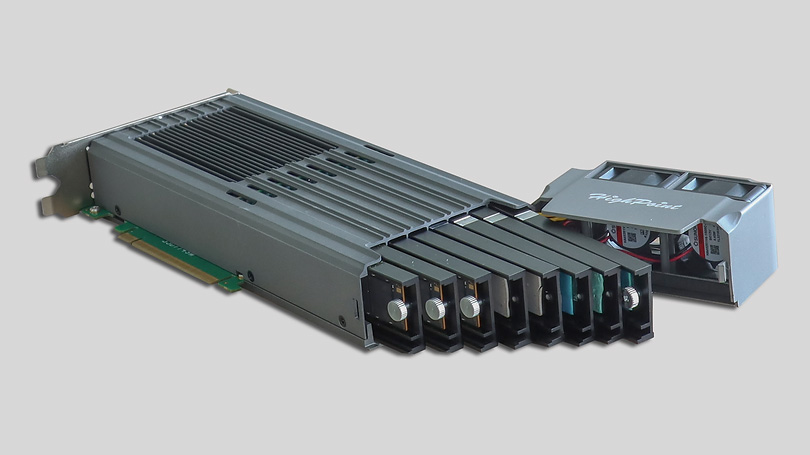

PCIe card specialist HighPoint has developed the first dual-slot NVMe RAID add-in card, the SSD7749E. The card is designed for server applications with support for up to eight E1.S SSDs and a unique dual-fan cooling solution to keep all eight SSDs running cool. The card can act as a RAID card or a simple NVMe expansion card, supporting RAID 0, 1, 10, or non-RAID configurations.

Although dual-slot cards are standard in the consumer space, particularly among graphics cards, dual-slot storage AICs have been non-existent until now. According to HighPoint, the SSD7749E's dual-slot design is primarily meant to improve cooling for future NVMe storage devices with speeds of up to 14GB/s. Drives this fast already exist in the consumer space in the form of PCIe Gen 5 SSDs. Still, these blisteringly fast storage speeds have yet to make their way to the server industry.

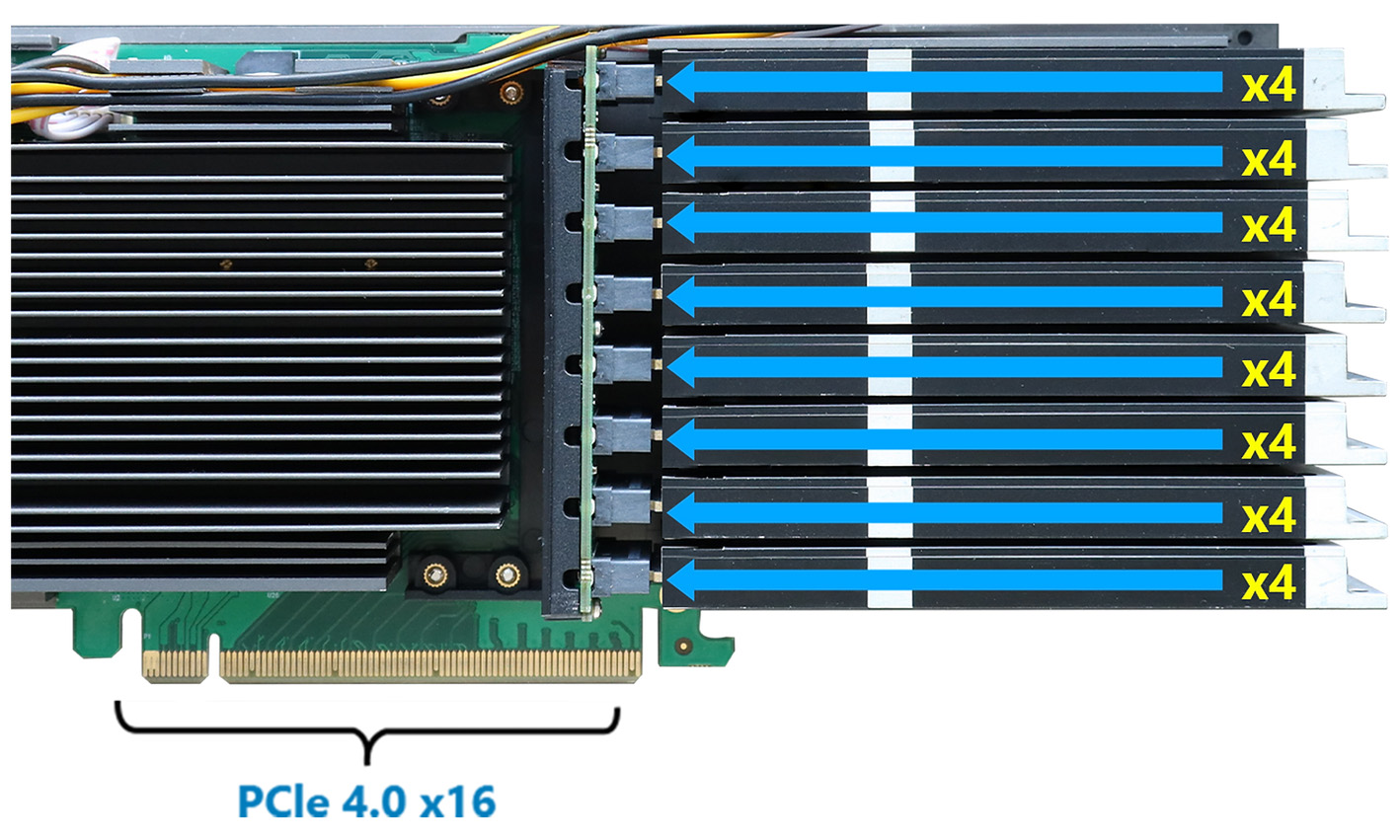

For more details on some of the best PCIe Gen 5 SSDs, check out our list of Best SSDs. To be clear, the SSD7749E does not support PCIe Gen 5; it is limited to Gen 4 speeds over a PCIe Gen 4 x16 interface.

The cooling system on the SSD7749E is highly unconventional, using a "left to right" airflow strategy. Two low-decibel fans mounted on the side push air directly into the card, and the hot air then exhausts out of the opposite side, similar to blower-style GPUs.

This design philosophy differs sharply from that of regular expansion cards, which favor front-mounted fans that cool the card from top to bottom rather than side to side. The benefit of side-to-side cooling is its inherent compatibility with 1U and 2U server chassis, which use the same airflow pattern for chassis cooling.

Besides cooling, the SSD7749E accommodates eight 9.5mm E1.S drives thanks to vertically stacked drive slots. E1.S resembles a slightly larger M.2 drive but was designed explicitly for the server industry. It is reportedly the successor to U.2 and comes in five different sizes to accommodate various storage solutions. The drive slots on the SSD7749E are also tool-less, making drive installation straightforward.

With eight drives installed, the card can reach up to 28 GB/s via a PCIe Gen 4 x16 interface and up to an even more impressive 55 GB/s with HighPoint's Cross-Sync RAID technology. As previously stated, the card supports RAID 0, 1, and 10 arrays along with independent drive configurations. Under lighter workloads or in independent drive configurations, PCIe switching technology allows up to four PCIe Gen 4 lanes to be dynamically allocated to a single drive to boost performance.
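As a rough sanity check on these figures, here is a minimal back-of-the-envelope sketch that computes the theoretical one-direction bandwidth of PCIe Gen 4 links from the 16 GT/s per-lane line rate and 128b/130b encoding, ignoring packet and protocol overhead. It assumes 1 GB = 10^9 bytes and, as an assumption the article does not state, that the 55 GB/s Cross-Sync figure spans two x16 links. The function name `pcie_gen4_gb_per_s` is purely illustrative.

```python
# Back-of-the-envelope PCIe Gen 4 bandwidth check (theoretical, one direction).
# Ignores TLP/DLLP packet overhead, so real-world throughput will be lower.

GEN4_TRANSFERS_PER_LANE = 16e9   # PCIe Gen 4: 16 GT/s per lane
ENCODING_EFFICIENCY = 128 / 130  # 128b/130b line coding (PCIe 3.0 and later)


def pcie_gen4_gb_per_s(lanes: int) -> float:
    """Return theoretical one-way bandwidth in GB/s (1 GB = 1e9 bytes)."""
    bits_per_second = GEN4_TRANSFERS_PER_LANE * ENCODING_EFFICIENCY * lanes
    return bits_per_second / 8 / 1e9


if __name__ == "__main__":
    one_card = pcie_gen4_gb_per_s(16)   # single x16 slot
    per_drive = pcie_gen4_gb_per_s(4)   # one drive given four lanes by the switch
    two_cards = 2 * one_card            # assumption: Cross-Sync spans two x16 links

    print(f"x16 ceiling: {one_card:.1f} GB/s (28 GB/s is {28 / one_card:.0%} of it)")
    print(f"x4 per drive: {per_drive:.1f} GB/s; 16 lanes / 8 drives = {16 // 8} lanes each on average")
    print(f"two x16 links: {two_cards:.1f} GB/s (55 GB/s is {55 / two_cards:.0%} of it)")
```

Under these assumptions, one Gen 4 x16 link tops out near 31.5 GB/s, so 28 GB/s is plausible for a single card, while 55 GB/s only fits within the combined ceiling of two x16 links (about 63 GB/s).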


HighPoint's new dual-slot AIC also features a CPU core performance optimizer that reportedly fixes allocation issues when accessing the drives. Enabling this feature should boost SSD performance. Due to its server roots, the SSD7749E is anything but cheap, costing $1,499.

Aaron Klotz is a contributing writer for Tom’s Hardware, covering news related to computer hardware such as CPUs and graphics cards.

-

bit_user

I was about to applaud the fact that, after nearly 2 years of LGA1700 being on the market, here's something that actually justifies having a PCIe 5.0 x16 slot... until I got to this part:

"With eight drives installed, the card can speed up to 28 GB/s via a PCIe Gen 4 x16 interface and up to an even more impressive 55 GB/s with HighPoint's Cross-Sync RAID technology."

A quick web search indicates that "HighPoint's Cross-Sync RAID technology" involves using a pair of cards as a single device. So, it's misleading to tout this thing as delivering 55 GB/s, when you actually need 2 of them. If true, that makes the title factually inaccurate. Not just misleading, but outright wrong, because it clearly says one card reaches that speed:

"Dual-Slot NVMe Card With Eight E1.S SSDs Achieves 55 GB/s Performance"

What I find a bit funny is that most people running such storage-intensive applications would be using a server chassis with a purpose-built backplane, anyhow. You'd probably never want this in a desktop PC, as it probably sounds like a jet engine.

Makes me wonder what the heck their market is, or what those people would be doing with it. If we're talking networked storage, you'd need 400 Gbps Ethernet to reach its theoretical limit.

"Due to its server roots, the SSD7749E is anything but cheap, costing $1,499."

Compared to the cost of the drives, that's a mere down payment.

"E.1S ... It is reportedly the successor of U.2"

I think U.3 is more of a direct successor to U.2, but much of the market will probably move on to E1.S and E1.L. Then again, judging by what's available today, I see that Micron has completely shifted to U.3, while Solidigm only offers U.2. Both offer E1.S and E1.L, of course.

Vanderlindemedia

I think PCI-E 5 is way more expensive to implement compared to 4. And PCI-E 4 still offers more than enough bandwidth for all sorts of applications. 55GB a second is nice, but what real workload do you use that for?

palladin9479

Read the headline and thought "that sounds like something HighPoint would do", then read the article and confirmed it. Stuff like this is for server / workstation scenarios where you need insane disk capability. CAD/CAM, video rendering, or modeling systems come to mind.

NeoMorpheus

Using this form factor would bring some very interesting designs for NASes like the Synology ones

Li Ken-un

Vanderlindemedia said:
> And PCI-E 4 still offers more than enough bandwidth for all sorts of applications. 55GB a second is nice, but what real workload do you use that for?

If you can’t imagine a use case, it doesn’t mean that one doesn’t exist…

bit_user

NeoMorpheus said:
> Using this form factor would bring some very interesting designs for NASes like the Synology ones

A NAS with 400 Gbps Ethernet? For what? Serving all the video ads to the entire US West Coast?

:D

The bandwidth numbers are just impossibly high for it to make sense as networked storage. Whatever people do with these, it's not that.

palladin9479 said:
> Stuff like this is for server / workstation scenarios where you need insane disk capability. CAD/CAM, video rendering, or modeling systems come to mind.

I know that's the standard answer for what people do with workstations, but I'm still having trouble wrapping my mind around what exactly any of those involves that requires quite so much raw bandwidth.

The only thing I can come up with is that you're using this for analyzing some scientific or GIS-type data set that won't fit in RAM.

USAFRet

bit_user said:
> A NAS with 400 Gbps Ethernet? For what? Serving all the video ads to the entire US West Coast?

:ptdr:

mickey21

bit_user said:
> "With eight drives installed, the card can speed up to 28 GB/s via a PCIe Gen 4 x16 interface and up to an even more impressive 55 GB/s with HighPoint's Cross-Sync RAID technology."
>
> A quick web search indicates that "HighPoint's Cross-Sync RAID technology" involves using a pair of cards as a single device. So, it's misleading to tout this thing as delivering 55 GB/s, when you actually need 2 of them. If true, that makes the title factually inaccurate. Not just misleading, but outright wrong, because it clearly says one card reaches that speed:

I don't have this information myself, but they may be touting the throughput in one direction, since the communication is full duplex capable and may only be stating the throughput in the form of a "read" or "write" operation, and if that is the case, can truthfully say 55GB/s performance in a read/write max capability.

To continue, it may also be that the person writing the article (I can't confirm this myself, of course) did not fully convey that HighPoint's proprietary Cross-Sync RAID allows two x16 slots to be used for even more throughput.

Just my guess...

bit_user

mickey21 said:
> I don't have this information myself, but they may be touting the throughput in one direction, since the communication is full duplex capable and may only be stating the throughput in the form of a "read" or "write" operation, and if that is the case, can truthfully say 55GB/s performance in a read/write max capability.

Instead of speculating, just look at the actual claim. The claim was that 55 GB/s required the use of HighPoint's Cross-Sync RAID technology, as I quoted. If you want to understand their claim, then look into that technology, what it requires, and how it works.

It doesn't help anyone to go making up hypothetical claims, based on hypothetical data, if that's not what they actually claimed. You don't know the architecture of the RAID controller, nor whether or how effectively it can operate in full-duplex mode. Any facts you conjure in your imagination might therefore turn out to be totally false, and therefore misleading.

We value real-world data, which is why testing features so prominently in the actual reviews on this site. Even when we don't have the ability to test the product in question, a responsibility exists to understand & communicate, in as much detail as possible, what the manufacturer is actually claiming.

NeoMorpheus

bit_user said:
> A NAS with 400 Gbps Ethernet?

Never said that, my friend.

I clearly stated form factor, due to the hardware size/configuration.

That said, I would also like to know how an NVMe drive could replace a spinning drive based on storage, not speed. Meaning, do whatever is necessary to make the drives cheaper than rust drives while matching or exceeding their capacity.