Nvidia GeForce RTX 5090 versus RTX 4090 — How does the new halo GPU compare with its predecessor?

Nvidia is betting heavily on AI and new features, even more so than with the 40-series.

The Blackwell RTX 50-series GPUs mark the end of more than two long years of waiting since the RTX 40-series Ada Lovelace GPUs launched in late 2022. Nvidia announced its upcoming GeForce RTX 50-series cards during the CES 2025 keynote, providing the specifications, pricing, and even a preview of performance. Big claims were made, with new technologies like DLSS 4 playing a major role in those claims. As the new halo part, the RTX 5090 takes over from the RTX 4090, boasting more memory, more compute, more features, and more power. It's not yet available, but there's a lot going on that's worth dissecting before cards go on sale.

Will the RTX 5090 be one of the best graphics cards when it arrives? If by "best" you mean "fastest" then yes, there's little doubt it will surpass its predecessor. Will it be twice as fast? Depending on how you want to measure performance, maybe, but that's putting a lot of trust in AI techniques that aren't the same as traditional rendering. Let's dig into the specifications and features that we know about to discuss how the old and new kings of the GPU world stack up.

| Graphics Card | RTX 5090 | RTX 4090 |
|---|---|---|
| Architecture | GB202 | AD102 |
| Process Node | TSMC 4NP | TSMC 4N |
| Transistors (Billion) | 92 | 76.3 |
| Die size (mm^2) | 744 | 608.4 |
| SMs | 170 | 128 |
| GPU Shaders | 21760 | 16384 |
| Tensor Cores | 680 | 512 |
| RT Cores | 170 | 128 |
| Boost Clock (MHz) | 2407 | 2520 |
| VRAM Speed (Gbps) | 28 | 21 |
| VRAM (GB) | 32 | 24 |
| VRAM Bus Width (bits) | 512 | 384 |
| L2 Cache (MB) | 128? | 72 |
| Render Output Units | 240? | 176 |
| Texture Mapping Units | 680 | 512 |
| TFLOPS FP32 (Boost) | 104.8 | 82.6 |
| TFLOPS FP16 (INT8 TOPS) | 1676? (3352) | 661 (1321) |
| Bandwidth (GB/s) | 1792 | 1008 |
| TBP (watts) | 575 | 450 |
| Launch Date | Jan 2025 | Oct 2022 |
| Launch Price | $1,999 | $1,599 |

Let's talk raw specs first. The RTX 5090 has 170 Blackwell Streaming Multiprocessors (SMs), compared to 128 SMs on the 4090. That's a 33% increase in GPU cores — and the number of CUDA cores, tensor cores, RT cores, texture units, etc. is directly tied to the SM counts, so that's basically a 33% increase overall.

Clock speeds also play a role, however, and the 4090 has a 2520 MHz boost clock compared to a 2407 MHz boost clock on the 5090 (based on calculations and Nvidia's official specs). That means for raw compute, the 5090 'only' offers a 27% improvement over the 4090. However, that assumes no other architectural differences exist, which almost certainly isn't a safe assumption.
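The 27% figure can be reproduced from the table with a little arithmetic. The sketch below assumes 128 FP32 shaders per SM (which is what 21760 / 170 and 16384 / 128 both work out to) and two floating-point operations per shader per clock (one fused multiply-add). The function name `fp32_tflops` is purely illustrative.

```python
def fp32_tflops(sm_count, boost_mhz, shaders_per_sm=128, flops_per_clock=2):
    """Theoretical peak FP32 throughput in TFLOPS at the boost clock.

    shaders_per_sm and flops_per_clock are assumptions derived from the
    spec table (shaders / SMs) and from counting an FMA as two operations.
    """
    shaders = sm_count * shaders_per_sm
    # shaders * ops per clock * MHz gives MFLOPS; divide by 1e6 for TFLOPS
    return shaders * flops_per_clock * boost_mhz / 1e6


rtx_5090 = fp32_tflops(sm_count=170, boost_mhz=2407)
rtx_4090 = fp32_tflops(sm_count=128, boost_mhz=2520)
uplift_pct = (rtx_5090 / rtx_4090 - 1) * 100

print(f"RTX 5090: {rtx_5090:.1f} TFLOPS")
print(f"RTX 4090: {rtx_4090:.1f} TFLOPS")
print(f"Uplift:   {uplift_pct:.0f}%")
```

This is a paper number only: it says nothing about how often real games keep all of those shaders busy.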

Memory capacity, speed, and bandwidth are all higher with the RTX 5090, thanks to GDDR7 as well as a bigger, beefier chip. The RTX 5090 has 33% more VRAM than the 4090 on a 33% wider bus (512-bit versus 384-bit), clocked 33% higher (28 Gbps versus 21 Gbps), for a net 78% improvement in raw bandwidth. We don't know the L2 cache size for certain or if there are any other changes that could impact bandwidth, and both of those are important considerations. Still, that's a big increase in raw memory bandwidth.
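For readers who want to check the bandwidth math, here is a minimal sketch using the bus widths and per-pin data rates from the table. Raw bandwidth is simply the bus width in bytes multiplied by the per-pin rate; the helper name `bandwidth_gbs` is made up for this example.

```python
def bandwidth_gbs(bus_width_bits, pin_speed_gbps):
    """Raw memory bandwidth in GB/s: (bus width in bytes) * (Gbps per pin)."""
    return bus_width_bits / 8 * pin_speed_gbps


bw_5090 = bandwidth_gbs(bus_width_bits=512, pin_speed_gbps=28)
bw_4090 = bandwidth_gbs(bus_width_bits=384, pin_speed_gbps=21)
bw_uplift_pct = (bw_5090 / bw_4090 - 1) * 100

print(f"RTX 5090: {bw_5090:.0f} GB/s")
print(f"RTX 4090: {bw_4090:.0f} GB/s")
print(f"Uplift:   {bw_uplift_pct:.0f}%")
```

The two 33% gains multiply rather than add (1.33 x 1.33 is roughly 1.78), which is where the 78% comes from.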

Nvidia is betting big on AI with the RTX 50-series, and that's where we see some of the biggest changes. The RTX 4090 has 661 TFLOPS of FP16 tensor compute (with sparsity), and 1321 TOPS (teraops) of INT8 tensor compute (again with sparsity). That's far more than AMD's RX 7900 XTX, which only offers 123 TFLOPS / TOPS of FP16 / INT8 compute (without sparsity). But it still pales in comparison to the RTX 5090.

We're not certain on the FP16 figure, but assuming Nvidia follows the same ratios as the prior generation, the RTX 5090 will deliver up to 1676 TFLOPS of tensor FP16 compute, and double that for 3352 TOPS of tensor INT8 compute (both with sparsity). That's a 154% increase (2.54X) in AI computational performance with the new generation. And Nvidia intends to put the AI potential to good use.
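The uplift quoted above is straightforward to verify from the table values. The sketch below takes the INT8 figure of 3352 TOPS as the starting point and derives FP16 by assuming the same 2:1 INT8-to-FP16 ratio the RTX 4090 shows; that ratio is the article's assumption, not a confirmed spec, and the variable names are illustrative.

```python
# Tensor throughput with sparsity, taken from the spec table.
RTX_4090_FP16_TFLOPS = 661
RTX_4090_INT8_TOPS = 1321
RTX_5090_INT8_TOPS = 3352

# Assumption: Blackwell keeps Ada's roughly 2:1 INT8-to-FP16 ratio.
int8_to_fp16_ratio = round(RTX_4090_INT8_TOPS / RTX_4090_FP16_TFLOPS)
rtx_5090_fp16_tflops = RTX_5090_INT8_TOPS / int8_to_fp16_ratio

fp16_speedup = rtx_5090_fp16_tflops / RTX_4090_FP16_TFLOPS
fp16_increase_pct = (fp16_speedup - 1) * 100

print(f"Assumed RTX 5090 FP16: {rtx_5090_fp16_tflops:.0f} TFLOPS")
print(f"Speedup: {fp16_speedup:.2f}X ({fp16_increase_pct:.0f}% increase)")
```

If Nvidia's ratios turn out to differ on Blackwell, the FP16 estimate and the resulting speedup change with them.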

As we've discussed elsewhere, Nvidia DLSS 4 will leverage the new features in Blackwell to power its AI algorithms. Multi frame generation will "predict the future" and generate up to three additional frames from one rendered (and potentially upscaled) frame. Because it's using frame projection rather than interpolation, the latency penalty shouldn't be all that different from what we've seen already with DLSS 3 frame generation, but the additional frames will make everything look smoother.

How does that actually feel? We haven't had a chance to test it ourselves, so we'll withhold any final judgement, but we're quite skeptical. It will probably work decently, but one rendered frame based on user input followed by three AI generated frames with no new user input won't have the same feel as a game where every frame takes any new user input and gets fully rendered.
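To put rough numbers on that concern, here is a deliberately simplified model of frame cadence. It assumes user input is only sampled on fully rendered frames and ignores any added latency from the generation step itself; the function names and the 30 fps example are hypothetical, not measurements.

```python
def displayed_fps(rendered_fps, generated_per_rendered):
    """Frames shown per second when each rendered frame is followed by
    a fixed number of AI-generated frames."""
    return rendered_fps * (1 + generated_per_rendered)


def frame_interval_ms(fps):
    """Time between consecutive frames in milliseconds."""
    return 1000 / fps


rendered = 30  # hypothetical base frame rate before frame generation
shown = displayed_fps(rendered, generated_per_rendered=3)

print(f"Displayed:        {shown} fps, a new image every {frame_interval_ms(shown):.1f} ms")
print(f"Input is sampled: {rendered} times per second, every {frame_interval_ms(rendered):.1f} ms")
```

Under these assumptions the display gets four times as many images, while the interval between frames that reflect new input stays exactly where it was without frame generation.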

There are other changes coming as well, however, some exclusive to the Blackwell RTX 5090 and others that will work with older RTX cards. RTX Neural Materials appears to use AI compression and learning to reduce memory requirements for the textures and material descriptions used in games by about a third. However, the hardware pipeline needs to be able to use AI alongside the shaders to have this work, so it will be another 50-series exclusive.

DLSS Transformer upscaling on the other hand uses a newly trained network built off of AI transformers, rather than the convolutional neural network (CNN) used with earlier DLSS upscaling algorithms. Transformers have been at the heart of the AI revolution, powering things like ChatGPT, DALL-E, and other AI content generators. The sample videos Nvidia has shown of old versus new DLSS upscaling look very impressive, and we're eager to try it out in person. What's more, the new DLSS Transformer algorithm apparently runs faster than the older CNN version, and it will be available for all RTX GPUs.

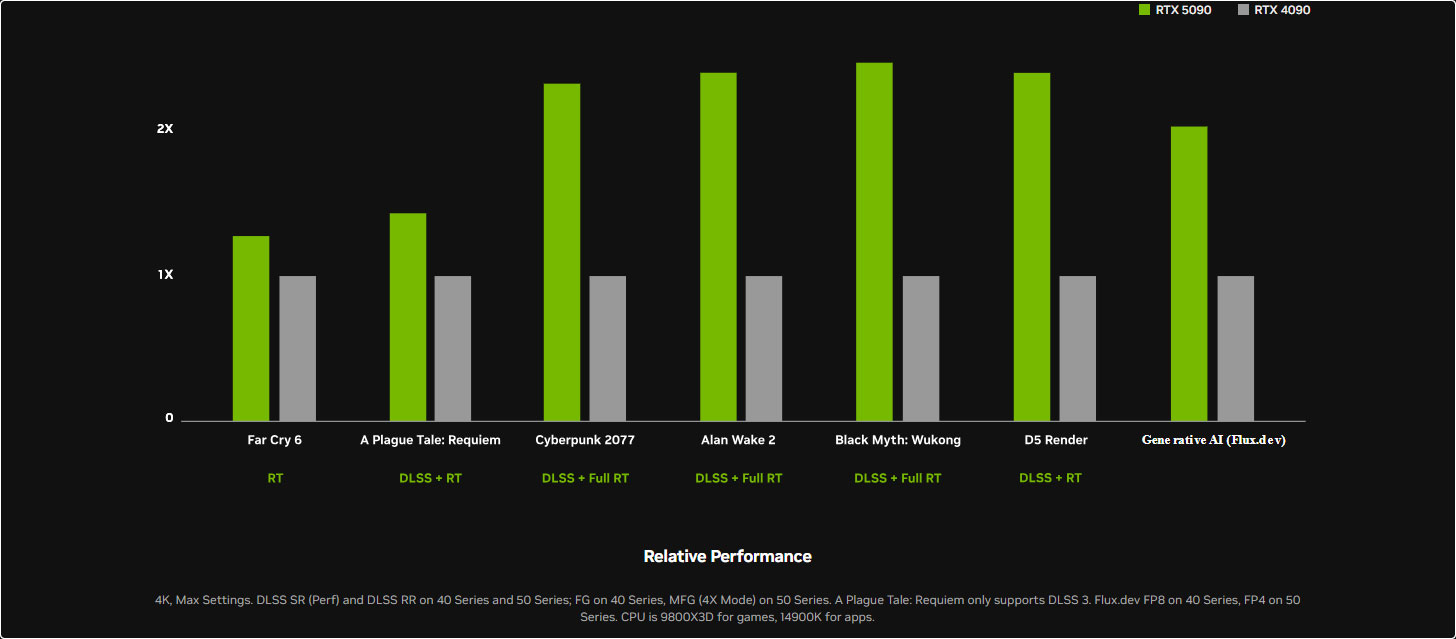

Nvidia's own performance preview, where it suggests the RTX 5090 can be up to twice as fast as the RTX 4090, also shows a couple of games without DLSS 4 (or even DLSS 3, in one instance) to muddy the waters. Looking at the Far Cry 6 results, it appears the 5090 will offer about 27% more performance than the 4090 in games where the new AI features aren't part of the equation. In A Plague Tale: Requiem, the gap increases to about 43% (yes, I'm counting pixels!). In the games that use DLSS 4 MFG (versus DLSS 3 FG), meanwhile, Nvidia shows a 2.3X–2.45X improvement.

Does that mean the RTX 5090 is or isn't worth the higher price? We think it will largely depend on what you're doing. There will almost certainly be a lot of people and companies that are interested in AI who will jump at the chance to pay $1,999 for an RTX 5090. Those same groups have been buying RTX 4090 cards for the past couple of years. In generative AI testing, the 5090 also showed a massive 2X jump in performance using Flux.dev.

But if you're mostly playing games, and you don't love frame generation? It's probably not a bad idea to sit back and wait to see how things develop for a bit. Maybe DLSS 4 in actual use will look and feel great. Or maybe pulling up to 575W of power through the new 16-pin connector will result in Meltgate Part 2. But however you slice it, two grand is a lot of money to spend on a gaming GPU — and you'll definitely want the rest of your PC to be up to the task, as powering the RTX 5090 and providing a steady stream of game updates will need a very potent PC.


Jarred Walton is a senior editor at Tom's Hardware focusing on everything GPU. He has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.


Elusive Ruse

Thank you for the analysis Jarred, I'm eying the 5080 for my next upgrade and its pricing is reasonable all things considered; yet I'm not sure if the 16GB VRAM on an 80 class is still a good deal even if it's GDDR7. I have a feeling there's a refresh or an 80Ti in the works with 20/24GB coming within the year.

Thunder64

Elusive Ruse said: Thank you for the analysis Jarred, I'm eying the 5080 for my next upgrade and its pricing is reasonable all things considered; yet I'm not sure if the 16GB VRAM on an 80 class is still a good deal even if it's GDDR7. I have a feeling there's a refresh or an 80Ti in the works with 20/24GB coming within the year.

5080 with 16GB of RAM should be boycotted. I'd prefer the 5070 Ti as it has the same RAM and close enough performance. I strongly expect to see a 5080 Super/Ti with 20/24GB of RAM at some point.

bit_user

The article said: Looking at the Far Cry 6 results, it appears the 5090 will offer about 27% more performance than the 4090 in games where the new AI features aren't part of the equation. In A Plague Tale: Requiem, the gap increases to about 43%

FWIW, I had predicted "37% to 44% ... I feel more comfortable with the lower end of that range":

https://forums.tomshardware.com/threads/dont-waste-money-on-a-high-end-graphics-card-right-now-%E2%80%94-rtx-4090-is-a-terrible-deal.3862012/post-23382209

While 27% is a fair bit less than I estimated, 43% falls directly in my range.

I also predicted that it would be a better value in perf/$ than the RTX 4090, which it just barely manages. They increased the list price by 25%, so if 27% is truly the typical uplift for non-DLSS usage, then it's not actually a worse deal. However, we have yet to see what near-term availability & street prices end up being like.

bit_user

Elusive Ruse said: I have a feeling there's a refresh or an 80Ti in the works with 20/24GB coming within the year.

It's possible there could be a 24 GB version, since Samsung has already developed 24-Gbit GDDR7 dies.

https://news.samsung.com/global/samsung-develops-industrys-first-24gb-gddr7-dram-for-next-generation-ai-computing

Normally, I'd say "no way", because the ASIC certainly has only a 256-bit memory interface on it and the price differential between the RTX 5080 and RTX 5090 is so big that I doubt they would sell partially-disabled GB202 dies as an RTX 5080 Super. Maybe as an RTX 5080 Ti, but it would cost more.

JarredWaltonGPU

bit_user said: It's possible there could be a 24 GB version, since Samsung has already developed 24-Gbit GDDR7 dies.

https://news.samsung.com/global/samsung-develops-industrys-first-24gb-gddr7-dram-for-next-generation-ai-computing

Normally, I'd say "no way", because the ASIC certainly has only a 256-bit memory interface on it and the price differential between the RTX 5080 and RTX 5090 is so big that I doubt they would sell partially-disabled GB202 dies as an RTX 5080 Super. Maybe as an RTX 5080 Ti, but it would cost more.

Oh, I am probably 90-ish percent certain that the mid-cycle refresh for Blackwell (meaning sometime in early to mid 2026) will be the same GPUs, possibly with more SMs enabled in some cases, but with 24Gb GDDR7 in place of the current 16Gb models. So every GPU tier could get a 50% upgrade in VRAM capacity. Whether they're called "5080 Super" or "5080 Ti" or something else doesn't really matter.

The exception to this, possibly, is the 5060 Ti and 5060. Depending on when those launch, it's conceivable that Nvidia goes straight to 24Gb chips and ends up with the same 12GB as the 5070, but with 33% less bandwidth due to using a 128-bit interface.

That's my guess as to what will happen at least. And I've definitely been wrong on this stuff once or twice. LOL

Peksha

Elusive Ruse said: Thank you for the analysis Jarred, I'm eying the 5080 for my next upgrade and its pricing is reasonable all things considered; yet I'm not sure if the 16GB VRAM on an 80 class is still a good deal even if it's GDDR7. I have a feeling there's a refresh or an 80Ti in the works with 20/24GB coming within the year.

Based on linear scaling, we see that there are no architectural improvements in the shader compute unit. Even the power and cost scale linearly.

For the 5080, this means ~ no improvements in gaming performance for the same price...

Bad release?

thestryker

Quite frankly I would be surprised if there wasn't a mid cycle refresh for anything $500+ with 24Gb memory IC. While it's a low volume part they're already using it in the mobile 5090 and MSI had an oopsie showing a 24GB capacity for the 5080.

There also ought to be plenty of room between the 5080 and 5090 for both a new SKU (say a Ti) and a Super refresh with higher clocks. If they pursue the same strategy as with the 40 series and retire the cards being refreshed, I could see clock/core increases across the stack if the die allows for it.

I find looking at this generation a lot different since I decided after the B580 I'd wait to see what Celestial could deliver. Now it's mostly down to an academic type interest and hope they aren't screwing over people who actually need a new card at a reasonable price like this last generation did.

Lucky_SLS

JarredWaltonGPU said: Oh, I am probably 90-ish percent certain that the mid-cycle refresh for Blackwell (meaning sometime in early to mid 2026) will be the same GPUs, possibly with more SMs enabled in some cases, but with 24Gb GDDR7 in place of the current 16Gb models. So every GPU tier could get a 50% upgrade in VRAM capacity. Whether they're called "5080 Super" or "5080 Ti" or something else doesn't really matter.

That's my guess as to what will happen at least. And I've definitely been wrong on this stuff once or twice. LOL

This is exactly what I need. My 4070Ti Super is struggling with modded SkyrimVR. 98% VRAM usage with medium to low LODs and shadows :(

Need some love for VR tech/features...

AngelusF

"A massive 2X jump in performance using Flux.dev"? Read the small print - the 4090 was using FP8 but the 5090 was using FP4. No wonder it was twice as fast.

Elusive Ruse

Peksha said: Based on linear scaling, we see that there are no architectural improvements in the shader compute unit. Even the power and cost scale linearly.

For the 5080, this means ~ no improvements in gaming performance for the same price...

Bad release?

I highly doubt there would be no gaming performance bump, I dunno how you came to this conclusion or maybe I didn’t understand your post?