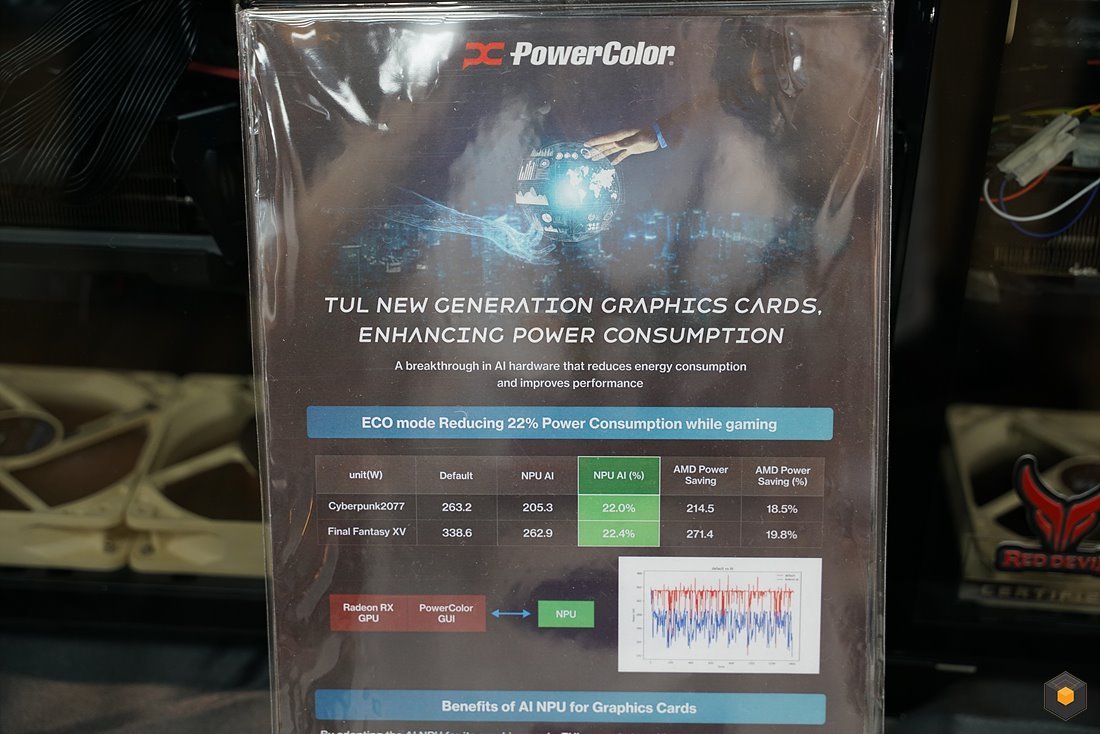

PowerColor's new tech uses the NPU to reduce gaming power usage — vendor-provided benchmarks show up to 22.4% lower power consumption

Gamers may have a reason to care about NPUs now.

PowerColor (via harukaze5719) is developing a new technology called "Edge AI" that enables AMD Radeon RX graphics cards to use NPUs (Neural Processing Units) to reduce power consumption.

In the vendor's demonstration, Cyberpunk 2077's power consumption dropped from 263.2W to 205.3W, a 22% reduction. That is noticeably better than AMD's own power-saving mode, which cut consumption by 18.5%. PowerColor also provided numbers for Final Fantasy XV, where the NPU-assisted savings came within a percentage point of the Cyberpunk 2077 result.
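The percentages above are simple relative reductions measured against the default-settings wattage. As a quick sanity check, the minimal Python sketch below recomputes them from the wattages PowerColor supplied for both games. The `RESULTS` table and the `percent_saved` helper are illustrative names only; the sketch does not reflect how PowerColor itself measured or calculated its results.

```python
# Vendor-reported board power in watts (PowerColor's prototype Radeon RX 7900 XTX).
# The dictionary layout and key names are illustrative, not PowerColor's.
RESULTS = {
    "Cyberpunk 2077": {"default": 263.2, "eco": 214.5, "npu": 205.3},
    "Final Fantasy XV": {"default": 338.6, "eco": 271.4, "npu": 262.9},
}


def percent_saved(baseline_w: float, new_w: float) -> float:
    """Return the power reduction relative to the baseline, as a percentage."""
    return (baseline_w - new_w) / baseline_w * 100


for game, watts in RESULTS.items():
    # Compare both power-saving modes against the default settings.
    eco = percent_saved(watts["default"], watts["eco"])
    npu = percent_saved(watts["default"], watts["npu"])
    print(f"{game}: AMD ECO mode {eco:.1f}%, Edge AI (NPU) {npu:.1f}%")
```

Running it prints about 18.5% (ECO) and 22.0% (NPU) for Cyberpunk 2077, and 19.8% and 22.4% for Final Fantasy XV; the latter is where the headline's "up to 22.4%" figure comes from.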

PowerColor says Edge AI works through communication between the graphics card and PowerColor's own GUI software, which together bridge the gap to the NPU. According to IT Media, the Computex testbench had the NPU connected externally to the GPU, which leaves us wondering whether similar power savings could be achieved with an NPU on the CPU, such as those in the Ryzen 8000 series. PowerColor also claimed in its press material that turning Edge AI on increases performance slightly. However, tech reviewers on-site confirmed a 10% FPS drop on the more efficient NPU test system compared with the standard GPU.

PowerColor integrated NPU to Radeon GPU. (Still developing) Through AI, they claim power consumption decreased 22%. More saved than AMD Power Saving. pic.twitter.com/9I8iEikotD (June 7, 2024)

NPUs seem to be here to stay, and their potential to improve gaming efficiency would be a massive win for the tech on desktops. NPUs have been a standard offering on high-end laptops since 2023, when Intel's Core Ultra (Meteor Lake) processors were first released. NPUs make perfect sense for mobile applications: AI computations are extremely processor-heavy, so built-in AI accelerators fit well in laptops, which need high efficiency to conserve battery life. The desktop market has less need for NPUs, since desktops typically run dedicated graphics cards that can pump out AI calculations with more raw power, though less efficiently than NPUs.

AMD first brought NPUs to desktops with its Ryzen 8000G series, but it has no plans to include NPUs in its freshly announced Ryzen 9000 series. Depending on how PowerColor's continued research on Edge AI goes, we could plausibly see PowerColor Radeon graphics cards with a built-in NPU released this year, or perhaps a resurgence of desktop CPU NPUs that can be leveraged for gaming efficiency in the same way. We don't know when or where we'll next see Edge AI spring up in PowerColor's or AMD's stable.


Sunny Grimm is a contributing writer for Tom's Hardware. He has been building and breaking computers since 2017, serving as the resident youngster at Tom's. From APUs to RGB, Sunny has a handle on all the latest tech news.


The NPU used here regulates the power supply to the GPU based on an algorithm.

This offloads some of the burden from the card's voltage regulator module (VRM) and MOSFETs, which is why we see power savings as well as lower temperatures.

The NPU itself draws roughly 2.5 watts, though, and is soldered to the video card.

So this is how it stacks up, based on the company's testing of Cyberpunk 2077 and Final Fantasy XV on a "prototype" Radeon RX 7900 XTX:

| Setting | Cyberpunk 2077 | Final Fantasy XV |
|---|---|---|
| Default power settings | 263.2W | 338.6W |
| AMD ECO mode | 214.5W | 271.4W |
| Power Saving (NPU) | 205.3W | 262.9W |

"We don't know when or where we'll next see Edge AI spring up in PowerColor's or AMD's stable."

BTW, this is PowerColor's own in-house technology (I confirmed this with a company rep) and has nothing to do with AMD. So it seems unlikely that other board partners/AIBs will implement this tech in their upcoming GPUs in the near future, unless of course they are interested.

However, PowerColor may soon release a consumer version of this modified RX 7900 XTX, but it won't be cheap.

They also reiterated that AMD's upcoming RX 8000 series will NOT be released until early 2025 (unless plans change), so they will focus on the current GPU lineup for now.

parkerthon

I was just about to wonder aloud why we couldn't use this technology to overclock the GPU, but now I understand that it doesn't affect the GPU chip's thermals/power consumption, just the supporting power regulation facets on the board. Yet another interesting application of AI. Sounds like adding this NPU is expensive though?

Using an NPU alongside a GPU will certainly add to the overall cost. But how feasible and useful this feature really is in real-world gaming remains to be seen.

PowerColor might soon release a consumer version of this modified RX 7900 XTX GPU in China, though, so I expect to see some gaming benchmarks alongside power consumption values.

Pierce2623

parkerthon said: I was just about to wonder aloud why we couldn't use this technology to overclock the GPU, but now I understand that it doesn't affect the GPU chip's thermals/power consumption, just the supporting power regulation facets on the board. Yet another interesting application of AI. Sounds like adding this NPU is expensive though?

Small NPUs are very cheap. A Google Coral on an M.2 card costs about $35 and is probably sufficient for such a small, simple model. They can probably buy the actual chips in bulk for $15 apiece.

LabRat 891

Are we sure this isn't the GPU being 'restrained' to a smoother, lower framerate, then using the NPU for AFMF frame generation?

The power savings seem in line with fake frames and GPU clock/rendering moderation, not 'enhanced AI power control'.

bit_user

Pierce2623 said: Small NPUs are very cheap. A Google Coral on an M.2 card costs about $35 and is probably sufficient for such a small, simple model. They can probably buy the actual chips in bulk for $15 apiece.

It's probably way cheaper and lower-end than that. I'd bet it's just a DSP being used in some sort of digi-VRM.

Amdlova

Another thing to be hacked or be a problem...

And it adds cost to the hardware itself.

Better just to buy an Nvidia :)

bit_user

Amdlova said: Another thing to be hacked or be a problem...

Hacked... maybe, like if it's on PCIe or mapped into the GPU's address range. I doubt either.

Amdlova said: And it adds cost to the hardware itself.

Could be, but it might just be a glorified digi-VRM, in which case the cost could be negligible.

Amdlova said: Better just to buy an Nvidia :)

Okay, fanboy. ;)

CmdrShepard

bit_user said: It's probably way cheaper and lower-end than that. I'd bet it's just a DSP being used in some sort of digi-VRM.

I bet it's sentient and won't let you play power-hungry games if it gets upset.

/s