AMD Radeon R9 Nano Review

Small, fast and pricey — that’s how AMD wants to establish a whole new product category. But does the Radeon R9 Nano have the performance to back up its price tag?

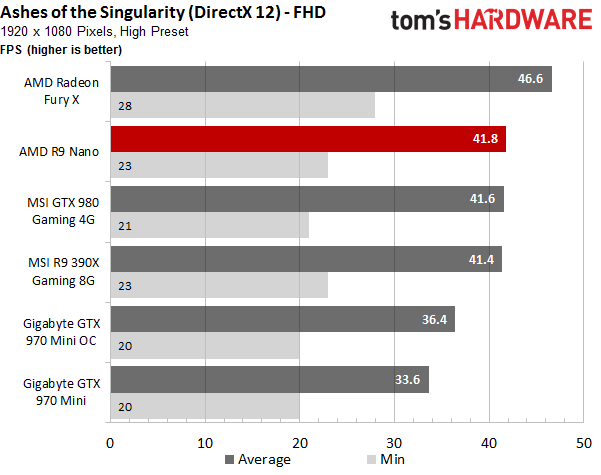

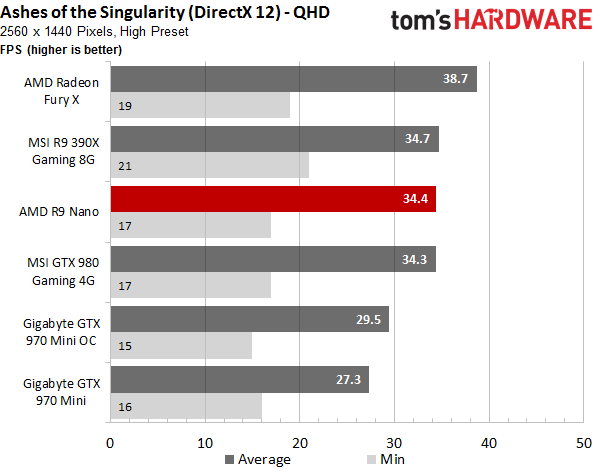

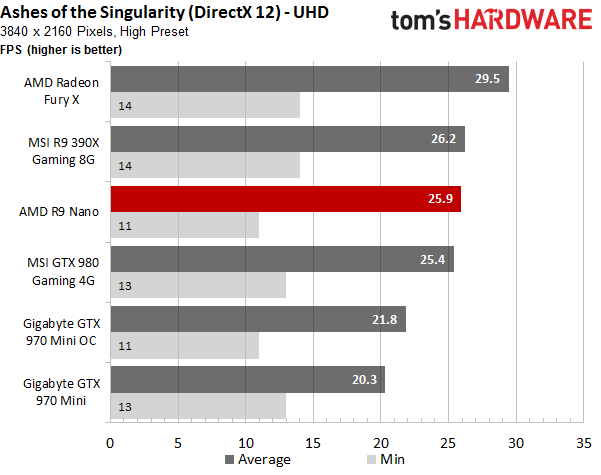

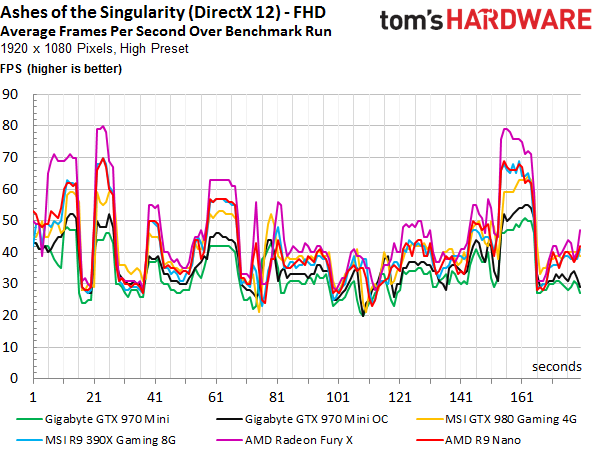

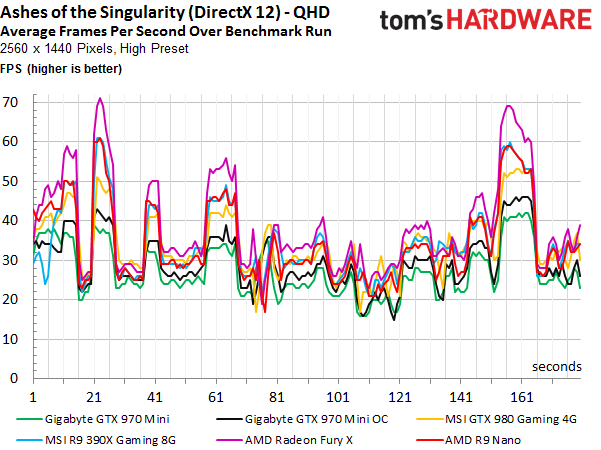

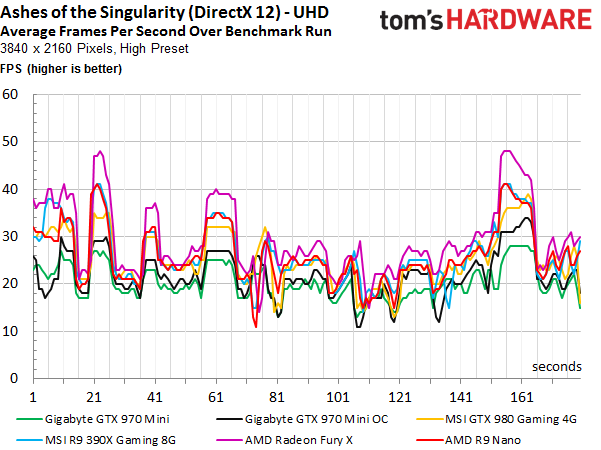

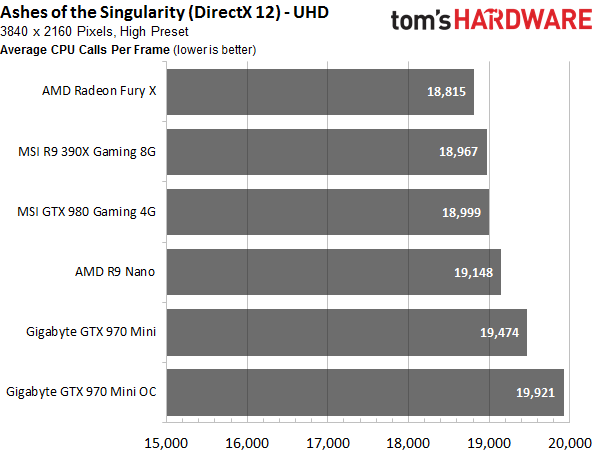

Results (DirectX 12): Ashes Of The Singularity

Since there are no mature DirectX 12 games yet, we had to fall back on Ashes of the Singularity, which is currently in pre-beta. Due to its lack of optimization, this benchmark doesn’t have the same validity as our other metrics. It should still provide us with a preview of things to come, though. We originally meant to add Ark: Survival Evolved to our suite as well, but ultimately had to skip it since the promised DirectX 12 patch keeps getting pushed back.

This brings us to the second point of our preamble, which concerns a somewhat touchy subject that began with an interesting discussion on the overclock.net forums. In short, the statements of an Oxide Games employee, as well as Nvidia’s and AMD’s reactions to them, say a lot if you read between the lines and add the content of a few individual posts that were apparently deleted.

The exchange is about DirectX 12’s asynchronous computing/shading function, which allows for a parallel and, more importantly, asynchronous (which is to say independent of order) execution of computational (computing) and graphical (shading) tasks. This function can massively cut down latency if it’s implemented well and fully supported by the hardware.

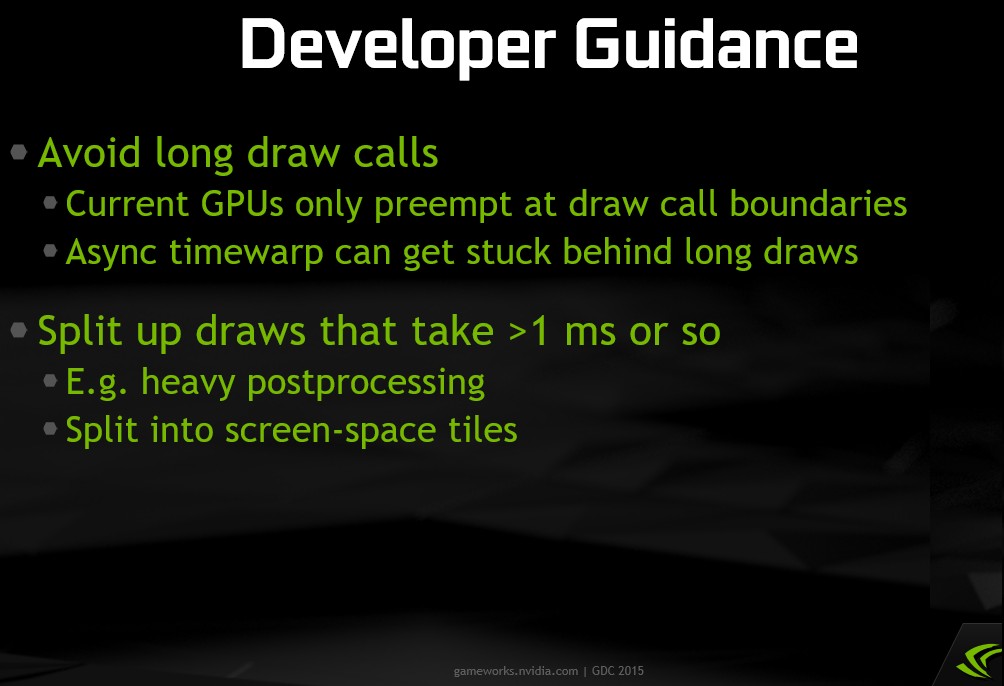

To quickly summarize, the evidence points toward an issue with all of Nvidia’s graphics cards, starting with Fermi. They seem to have an efficiency problem with this specific function, since they can only execute one context at a time at runtime. That alone isn't news, especially since Nvidia has already pointed it out. It also fits the developer's advice that command chains should generally be kept as short as possible, so that tasks don't pile up and end up being executed out of priority order.

The reason seems to be that Nvidia designed its current GPUs so that the draw level always gets priority, and context switches for pure compute operations can only be inserted once it has finished. This means that the graphics card has to wait until the end of a call before it can switch contexts again.
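To make the latency argument concrete, here is a deliberately simplified toy model. It assumes a single draw task and a single compute task with made-up durations, and it treats asynchronous execution as perfect overlap. Real hardware scheduling is far more involved, so read this as an illustration of the idea rather than a description of any GPU.

```python
def serialized_time(draw_ms, compute_ms):
    """Compute work can only start once the draw call has finished."""
    return draw_ms + compute_ms


def overlapped_time(draw_ms, compute_ms):
    """Idealized asynchronous case: both tasks run side by side."""
    return max(draw_ms, compute_ms)


# Hypothetical durations, chosen only for illustration.
draw_ms, compute_ms = 10.0, 4.0

print("serialized:", serialized_time(draw_ms, compute_ms), "ms")
print("overlapped:", overlapped_time(draw_ms, compute_ms), "ms")
```

In this model, the benefit of overlapping is capped by the shorter of the two tasks, which is why the gain depends heavily on how the workload is split.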

We can’t really judge the overarching implications of this issue, since Nvidia hasn’t officially commented on it yet. What we do have, however, are our benchmark results, as well as the results of an interpreter that we programmed to automatically analyze the log files. It outputs the number of CPU calls and the ratio between those calls and the number of frames that were actually rendered, giving us the reason why Nvidia’s benchmark results are (currently) as bad as they are.
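A log interpreter of this kind can be sketched in a few lines. The log format below is an assumption made purely for illustration (the benchmark's real log layout is not shown here), and the names `LINE_RE` and `summarize_log` are hypothetical.

```python
import re

# Hypothetical log line format (an assumption, not the real benchmark log):
#   frame=12 cpu_calls=3400 frame_ms=16.9
LINE_RE = re.compile(r"frame=(\d+)\s+cpu_calls=(\d+)\s+frame_ms=([\d.]+)")


def summarize_log(lines):
    """Return total CPU calls, rendered frames and CPU calls per frame."""
    total_calls = 0
    frames = 0
    for line in lines:
        match = LINE_RE.search(line)
        if match is None:
            continue  # skip lines that do not describe a rendered frame
        total_calls += int(match.group(2))
        frames += 1
    ratio = total_calls / frames if frames else 0.0
    return {"cpu_calls": total_calls, "frames": frames, "calls_per_frame": ratio}


sample = [
    "frame=1 cpu_calls=3000 frame_ms=16.0",
    "benchmark scene change",
    "frame=2 cpu_calls=5000 frame_ms=20.0",
]
print(summarize_log(sample))
```

The calls-per-frame ratio is the more telling number, since a raw call count grows with frame rate alone.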

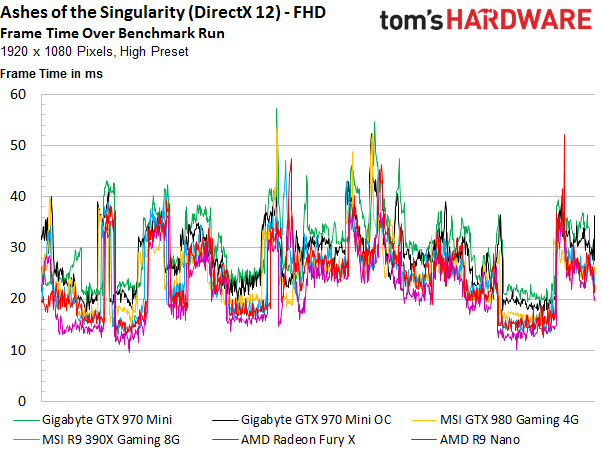

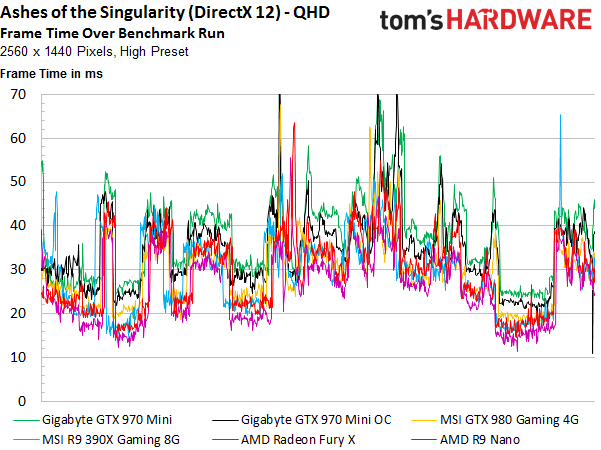

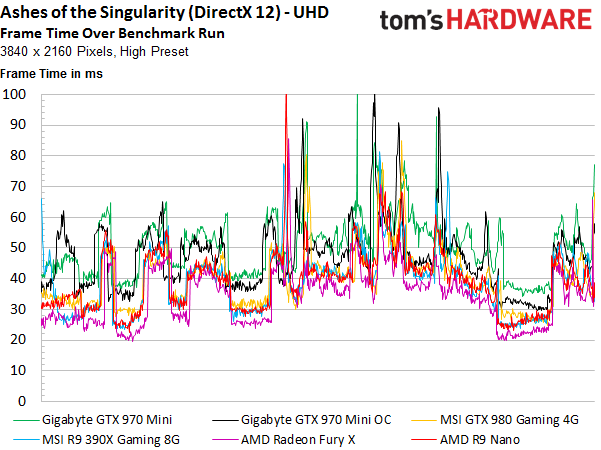

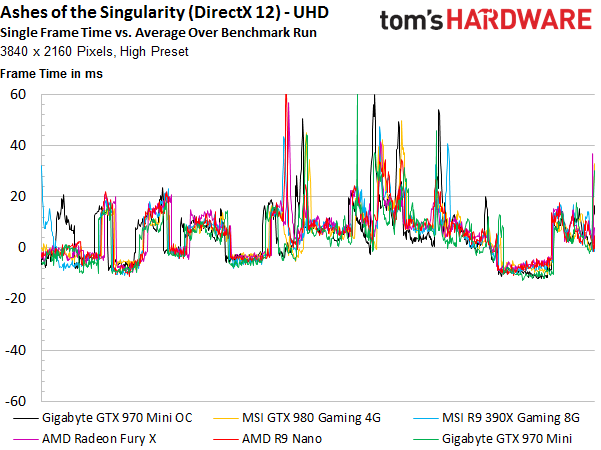

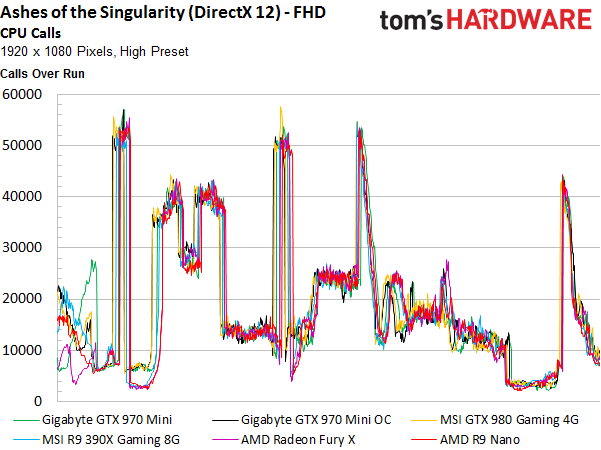

The different views of the individual frame render times are interesting as well. The total times per frame result in a curve that reflects quite well how demanding the individual benchmark scenes are.


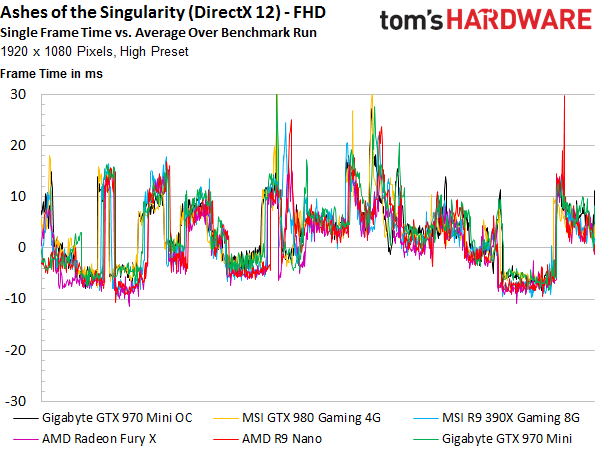

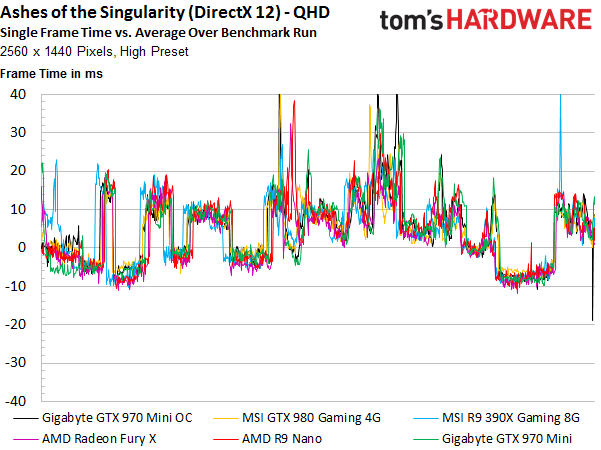

A similar result is achieved after normalizing the frame render times that make up the curve by subtracting the overall average frame time. This provides a comparable impression of the different loads during the benchmark.
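The normalization described here amounts to subtracting the run's mean frame time from every sample. A minimal sketch, using made-up frame times and a hypothetical function name:

```python
def normalize_frame_times(frame_times_ms):
    """Subtract the overall average frame time from each sample."""
    if not frame_times_ms:
        return []
    mean = sum(frame_times_ms) / len(frame_times_ms)
    return [t - mean for t in frame_times_ms]


# Made-up frame times in milliseconds, for illustration only.
frame_times_ms = [16.0, 18.0, 20.0, 26.0]
print(normalize_frame_times(frame_times_ms))
```

Values above zero mark frames that took longer than average, values below zero mark faster ones, so cards with different average frame rates can be compared on the same axis.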

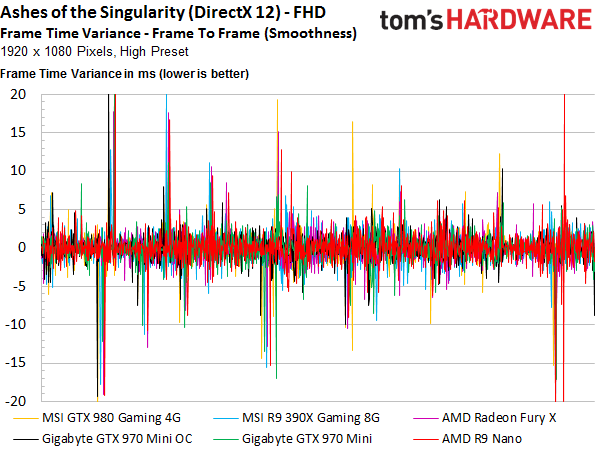

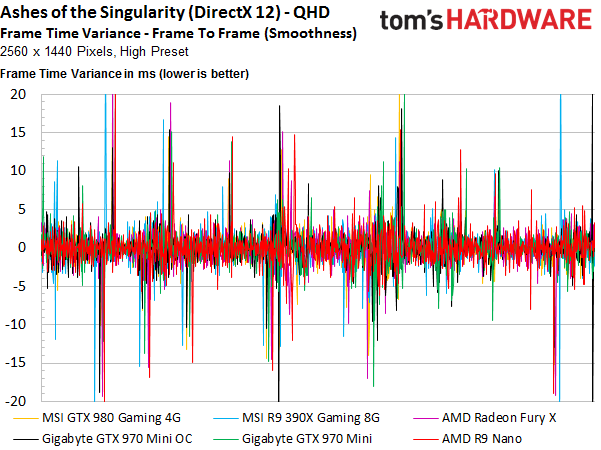

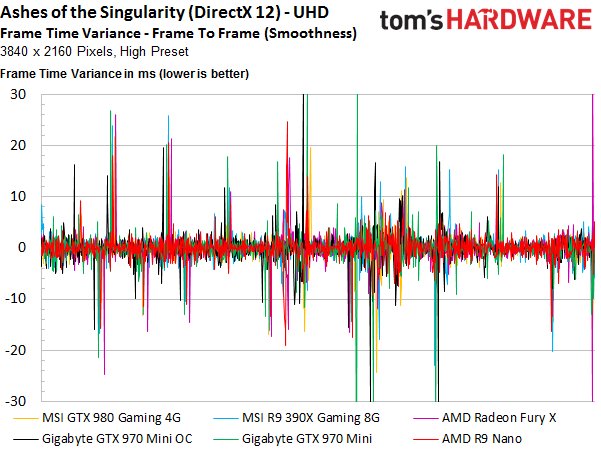

Finally, let’s take a look at the smoothness, which is to say the ratio between the individual frame render times. Large jumps should only occur during scene changes. But, once again, the results show that all of the graphics cards encounter massive jumps that result in perceptible stuttering, no matter how good their performance looks in the FPS bar graph. Not even minimum and maximum frame rates help with this.
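One way to express this kind of smoothness metric in code is to divide each frame time by the one before it and flag large deviations from 1.0. This is a sketch under assumptions: the exact formula behind the charts is not spelled out, and the threshold of 1.5 as well as the function names are arbitrary choices for illustration.

```python
def frame_time_ratios(frame_times_ms):
    """Ratio of each frame time to the previous frame time."""
    return [cur / prev for prev, cur in zip(frame_times_ms, frame_times_ms[1:])]


def find_jumps(frame_times_ms, threshold=1.5):
    """Indices of frames whose time changed by more than `threshold`x
    relative to the previous frame, in either direction."""
    jumps = []
    for i, ratio in enumerate(frame_time_ratios(frame_times_ms), start=1):
        if ratio > threshold or ratio < 1.0 / threshold:
            jumps.append(i)
    return jumps


# Made-up frame times in milliseconds, for illustration only.
frame_times_ms = [16.0, 16.0, 32.0, 16.0]
print(frame_time_ratios(frame_times_ms))
print(find_jumps(frame_times_ms))
```

An average FPS figure hides exactly these frame-to-frame swings, which is why they can be perceptible as stutter even when the bar graph looks fine.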

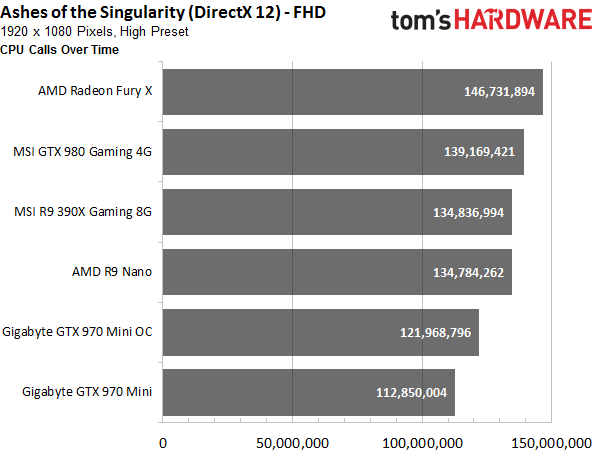

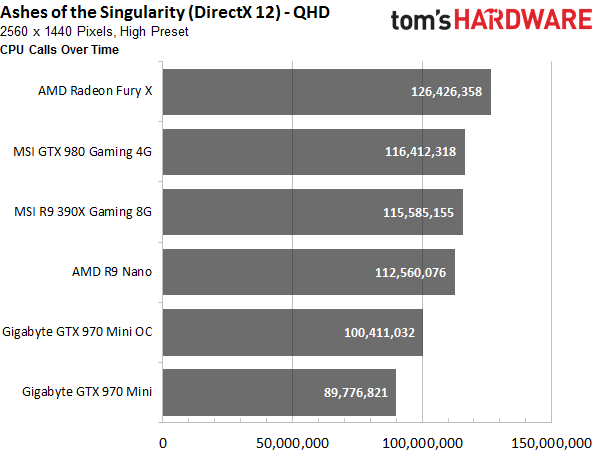

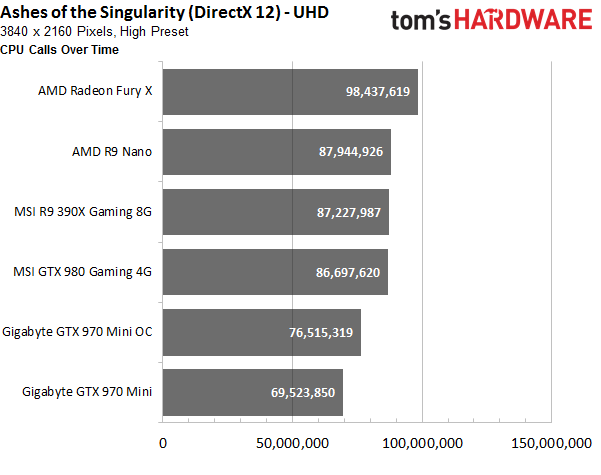

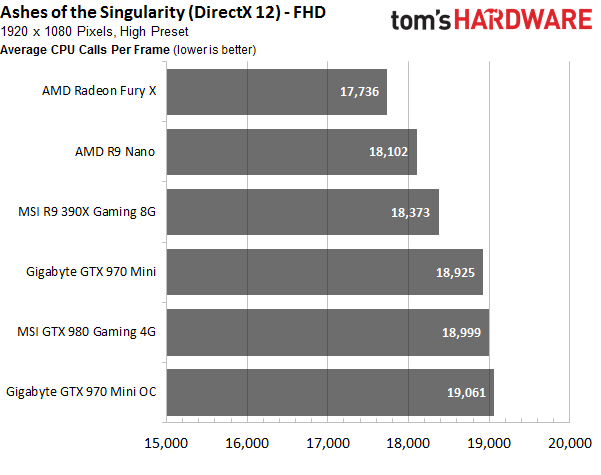

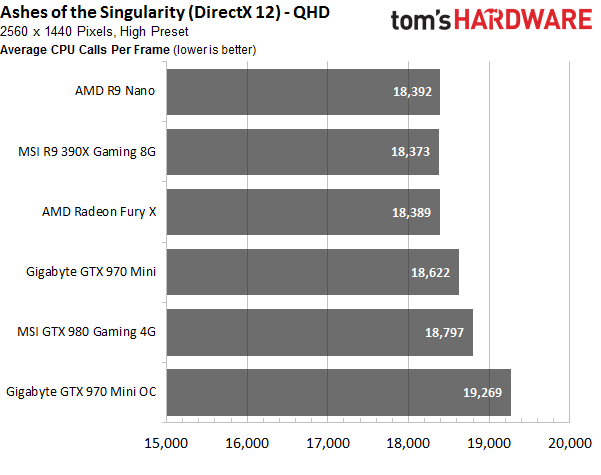

After our FPS and frame time benchmark results, we turn our attention to the total number of CPU calls during the benchmark run, represented in a bar graph. It makes sense that faster graphics cards have more CPU calls, but that's not the whole story.

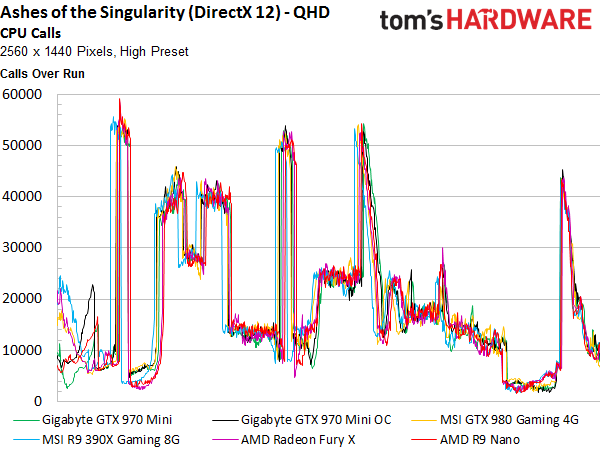

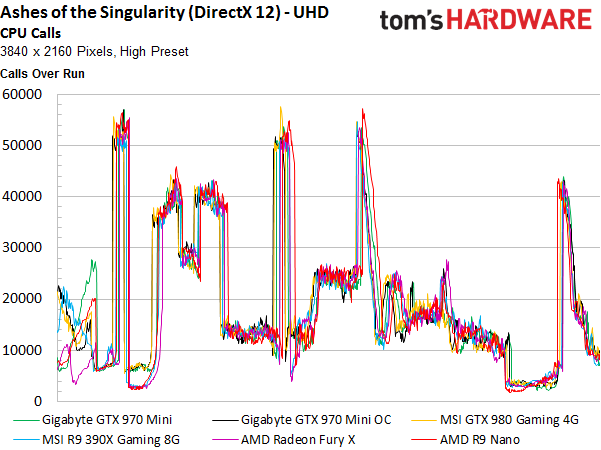

Next, we look at the results as a curve instead. We find that, particularly at the beginning of the benchmark, all of AMD’s and all of Nvidia’s graphics cards act in a similar fashion.

Looking at the entire benchmark run, as well as the ratio of CPU calls to rendered frames, AMD looks good.

The growing fluctuations in the Radeon R9 Nano’s results as resolution increases are a bit puzzling, though. This trend is consistent and most definitely not a fluke.

Bottom Line

Overall, we’re left with the impression that AMD's Radeon R9 Nano does well in our DX12 benchmark, along with the feeling that there's actually something to the whole asynchronous computing/shading function debate. We’ll keep an eye on this as announced DirectX 12 titles approach, including Caffeine, Project Cars, DayZ, Umbra, Deus Ex: Mankind Divided, Hitman, Fable Legends, Gears of War: Ultimate Edition and King of Wushu, as well as several Windows 10 exclusive titles such as Minecraft Windows 10 Edition, Halo Wars 2 and Gigantic.

Update: Earlier in the week, Nvidia announced that it will publish a driver that’s supposed to take care of, or at least mitigate, the asynchronous computing/shading problem. We weren’t able to find out anything more specific than that, but we’ll keep following up on this topic.


Igor Wallossek wrote a wide variety of hardware articles for Tom's Hardware, with a strong focus on technical analysis and in-depth reviews. His contributions have spanned a broad spectrum of PC components, including GPUs, CPUs, workstations, and PC builds. His insightful articles provide readers with detailed knowledge to make informed decisions in the ever-evolving tech landscape.


Eximo: Looks like the table had a hiccup. GTX970 (OC) is showing a lot of the numbers from the R9-390X, and maybe a few numbers from the 980 column.

-Fran-: It is a nice card and I agree, but... It's not USD $650 nice.

This card is a very tough sell for AMD, specially since ITX cases that can house current long cards are not hard to find or weird enough to make short cards a thing.

It's nice to see it's up there with the GTX970 in terms of efficiency, since HTPCs need that to be viable and the card has no apparent shortcomings from what I could read here.

All in all, it needs to drop a bit in price. It's not "650 nice", but making it "~500 nice" sounds way better. Specially when the 970 mini is at 400.

Cheers!

sna: no HDMI2.0 in itx small system near the 4k TV is unforgivable AMD , what were you thinking?

sna (in reply to -Fran-'s comment above):

well this card is for the smallest case ... not the easy to find huge long itx case.

I personaly find long itx cases useless ... they are very near to Matx case in size .. and people will pick up MATX ovet ITX any time if the size is the same.

BUT for 170mm long card ? this is a winner.

the only thing killing this product is the lack of HDMI2.0 which is very important for itx .. ITX are the console like PC near the tv.


heffeque (quoting sna): "no HDMI2.0 in itx small system near the 4k TV is unforgivable AMD , what were you thinking?"

I guess that they were thinking about DisplayPort?

Nossy: I'd go with the 950 GTX for a mini ITX build for a 1080p gaming/4k video HTPC.

For a $650 bucks video card. I'd go with a 980TI and use a Raven RVZ01 if I want an ITX build with performance.