
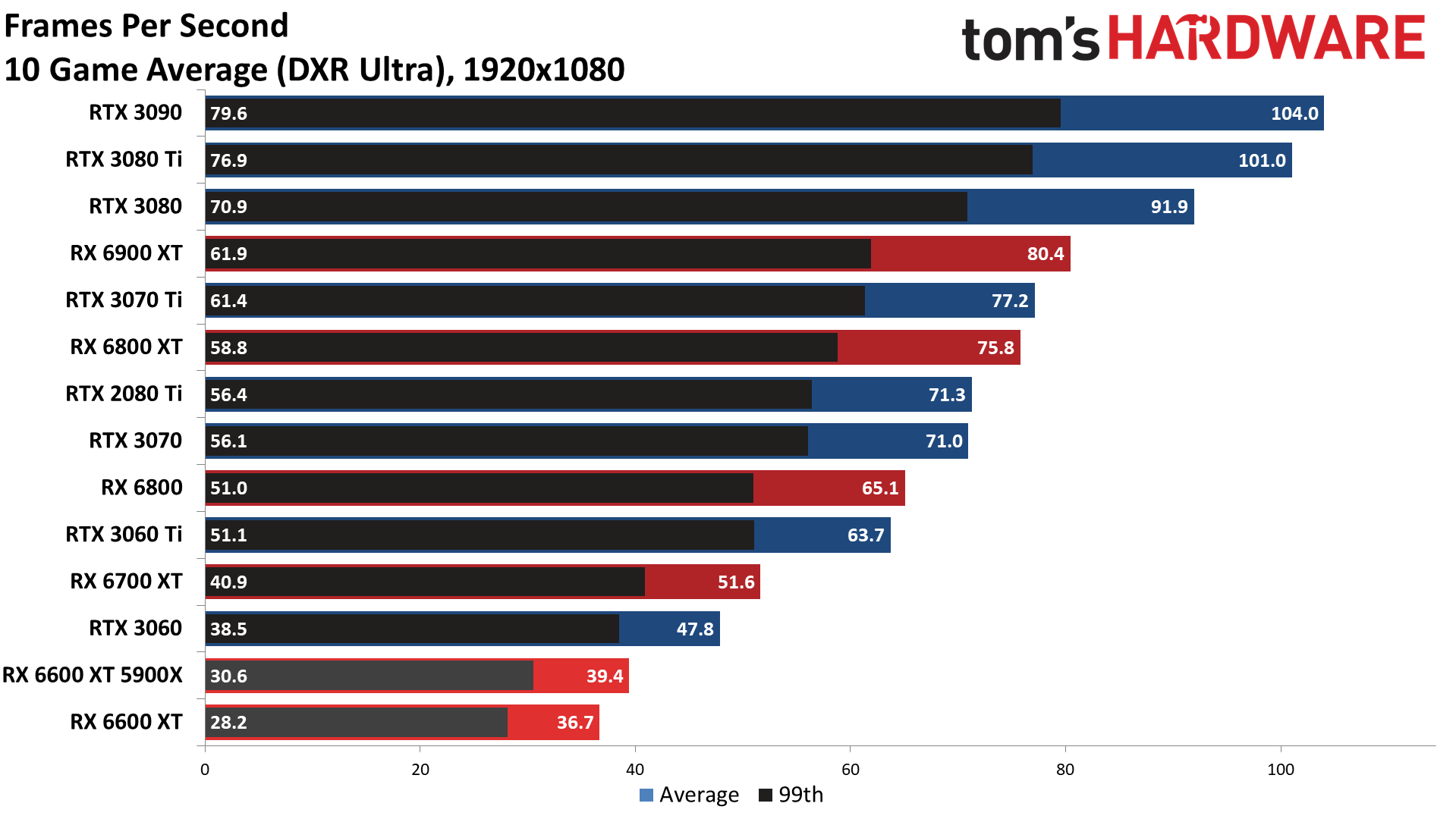

1920x1080p DXR Ultra

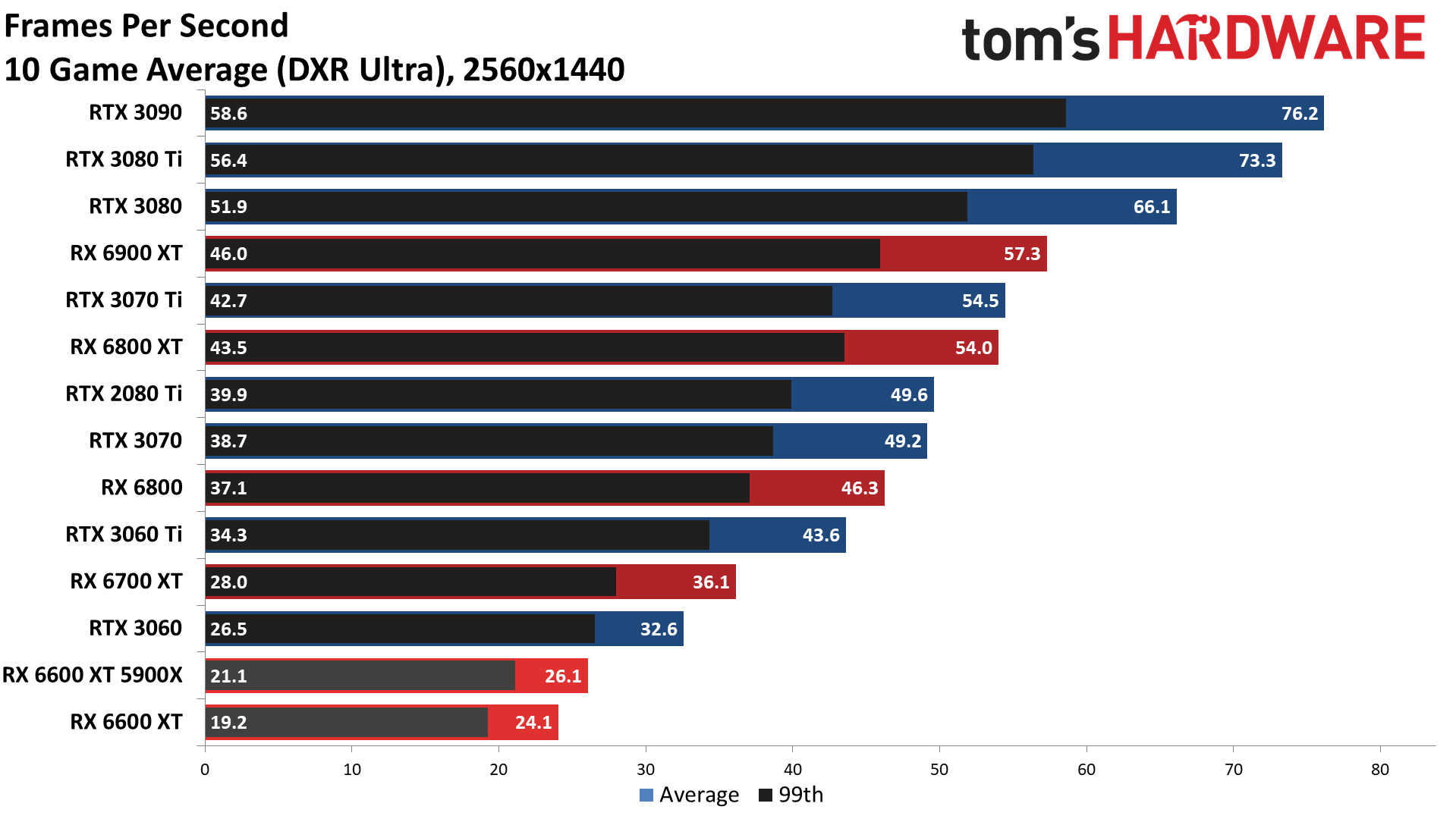

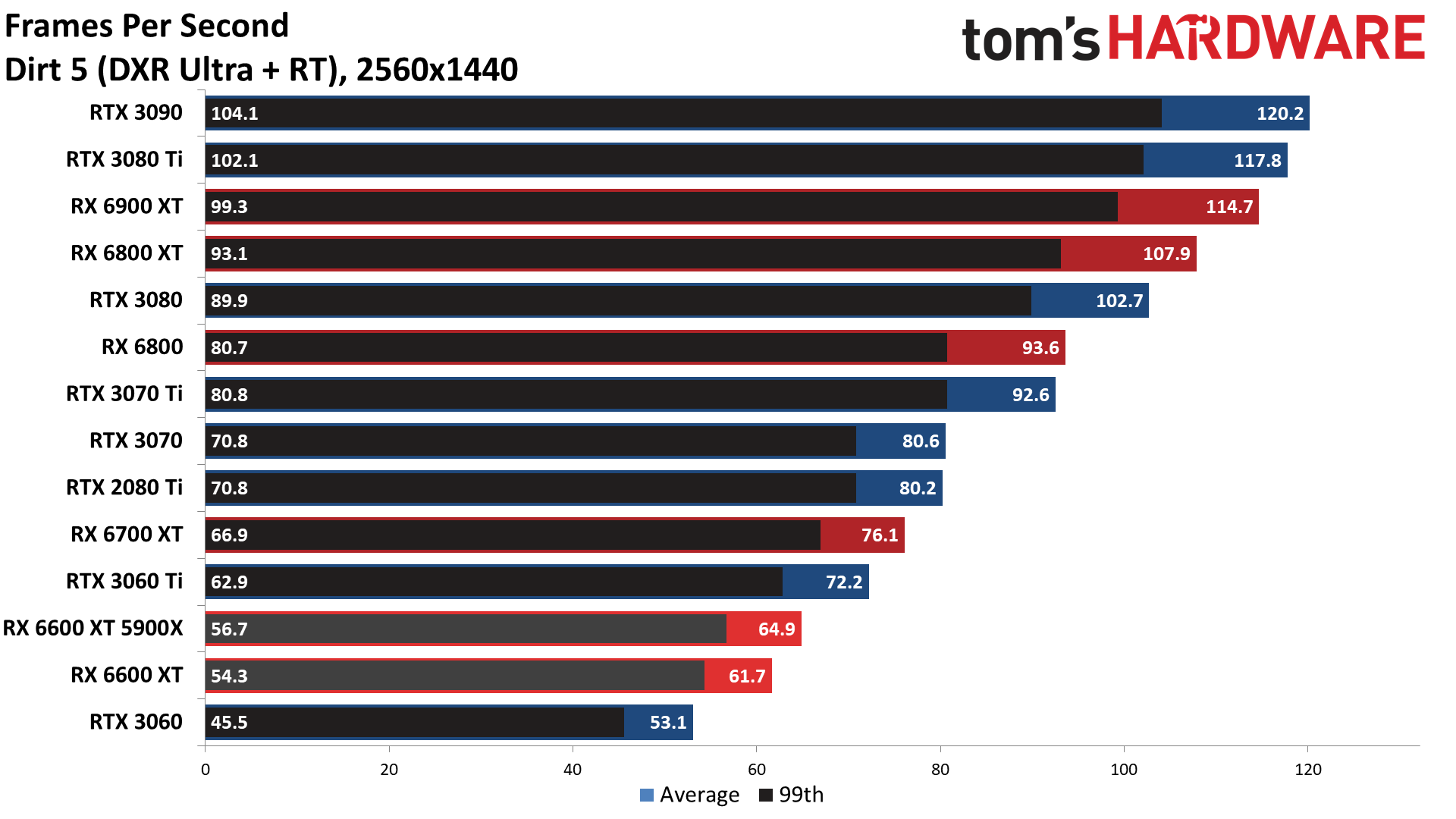

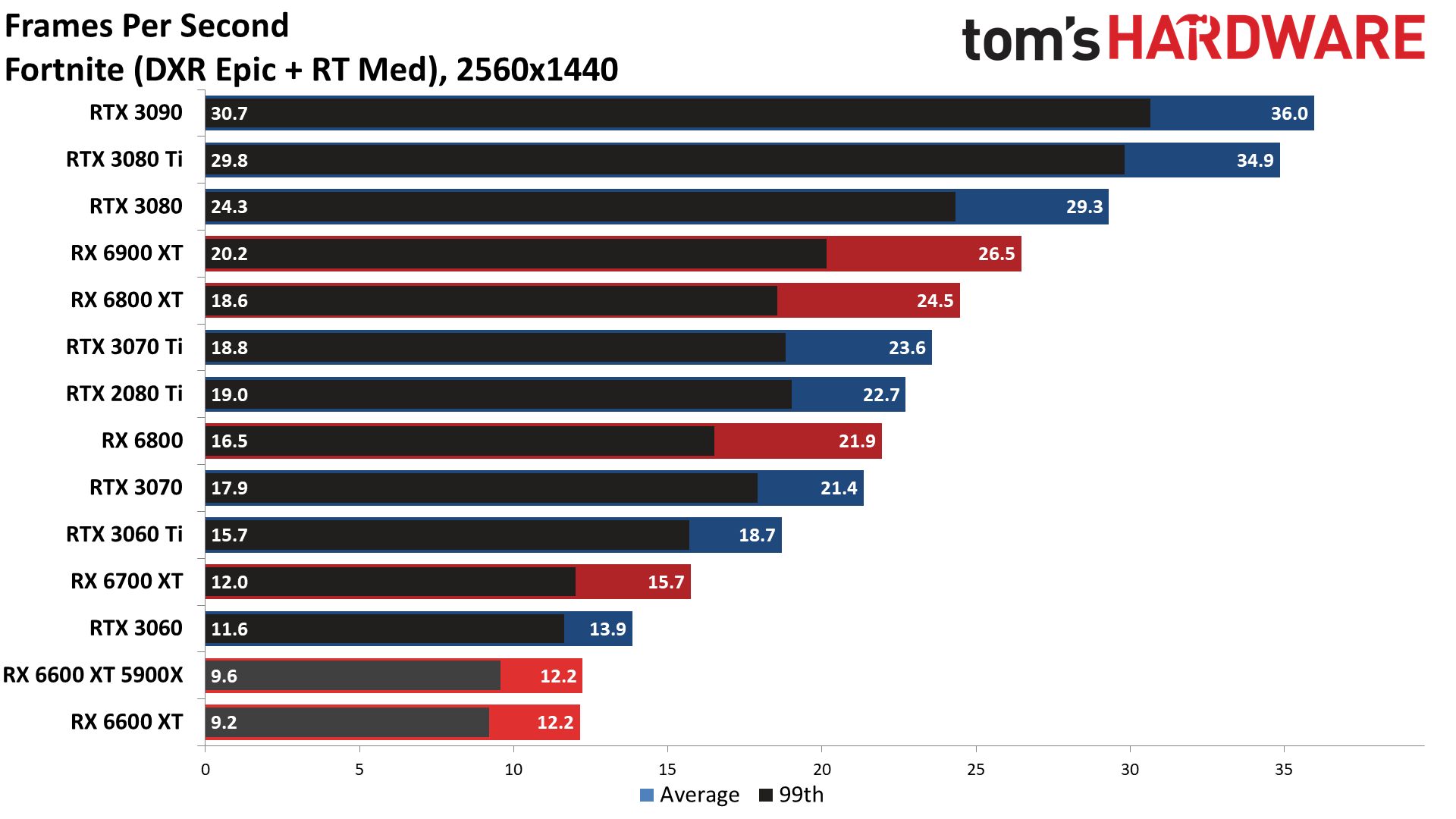

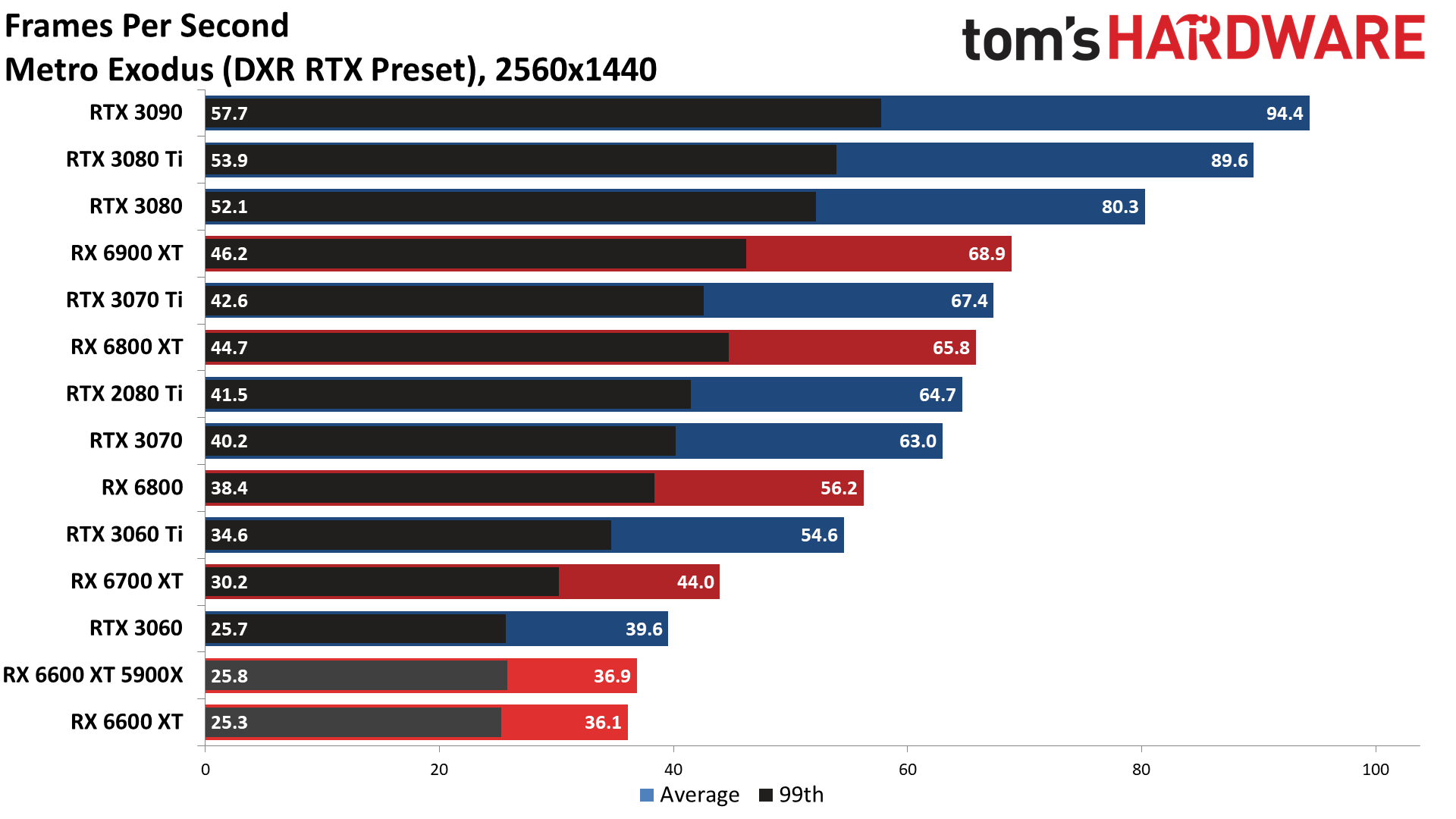

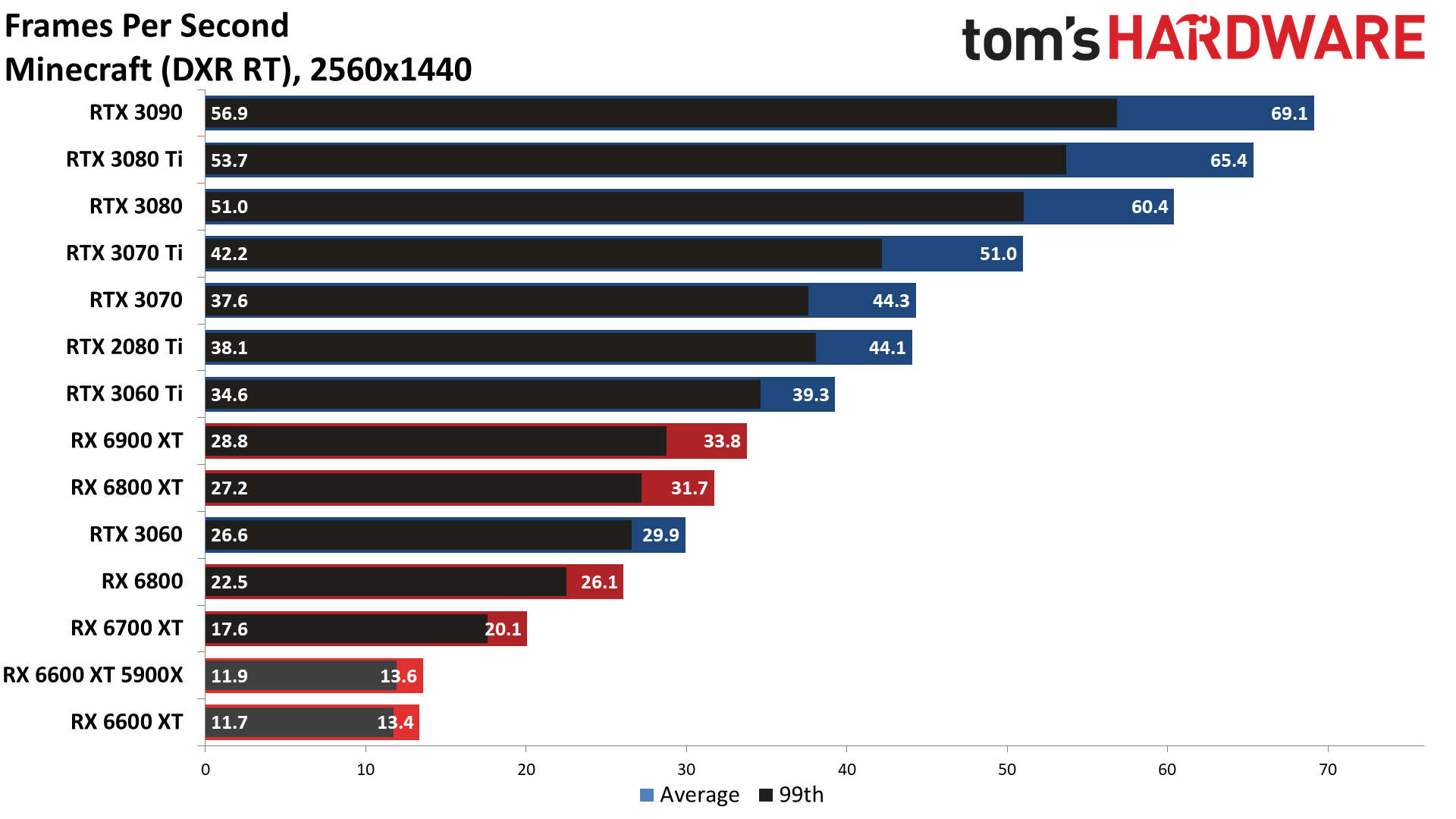

2560x1440p DXR Ultra

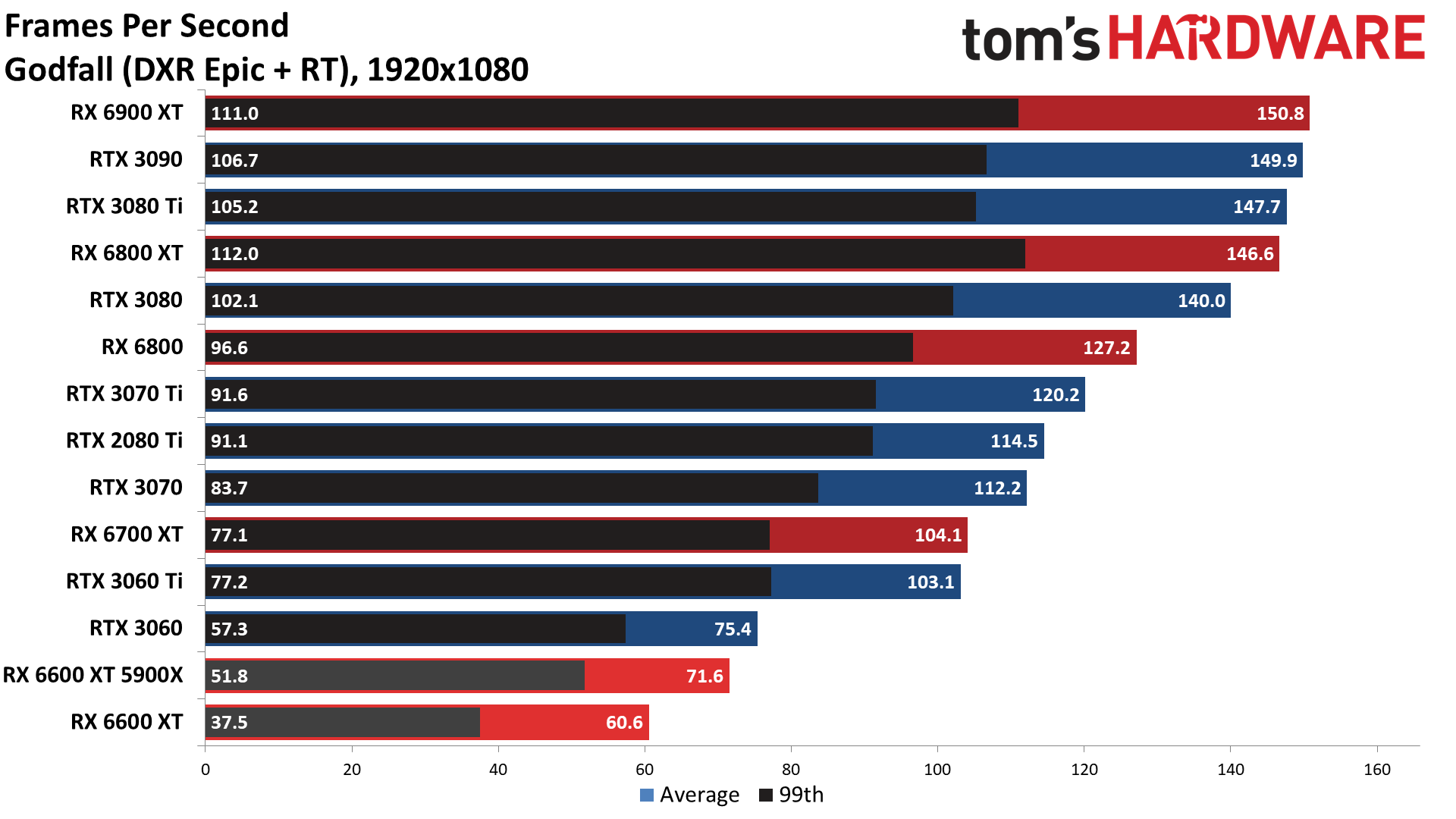

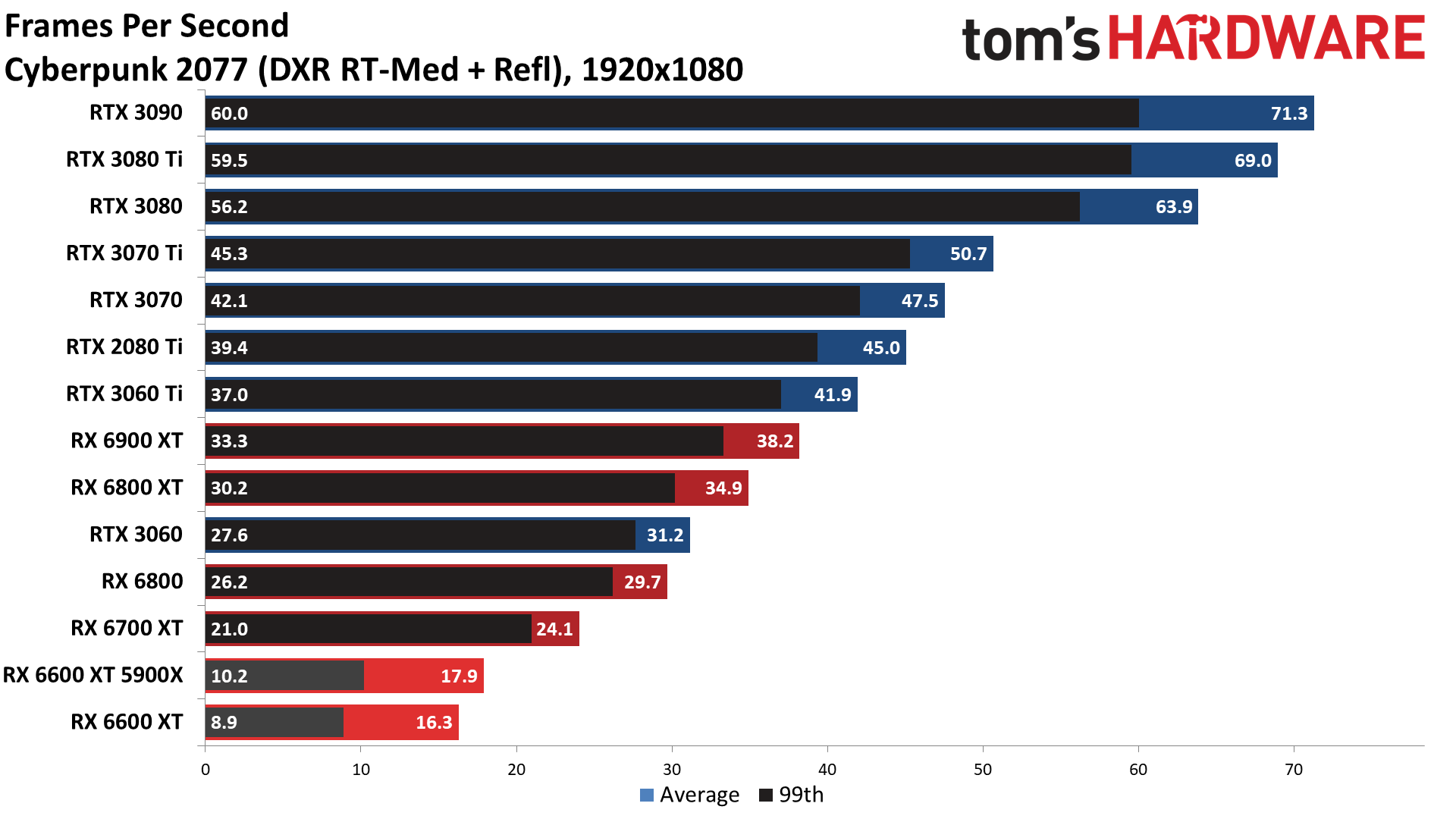

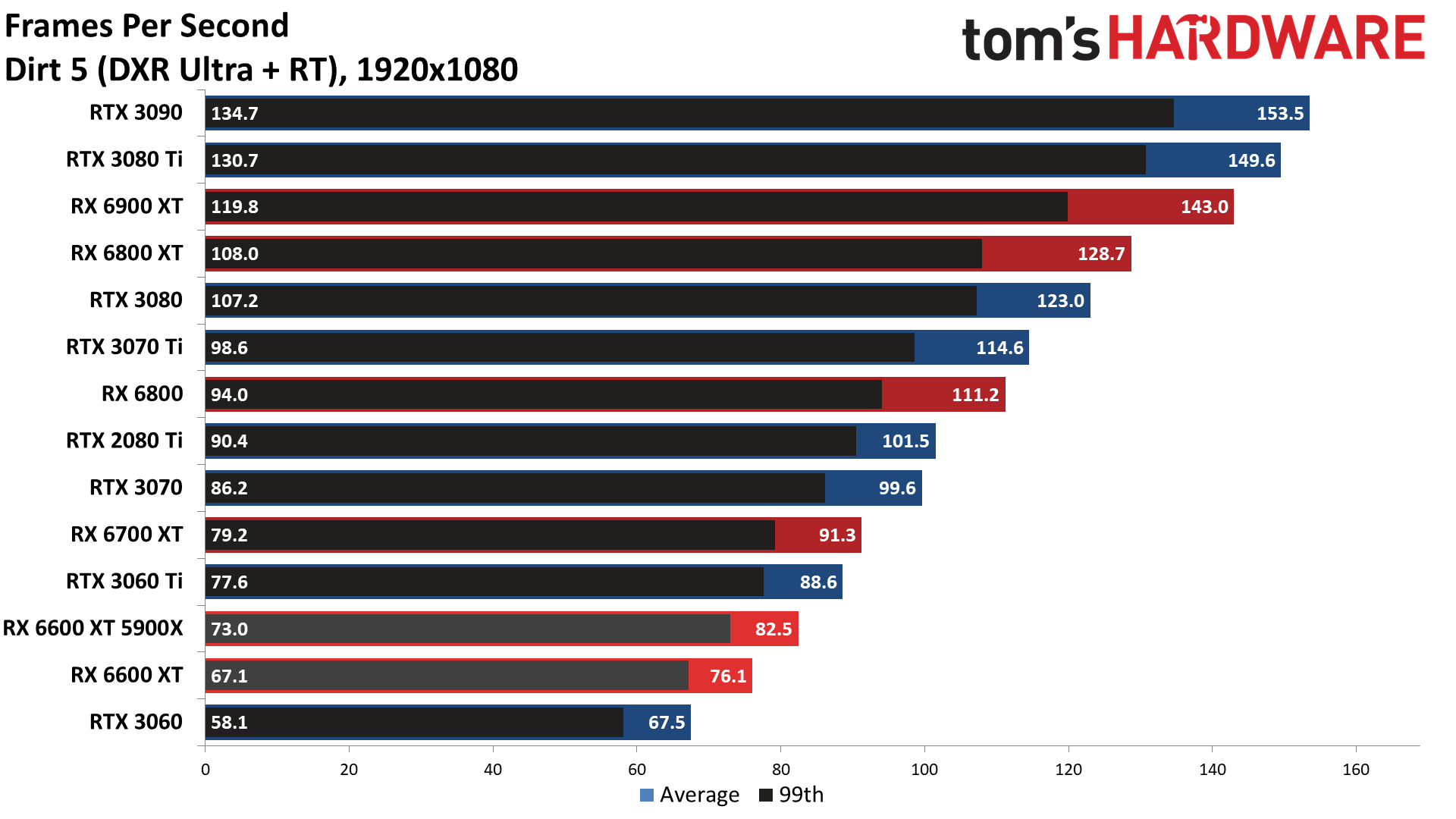

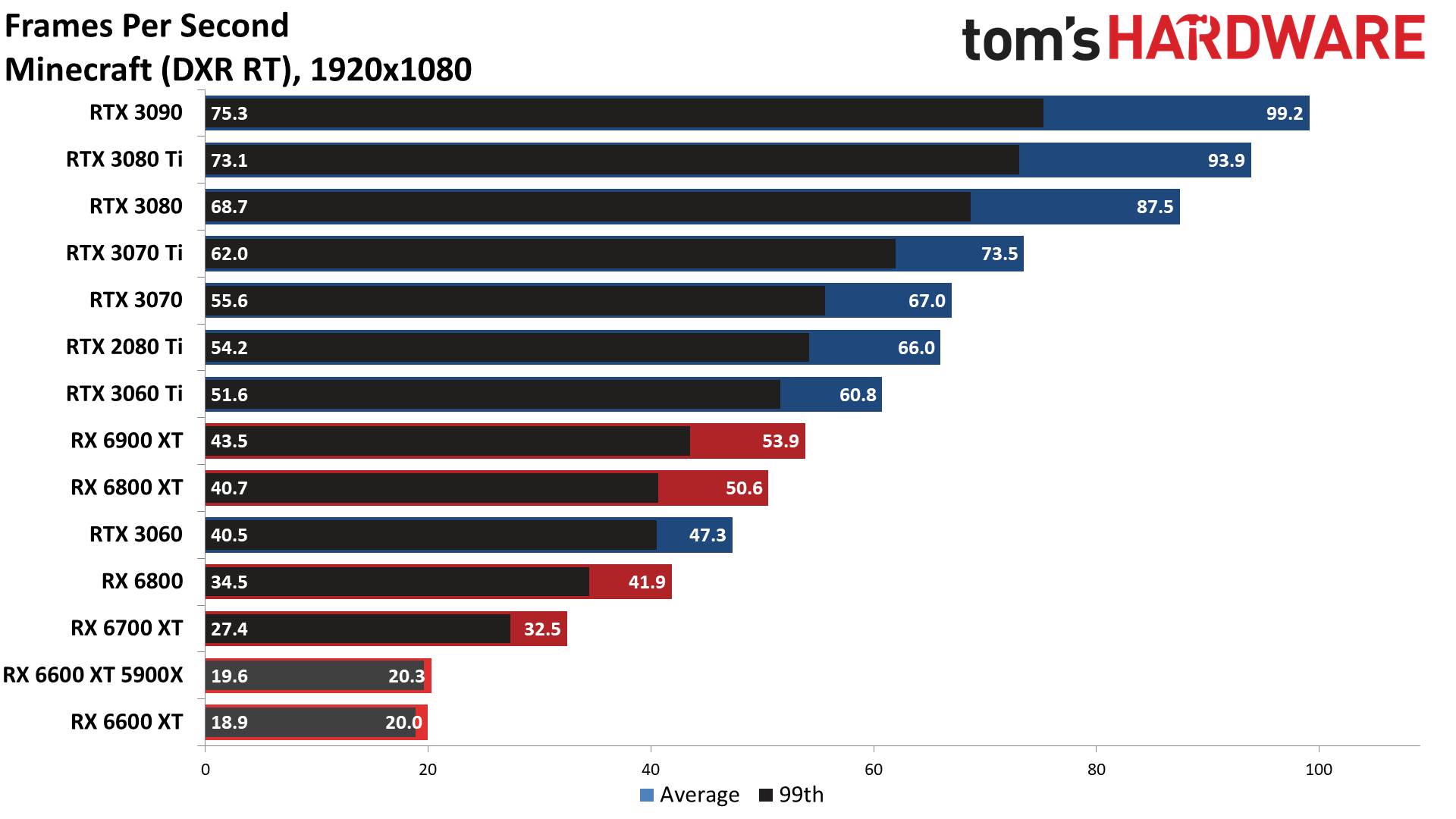

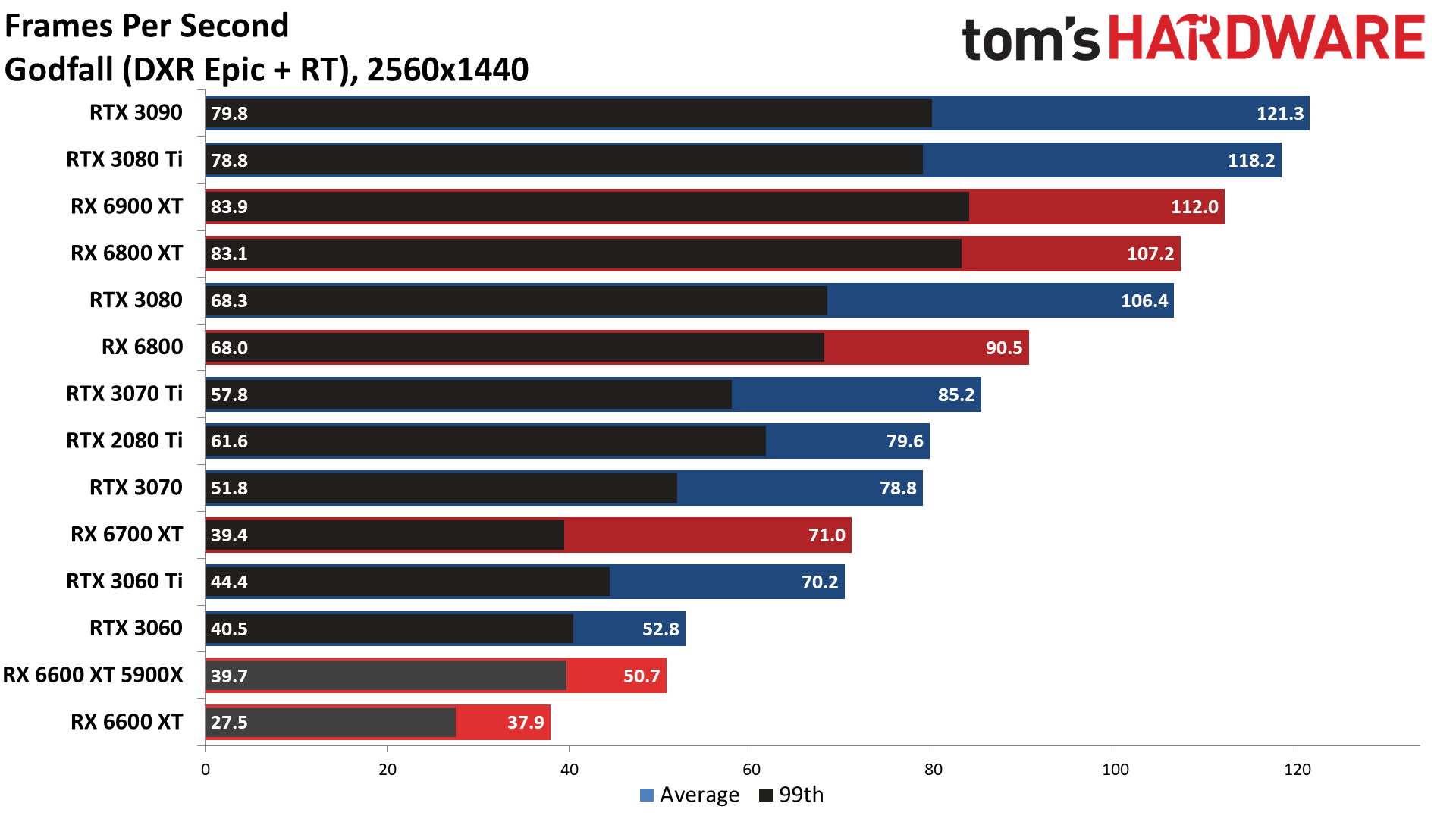

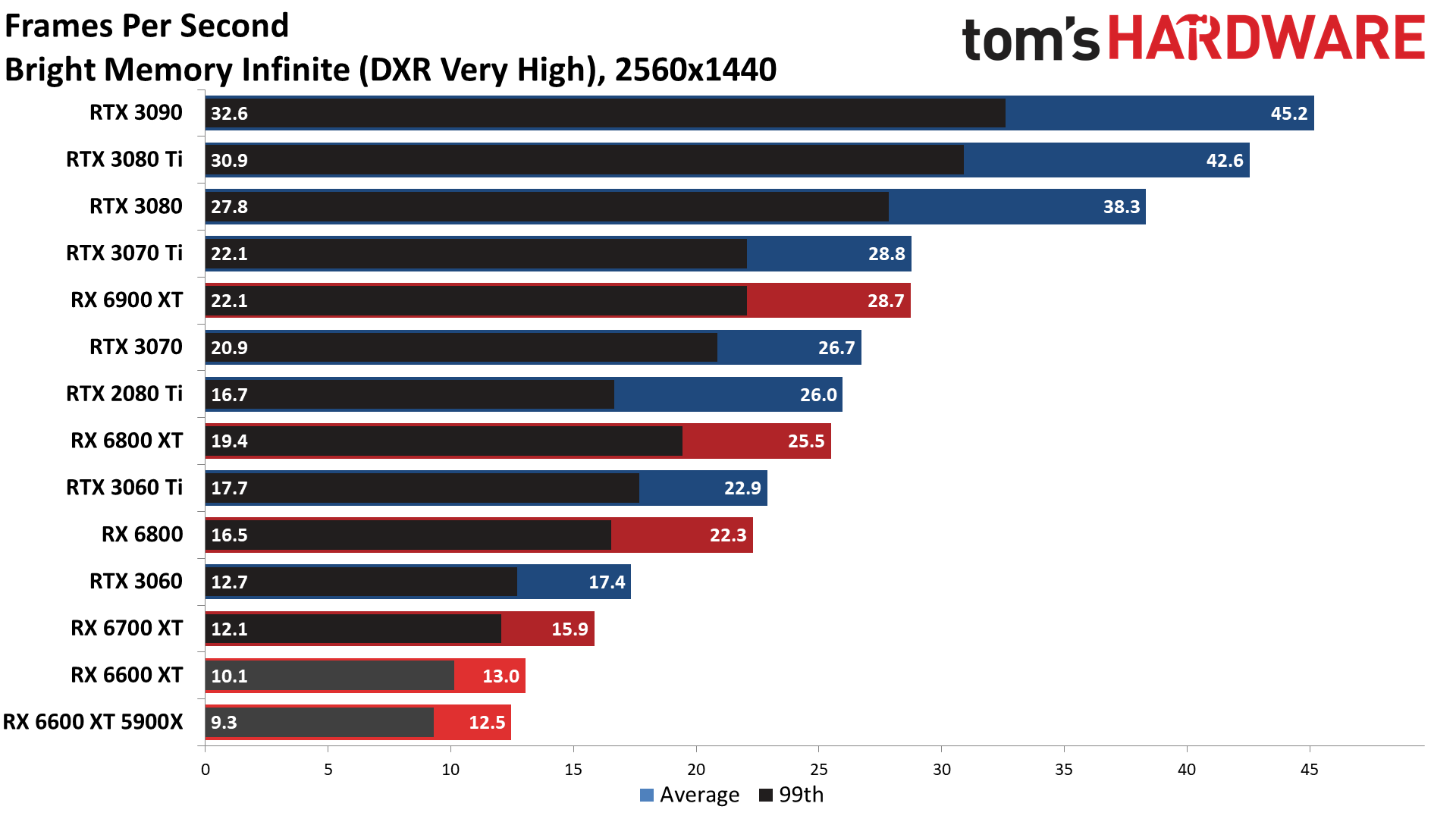

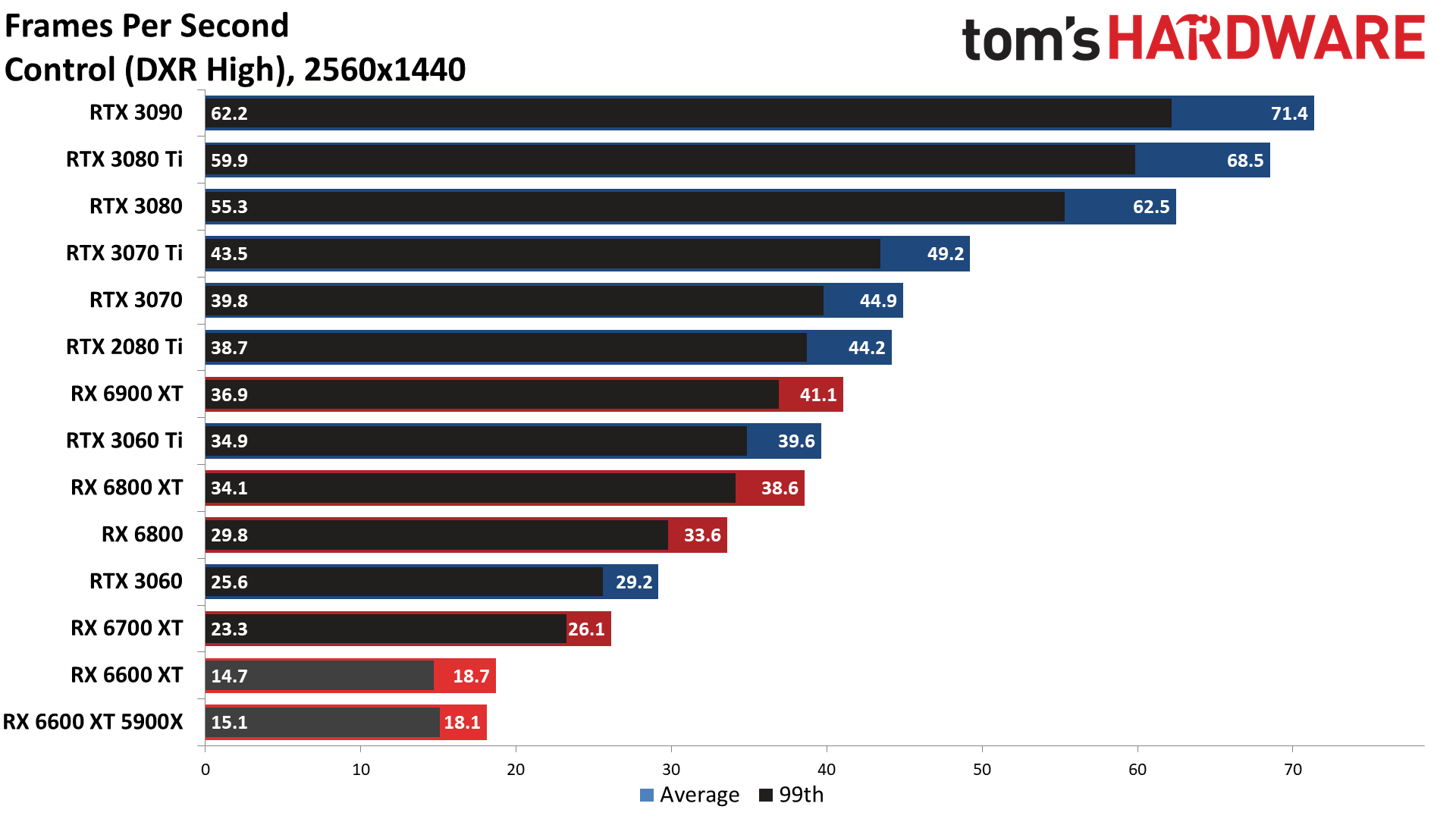

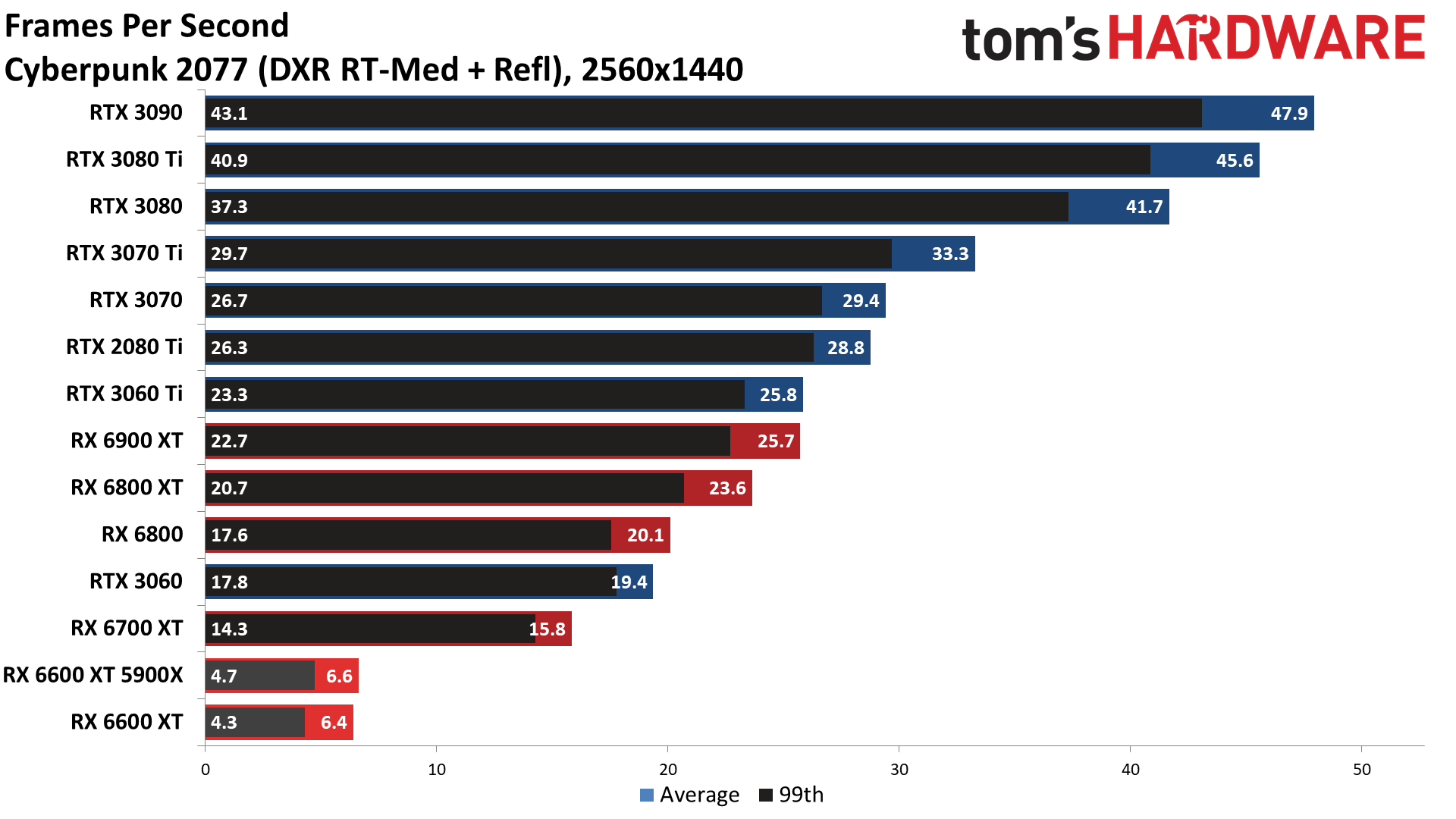

So far, all of our gaming benchmarks have focused on traditional rasterization performance, but the RX 6600 XT also supports DXR, just like all other RDNA2 GPUs. What happens when you play a game that supports ray tracing? In some cases, like the AMD-promoted Dirt 5 and Godfall, performance is still above 60 fps for the most part. Other times, it's not just the wheels falling off: The transmission drops to the ground, and the engine throws a rod for good measure.

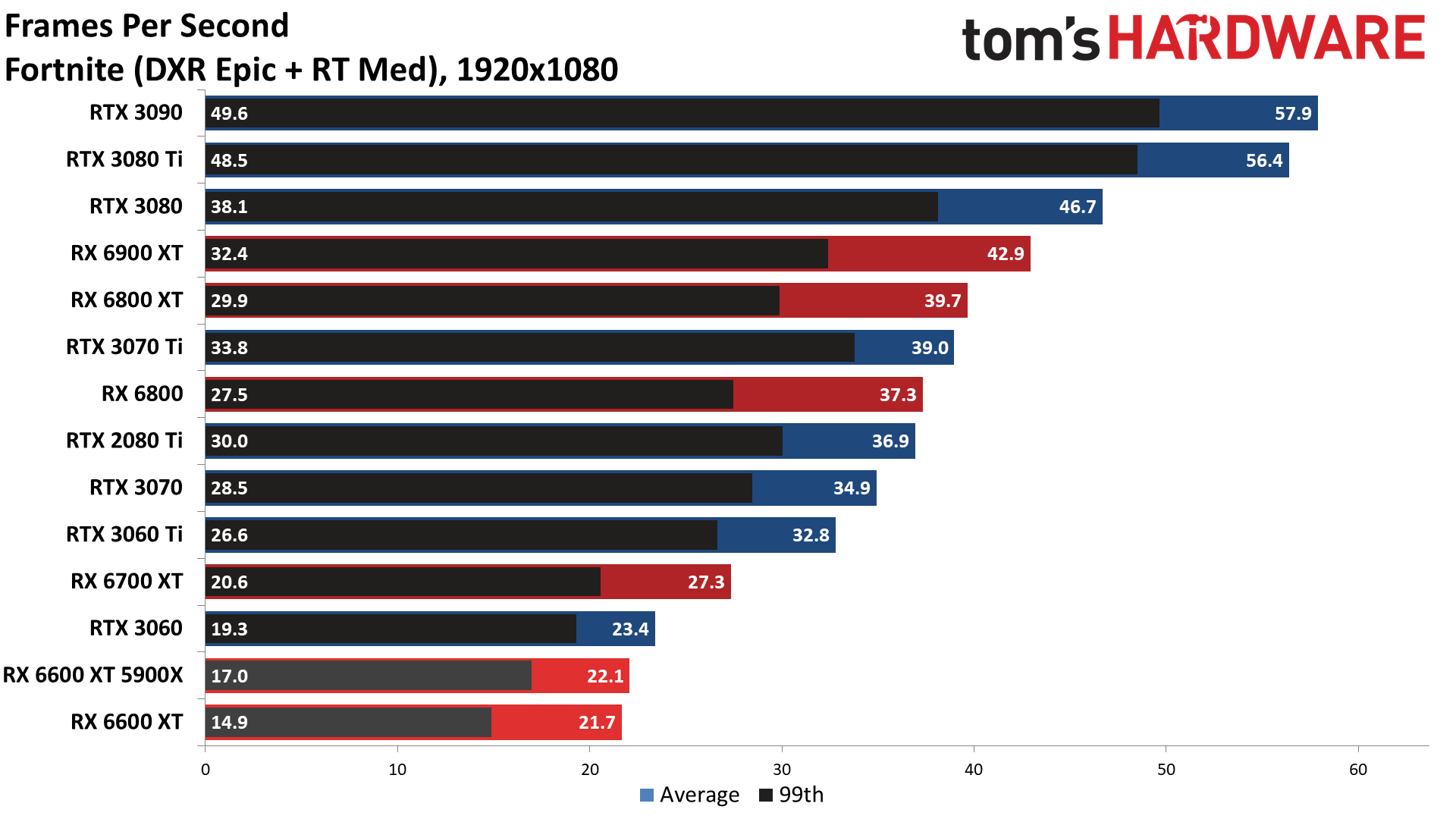

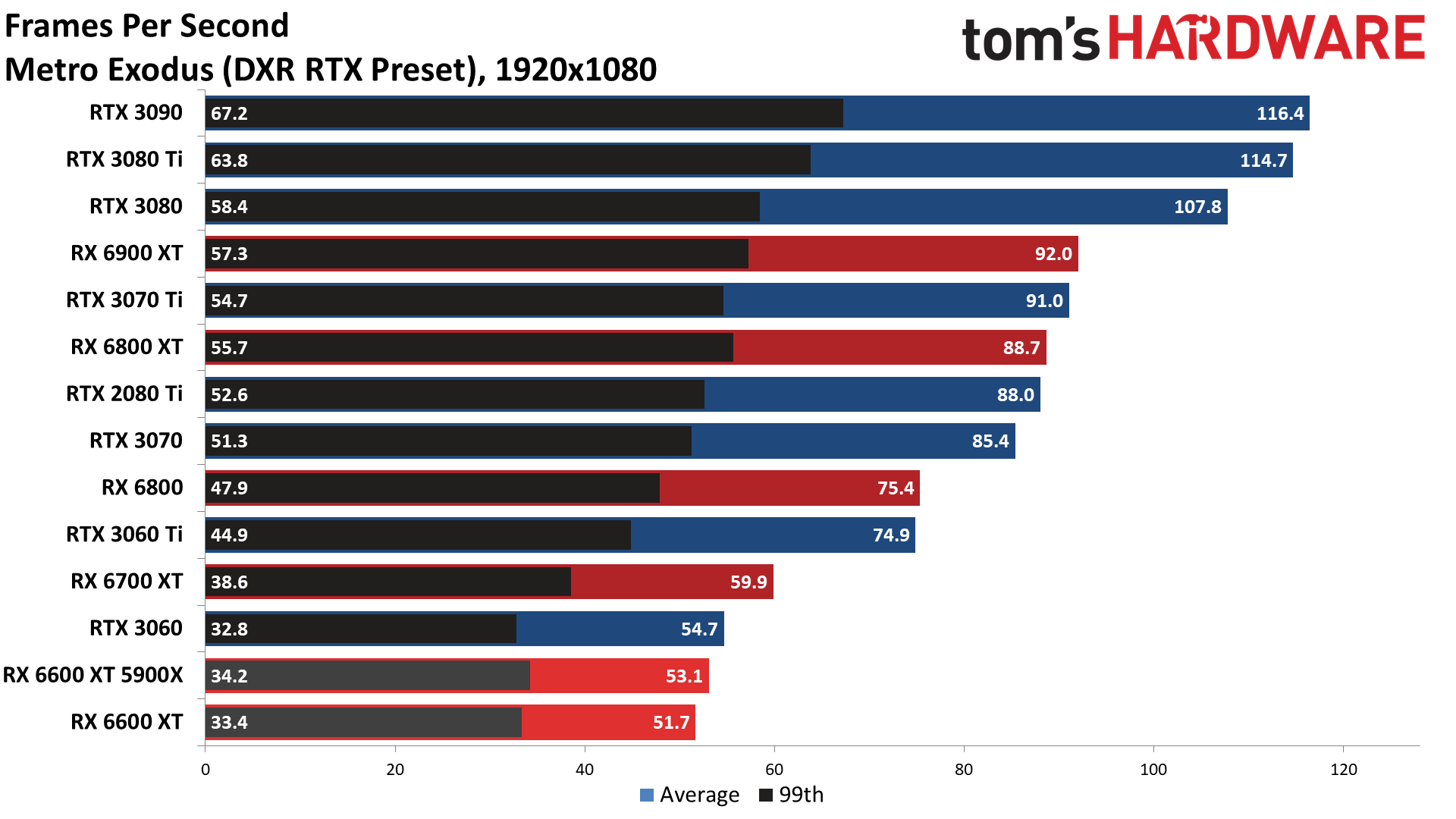

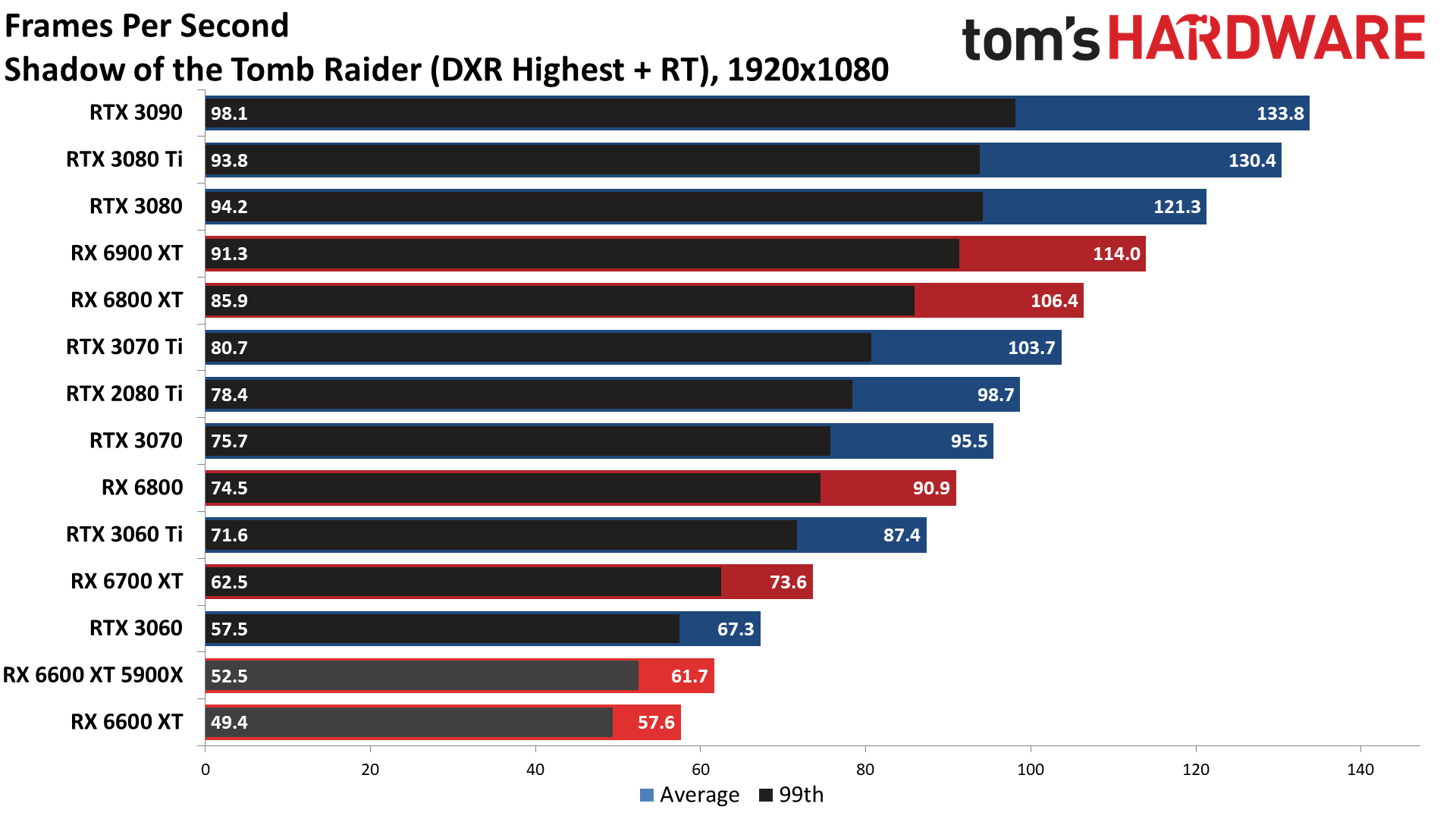

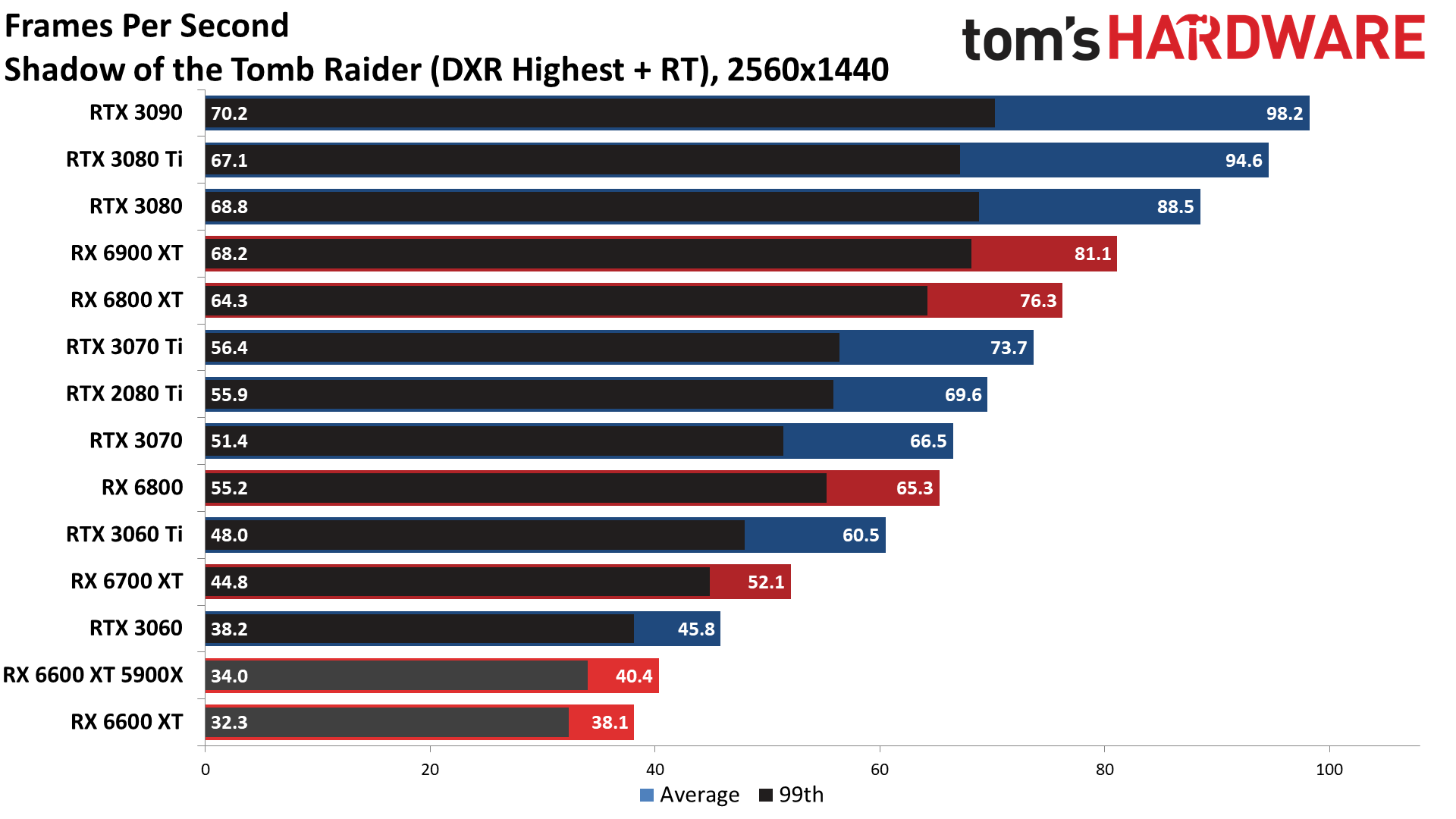

Even without factoring in DLSS performance — which is still an advantage Nvidia holds over AMD — the RTX 3060 outperformed the RX 6600 XT by 52% across our ray tracing test suite at 1080p and by 70% at 1440p. Dirt 5 was the only game where AMD's GPU came out ahead, and as we've mentioned previously, the ray traced shadows in Dirt 5 aren't all that compelling an argument for ray tracing in general. We'd say the same about the shadows in Godfall and Shadow of the Tomb Raider; ray tracing is better suited to reflections and global lighting effects rather than shadows that can be 'faked' pretty nicely using faster algorithms.

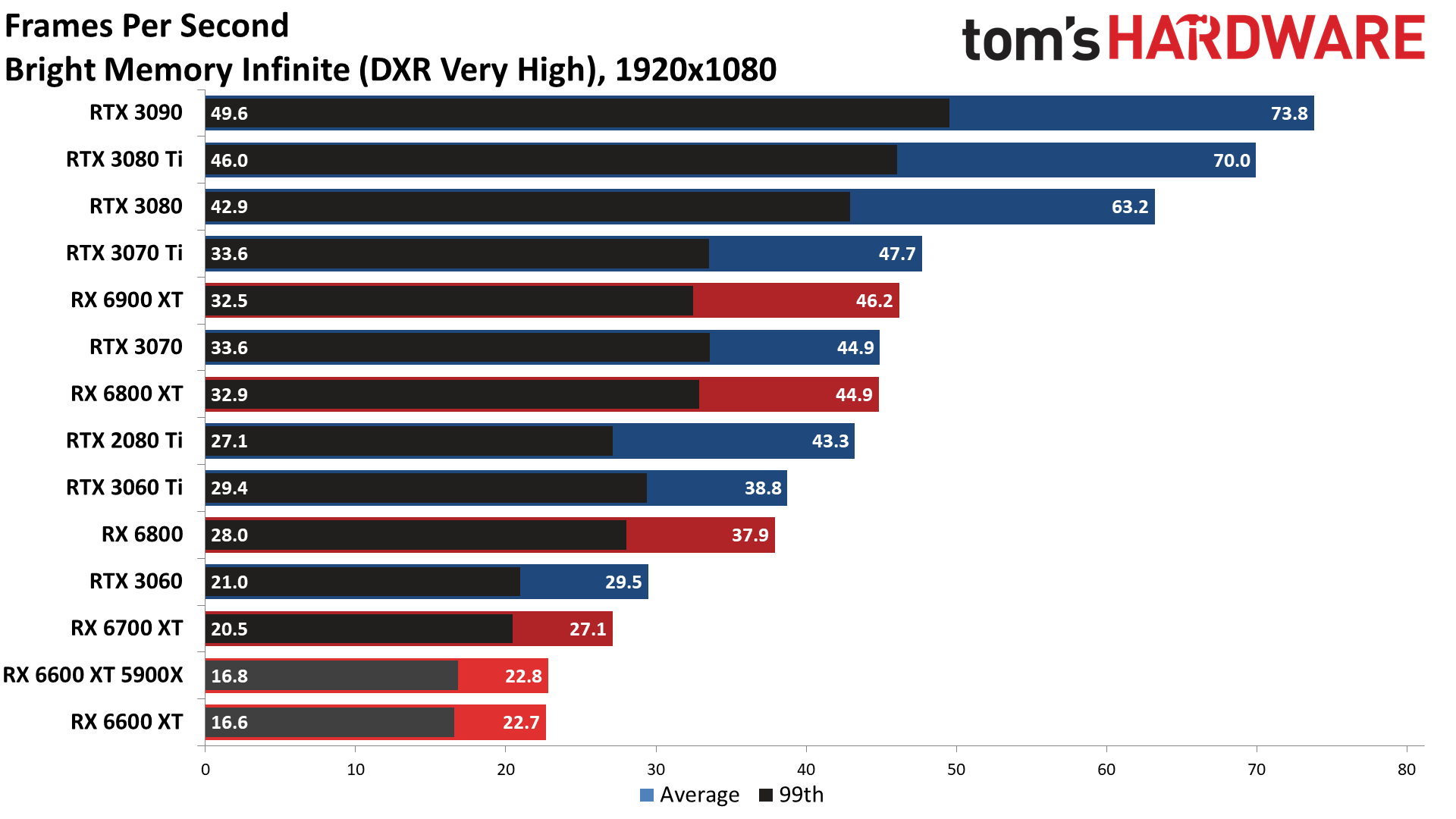

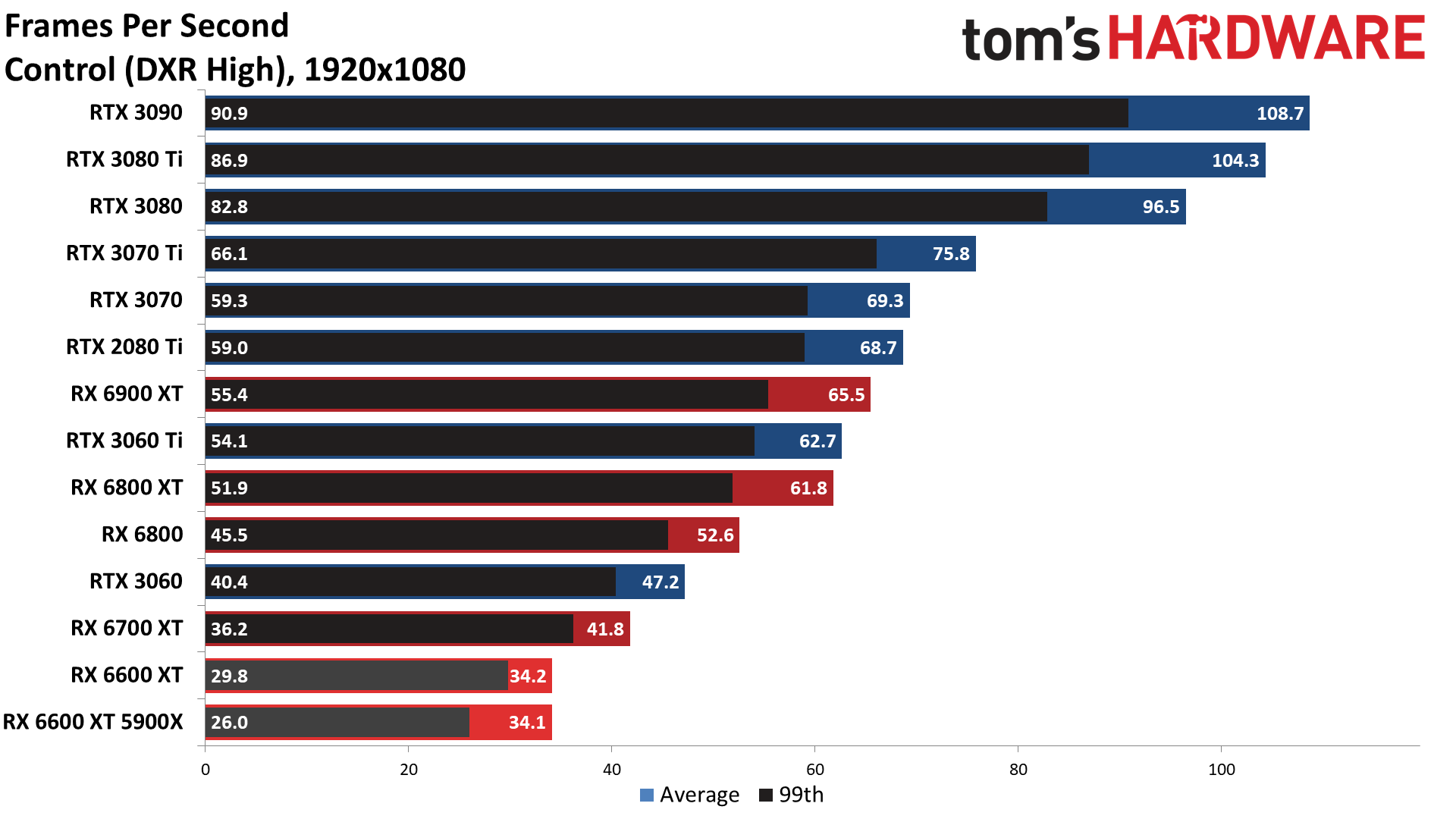

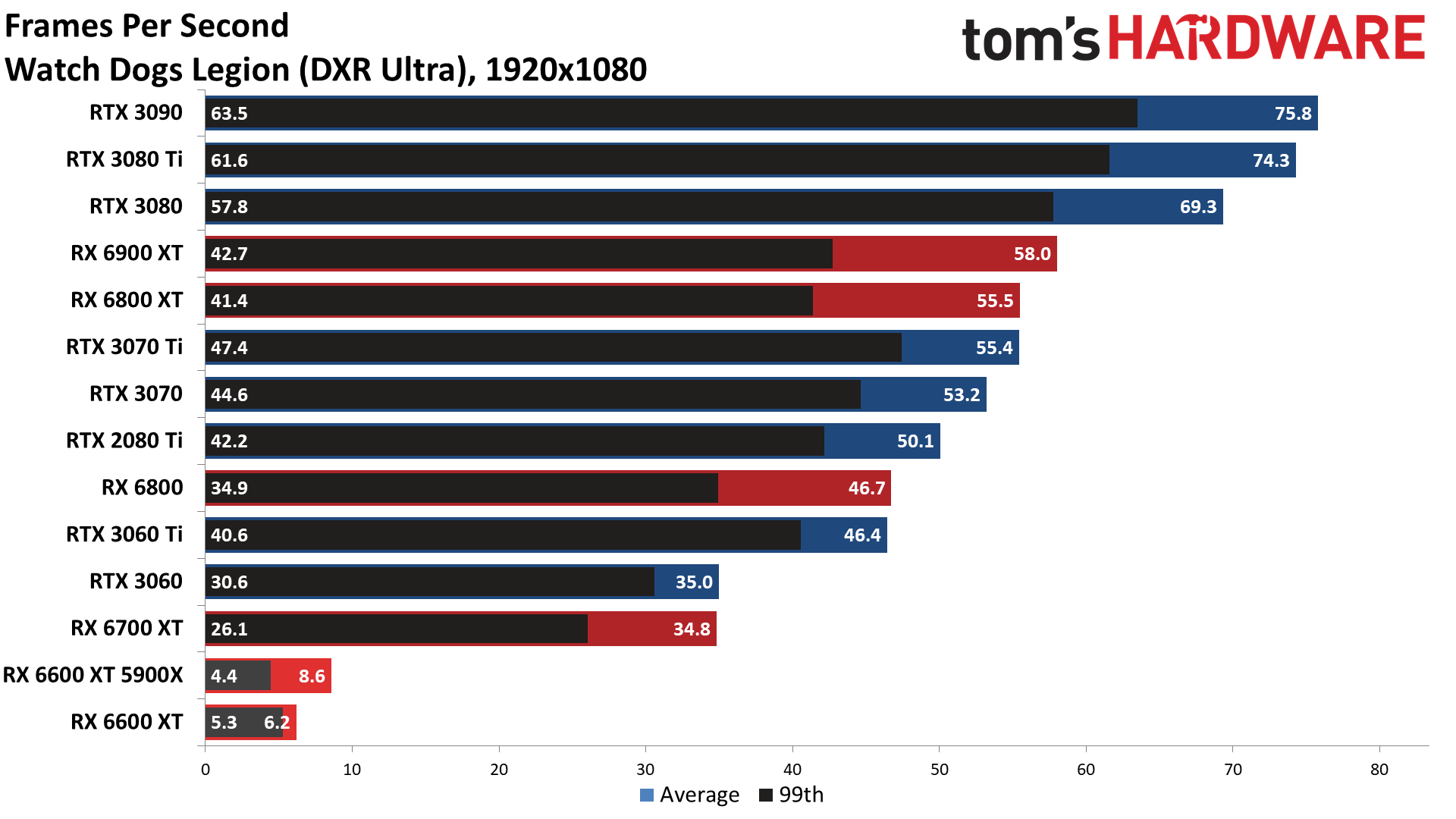

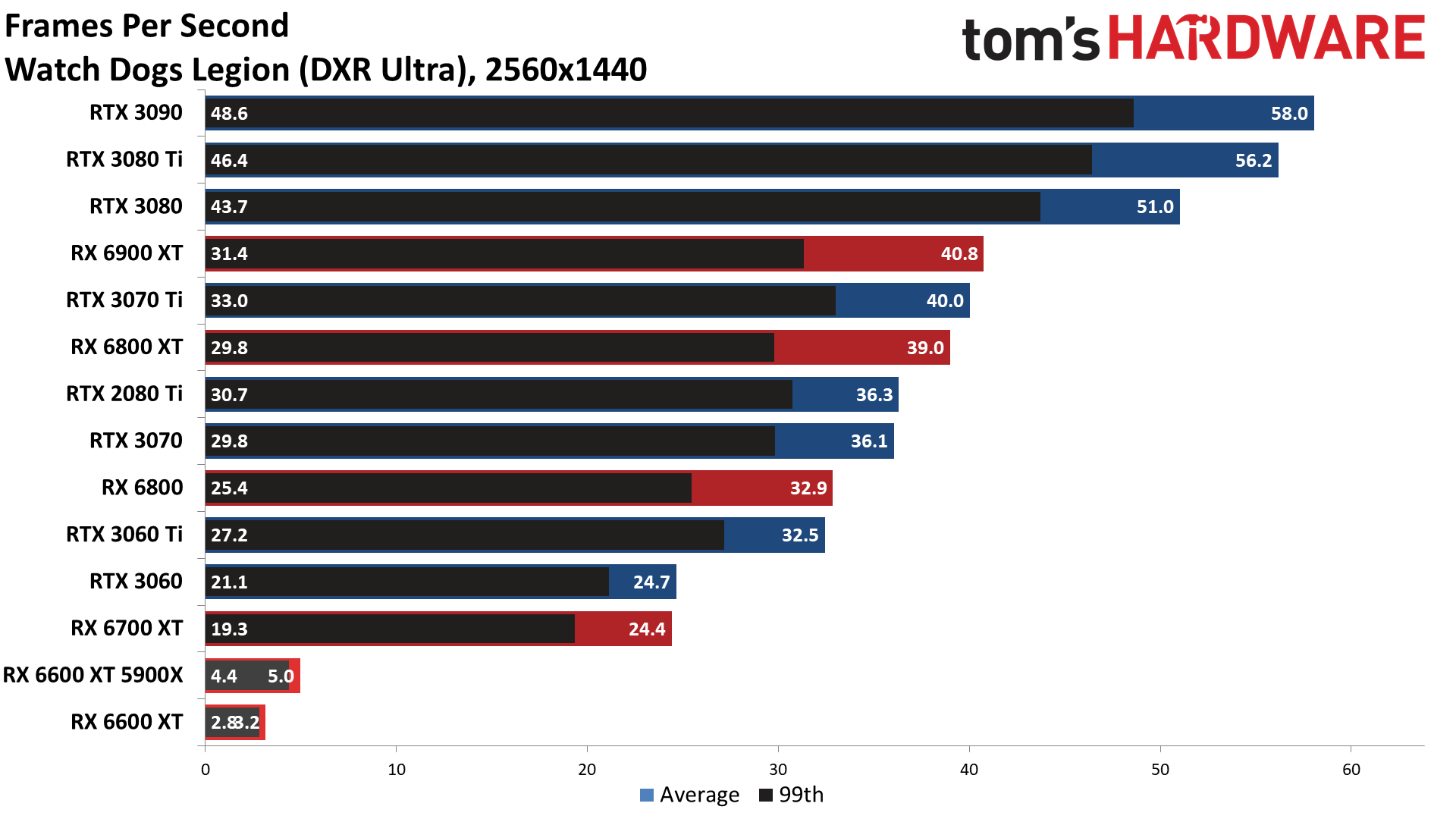

Games with more ray tracing effects can really punish the RX 6600 XT. Bright Memory Infinite, Cyberpunk 2077, Control, and Minecraft all favor the RTX 3060 by anywhere from 30% (Bright Memory Infinite) to over 130% (Minecraft). And then there's Watch Dogs Legion, which seems to have some driver problems holding it back as it only managed 6.2 fps at 1080p — though that did improve to 8.6 fps when we tested on the Ryzen 5900X, so perhaps it's related to memory thrashing rather than just drivers. Still, the RTX 3060 Ti and other 8GB Nvidia cards didn't have that sort of difficulty.
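To put the Watch Dogs Legion numbers in perspective, the relative uplift from 6.2 fps to 8.6 fps can be worked out directly. This is only a small sketch of the arithmetic; the helper name `percent_faster` is ours and not part of any benchmark tool.

```python
def percent_faster(new_fps: float, old_fps: float) -> float:
    """Return how much faster new_fps is than old_fps, in percent."""
    if old_fps <= 0:
        raise ValueError("old_fps must be positive")
    return (new_fps / old_fps - 1.0) * 100.0


# Watch Dogs Legion at 1080p on the RX 6600 XT, using the two results quoted above.
uplift = percent_faster(8.6, 6.2)
print(f"{uplift:.1f}% faster on the Ryzen 5900X")
```

That works out to roughly a 39% improvement, which sounds large but still leaves the game well below playable frame rates.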

The real concern is that across our suite of ten games that support ray tracing effects, only half of the games managed more than 30 fps at 1080p — and only Control managed more than 30 fps while using more than one RT effect. Actually, there's a second game with multiple RT effects that broke 30 fps on the 6600 XT: Metro Exodus Enhanced Edition, which ran at 38 fps (but we don't have a full suite of results on the other GPUs yet, so that's not in the charts).

Again, it's difficult to pinpoint whether the bottleneck is the 8GB of VRAM, the 128-bit memory interface, or the 32MB of Infinity Cache. It's probably all of those to varying degrees, with some missing driver optimizations also playing a role. It's worth pointing out how the RX 6700 XT basically destroys the RX 6600 XT as well. The 6700 XT was at most 37% faster overall in rasterization testing at 4K ultra. With ray tracing turned on, the 6700 XT was 54% faster at 1080p and 73% faster at 1440p. That said, a big part of that gap comes from the failed Watch Dogs Legion testing.
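For a rough sense of what a 128-bit interface means, peak theoretical memory bandwidth is the bus width in bytes multiplied by the per-pin data rate. The sketch below assumes 16 Gbps GDDR6, a figure that is not stated in this section, and the 192-bit line is a hypothetical wider bus included only for comparison. It also ignores the Infinity Cache, which changes effective bandwidth in practice.

```python
def memory_bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak theoretical bandwidth in GB/s: (bus width in bytes) * (Gbps per pin)."""
    return bus_width_bits / 8 * data_rate_gbps


# ASSUMPTION: 16 Gbps GDDR6; the article section does not give the memory speed.
ASSUMED_DATA_RATE_GBPS = 16.0

for bus_width in (128, 192):  # 192-bit is a hypothetical comparison point
    bandwidth = memory_bandwidth_gbs(bus_width, ASSUMED_DATA_RATE_GBPS)
    print(f"{bus_width}-bit bus: {bandwidth:.0f} GB/s")
```

Under these assumptions, the narrower bus has two-thirds of the raw bandwidth of the wider one, which is one reason memory-heavy ray tracing workloads may suffer more than rasterization.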

Navi 23 and the RX 6600 XT now represent the lowest-performance graphics card supporting ray tracing effects. Technically the RTX 2060 does perform a bit worse in several of the DXR games we tested, but the RTX 2060 also supports DLSS, which can make a big difference since most of the ray tracing games support it. The RX 6600 XT is a foot in the ray tracing door, but unless some clever algorithms can drastically improve RT performance without dropping image quality, it's a card that's much better suited to traditional rasterization techniques.


Jarred Walton is a senior editor at Tom's Hardware focusing on everything GPU. He has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.


Zarax

I know this is a lot to ask, but given the ridiculous MSRP, you might want to design a benchmark of discounted games (you could use isthereanydeal to see which ones have been at least 50% off once) that would be good to use with lower-end cards or ones available used for acceptable prices.

Something like "Budget gaming: how do the cheapest cards on ebay perform?" could be a very interesting read, especially given your high standards in writing and testing.

salgado18

I like the decision to lower memory bus width to 128 bits. It lowers mining performance without affecting gaming performance, and can't be undone like Nvidia's software-based solution.

ottonis

Due to production capacity constraints, AMD's main problem is they can't produce nearly as many GPUs as they would like and are thus being outsold by Nvidia by far.

It's pretty obvious that AMD had one goal in mind with Navi23: increase production output as much as possible by shrinking die size while maintaining competitive 1080p gaming performance.

Apparently, they accomplished that task. Whether or not the MSRP will have to be adapted: we will see, but I guess not as long as the global GPU shortage lasts.

InvalidError

> salgado18 said: I like the decision to lower memory bus width to 128 bits. It lowers mining performance without affecting gaming performance, and can't be undone like Nvidia's software-based solution.

Still, having a 128-bit bus on a $400 GPU is outrageous, especially if the VRAM bandwidth bottleneck is a major contributor to the 6600(XT)'s collapse at higher resolutions and DXR.

With only 8GB of VRAM, the GPU can only work on one ETH DAG at a time anyway, so narrowing the bus to 128 bits shouldn't hurt too much. A good chunk of the reason why 12GB GPUs have a significant hash rate advantage is because they can work on two DAGs at a time while 16GB ones can do three, and extra memory channels help with that concurrency.

-Fran-

> salgado18 said: I like the decision to lower memory bus width to 128 bits. It lowers mining performance without affecting gaming performance, and can't be undone like Nvidia's software-based solution.

Sorry, but you're not entirely correct there. It does affect performance. This is a very "at this moment in time" type of thing where you don't see it being a severe bottleneck, but crank up the resolution to 1440p and it falls behind, almost consistently, against the 5700XT; that's not a positive look for the future of this card, even at 1080p. There's also the PCIe 3.0 at x8 link which will remove about 5% performance. HUB already tested and the biggest drop was DOOM Eternal with a whopping 20% drop in performance. That's massive and shameful.

I have no idea why AMD made this card this way, but they're definitely trying to anger a lot of people with it... Me included. This card cannot be over $300 and that's the hill I will die on.

Regards.

InvalidError

> Yuka said: I have no idea why AMD made this card this way, but they're definitely trying to anger a lot of people with it... Me included. This card cannot be over $300 and that's the hill I will die on.

Were it not for the GPU market going nuts over the last four years, increases in raw material costs and logistics costs, this would have been a $200-250 part.

-Fran-

> InvalidError said: Were it not for the GPU market going nuts over the last four years, increases in raw material costs and logistics costs, this would have been a $200-250 part.

I would buy that argument if it wasn't for the fact both AMD and nVidia are raking in the cash like fishermen on a school of a million fish.

Those are just excuses to screw people. I was definitely giving them the benefit of the doubt at the start, but not so much anymore. Their earnings reports are the damning evidence they are just taking advantage of the situation and their excuses are just that: excuses. They can lower prices, period.

Regards.

ottonis

> Yuka said: I would buy that argument if it wasn't for the fact both AMD and nVidia are raking in the cash like fishermen on a school of a million fish.
>
> Those are just excuses to screw people. I was definitely giving them the benefit of the doubt at the start, but not so much anymore. Their earnings reports are the damning evidence they are just taking advantage of the situation and their excuses are just that: excuses. They can lower prices, period.
>
> Regards.

The market has its own rules. As long as demand is larger than the number of GPUs AMD can produce, they will keep the prices high. That's just how (free) markets work.

You can't blame a company for maximizing their profits within the margins the market provides to them.

For a bottle of water, you usually pay less than a dollar. Now, in the desert, with the next station being 500 miles away, you would pay even 10 dollars (or 100?) for a bottle of water if you are thirsty.

This will not change as long as the global GPU shortage lasts.

-Fran-

> ottonis said: The market has its own rules. As long as demand is larger than the number of GPUs AMD can produce, they will keep the prices high. That's just how (free) markets work.
>
> You can't blame a company for maximizing their profits within the margins the market provides to them.
>
> For a bottle of water, you usually pay less than a dollar. Now, in the desert, with the next station being 500 miles away, you would pay even 10 dollars (or 100?) for a bottle of water if you are thirsty.
>
> This will not change as long as the global GPU shortage lasts.

You're misunderstanding the argument: I do not care about their profit over my own money expenditure. I understand perfectly well they're companies and their only purpose in their usable life is maximizing profit for their shareholders.

So sure, you can defend the free market and their behaviour all you want, but why? Are you looking after their well-being? Are you a stakeholder? Do you have a vested interest in their market value? Are you getting paid to defend their scummy behaviour towards consumers? Do you want to pay more and more each generation for no performance increases per tier? Do you want to pay a car's worth for a video card at some point? Maybe a house's worth?

Do not misunderstand arguments about AMD and nVidia being scummy. You should be aware you have to complain and not buy products at bad price points or they'll just continue to push the limit, because that's what they do.

Regards.