Dying Light: Performance Analysis And Benchmarks

Results: 720p And 1080p

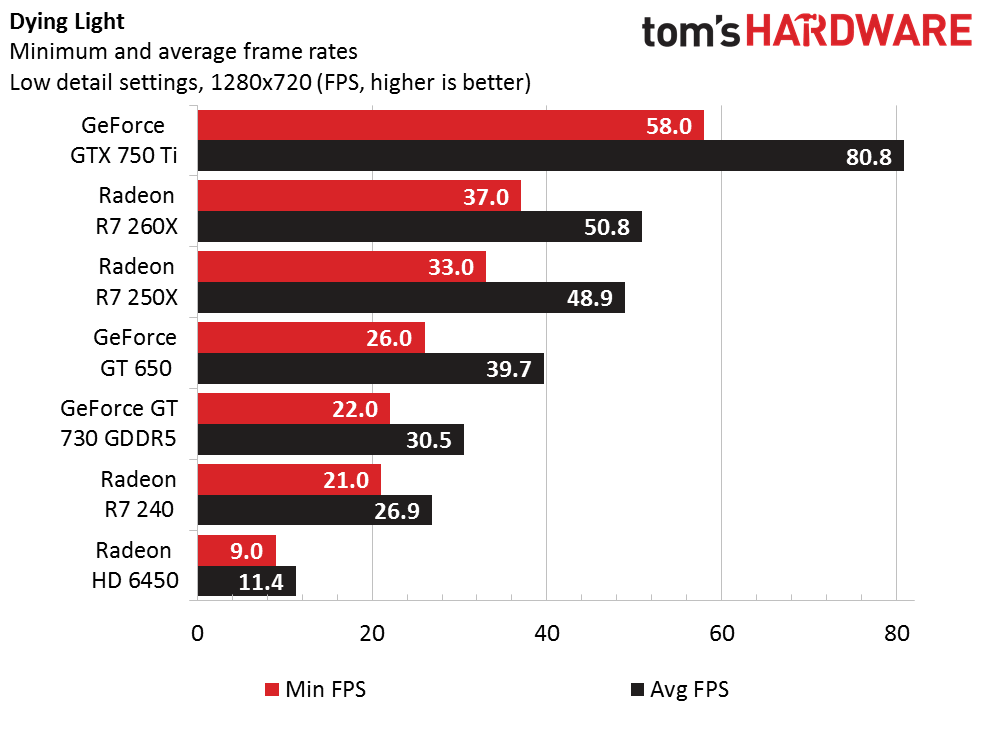

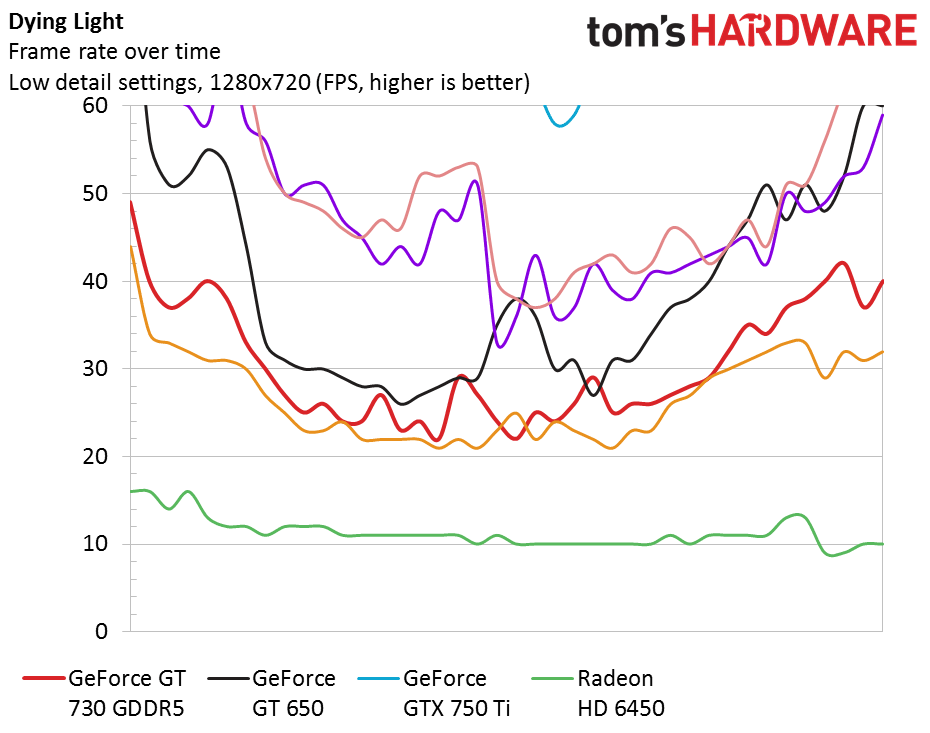

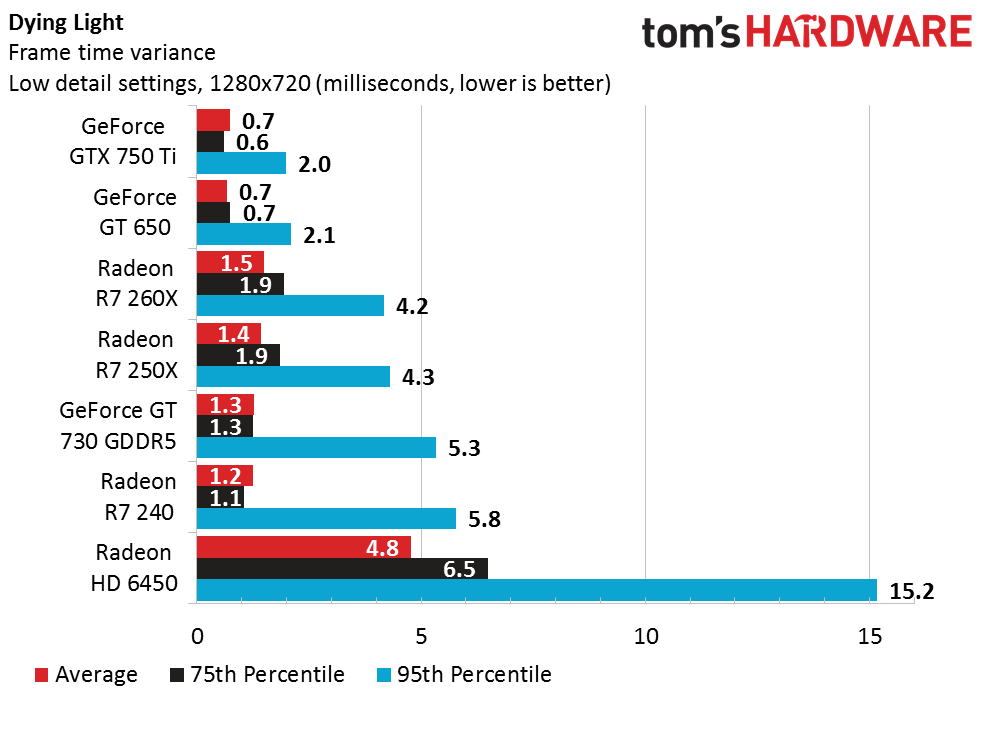

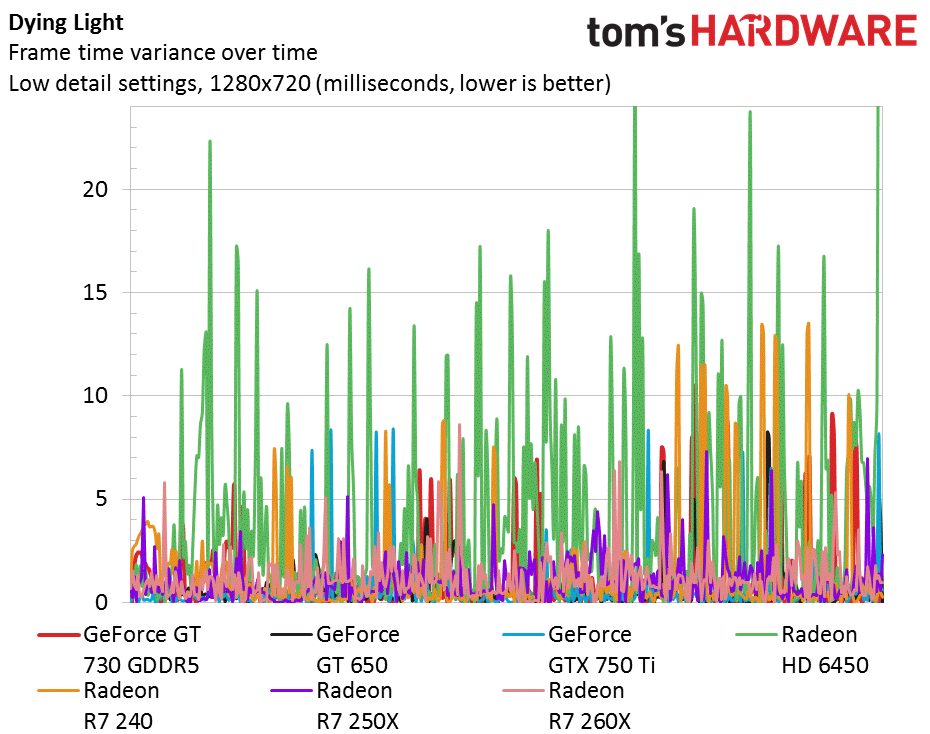

Low Details, 1280x720 (720p)

As with many modern titles, Dying Light puts a tremendous amount of stress on PC hardware. Even at 720p, mainstream HTPC-oriented cards buckle under the pressure, dropping to unplayable frame rates. Only the GeForce GTX 750 Ti reaches an impressive 60 FPS minimum, although the Radeon R7 250X and 260X are quite playable, never dropping below 30 FPS.

The Radeon R7 250X is a good starting point for Dying Light, though you're stuck at 720p and low detail settings if you want to maintain at least 30 FPS. Frame time variance is higher than we'd like to see from most of these cards, except for Nvidia's GeForce GTX 750 Ti and 650. This can be a symptom of micro-stuttering. The only board that outright fails is the Radeon HD 6450.
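To illustrate why frame time variance matters alongside raw frame rates, here is a minimal Python sketch. It is not the tooling behind the charts in this article. The function name `frame_metrics`, the sample data, and the definition of variance as the absolute difference between consecutive frame times are all assumptions made for illustration, and the minimum shown is the lowest instantaneous rate rather than a per-second count.

```python
from statistics import mean


def frame_metrics(frame_times_ms):
    """Summarize per-frame render times given in milliseconds.

    Hypothetical helper: the metric definitions are illustrative
    assumptions, not the methodology used for the published charts.
    """
    if len(frame_times_ms) < 2:
        raise ValueError("need at least two frame times")

    # Average FPS: total frames divided by total elapsed time in seconds.
    avg_fps = 1000.0 * len(frame_times_ms) / sum(frame_times_ms)

    # Lowest instantaneous FPS corresponds to the single slowest frame.
    min_fps = 1000.0 / max(frame_times_ms)

    # Assumed variance measure: absolute change between consecutive frames.
    deltas = [abs(b - a) for a, b in zip(frame_times_ms, frame_times_ms[1:])]

    return {
        "avg_fps": avg_fps,
        "min_fps": min_fps,
        "avg_delta_ms": mean(deltas),
        "max_delta_ms": max(deltas),
    }


# Two made-up runs with the same average frame rate.
smooth = [20.0] * 10           # every frame takes 20 ms
uneven = [10.0, 30.0] * 5      # frames alternate between 10 ms and 30 ms

print(frame_metrics(smooth))
print(frame_metrics(uneven))
```

Both made-up runs average 50 FPS, but the second one swings 20 ms from frame to frame. That kind of swing is what shows up as micro-stuttering even when the average looks healthy.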

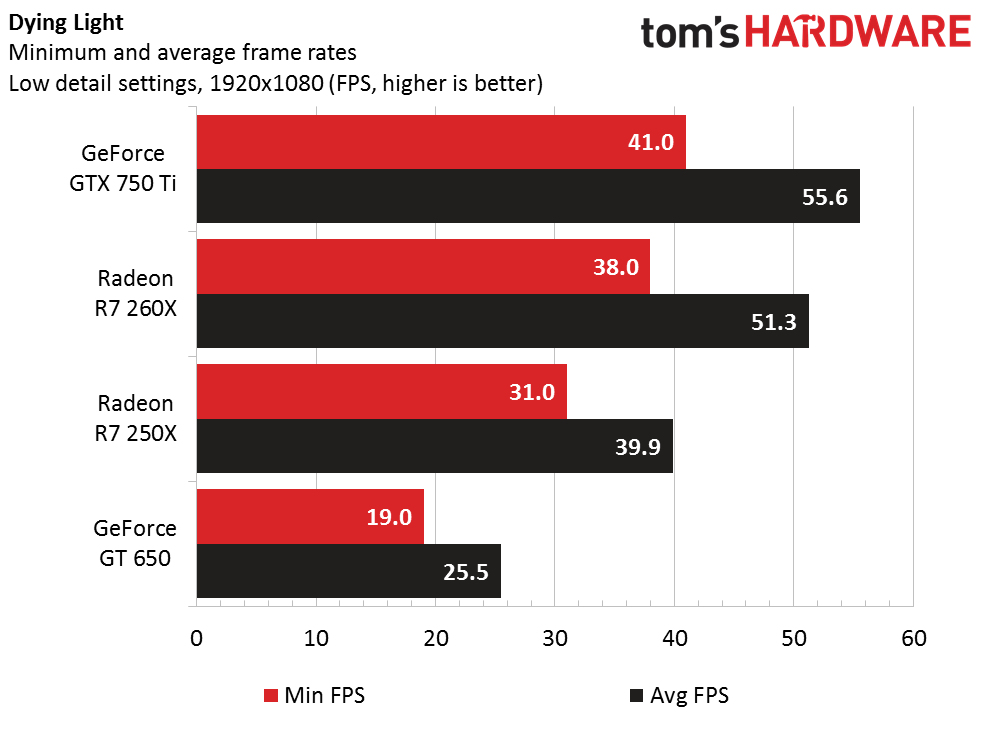

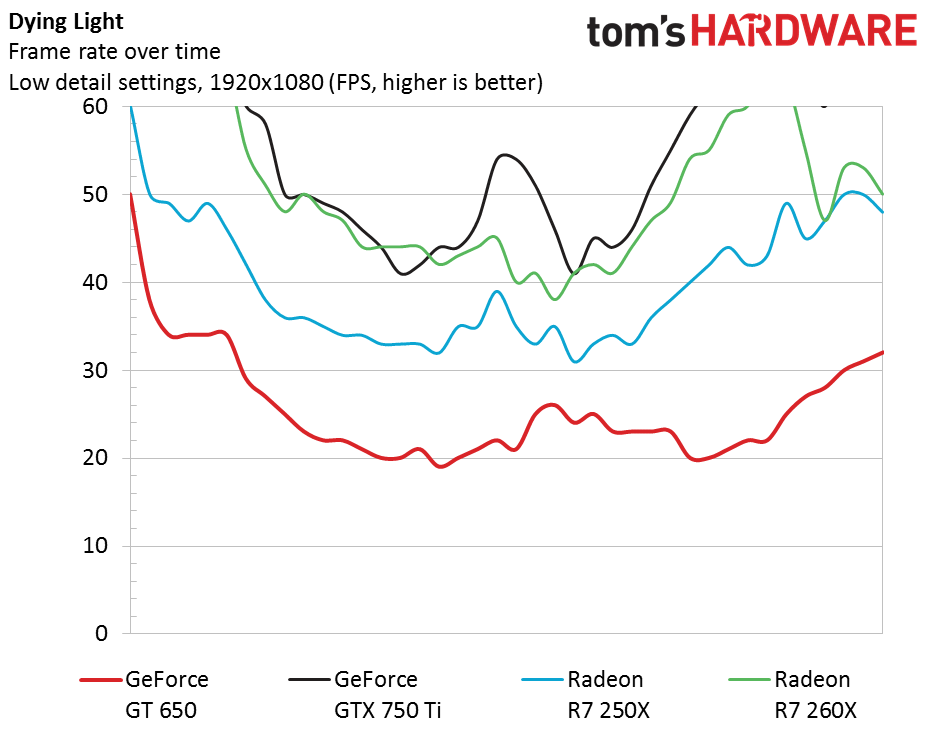

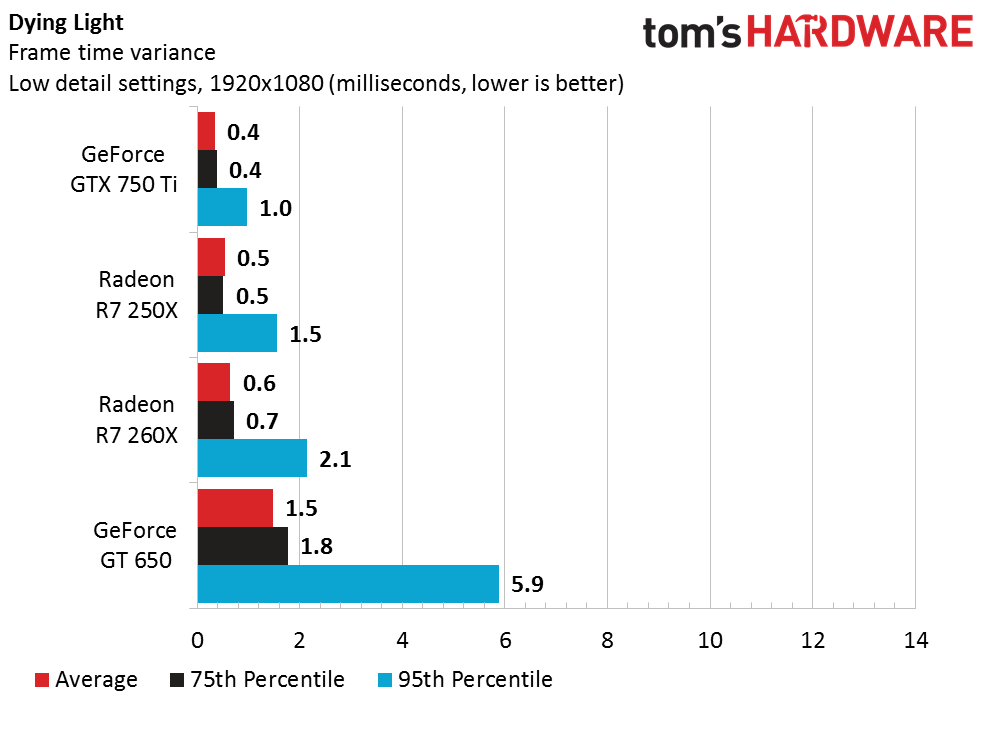

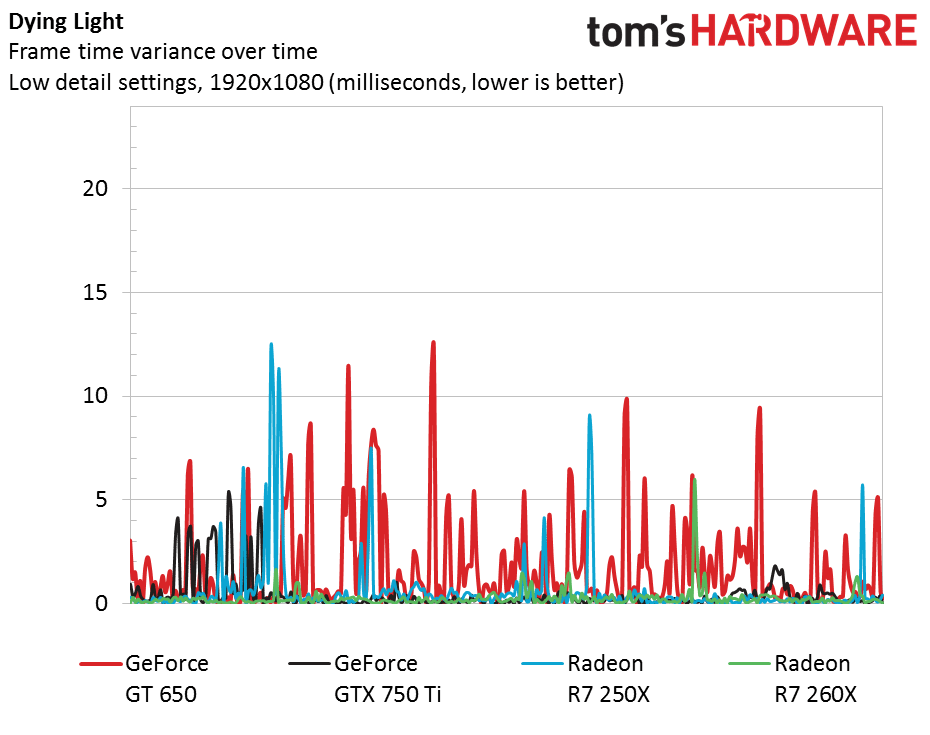

Low Details, 1920x1080 (1080p)

Moving from 720p to 1080p doesn't change the picture profoundly, since frame rates were already low for most of these cards. AMD's Radeon R7 250X maintains a 30 FPS minimum, but just barely. The GeForce GTX 750 Ti remains at the top of the pack, though the Radeon R7 260X closes the gap by a fair amount. Frame time variance is really only a problem for the GeForce GTX 650, though its frame rates are too low to be playable anyway.

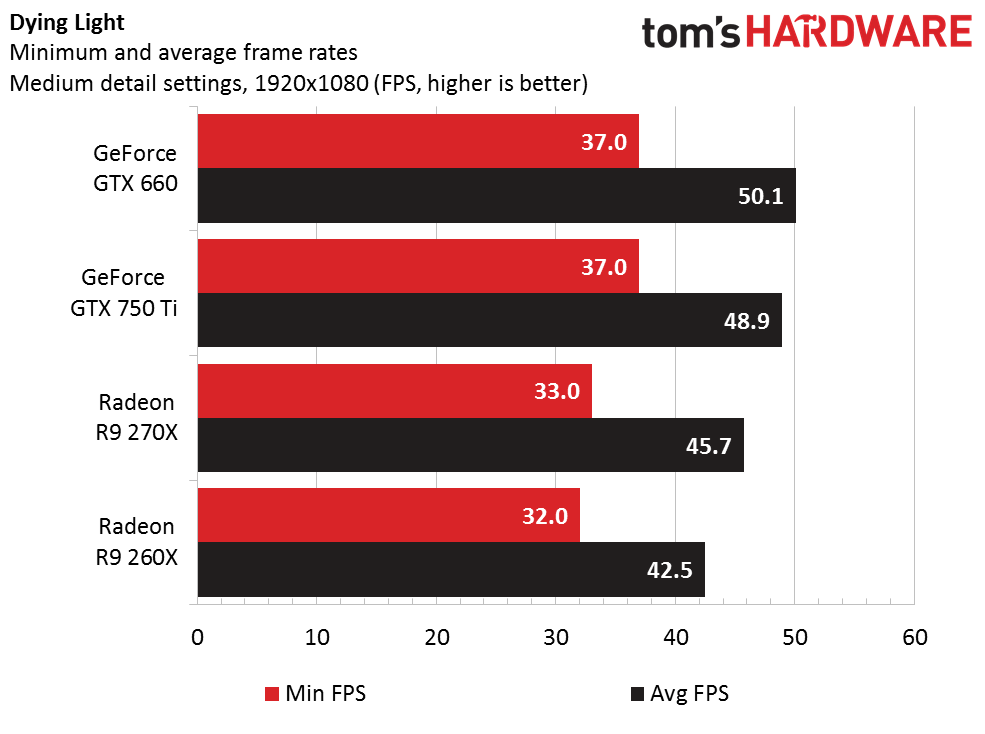

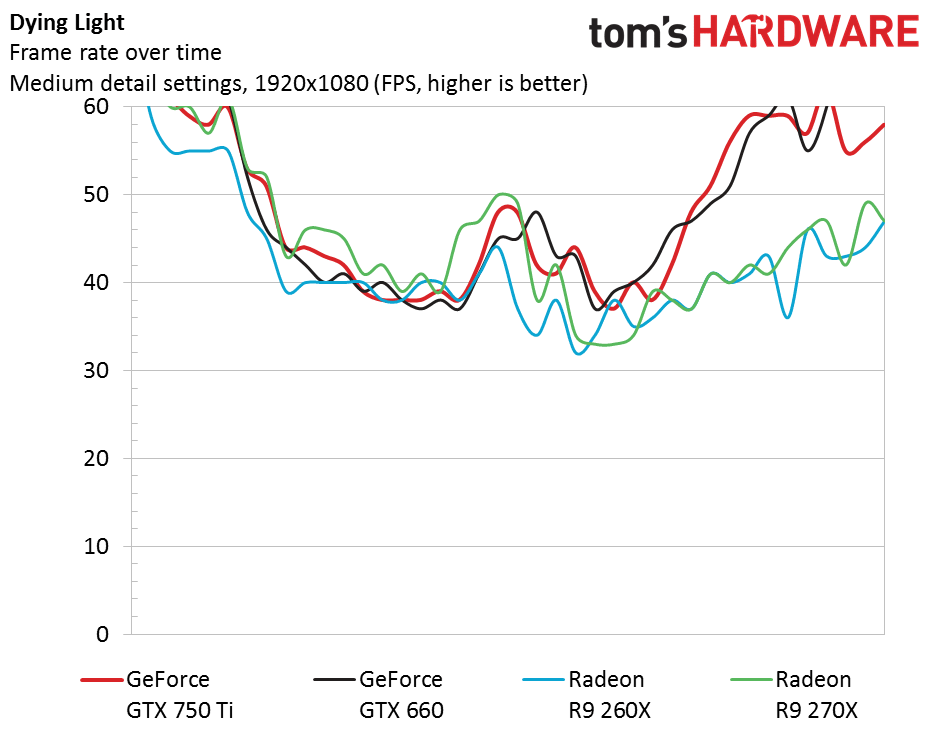

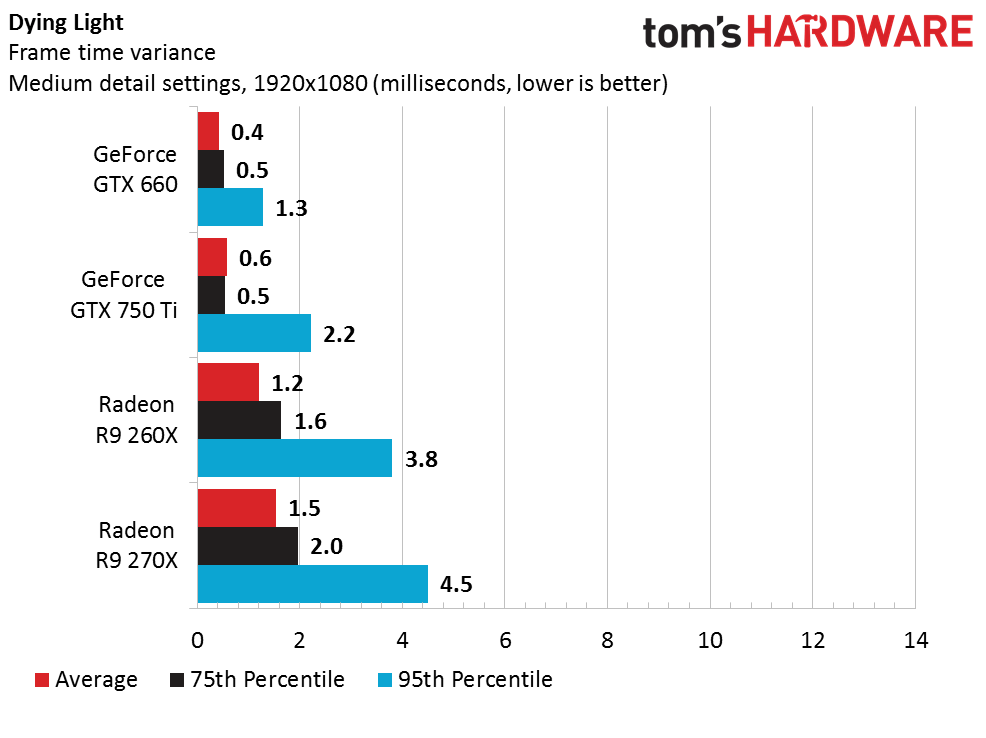

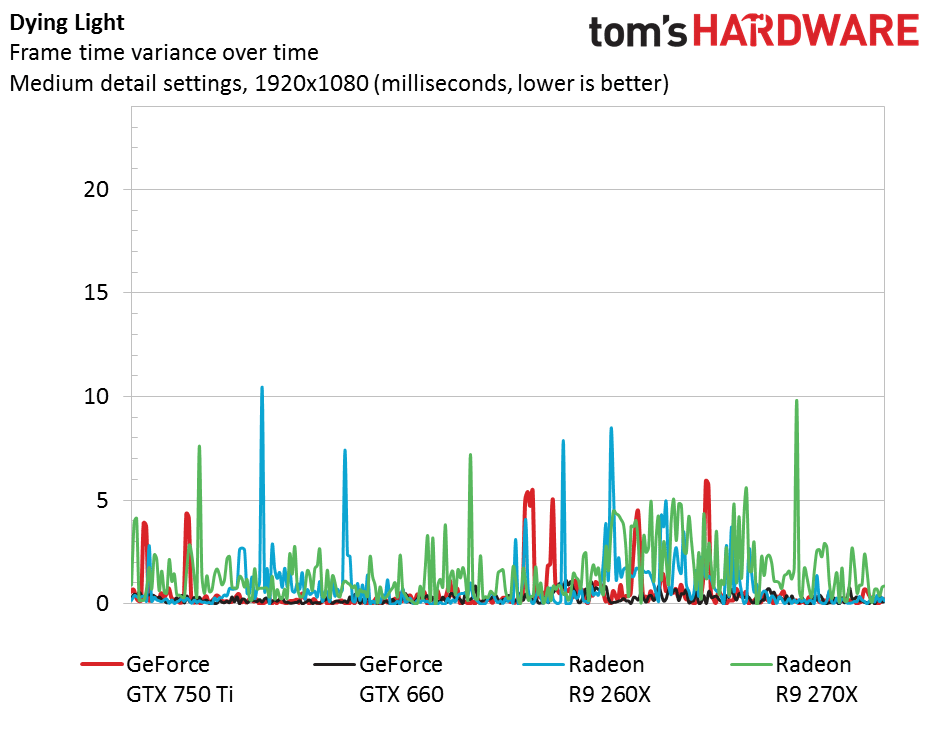

Medium Details, 1920x1080 (1080p)

Increasing the detail preset to Medium evens out the playing field a bit; all of the cards we tested at this setting come close to each other. The GeForce GTX 750 Ti holds its own against the GTX 660, while AMD's Radeon R9 270X does not perform as well. Frame time variance continues to be acceptable, although the GeForce cards show slightly better results.

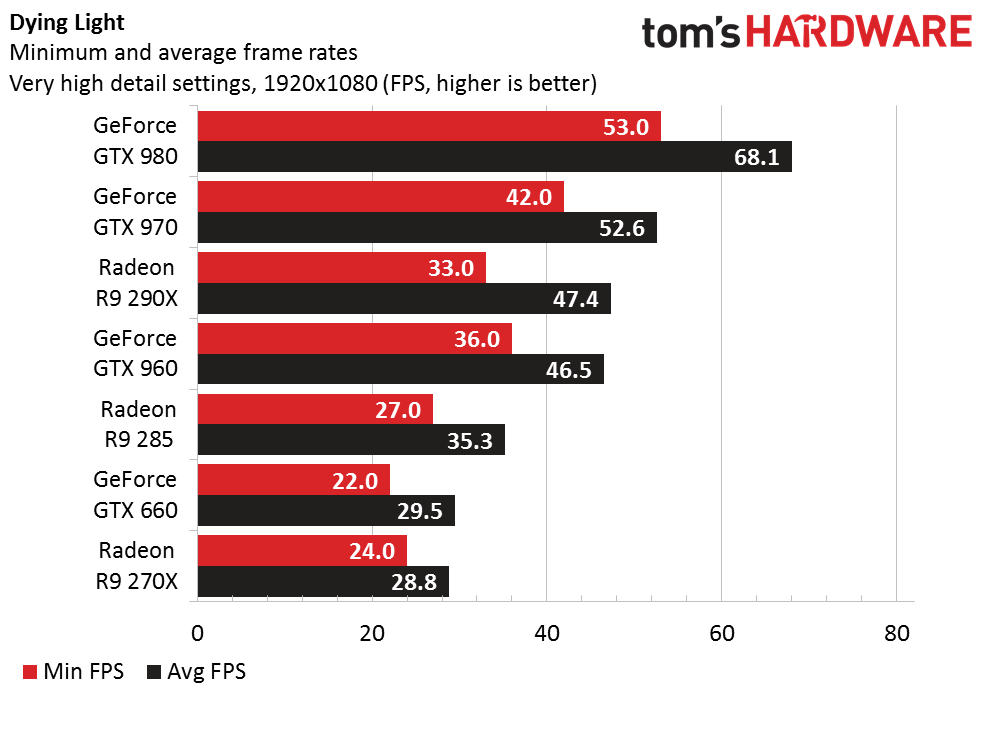

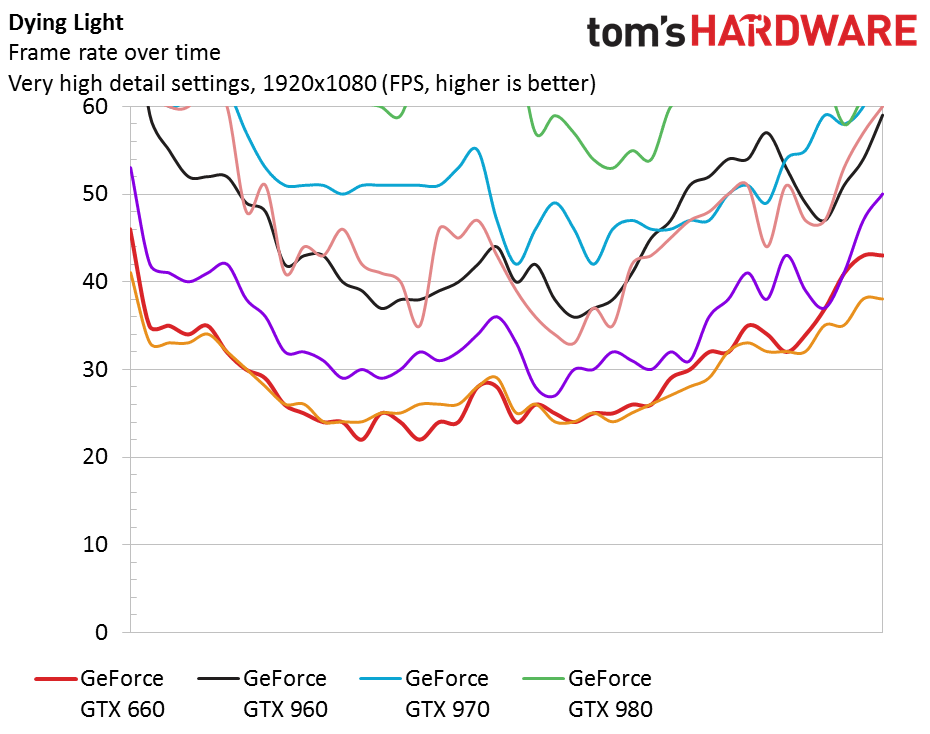

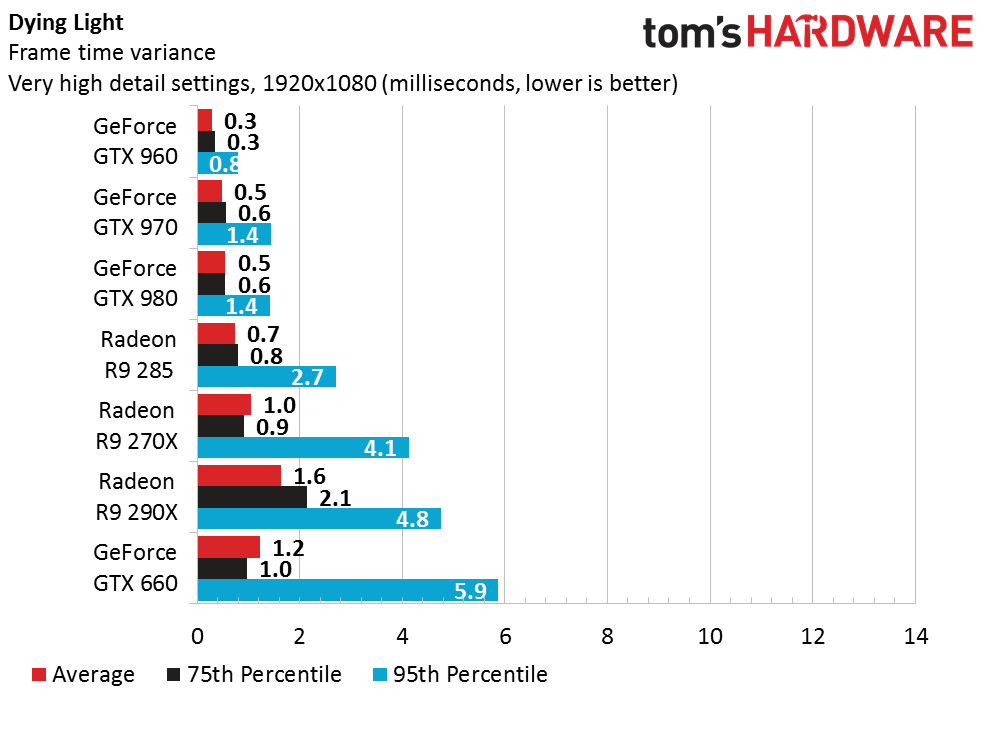

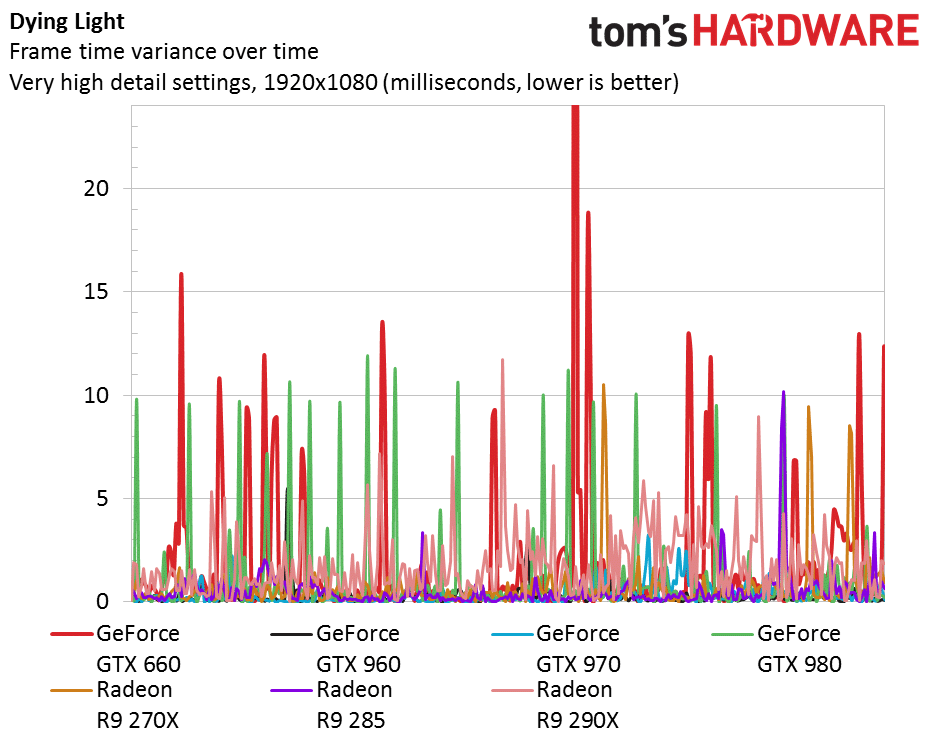

Very High Details, 1920x1080 (1080p)

With the highest detail settings enabled, mid-range cards are forced to their knees. The Radeon R9 285 drops under the 30 FPS mark, and the GeForce GTX 960 pulls past it. What's really interesting, though, is that the Radeon R9 290X also struggles to maintain a minimum frame rate over 30 FPS, battling on the level of Nvidia's GeForce GTX 960. It is apparent that AMD's hardware isn't well-optimized for this game yet. We can only hope that this is one driver revision away from being resolved.


chimera201 i5 in FPS chart, i7 in FPS over time chart. Which one is it?

Edit: Gotta say that FX 9590 looks like a joke

alidan there is one setting in the game, i believe its draw distance, that is able to halve if not drop the games fps to 1/3rd what you would get if you set it to minimum, and from what people have tested, it impacts gameplay in almost no meaningful way.

what was that set to?

did you change it per benchmark?

is it before or after they patched it so even on max draw distance they lowered how far the game was drawing?

i know on my brothers 290X, i dont know if he was doing 1920x1200 or 2560x1600 was benching SIGNIFICANTLY higher than is shown here.

when you do benchmarks like this in the future, do you mind going through 3 or 4 setups and trying to get them to play at 60fps and list what options you have to tick to get that? it would be SO nice having an in depth analysis for games like this, or dragon age which i had to restart maybe 40 god damn times to see if i dialed in so i have the best mix between visuals and fps...

rush21hit Q6600 default clock

2x2GB DDR2 800mhz

Gigabyte g31m-es2L

GTX 750Ti 2GB DDR5

Res: 1366x768

Just a piece of advice to anyone on about the same boat as mine(old PC+new GPU and want to play this), just disable that Depth of Field and/or Ambient Occlusion effect(also applies on any latest game titles). And you're fine with your new GPU + its latest driver. Mine stays within 40-60FPS range without any lag on input. While running it on Very High Preset on other things...just without those effects.

Those effects are the culprits for performance drops, most of the time.

Cryio Was Core Parking taken into account when benchmarking on AMD hardware? It makes no sense that the FX 4170 is faster than the 9590.

The game works rather meh on my 560 Ti and FX 6300 @ 4.5 GHz. But once I mess with the core affinity in task manager my GPU is getting 99% usage and all is right with the world.

ohim Just shows how badly they optimize for AMD hardware ...no wonder everything works faster on Intel. This comes from an Intel CPU user BTW.

xpeh Typical Nvidia Gameworks title. Anyone remember Metro: Last Light? Unplayable on AMD cards until 4A issued a game update a few months later. Can't make a card that competes? Pay off the game developers.

Grognak @ xpeh - Couldn't agree more. A 750 Ti beating a 270X? 980 better than 295X2? I'm gonna stay polite but this is beyond ridiculous. This is pure, unabashed, sponsored favoritism.

Edit: after checking some other sites it seems the results are all over the place. Some are similar to Tom's while others appear to be relatively neutral regarding both GPU and CPU performance (though the FXs still struggle against a modern i3)

Empyah Sorry guys but you messed something up in these tests - cause my 290X is getting higher averages than your 980 (I've got a 4930K and view distance at 50%). Everybody knows that you are heavily biased towards Nvidia and Intel, to the point it stops being sad and starts being funny how obvious it is - but if this test is on purpose we have ourselves found a new low today - c'mon guys, we're in the same boat here - we love hardware and want competition.

silverblue Looks like very high CPU overhead with the AMD drivers, and really poor use of multiple cores with the CPU test. The former can be solved easier, the latter sounds like the developers haven't yet grasped the idea of more than two CPU cores working on a problem. Is this game really heavy on the L3 cache? It could explain major issues for the 9590 in trying to use it effectively with more cores and its higher clock speeds counting for naught (and/or the CPU is being throttled), but as the L3 cache is screwed on FX CPUs, that would also have a detrimental effect on things - it'd be worth testing a 7850 alongside a Phenom II X4/X6 to see if the removal of L3 or falling back to a CPU family with good L3 would make any sort of difference to performance.