SSD 102: The Ins And Outs Of Solid State Storage

The benefits introduced by solid state drives are undeniable. However, there are a few pitfalls to consider when switching to this latest storage technology. This article provides a rundown for beginners and decision makers.

How SSDs Work

With the internal SSDs we're discussing today, flash memory and a controller are installed onto a printed circuit board (PCB) and packaged into a small enclosure. This housing is typically in one of the 1.8”, 2.5”, or 3.5” form factors that we all know and love from conventional hard drives. These can be mounted into PCs, laptops, or certain rackmount server environments. Indeed, flash SSDs look and largely behave like hard drives, with the exceptions that there are no moving parts and they weigh less. In addition, modern SSDs require very little cooling. Most SSDs employ a 2.5” housing and utilize 3 or 6Gb/s interface speeds.

MLC and SLC NAND Flash

Internally, all flash SSD products store data in either single-level cell (SLC) or multi-level cell (MLC) NAND memory, which holds a single bit or multiple bits per cell, respectively. SLC offers less capacity per transistor than MLC, but higher write performance and data durability.
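To make the SLC/MLC trade-off concrete, here is a minimal Python sketch. It relies on one general fact: a cell that stores n bits must distinguish 2^n charge states. The function names and the 8 Kbit example capacity are illustrative assumptions, not taken from any vendor's datasheet.

```python
def voltage_levels(bits_per_cell: int) -> int:
    """Number of distinct charge states a cell must tell apart."""
    if bits_per_cell < 1:
        raise ValueError("a cell stores at least one bit")
    return 2 ** bits_per_cell


def cells_needed(capacity_bits: int, bits_per_cell: int) -> int:
    """Cells required to hold capacity_bits (rounded up)."""
    if capacity_bits < 0:
        raise ValueError("capacity cannot be negative")
    return -(-capacity_bits // bits_per_cell)  # ceiling division


if __name__ == "__main__":
    # Hypothetical 8 Kbit array, compared for SLC and 2-bit MLC.
    for name, bits in (("SLC", 1), ("2-bit MLC", 2)):
        print(name, voltage_levels(bits), "levels,",
              cells_needed(8 * 1024, bits), "cells")
```

Packing more levels into the same cell halves the cell count here, but each level gets a narrower margin, which is one common way to explain why MLC tends to write more slowly and wear out sooner than SLC.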

Modern Controller Architectures

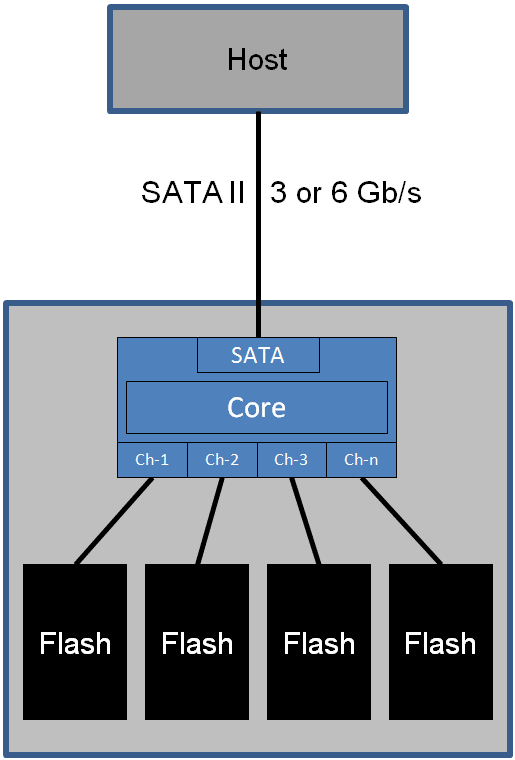

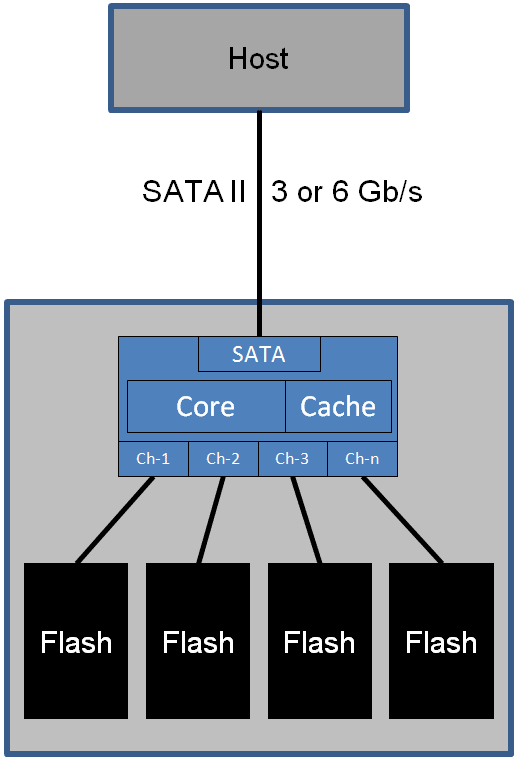

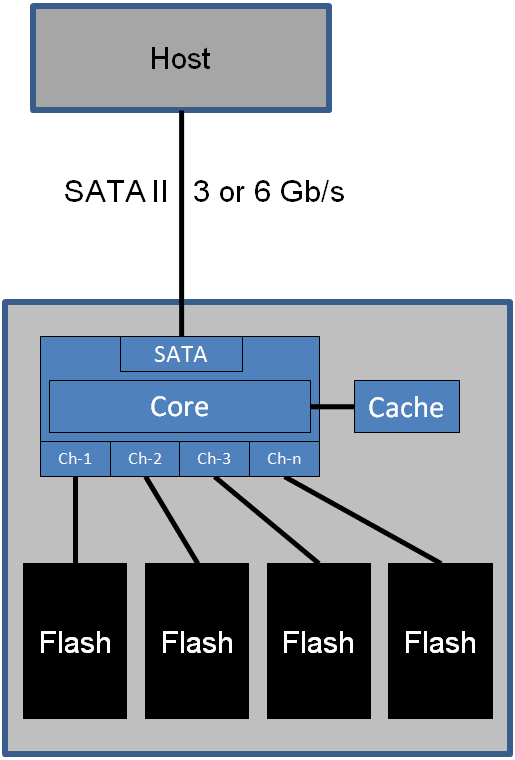

All SSD designs are based on flash controllers that drive the storage circuits and connect to the host system via Serial ATA. Modern designs utilize the controller "brain" to tackle various needs. For example, data durability is addressed through wear leveling algorithms, ensuring that flash memory cell usage distribution is as even as possible to maximize the device’s life span. Performance is optimized through multiple flash memory channels, load balancing, and different methods of caching. Some controllers have an integrated cache, others work with a separate DRAM memory chip, and other designs utilize a part of the flash memory across multiple channels for data reorganization. Please read the article Tom’s Hardware’s Summer Guide: 17 SSDs Rounded Up for more details on architectures and specific products.
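The wear leveling idea can be sketched in a few lines of Python. This is a toy model under stated assumptions, not how any particular controller works: real firmware also tracks static data, bad blocks, and logical-to-physical mapping. The class name `WearLeveler` and its methods are hypothetical.

```python
class WearLeveler:
    """Toy allocator: always hand out the free block with the fewest erases."""

    def __init__(self, num_blocks: int) -> None:
        if num_blocks < 1:
            raise ValueError("need at least one block")
        self.erase_counts = [0] * num_blocks
        self.free = set(range(num_blocks))

    def allocate(self) -> int:
        """Pick the least-worn free block (lowest index breaks ties)."""
        if not self.free:
            raise RuntimeError("no free blocks")
        block = min(self.free, key=lambda b: (self.erase_counts[b], b))
        self.free.remove(block)
        return block

    def erase(self, block: int) -> None:
        """Erase a block in use, count the wear, and return it to the pool."""
        if not 0 <= block < len(self.erase_counts):
            raise ValueError("unknown block")
        if block in self.free:
            raise ValueError("block is already free")
        self.erase_counts[block] += 1
        self.free.add(block)


if __name__ == "__main__":
    leveler = WearLeveler(num_blocks=8)
    for _ in range(1000):          # simulate 1000 write/erase cycles
        leveler.erase(leveler.allocate())
    print(leveler.erase_counts)
```

Because every allocation goes to the least-erased block, the erase counts stay within one of each other in this simulation, which is the "as even as possible" distribution described above.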

Trend: Toggle DDR NAND Flash

Samsung introduced Toggle DDR NAND flash memory a few months ago. This is a flash memory design that transfers data on both the rising and falling edges of a memory signal, much like DDR DRAM. This approach debuted in the enterprise segment but will soon also be available in consumer SSDs. The main benefit of Toggle DDR is its increased bandwidth of 66 to 133 Mb/s per channel as opposed to 40 Mb/s. Drives using the new approach will probably not exploit the higher peak bandwidth, but will instead try to maximize SATA II performance on 3 Gb/s interfaces while further lowering power consumption. We’ll explain in a bit why this is important.
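Two pieces of arithmetic sit behind this paragraph, sketched below in Python. The first is that a double data rate signal moves two transfers per clock cycle instead of one. The second is that SATA uses 8b/10b encoding, so every data byte costs 10 bits on the wire and a 3 Gb/s link carries at most about 300 MB/s of payload. The 50 MHz clock is a purely illustrative assumption and is not taken from the Toggle DDR specification; the function names are hypothetical.

```python
def transfers_per_second(clock_hz: float, double_data_rate: bool) -> float:
    """Transfers per second on one signal line: two per cycle for DDR, one otherwise."""
    return clock_hz * (2 if double_data_rate else 1)


def sata_payload_mb_per_s(line_rate_gbit: float) -> float:
    """Upper bound on SATA payload in MB/s, given 10 line bits per data byte (8b/10b)."""
    return line_rate_gbit * 1e9 / 10 / 1e6


if __name__ == "__main__":
    clock = 50e6  # hypothetical clock, for illustration only
    print("SDR transfers/s:", transfers_per_second(clock, False))
    print("DDR transfers/s:", transfers_per_second(clock, True))
    for rate in (3, 6):
        print(f"SATA {rate} Gb/s payload ceiling:", sata_payload_mb_per_s(rate), "MB/s")
```

The payload ceiling is why faster flash alone does not raise throughput on a 3 Gb/s port: once the channels together can fill the link, extra per-channel bandwidth can be traded for fewer active channels or lower power instead.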



Patrick Schmid was the editor-in-chief for Tom's Hardware from 2005 to 2006. He wrote numerous articles on a wide range of hardware topics, including storage, CPUs, and system builds.


Lewis57 A very good article. I love these articles explaining everything. I'm planning on buying two OCZ Vertex 2E 60GB for RAID-0 when I get enough money. Can't wait, should be one hell of an upgrade from a single 5400rpm WD green drive.

ares1214 Memristors might make SSDs shorter-lived than people thought, but who knows. Great article btw.

JoeSchmuck From what I understand, TRIM is supported under IDE mode using Win7 as well, so you do not need AHCI. I have a Samsung device with VBM19C1Q firmware and am running it in IDE mode.

Earlier this year we deployed a 5 node failover cluster with iSCSI backend. Each of the VM host servers utilizes a pair of solid state drives for booting and operating, with VMs running off of iSCSI shared cluster volumes. The servers are unbelievably fast and stable - 6 months of 100% uptime on Windows 2008R2. We only use magnetic HDDs now for transporting backups off site.


One thing I'm very curious about: if we follow Tom's Hardware's advice to turn off disk defragmentation, the files on the SSD will become fragmented over time.

Upon SSD data loss, can we recover the data files if they're fragmented, especially on an SSD that has never been defragmented, as Tom's Hardware recommended?

randomizer Defragmentation of an SSD is not entirely unnecessary. It's important to distinguish between file fragmentation and free space fragmentation. The former is not an issue with SSDs because all parts of an SSD can be read at the same rate (the same is true for writing if the blocks are clean). But fragmentation of free space, whereby free space is largely distributed across partially-filled blocks, can severely reduce the performance of an SSD. Any time a file smaller than 512 kB is written to an SSD, it will take up only part of a block. However, the SSD will eventually run out of clean blocks and will need to rearrange the data by erasing partially-filled blocks and consolidating them to free up more blocks for further writing. Running a free space defragmentation on the drive will aggressively consolidate the data on demand so that you don't have the problem occurring when you didn't plan for it.

Most SSDs will perform this process themselves when idle for extended periods, but it happens at a slow rate. This is what most manufacturers refer to when they talk about Garbage Collection.

Alvin Smith Please send me the four fastest 256GB SSDs on the market, so that I might perform my own comparison ... I'll just sit by the door and wait for UPS to arrive.

Thanks, in advance !!

= Alvin =


gordonaus I put an SSD in my new computer and it was good, but after I got the firmware update and changed to AHCI it was AMAZING (OCZ Vertex 2 60GB). I would say, though, that 60 GB is not enough: I installed Windows, Photoshop, and a few other design programs and I only have 20GB left.