Apple iPad Mini Review: Our New Favorite Size, But...That Price?

Web Browsing Tests

The restrictive nature of mobile operating systems like iOS and Windows RT is stunting cross-platform benchmark development. Although Google is more flexible about development on Android, quality benchmarks remain few and far between. As a result, we’re often left with Geekbench and GLBenchmark as our two performance-oriented tablet metrics. Using just two benchmarks, both of which are theoretical, makes me uncomfortable, even if the results scale as we'd expect them to. Right now, browser-based tests help fill that void, if only because they’re easy to run.

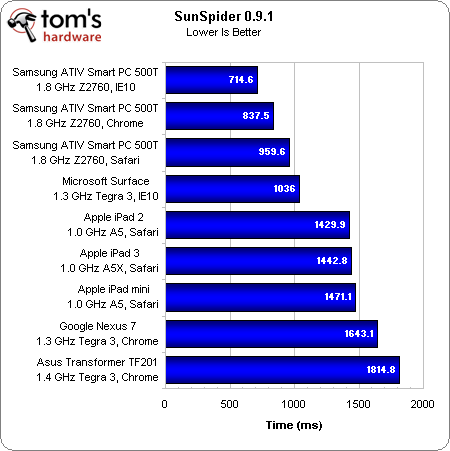

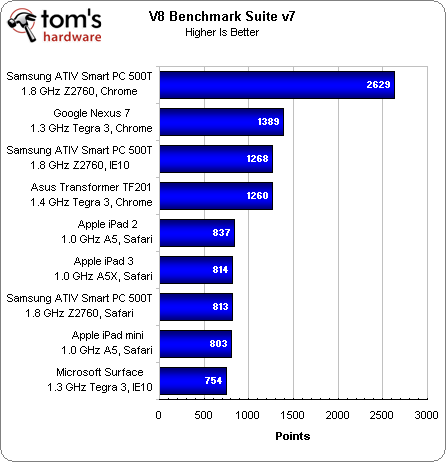

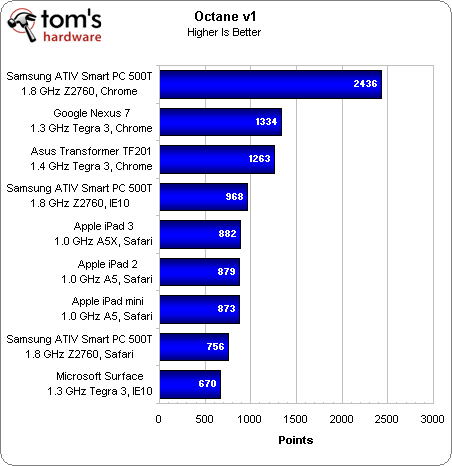

SunSpider, the V8 Benchmark Suite, and now Octane are ubiquitous in tablet and smartphone reviews. However, they are imperfect. As on the desktop, swapping from one browser to another can dramatically change the performance reflected in a browser-oriented test without necessarily reflecting the performance of your platform. In short, the browser is a variable. At least if you keep all of your hardware constant, the Web browser is the only component that changes. Here, though, we're using different browsers on different hardware, so it's difficult to draw conclusions about either.

Even though it's a more expensive machine running the full version of Windows 8, I'm including results from our upcoming Samsung ATIV Smart PC 500T review for a couple of reasons. First, it's based on Intel's Atom Z2760 (Clover Trail), allowing us to compare a slightly faster version of the Medfield SoC, designed for tablets, to the ARM-based competition. Second, it’s the first truly mobile hardware platform that lets us test several browsers (IE10, Chrome, and Safari).

When you keep your hardware platform constant, IE10 rises to the top in SunSpider (at least under Windows 8), while Chrome tops the chart in V8 and Octane. They're all JavaScript-based benchmarks, but Google publishes the latter two, so it's a little difficult for us to take them seriously.

Apple’s iPad mini performs on par with the iPad 2, as we'd expect. It trails the Nexus 7 in V8 and Octane, but beats the Google tablet in SunSpider.

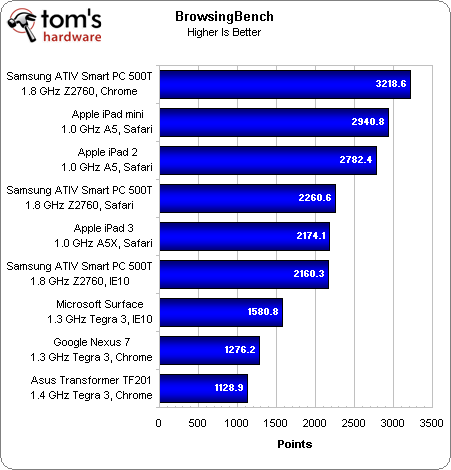

BrowsingBench was created by the Embedded Microprocessor Benchmark Consortium, a non-profit organization tasked with finding ways to develop testing methodology, specifically for embedded hardware. We've been playing around with this tool in the lab, and we love it. Intended for testing "smartphones, netbooks, portable gaming devices, navigation devices, and IP set-top boxes," it's also useful for measuring browser performance in general.

Unlike SunSpider or V8, BrowsingBench evaluates the total performance of a browser: page loading, processing, rendering, compositing, and so on. This helps reflect real-world use, unlike a single JavaScript-based metric. Frankly, these results are more representative of our own subjective experience.


The second- and third-generation iPads share the same CPU hardware, and yet the iPad 2 pushes ahead. Resolution plays a big part in the rendering workload, and at 2048x1536, more is asked of the third-gen iPad than the iPad 2 at 1024x768. So even though the iPad 3 sports twice as many graphics cores and a beefier memory subsystem, it’s asked to render four times as many pixels.
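The resolution arithmetic is easy to check. The short sketch below simply multiplies out the two native resolutions quoted above; the constant and function names are our own, chosen for illustration, and it says nothing about how BrowsingBench itself weighs rendering work.

```python
# Native panel resolutions quoted in the text, as (width, height) in pixels.
IPAD_2 = (1024, 768)
IPAD_3 = (2048, 1536)


def pixel_count(resolution):
    """Total number of pixels on a panel with the given resolution."""
    width, height = resolution
    return width * height


def pixel_ratio(a, b):
    """How many times more pixels panel a has than panel b."""
    return pixel_count(a) / pixel_count(b)


print(pixel_count(IPAD_2))          # 786432
print(pixel_count(IPAD_3))          # 3145728
print(pixel_ratio(IPAD_3, IPAD_2))  # 4.0
```

Doubling both dimensions quadruples the pixel count, so a GPU with twice the cores still has roughly twice the per-core pixel load at native resolution, all else being equal.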

And that takes us to the iPad mini. You'd think that because it shares the same SoC and native resolution as the iPad 2, both tablets would perform similarly. We connected both devices over 2.4 GHz 802.11n, and, despite repeated test iterations, the iPad mini always comes out 100 points or more ahead of the iPad 2.

BrowsingBench measures the totality of performance, and the two tablets differ in ways beyond the SoC that may affect the result. For example, we know the iPad mini employs the Murata 339S0171 Wi-Fi module based on Broadcom’s BCM4334 chipset, whereas the iPad 2 leverages Broadcom’s BCM4329.


azathoth While the device is certainly nice, I don't like the fact that it has no support for MicroSD, and I would be unable to tinker around with it as I can for an Android based device.

...And the price. I'm not going to give a second thought when I see a $200 tablet with removable storage versus $330 for 16GB of internal storage and no expansion options.

If the device was closer to say $260 for the 32GB version, or just included an option for removable storage... Then I would certainly see the iPad mini as being a viable option even for someone used to Android.

The main factors (in my opinion) for a great device are,

1: A good quality screen, it needs to have vibrant, accurate colours.

2: Even if during benchmarks the device is slow, if it FEELS snappy and quick, that's all that counts.

3: Removable storage for god sake, I know by practice apple enjoys their closed system, but COME ON!

4: It doesn't need to have some amazing 15 hour battery life, but I certainly don't want it to die on a full charge after a movie and a few youtube videos.

hardcore_gamer I'm waiting for a 7-inch version of the Surface. It is the only productive tablet out there.

Darkerson Overpriced, but that's not really a surprise, since it's an Apple product. Sadly, people are eating them up regardless.

shikamaru31789 It's definitely overpriced, but I've come to expect that with Apple, you're mostly paying for a name and some unique styling with them. It has some features going for it, but I wouldn't buy one, not when there are several cheaper options in the mini tablet lineup. That's not stopping the legions of Apple sheep from buying it though.

Tomtompiper This is a blatant rip off of the Samsung Tab, I hope Samsung sue their ass off :kaola:

Jigo (replying to azathoth):

you're right

I'm glad that I bought the Nexus 7. 16GB is enough, and once rooted I can plug in an external device. And as for all my techy stuff, I doubt I'll have to send it in before the 2-year warranty expires.