AMD Radeon HD 7990: Eight Games And A Beastly Card For $1,000

We've been waiting for this since 2011. AMD is ready to unveil its Radeon HD 7990, featuring a pair of Tahiti graphics processors. Can the dual-slot board capture our hearts with great compute and 3D performance, or does Nvidia walk away with this round?

Results: Far Cry 3

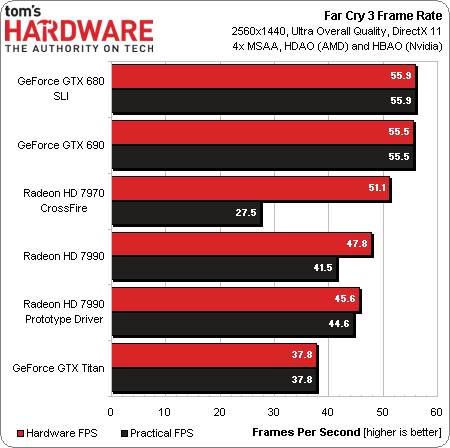

For about $920, two GeForce GTX 680s in SLI deliver the highest average frame rate in Far Cry 3, followed by the pricier GeForce GTX 690. AMD’s Radeon HD 7970s in CrossFire and 7990 would appear to fall in just behind Nvidia’s multi-GPU solutions. However, dropped and runt frames chip away at the frame rate you actually experience, taking two Radeon HD 7970s below even what a single GTX Titan achieves. The Radeon HD 7990 fares better, and is bolstered further by work AMD is doing in preparation for a driver release expected later this year.
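To make the gap between reported and experienced frame rate concrete, here is a minimal Python sketch. It assumes each captured frame is described by the number of scanlines it occupies on screen, and it treats anything below a cutoff as a runt or dropped frame. The function name, the cutoff of 20 scanlines, and the sample data are all hypothetical and chosen for illustration; they are not FCAT's actual settings or our benchmark results.

```python
def practical_fps(scanlines_per_frame, duration_s, runt_threshold=20):
    """Return (raw_fps, practical_fps) for one capture.

    scanlines_per_frame: on-screen height of each rendered frame, in scanlines.
                         A value of 0 stands for a dropped frame.
    duration_s:          length of the capture in seconds.
    runt_threshold:      hypothetical cutoff; frames shorter than this are
                         treated as runts and excluded from the practical rate.
    """
    total = len(scanlines_per_frame)
    shown = sum(1 for s in scanlines_per_frame if s >= runt_threshold)
    return total / duration_s, shown / duration_s


# Made-up capture: eight rendered frames in a quarter of a second,
# two of them runts and one dropped entirely.
frames = [540, 5, 535, 0, 530, 10, 545, 540]
raw, practical = practical_fps(frames, duration_s=0.25)
print(f"raw: {raw:.1f} FPS, practical: {practical:.1f} FPS")
```

In this invented capture, three of the eight frames contribute almost nothing to what you see, so the practical rate lands well below the raw one. That is the same effect that drags the CrossFire pair down in the chart above.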

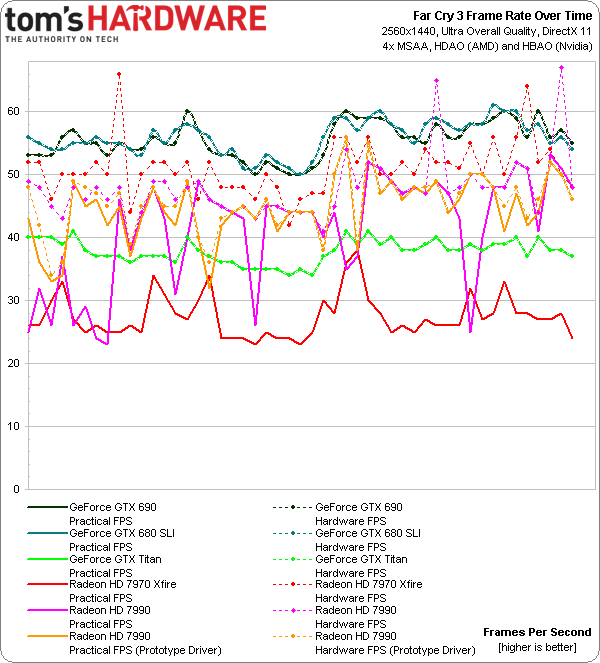

This does not look good for two Radeon HD 7970s in CrossFire. It’s not immediately apparent why they behave so differently from the Radeon HD 7990. However, a peek at the frame-time-over-time chart that FCAT spits out shows the 7970s ranging between 0 and 45 ms per frame throughout our benchmark. The 7990 fluctuates between the same two numbers, but for far less of the test. AMD’s prototype driver cleans up a lot of the dips and spikes, resulting in a better practical frame rate.

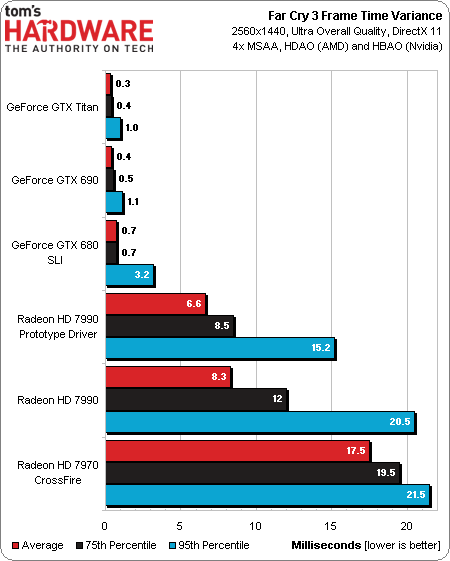

And this is the visual representation of those frame time differences. We see how the 7970s and 7990 are close to comparable at the 95th percentile. However, the Radeon HD 7990 spends a lot less time swinging between high-latency frames, pulling the average and 75th percentile numbers down significantly. AMD’s Malta board isn’t the strongest performer in Far Cry 3, but it runs smoothly more of the time than two Radeon HD 7970s in CrossFire.
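A rough sketch of how numbers like these can be derived follows. It assumes the chart reports the absolute difference between consecutive frame times, and it uses a simple nearest-rank percentile; the tooling behind our charts may do this differently. The helper names and both data sets are made up for illustration.

```python
import math


def percentile(values, pct):
    """Nearest-rank percentile: the smallest value with at least pct% of samples at or below it."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def frame_time_variance(frame_times_ms):
    """Summarize frame-to-frame latency swings for a list of frame times in milliseconds."""
    deltas = [abs(b - a) for a, b in zip(frame_times_ms, frame_times_ms[1:])]
    return {
        "average": sum(deltas) / len(deltas),
        "p75": percentile(deltas, 75),
        "p95": percentile(deltas, 95),
    }


# Invented data: one run that is mostly steady with a single spike,
# and one that alternates between very short and very long frames.
mostly_steady = [16, 17, 16, 18, 16, 17, 16, 54, 16]
swinging = [2, 40, 3, 41, 2, 40, 3, 41, 2]

print(frame_time_variance(mostly_steady))
print(frame_time_variance(swinging))
```

With this invented data, the two runs look nearly identical at the 95th percentile because a single spike is enough to set it. The average and 75th percentile, however, separate the run that swings constantly from the one that only does so occasionally.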


blackmagnum: If I had 1,000 dollars... I would buy a Titan. Its power efficiency, drivers and uber-chip goodness is unmatched.

timw03878: Here's an idea. Take away the 8 games at 40 bucks a piece and deduct that from the insane 1000 price tag.

donquad2001: This test was 99% useless to the average gamer. Test the card at 1900x1080 like most of us use to get a real idea of what it's like. Only your Unigine benchmarks helped the average gamer. Who cares what any card can do at a resolution we can't use anyway?

cangelini (replying to whyso: "Power usage? Thats some nice gains from the prototype driver."): Power is the one thing I didn't have time for. We already know the 7990 is a 375 W card, while GTX 690 is a 300 W card, though. We also know AMD has Zero Core, which is going to shave off power at idle with one GPU shut off. I'm not expecting any surprises on power that those specs and technologies don't already insinuate.

cangelini (replying to donquad2001): If you're looking to game at 1920x1080, I can save you a ton of money by recommending something less than half as expensive. This card is for folks playing at 2560 *at least.* Next time, I'm looking to get FCAT running on a 7680x1440 array ;)

hero1: Nice article. I was hoping that they would have addressed the whining but they haven't and that's a shame. Performance wise it can be matched by GTX 680 SLI and GTX 690 without the huge time variance and runt frames. Let's hope they fix their whining issue and FPS without forcing users to turn on V-sync. For now I know where my money is going, considering that I have dealt with AMD before (XFX and Sapphire) and didn't like the results (whining, artifacts, XF stops working etc). Sorry but I gave the red team a try and I will stick with Nvidia until AMD can prove that they have fixed their issues.