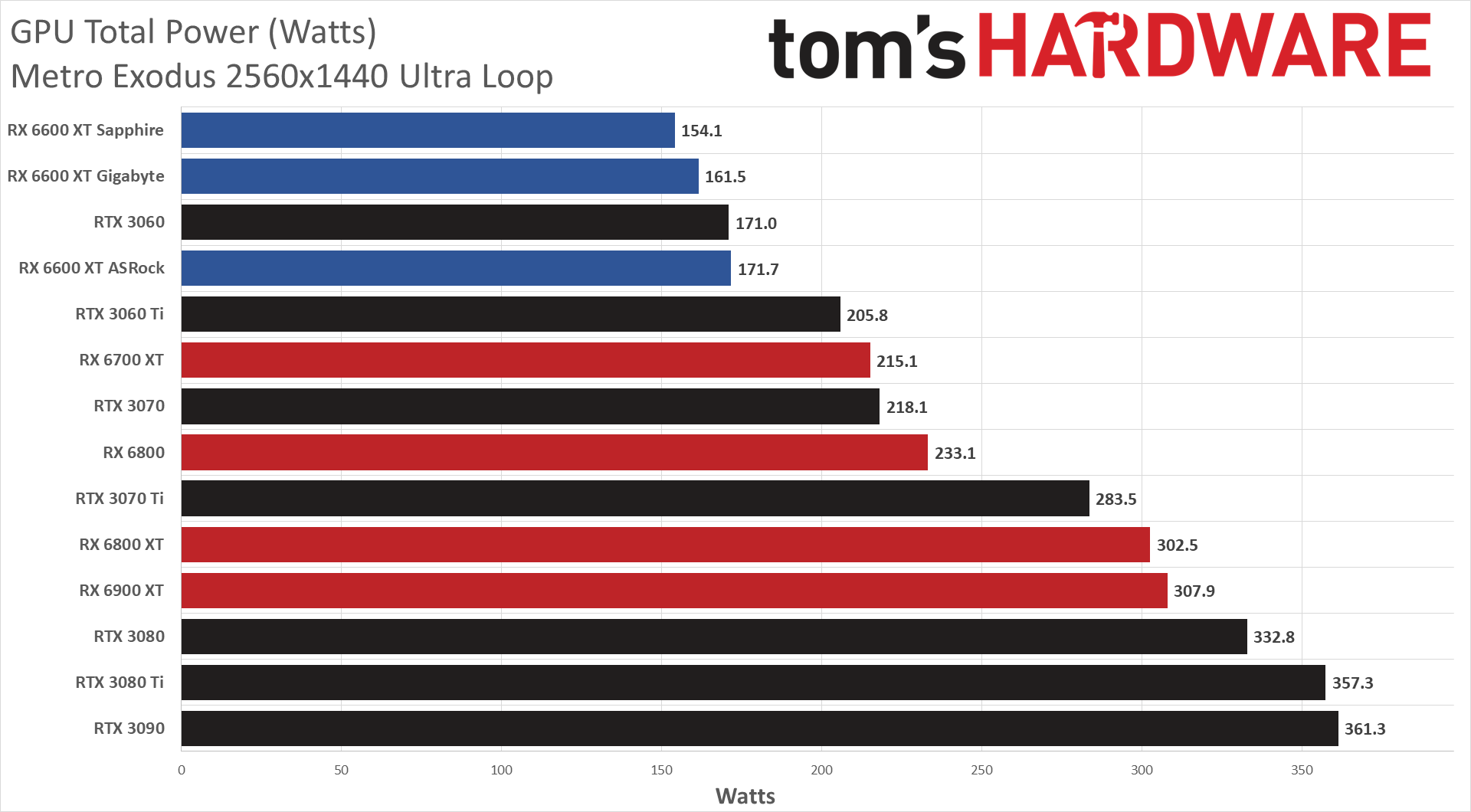

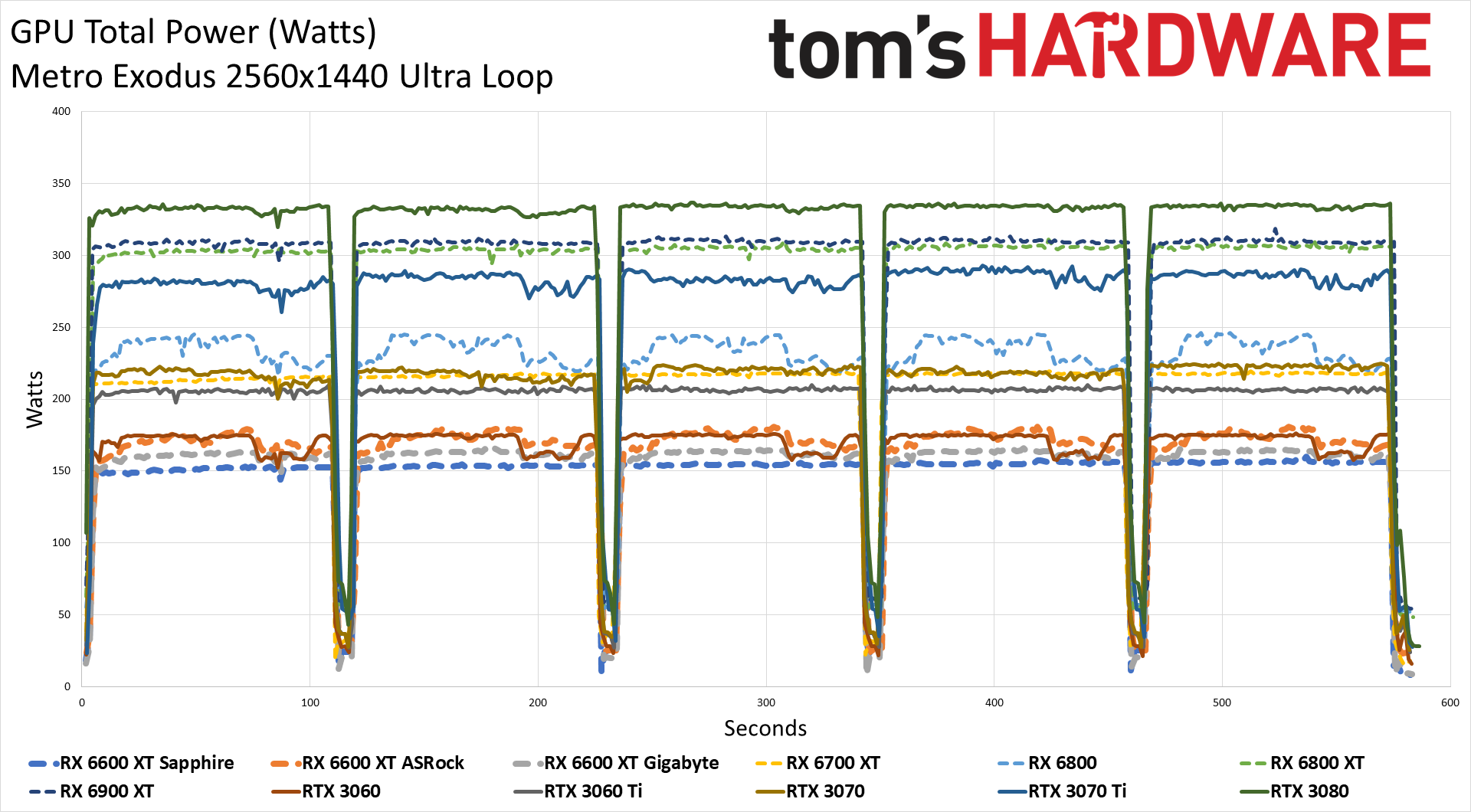

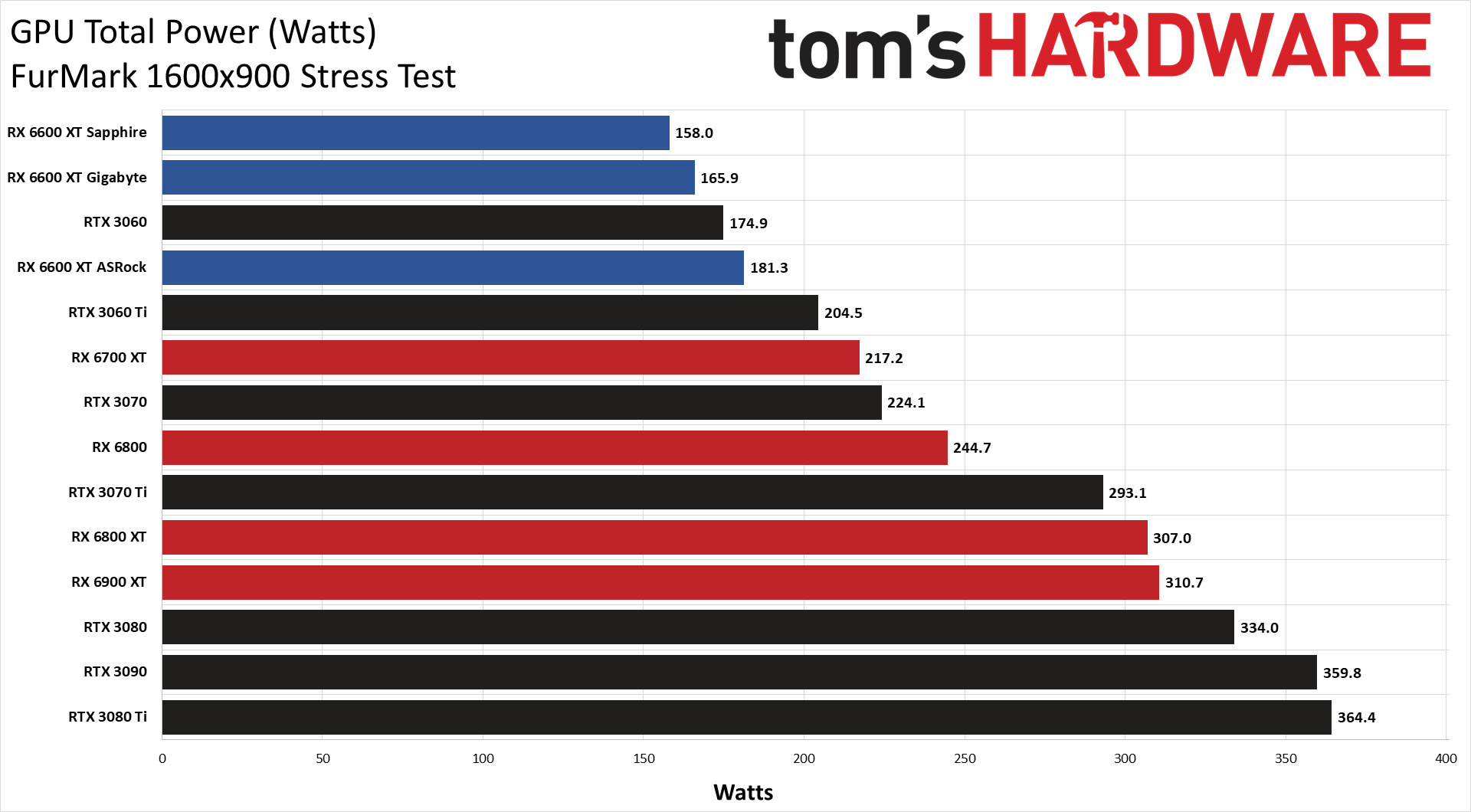

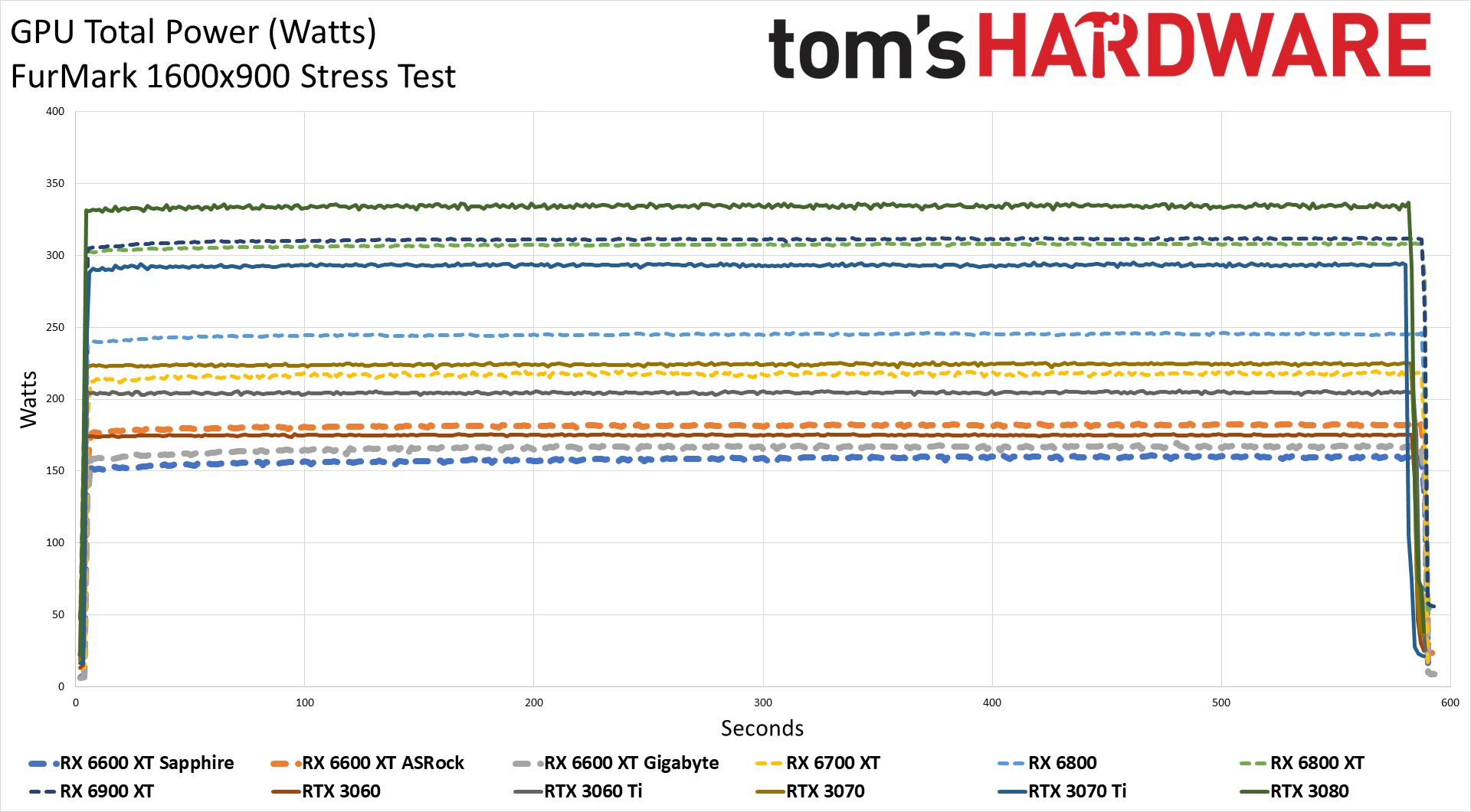

We're running our normal suite of Powenetics tests to check GPU power consumption and other aspects of the cards, using Metro Exodus at 1440p ultra and the FurMark stress test at 900p. Things are a bit more interesting here, as the three RX 6600 XT cards show more noticeable differences. Let's start with power.

The Sapphire Pulse came in slightly below AMD's official 160W TBP (total board power) rating in both Metro Exodus and FurMark, and it used 17–23W less power than the ASRock Phantom. The Gigabyte card landed between the two, though slightly closer to the Sapphire card. Interestingly, the Pulse's power use was measurably lower than the Phantom's despite nearly identical performance, though at least a few watts of the Phantom's extra draw likely go to its RGB lighting and extra fan.

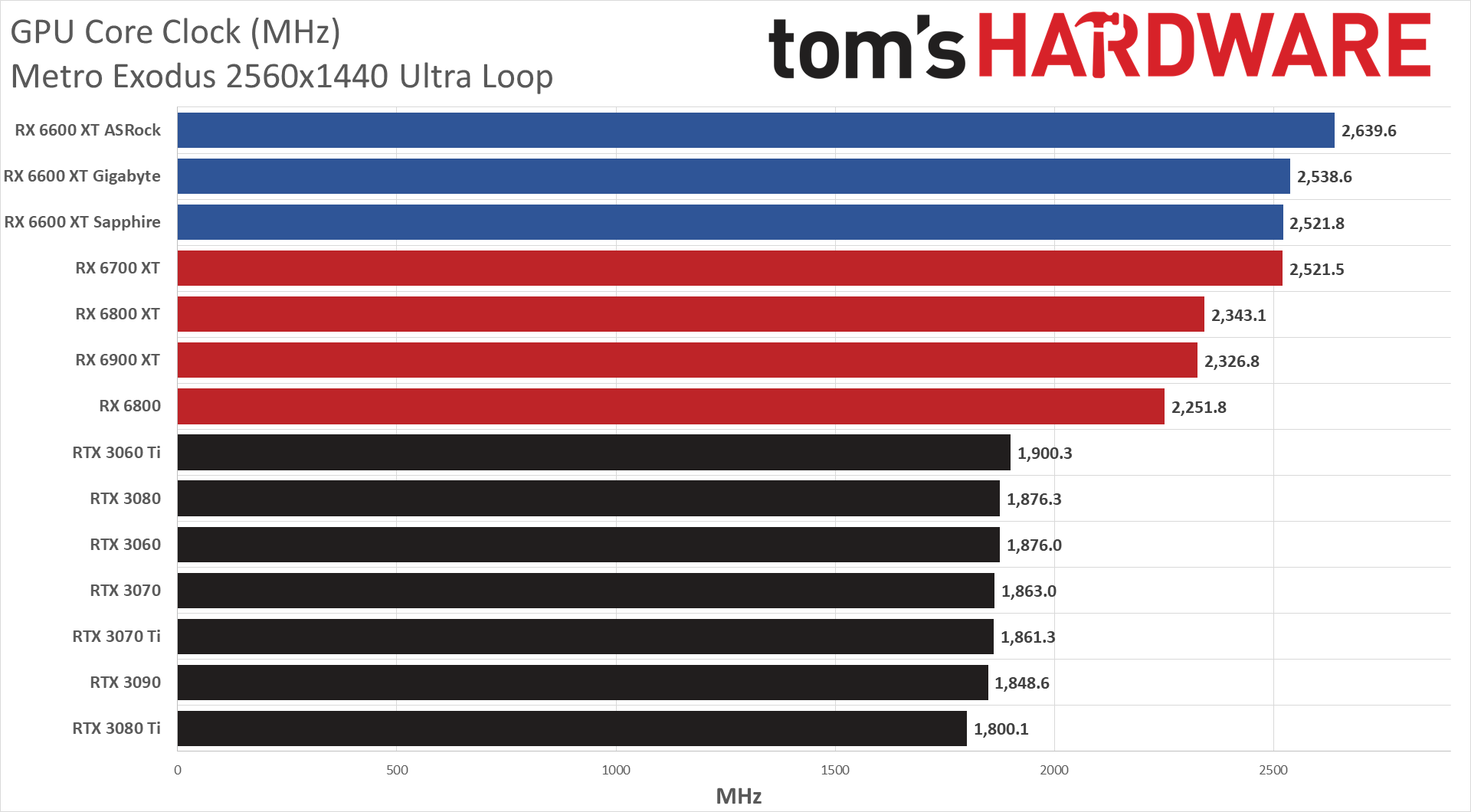

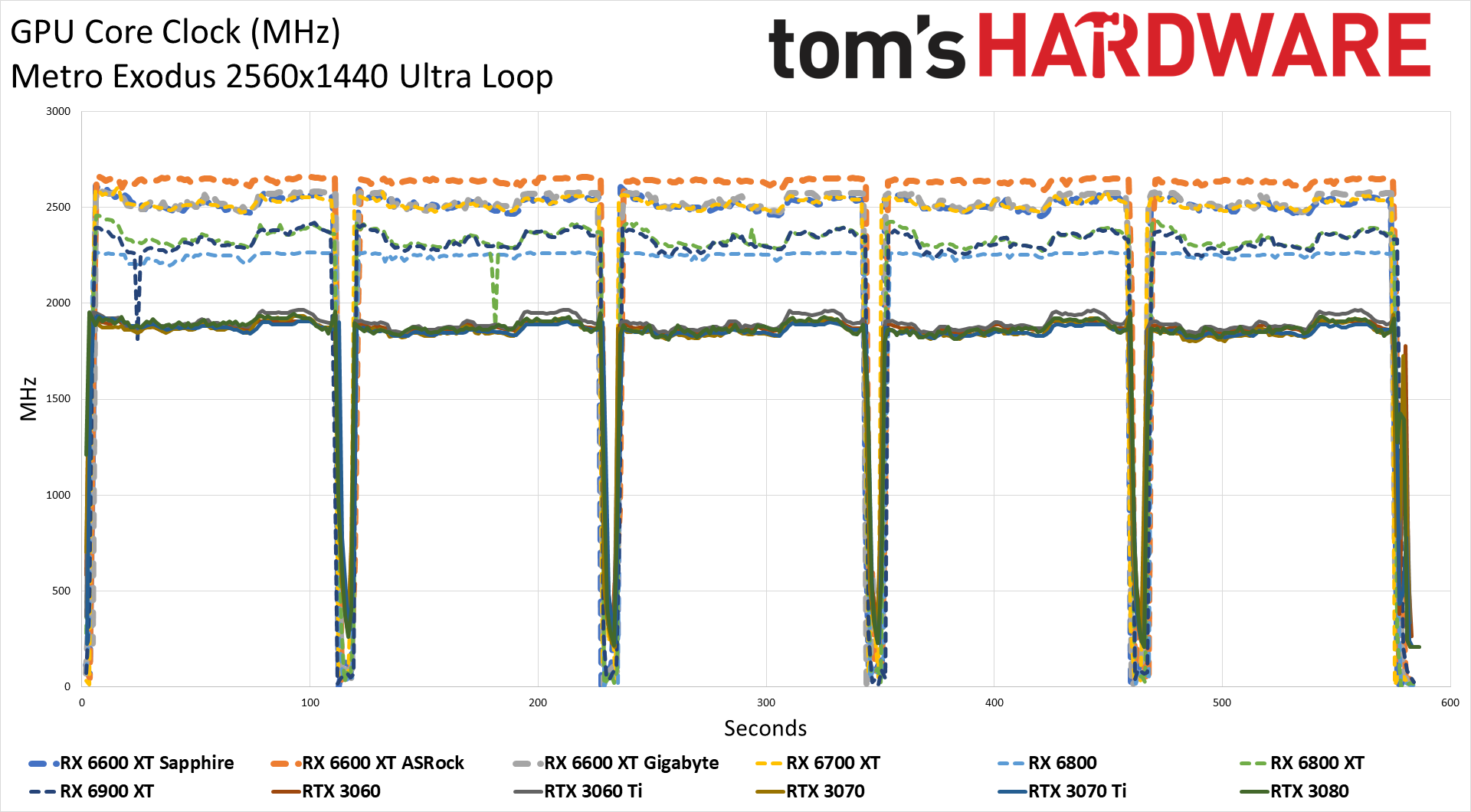

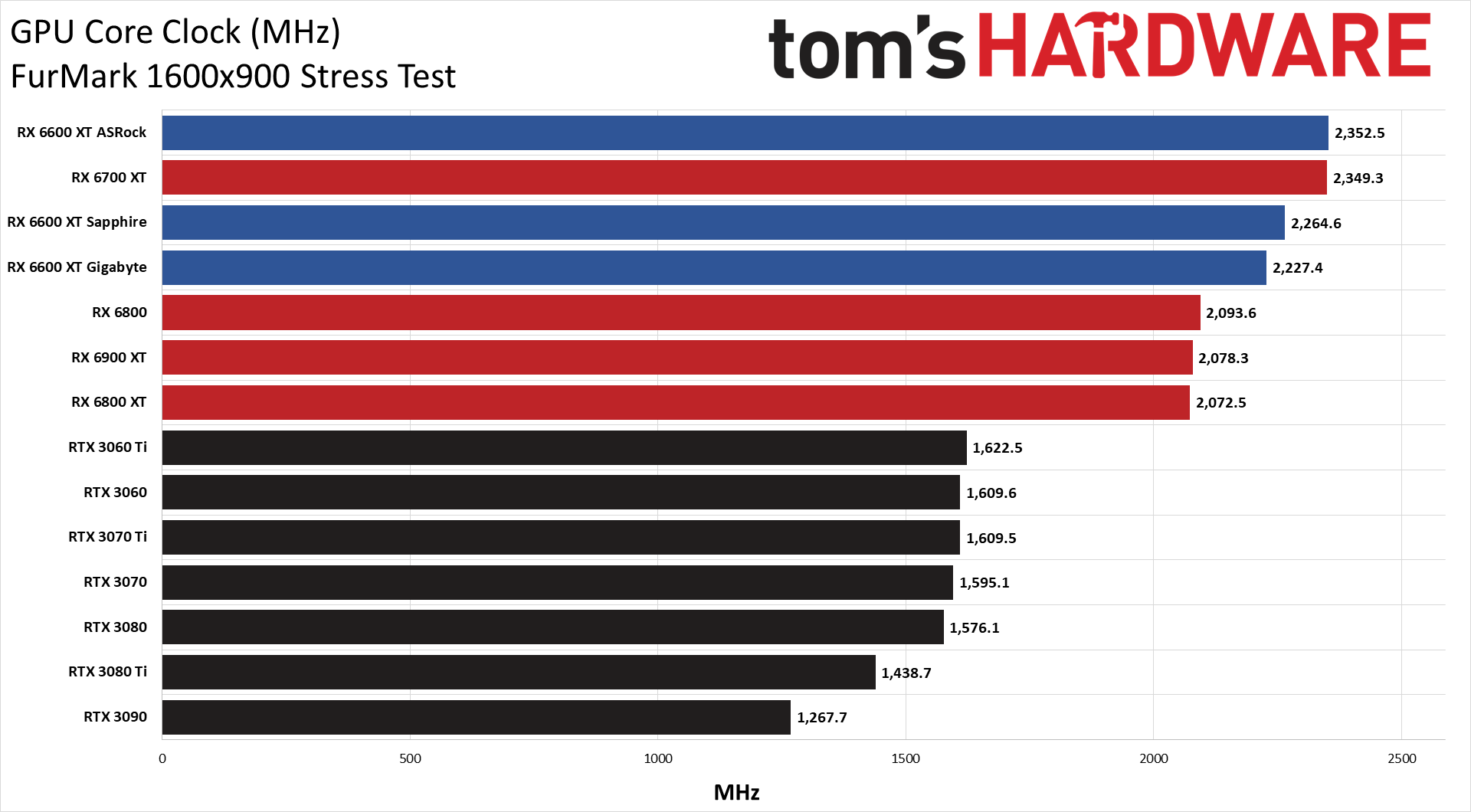

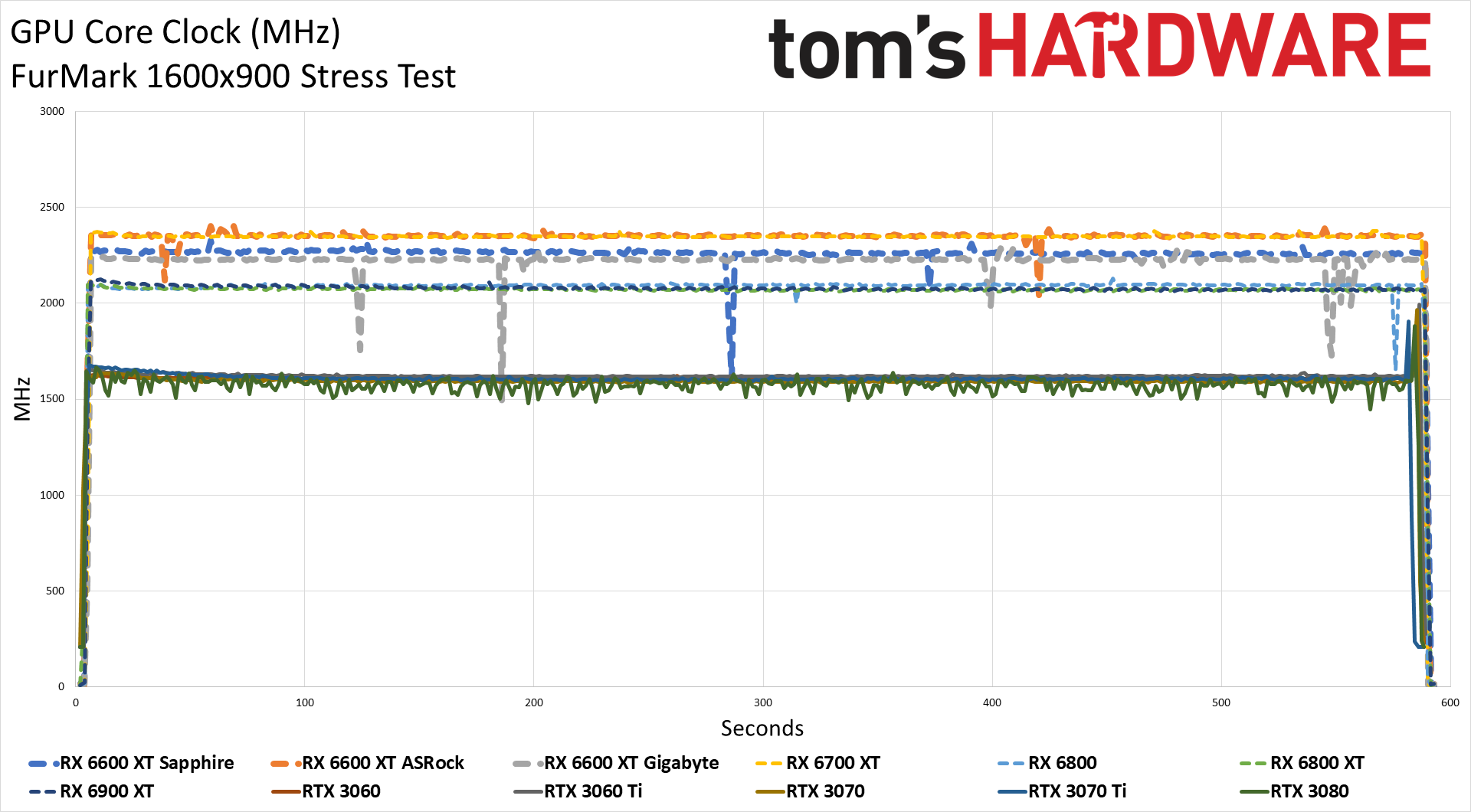

Average clock speeds on the Sapphire Pulse were 100MHz lower than on the Phantom in Metro, which was a bit surprising. Clocks were also about 90MHz lower in FurMark, though FurMark hits the power limits, and cards often behave quite differently in that sort of workload than in games. However, we noticed some oddities with Metro Exodus after our initial benchmarking, which may account for some of the differences here.

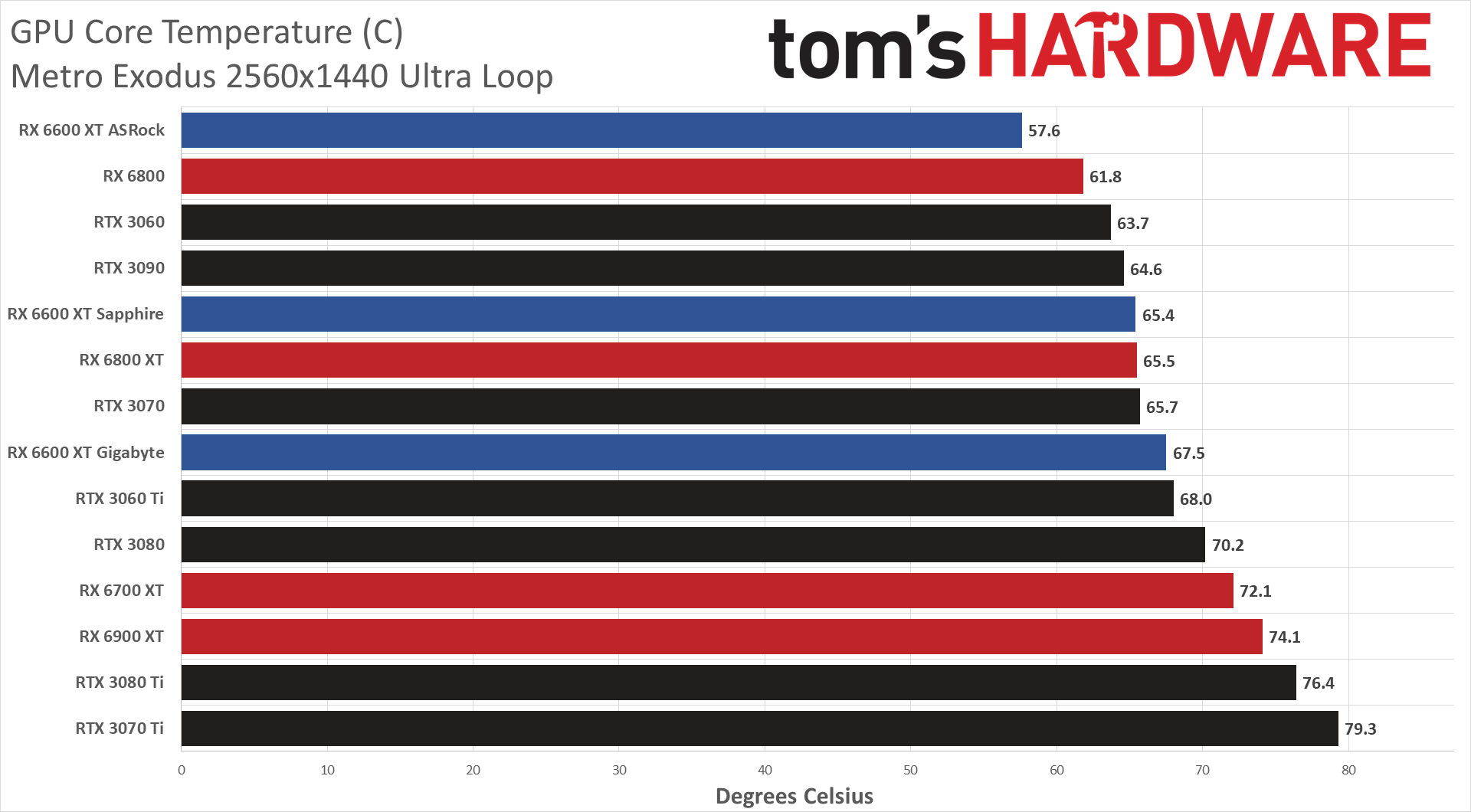

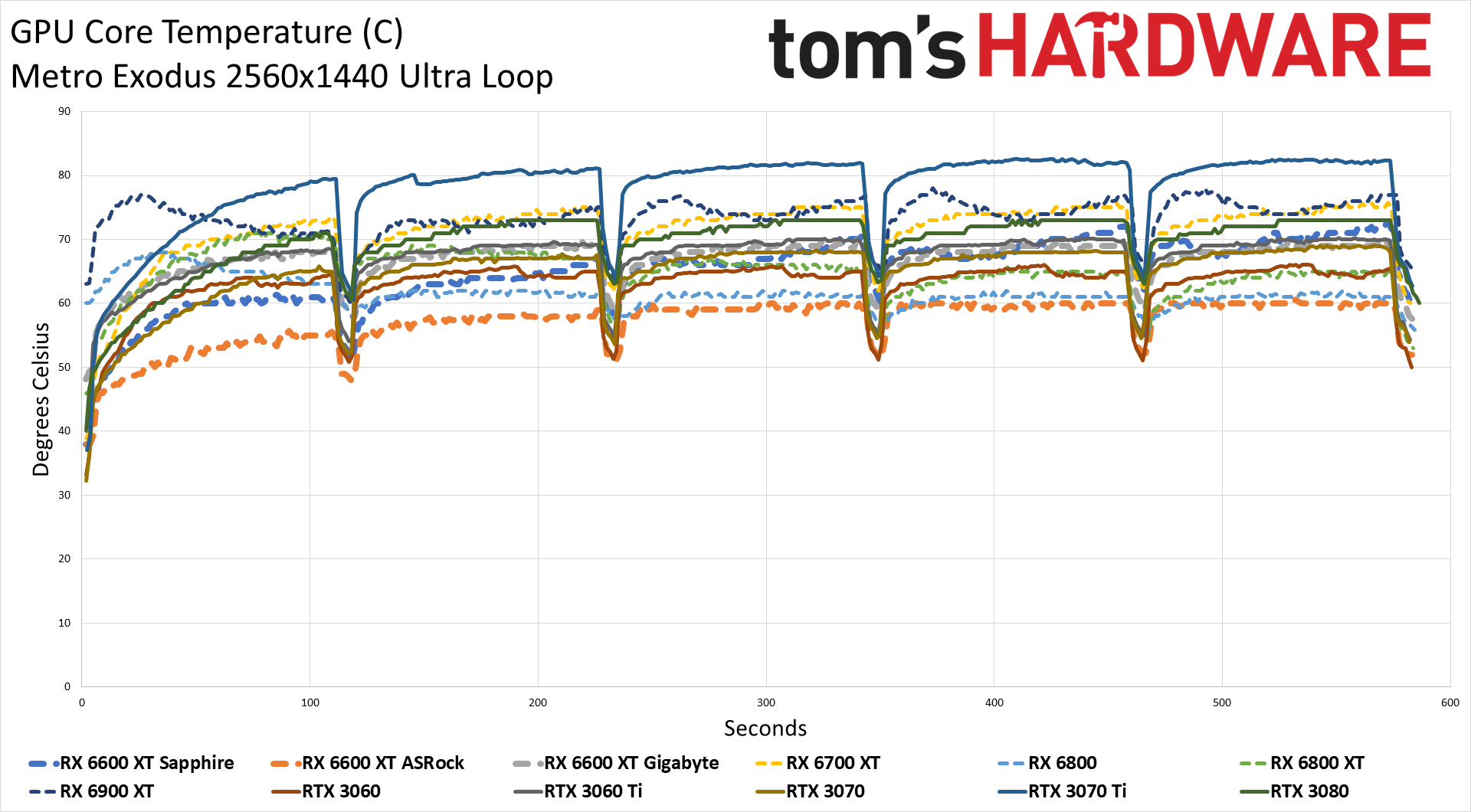

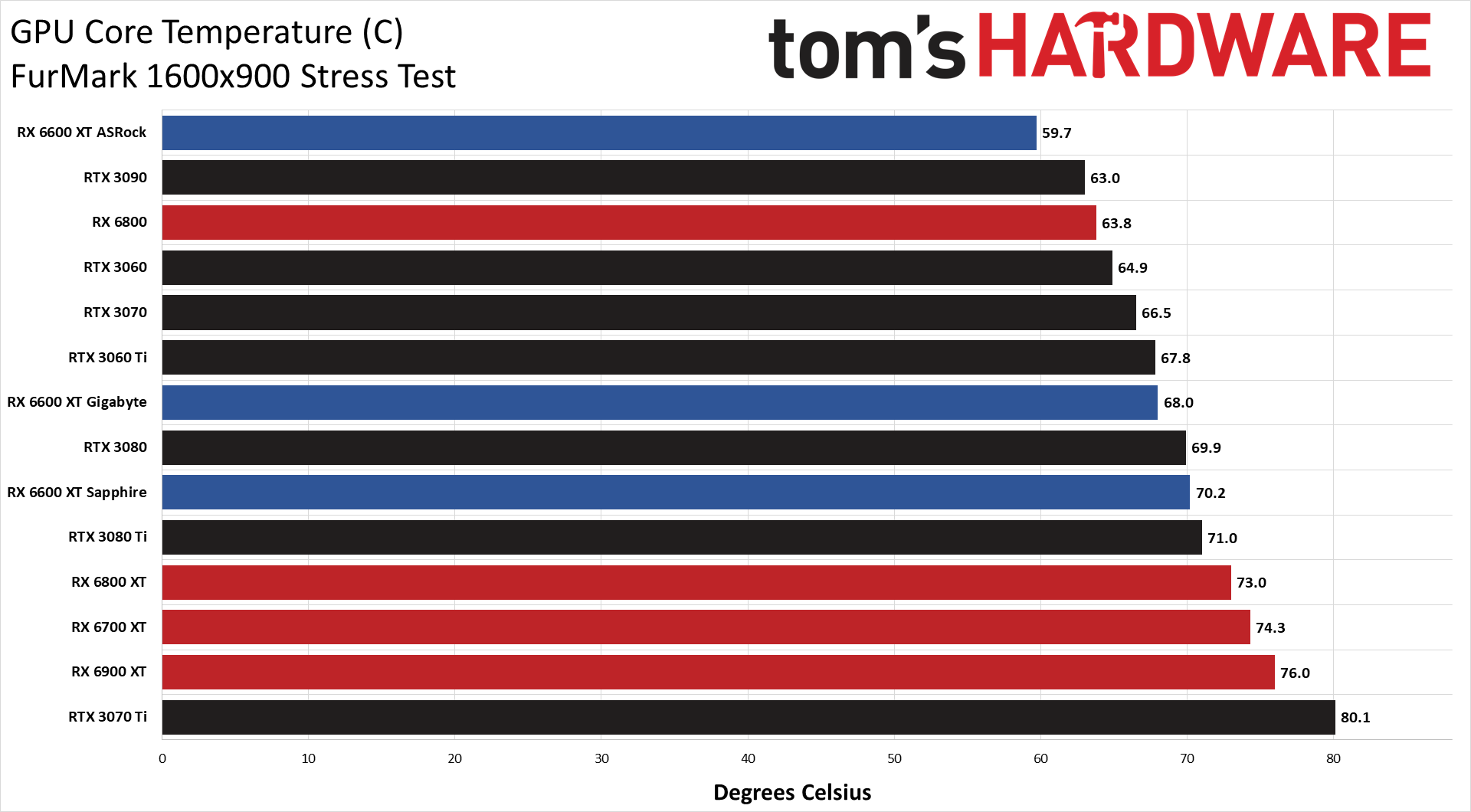

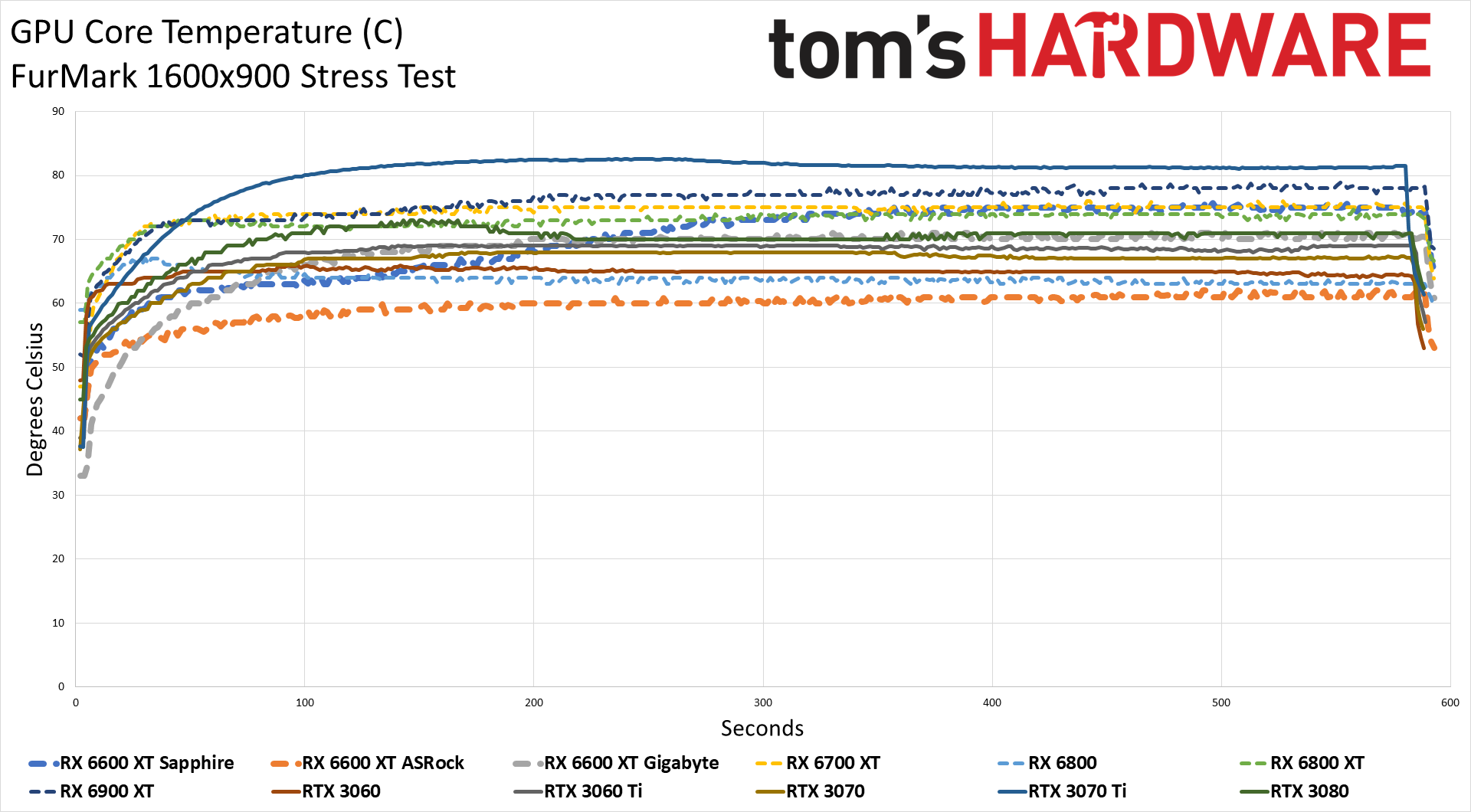

As far as temperatures go, the ASRock Phantom came out with a clear lead. It ran at higher clocks, drew more power, and still had temperatures 8–10C lower than the Sapphire Pulse and Gigabyte Eagle. But temperatures on their own don't tell the full story.

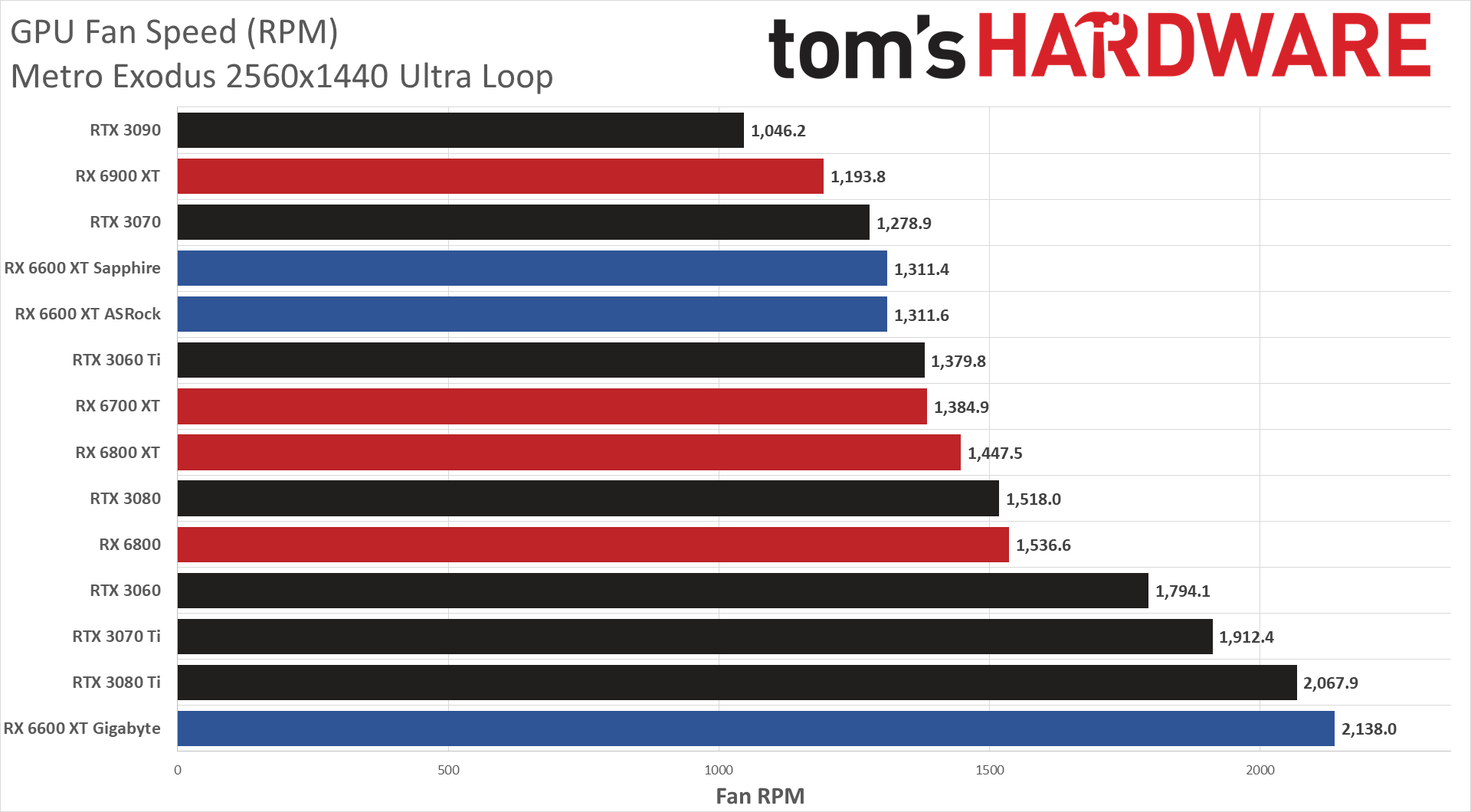

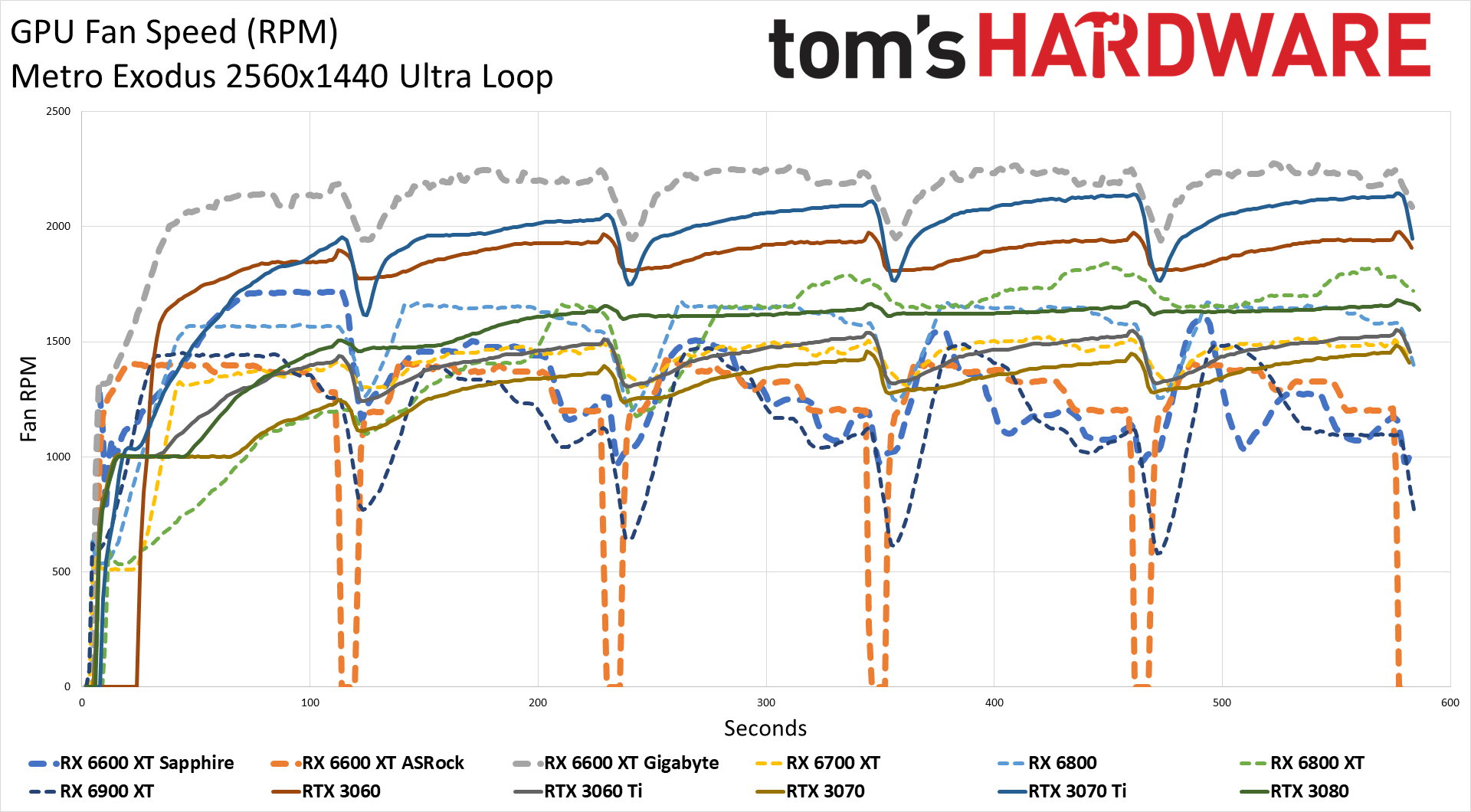

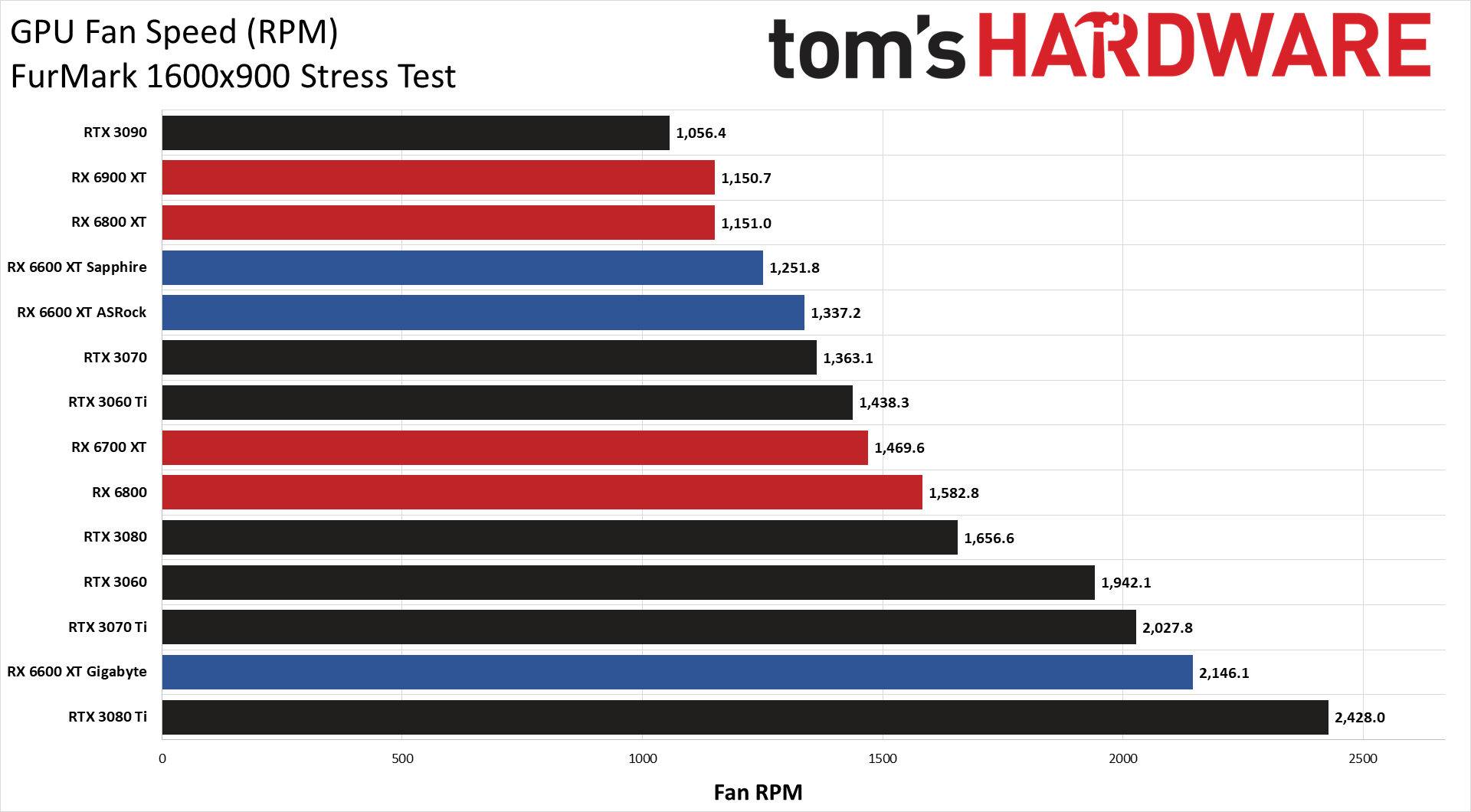

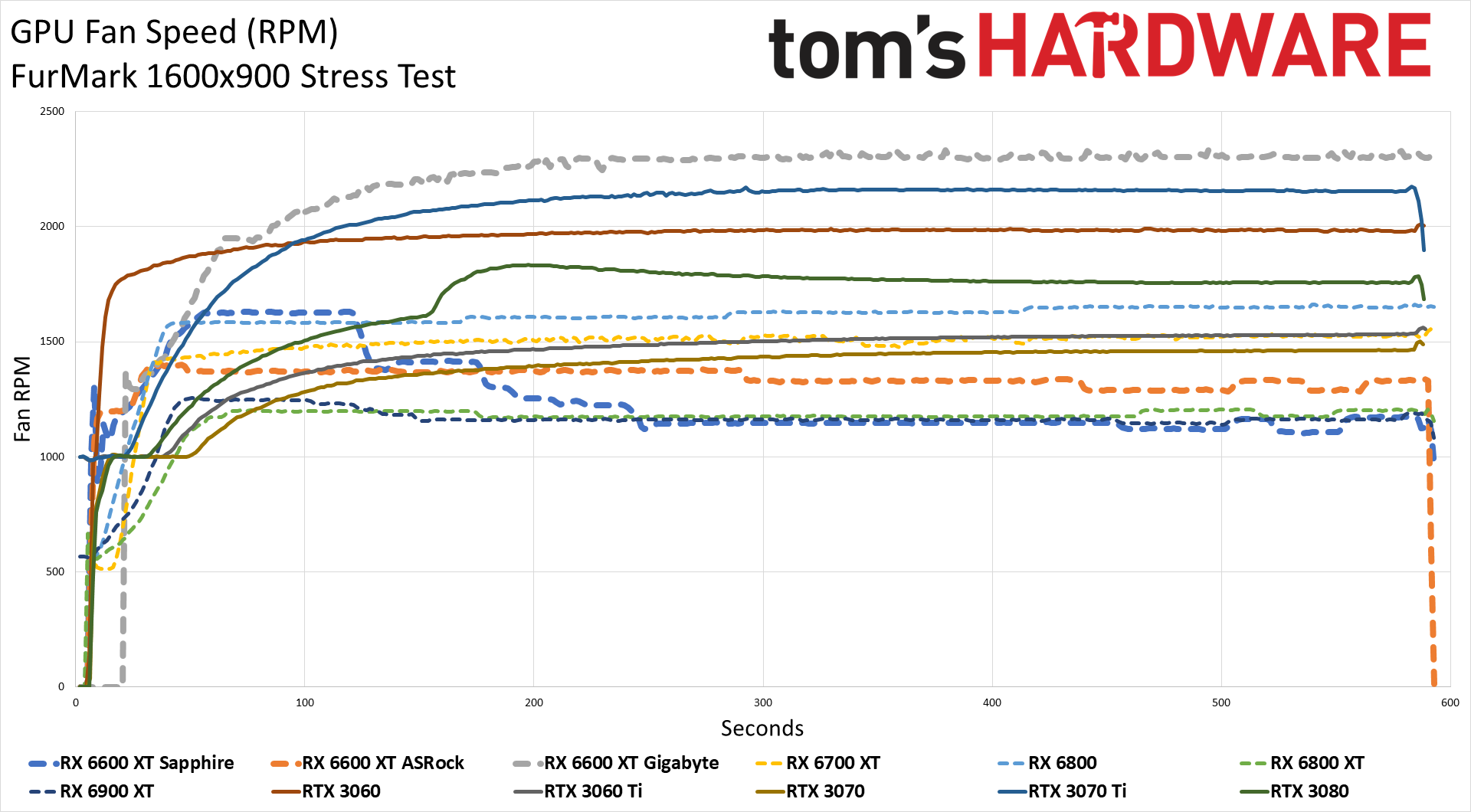

Higher fan speeds can improve temperatures at the cost of more noise. That isn't what happened with the Sapphire Pulse, whose fans ran at slightly lower RPMs than the ASRock Phantom's, and the Gigabyte card didn't fare as well. But even fan speeds don't tell you everything, as different fans have different acoustics.

We measured noise levels using an SPL meter at a distance of around 15cm, which helps isolate the GPU's noise from system and CPU fans. Ambient noise during testing was 33 dBA. Like most other modern GPUs, these cards stop their fans when the GPU temperature falls below about 55C. You can see how quickly that happened in the fan-speed-over-time chart, where the RX 6600 XT cards are the only ones that actually halt their fans between loops of the Metro benchmark. While gaming, the Sapphire Pulse produced only 37 dBA, tying the ASRock Phantom, so it not only runs cool but is also nearly silent. (The Gigabyte Eagle was noticeably louder, if you're wondering, at about 44 dBA.)

During gaming, the Pulse's fans spun at around 30%, which was all that was needed to keep temperatures in check. As a secondary test, we also set the fan speed to a static 75%, where the card generated 54.1 dB of noise, about 6 dB less than the Phantom's 60 dB. Admittedly, that's not as quiet as some of the other GPUs we've tested, but those mostly have larger fans and bigger heatsinks and cost quite a bit more than the Pulse.
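Decibel gaps are easier to judge as ratios. The short sketch below converts the differences quoted on this page, assuming the standard 20·log10 definition of sound pressure level and the common rule of thumb that +10 dB sounds roughly twice as loud; the function names are hypothetical, and the loudness figure is a heuristic rather than a measurement. The 37 and 44 dBA gaming readings also include the 33 dBA room ambient, so treat that comparison as rough.

```python
def pressure_ratio(delta_db: float) -> float:
    """Sound-pressure ratio for a level difference in dB (SPL uses 20*log10)."""
    return 10 ** (delta_db / 20)


def loudness_ratio(delta_db: float) -> float:
    """Approximate perceived-loudness ratio, using the rule of thumb that
    +10 dB sounds roughly twice as loud. A heuristic, not a measurement."""
    return 2 ** (delta_db / 10)


# (quieter, louder) readings quoted on this page, in dB/dBA at ~15cm
comparisons = {
    "Pulse vs Phantom, fans fixed at 75%": (54.1, 60.0),
    "Pulse vs Gigabyte Eagle, gaming": (37.0, 44.0),
}

for label, (quieter, louder) in comparisons.items():
    delta = louder - quieter
    print(
        f"{label}: {delta:.1f} dB -> "
        f"{pressure_ratio(delta):.2f}x sound pressure, "
        f"~{loudness_ratio(delta):.2f}x perceived loudness"
    )
```

By this estimate, the roughly 6 dB gap at a fixed 75% fan speed corresponds to nearly twice the sound pressure.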

Current page: Sapphire Radeon RX 6600 XT Pulse Power, Temps, Fan Speed, and Noise

Jarred Walton is a senior editor at Tom's Hardware focusing on everything GPU. He has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.

logainofhades: No bling and few extras isn't exactly a con, nor is its performance at higher resolutions, as it was marketed as a 1080p card.

-Fran-: One super important point about Sapphire cards: they have upsampling built into TRIXX. It may not be FSR or DLSS, but you can upscale ANY game you want through it using the GPU. I have no idea how it does it, but you can.

Other than that, this card looks clean and tidy. Not the best-looking Sapphire in history, as that one goes to the original RX480 Nitro+ IMO. What a gorgeous design it was. I wish they'd use it for everything and get rid of the fake-glossy plastic garbo they've been using as of late.

Regards.

JarredWaltonGPU:

> Yuka said: One super important point about Sapphire cards: they have upsampling built into TRIXX. It may not be FSR or DLSS, but you can upscale ANY game you want through it using the GPU. I have no idea how it does it, but you can.
>
> Other than that, this card looks clean and tidy. Not the best-looking Sapphire in history, as that one goes to the original RX480 Nitro+ IMO. What a gorgeous design it was. I wish they'd use it for everything and get rid of the fake-glossy plastic garbo they've been using as of late.
>
> Regards.

Wow! It's almost like you ... didn't read the review. LOL

I talk quite a bit about Trixx Boost and even ran benchmarks with it enabled at 1440p, FYI.

-Fran-:

> JarredWaltonGPU said: Wow! It's almost like you ... didn't read the review. LOL
>
> I talk quite a bit about Trixx Boost and even ran benchmarks with it enabled at 1440p, FYI.

I did read it; I must have omitted it from my mind :P

Apologies.

TheAlmightyProo:

> Yuka said: One super important point about Sapphire cards: they have upsampling built into TRIXX. It may not be FSR or DLSS, but you can upscale ANY game you want through it using the GPU. I have no idea how it does it, but you can.
>
> Other than that, this card looks clean and tidy. Not the best-looking Sapphire in history, as that one goes to the original RX480 Nitro+ IMO. What a gorgeous design it was. I wish they'd use it for everything and get rid of the fake-glossy plastic garbo they've been using as of late.
>
> Regards.

IIRC that RX 480 Sapphire Nitro (did they do this in 580 too?) is the one with all the little holes in it and otherwise straight lines, etc. I also seem to recall swappable fans, but could be wrong...

But yeah, it was a beaut, my fave design at the time, and I'd have so gone for one if I hadn't decided on a 1070 (Gigabyte Xtreme Gaming) as a safer bet for holding 2560x1080 longer before needing to drop to 1080p. That said, I've always liked Sapphires and eventually got one this year (6800XT Sapphire Nitro+ SE @ 3440x1440), and I'm absolutely not disappointed in that or in my first full AMD CPU in 16 years (5800X). Sure, it's not so great at RT, and FSR needs to catch up and catch on, but I have maybe 2-3 games out of 50 I'd play that'll make use of either, so no great loss until they become more refined and ubiquitous, imo. It runs like a dream, and cool too. Assuming AMD keeps up or overtakes the next gen but one, I'd be happy to buy Sapphire again.

TheAlmightyProo:

> JarredWaltonGPU said: Wow! It's almost like you ... didn't read the review. LOL
>
> I talk quite a bit about Trixx Boost and even ran benchmarks with it enabled at 1440p, FYI.

Trixx looks like a damn good app tbh. Having a Sapphire 6800XT Nitro+ SE, I could be using it but have neglected to... I dunno, maybe cos it's already good enough at 3440x1440?

However, I do have a good gaming UHD 120Hz TV (Samsung Q80T) waiting for gaming from the couch (after an upcoming house move), which might do well with a little boost going forward, as I'm not even thinking of upgrading for at least 3-5 years, and not until the first iterations of the 'big new things' have been refined somewhat.

So thanks for spending some time on that info and testing with it on. I might've ignored or forgotten it, but it's good to know it's there as a tried-and-tested option.

JarredWaltonGPU:

> TheAlmightyProo said: Trixx looks like a damn good app tbh. Having a Sapphire 6800XT Nitro+ SE, I could be using it but have neglected to... I dunno, maybe cos it's already good enough at 3440x1440?
>
> However, I do have a good gaming UHD 120Hz TV (Samsung Q80T) waiting for gaming from the couch (after an upcoming house move), which might do well with a little boost going forward, as I'm not even thinking of upgrading for at least 3-5 years, and not until the first iterations of the 'big new things' have been refined somewhat.
>
> So thanks for spending some time on that info and testing with it on. I might've ignored or forgotten it, but it's good to know it's there as a tried-and-tested option.

FWIW, you can just create a custom resolution in the AMD or Nvidia control panel as an alternative if you don't have a Sapphire card. It's difficult to judge image quality, and in some cases I think it does make a difference. However, I'm not quite sure how Trixx Boost outputs a different resolution via RIS. If you do a screen capture, it's still at the Trixx Boost resolution, as though it's simply rendering at a lower resolution and using the display scaler to stretch the output. Potentially it happens internally in the card's output, so that 85% scaling gets bumped up to native for the DisplayPort signal, but then how does that use RIS, since that would be a hardware/firmware feature?

Bottom line: rendering fewer pixels requires less GPU effort. How you stretch those to the desired output is the question. DLSS and FSR definitely scale to the desired resolution, so Windows+PrtScrn captures images at the native resolution. Trixx Boost doesn't seem to function in the same way. ¯\(ツ)/¯
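The pixel math behind "rendering fewer pixels" is easy to check. As a minimal sketch, assuming the 85% Trixx Boost setting applies to each axis of a 2560x1440 display (the helper names below are hypothetical and not part of Trixx or any AMD API), the GPU renders only about 72% as many pixels as at native resolution:

```python
def scaled_resolution(width: int, height: int, scale: float) -> tuple[int, int]:
    """Render resolution when `scale` is applied to each axis (hypothetical helper)."""
    return round(width * scale), round(height * scale)


def pixel_fraction(width: int, height: int, scale: float) -> float:
    """Fraction of native pixels actually rendered at the scaled resolution."""
    w, h = scaled_resolution(width, height, scale)
    return (w * h) / (width * height)


# Assumption: 85% per-axis scaling of a 1440p display
native_w, native_h = 2560, 1440
w, h = scaled_resolution(native_w, native_h, 0.85)
print(f"Render resolution: {w}x{h}")
print(f"Pixels rendered: {pixel_fraction(native_w, native_h, 0.85):.2%} of native")
```

Because the scale factor applies to both axes, the pixel count falls with its square (0.85² ≈ 0.72), so a modest-sounding 85% setting cuts the rendering workload by roughly a quarter.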

InvalidError:

> logainofhades said: No bling and few extras isn't exactly a con, nor is its performance at higher resolutions, as it was marketed as a 1080p card.

Performance at higher resolutions is definitely a con since, in a sane GPU market, nobody would be willing to pay anywhere near $400 for a "1080p" gaming GPU with a gimped PCIe 4.0 x8 interface and a 128-bit VRAM bus. This is the sort of penny-pinching you'd only expect to see on sub-$150 GPUs. On Nvidia's side, you don't see the PCIe interface get cut down until you get into sub-$100 SKUs like the GT1030.

As some techtubers put it, all GPUs are turd sandwiches. The 6600XT isn't good for the price; it's just the least-worst turd sandwich at the moment if you absolutely must buy a GPU now.
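The bandwidth figures behind the PCIe 4.0 x8 and 128-bit memory bus complaint are simple to compute. The sketch below assumes PCIe 4.0's 16 GT/s per lane with 128b/130b encoding, and 16 Gbps GDDR6 on the 128-bit bus (the RX 6600 XT's reference memory speed, which isn't stated on this page); the function names are hypothetical, and the results are theoretical peaks, not measured throughput.

```python
def pcie_gb_per_s(gt_per_s: float, lanes: int) -> float:
    """Theoretical one-direction PCIe bandwidth in GB/s, assuming the
    128b/130b line encoding used by PCIe 3.0 and later."""
    return gt_per_s * lanes * (128 / 130) / 8


def vram_gb_per_s(gbps_per_pin: float, bus_width_bits: int) -> float:
    """Theoretical peak memory bandwidth in GB/s: per-pin rate times bus width, in bytes."""
    return gbps_per_pin * bus_width_bits / 8


# Assumption: 16 GT/s PCIe 4.0 lanes and 16 Gbps GDDR6
print(f"PCIe 4.0 x8:  {pcie_gb_per_s(16, 8):.2f} GB/s per direction")
print(f"PCIe 4.0 x16: {pcie_gb_per_s(16, 16):.2f} GB/s per direction")
print(f"128-bit GDDR6 at 16 Gbps: {vram_gb_per_s(16, 128):.0f} GB/s")
```

An x8 link offers half the host-to-GPU bandwidth of an x16 link of the same generation; how much that matters in games depends on how often the card has to pull data across the bus, which these peak numbers alone can't show.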

logainofhades: Price aside, the card was advertised as a 1080p card, and the 6600 XT does 1080p quite well. I don't understand the gimped interface either, but AMD promised 1080p and delivered. Prices are stupid and will be for quite some time, as many are saying it will be 2023 before this chip shortage ends.

InvalidError:

> logainofhades said: Price aside, the card was advertised as a 1080p card, and the 6600xt does 1080p quite well.

$400 GPUs have been doing "1080p quite well" with contemporary titles for over a decade. I personally find it insulting that AMD would brag about that in 2021.