Benchmarking GeForce GTX Titan 6 GB: Fast, Quiet, Consistent

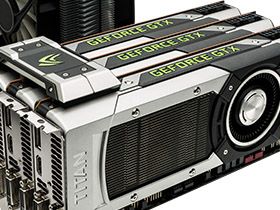

We've already covered the features of Nvidia's GeForce GTX Titan, the $1,000 GK110-powered beast set to exist alongside GeForce GTX 690. Now it's time to benchmark the board in one-, two-, and three-way SLI. Is it better than four GK104s working together?

GeForce GTX Titan: Putting Rarified Rubber To The Road

Two days ago, we gave you our first look at a beastly single-GPU graphics board in Nvidia GeForce GTX Titan 6 GB: GK110 On A Gaming Card. For some reason, the company wanted to split discussion of Titan’s specs and its performance across two days. That’s not the direction I would have gone (had I been asked for my opinion, that is). But after knocking out several thousand words on the first piece and benchmarking for a week straight in the background, cutting our coverage in half didn’t bother me as much.

If you missed the first piece, though, pop open a new tab and check it out; that story lays the foundation for the numbers you’re going to see today.

I’m not going to waste any time rehashing the background on GeForce GTX Titan. In short, we ended up with a trio of the GK110-based boards. One was peeled off to compare against GeForce GTX 690, 680, and Radeon HD 7970 GHz Edition. Then, we doubled- and tripled-up the Titans to see how they’d fare against GeForce GTX 690s in four-way SLI. Of course, compute was important to revisit, so I spent some time digging into that before measuring power consumption, noise, and temperatures.

Let’s jump right into the system we used for benchmarking, the tests we ran, and the way we’re reporting our results, since it differs from what you’ve seen us do in the past.

| Test Hardware | |
|---|---|
| Processors | Intel Core i7-3970X (Sandy Bridge-E) 3.5 GHz at 4.5 GHz (45 * 100 MHz), LGA 2011, 15 MB Shared L3, Hyper-Threading enabled, Power-savings enabled |
| Motherboard | Intel DX79SR (LGA 2011) X79 Express Chipset, BIOS 0553 |
| Memory | G.Skill 16 GB (4 x 4 GB) DDR3-1600, F3-12800CL9Q2-32GBZL @ 9-9-9-24 and 1.5 V |
| Hard Drive | Crucial m4 SSD 256 GB SATA 6Gb/s |
| Graphics | Nvidia GeForce GTX Titan 6 GB |
| | Nvidia GeForce GTX 690 4 GB |
| | Nvidia GeForce GTX 680 2 GB |
| | AMD Radeon HD 7970 GHz Edition 3 GB |
| Power Supply | Cooler Master UCP-1000 W |
| **System Software And Drivers** | |
| Operating System | Windows 8 Professional 64-bit |
| DirectX | DirectX 11 |
| Graphics Driver | Nvidia GeForce Release 314.09 (Beta) For GTX Titan |
| | Nvidia GeForce Release 314.07 For GTX 680 and 690 |
| | AMD Catalyst 13.2 (Beta 5) For Radeon HD 7970 GHz Edition |

We ran into a snag right away when the Gigabyte X79S-UP5-WiFi motherboard we use as our test bench proved incompatible with Titan. Neither Nvidia nor Gigabyte was able to explain why the card wouldn’t output a video signal, though the system seemed to boot otherwise.

Switching to Intel’s DX79SR solved the issue. We then overclocked a Core i7-3970X to 4.5 GHz, dropped in 32 GB of DDR3-1600 from G.Skill, and installed all of our apps on a 256 GB Crucial m4 to avoid bottlenecks wherever possible.

One thing to keep in mind about GPU Boost 2.0: because the technology is now temperature-based, it's even more sensitive to environmental influence. We monitored the feature's behavior across a number of games and, left untouched, core clock rates tended to stick around 993 MHz. A higher allowable thermal ceiling easily let them approach 1.1 GHz. As you might imagine, benchmarks run on a cold GPU peaking at 1.1 GHz can differ significantly from those run on a hot chip in a warm room. We made sure to maintain a constant 23 degrees Celsius in our lab, only recording benchmark results after a warm-up run.


| Benchmarks And Settings | |
|---|---|
| Battlefield 3 | Ultra Quality Preset, V-Sync off, 1920x1080 / 2560x1600 / 5760x1200, DirectX 11, Going Hunting, 90-Second playback, Fraps |
| Far Cry 3 | Ultra Quality Preset, DirectX 11, V-Sync off, 1920x1080 / 2560x1600 / 5760x1200, Custom Run-Through, 50-Second playback, Fraps |
| Borderlands 2 | Highest-Quality Settings, PhysX Low, 16x Anisotropic Filtering, 1920x1080 / 2560x1600 / 5760x1200, Custom Run-Through, Fraps |
| Hitman: Absolution | Ultra Quality Preset, 2x MSAA, 1920x1080 / 2560x1600 / 5760x1200, Built-In Benchmark Sequence |
| The Elder Scrolls V: Skyrim | Ultra Quality Preset, FXAA Enabled, 1920x1080 / 2560x1600 / 5760x1200, Custom Run-Through, 25-Second playback, Fraps |
| 3DMark | Fire Strike Benchmark |
| World of Warcraft: Mists of Pandaria | Ultra Quality Settings, 8x MSAA, Mists of Pandaria Flight Points, 1920x1200 / 2560x1600 / 5760x1200, Fraps, DirectX 11 Rendering, x64 Client |
| SiSoftware Sandra 2013 Professional | Sandra Tech Support (Engineer) 2013.SP1, GP Processing, Cryptography, Video Shader, and Video Bandwidth Modules |
| Corel WinZip 17 | 2.1 GB Folder, OpenCL Vs. CPU Compression |
| LuxMark 2.0 | 64-bit Binary, Version 2.0, Room Scene |
| Adobe Photoshop CS6 | Scripted Filter Test, OpenCL Enabled, 16 MB TIF |

Using one Dell 3007WFP and three Dell U2410 displays, we were able to benchmark our suite at 1920x1080, 2560x1600, and 5760x1200.

In the past, we would have presented a number of average frame rates for each game, at different resolutions, with and without anti-aliasing. Average FPS remains a good measurement to present, particularly for the ease with which it conveys relative performance. But we know it still leaves out plenty of valuable information.

Last week, Don introduced a three-part approach to breaking down graphics performance in Gaming Shoot-Out: 18 CPUs And APUs Under $200, Benchmarked. The first component is average frame rate, so our old analysis is still covered. Second, we have frame rate over time, plotted as a line graph. This shows you how high and low the frame rate goes during our benchmark run, and it illustrates how long a given configuration spends in comfortable (or unplayable) territory. The third chart measures the lag between consecutive frames. When this number is high, you’re more likely to “feel” jittery gameplay, even if your average frame rate is solid. So, we’re calculating the average time difference, the 75th percentile (the lag that consecutive frames stay at or below 75 percent of the time), and the 95th percentile (the same threshold, covering 95 percent of frames).

All three charts complement each other, conveying the number of frames each card is able to output each second, and how consistently those frames show up. The good news is that we think this combination of data tells a compelling story. Unfortunately, instead of one chart for every resolution we test in a game, we now have three charts per resolution per game, which is a lot to wade through. As we move through today’s story, we’ll do our best to explain what all of the information means.
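To make the three metrics concrete, here is a minimal Python sketch of how they could be derived from a frame-time log. It assumes a list of cumulative frame timestamps in milliseconds (similar in spirit to the frame-time logs Fraps can record), assumes the "lag" tracked by the third chart is the absolute change in frame time from one frame to the next, and uses a simple nearest-rank percentile. The function names and the sample log are hypothetical, and this is not necessarily the exact method behind our charts.

```python
import math


def frame_times_ms(timestamps_ms):
    """Time between consecutive frames, from cumulative timestamps in ms."""
    return [later - earlier for earlier, later in zip(timestamps_ms, timestamps_ms[1:])]


def average_fps(timestamps_ms):
    """Chart 1: frames rendered divided by elapsed seconds."""
    elapsed_s = (timestamps_ms[-1] - timestamps_ms[0]) / 1000
    return (len(timestamps_ms) - 1) / elapsed_s


def fps_per_second(timestamps_ms):
    """Chart 2: frame rate over time, counting frames in each whole second of the run."""
    start = timestamps_ms[0]
    counts = {}
    for t in timestamps_ms:
        second = int((t - start) // 1000)
        counts[second] = counts.get(second, 0) + 1
    # Seconds in which no frame landed are reported as 0 FPS.
    return [counts.get(s, 0) for s in range(max(counts) + 1)]


def nearest_rank_percentile(values, pct):
    """Smallest sample such that at least pct percent of samples are at or below it."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def frame_time_variance(timestamps_ms):
    """Chart 3: average, 75th, and 95th percentile change in frame time (ms)."""
    times = frame_times_ms(timestamps_ms)
    # Assumption: "lag" = absolute difference between consecutive frame times.
    diffs = [abs(b - a) for a, b in zip(times, times[1:])]
    return {
        "average": sum(diffs) / len(diffs),
        "p75": nearest_rank_percentile(diffs, 75),
        "p95": nearest_rank_percentile(diffs, 95),
    }


if __name__ == "__main__":
    # Hypothetical run: steady ~16.7 ms frames with a single 34 ms hitch.
    log = [0, 16, 33, 50, 66, 100, 116, 133, 150, 166]
    print(f"Average FPS: {average_fps(log):.1f}")
    print("FPS per second:", fps_per_second(log))
    print("Frame time variance (ms):", frame_time_variance(log))
```

In this made-up log, the single 34 ms frame leaves the 75th percentile at 1 ms but pushes the 95th percentile to 18 ms, which is the kind of hitch an average frame rate alone tends to hide.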


Novuake: Pure marketing. At that price, Nvidia is just pulling a huge stunt... Still an insane card.

whyso: If you use an actual 7970 GE card that is sold on Newegg, etc., instead of the reference 7970 GE card that AMD gave you (which you can't find anywhere), thermals and acoustics are different.

cknobman: Seems like Titan is a flop (at least at the $1000 price point).

This card would only be compelling if offered in the ~$700 range.

As for compute? LOL, looks like the idea of this card being a compute monster goes right out the window. Titan doesn't even compete that well with a 7970 costing less than half as much.

downhill911: If Titan cost no more than $800, it would be a really nice card to have. Since it doesn't, I call it a fail card, or a hype card. Even my GTX 690 makes more sense, and now you can get them for a really good price on eBay.

spookyman: Well, I am glad I bought the GTX 690.

Titan is nice, but not impressive enough to go buy.

hero1: (quoting jimbaladin) "For $1000 that card sheath better be made out of platinum."

Tell me about it! I think Nvidia shot itself in the foot with the pricing scheme. I want AMD to come out with better drivers than the current ones to put the 7970 at least 20% ahead of the 680 and take all the sales from the greedy green. Sure, it performs way better, but that price is insane. I think $700-800 is the sweet spot, but again, it is a rare, powerful beast and very consistent, which is hard to find atm.

raxman: "We did bring these issues up with Nvidia, and were told that they all stem from its driver. Fortunately, that means we should see fixes soon." I suspect their fix will be "Use CUDA".

Nvidia has really dropped the ball on OpenCL. They don't support OpenCL 1.2, and they make it difficult to find all their OpenCL examples; their link for OpenCL is not easy to find. However, their OpenCL 1.1 driver is quite good for Fermi and for the 680 and 690, despite what people say. But if the Titan has trouble, it looks like they will be giving up on the driver now as well, or purposely crippling it (I can't imagine they did not think to test some OpenCL benchmarks, which every review site uses). Nvidia no longer cares about OpenCL users on Nvidia hardware like myself. I wish there were more influential people like Linus Torvalds telling Nvidia where to go.
