Pushing Intel Optane Potential: RAID Performance Explored

Performance Testing

Comparison Products

We used a handful of the fastest consumer and prosumer NVMe SSDs available to compare to the Optane RAID 0 array. Sadly, we don't have any pairs of NVMe SSDs to test their RAID performance. These products would provide increased sequential bandwidth in RAID, but we're really looking for increased low queue depth (QD) random performance because it relates directly to a better user experience. SSDs in RAID 0 on the Intel PCH don't improve random performance at low queue depths very much. We are running RAID 0 for increased capacity instead of using it to boost performance.

The Intel PCH has a hard bandwidth limit to the CPU. The PCH chip links to the CPU via PCI Express, but several devices share the total available bandwidth. We've recorded up to 3,500 MB/s from three M.2 devices, and that seems to be the usable limit when the other devices connected to the PCH (USB, SATA, and so on) aren't consuming much bandwidth.
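As a rough sanity check on that ceiling, the sketch below estimates the theoretical bandwidth of the PCH-to-CPU link. It assumes the link behaves like four PCIe 3.0 lanes (8 GT/s per lane with 128b/130b encoding), which is how DMI 3.0 is usually described; the text above only says the link is PCI Express, so the lane count and generation are assumptions, and the function name is illustrative.

```python
def pcie_link_bandwidth_mb_s(lanes, gt_per_s=8.0, encoding=128 / 130):
    """Theoretical one-direction payload bandwidth in decimal MB/s.

    Defaults assume PCIe 3.0 signaling (8 GT/s, 128b/130b encoding).
    Protocol overhead (packet headers, flow control) is NOT modeled.
    """
    bits_per_s = lanes * gt_per_s * 1e9 * encoding
    return bits_per_s / 8 / 1e6


# Assumption: the PCH uplink is equivalent to a PCIe 3.0 x4 link.
theoretical = pcie_link_bandwidth_mb_s(lanes=4)
measured = 3500.0  # MB/s, the figure recorded from three M.2 devices
efficiency = measured / theoretical

print(f"theoretical: {theoretical:.0f} MB/s")
print(f"measured:    {measured:.0f} MB/s ({efficiency:.0%} of theoretical)")
```

Under these assumptions the raw link tops out a little under 4,000 MB/s, so a measured 3,500 MB/s is plausible as the practical limit once packet overhead is taken into account.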

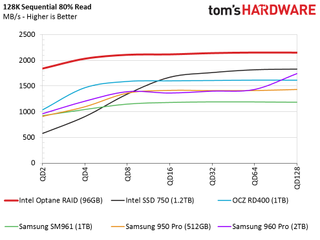

Sequential Read Performance

To read about our storage tests in depth, please check out How We Test HDDs And SSDs. We cover four-corner testing on page six of our How We Test guide.

The Optane RAID 0 array pegs the PCH bus's available bandwidth right from the get-go. The array delivers a staggering 3,500 MB/s of sequential read performance from QD1 to QD128!

Sequential Write Performance

Intel limited Optane Memory's sequential write performance to just 290 MB/s. Intel designed the drives to accelerate random performance, and with limited sequential write speeds, it takes users longer to reach the endurance limits. We recently pushed an Intel 600p to its endurance limits, and the drive transitioned into a read-only state before it would lose or corrupt data. We suspect the Optane Memory SSD would do the same at, or near, its 182.5TB write endurance cap.
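To put the endurance point in numbers, here is a small back-of-the-envelope calculation of how long one drive would need to write continuously to reach its cap. It uses only the 290 MB/s and 182.5TB figures quoted above and assumes decimal units (1 TB = 1,000,000 MB). The 2,000 MB/s comparison speed is purely hypothetical, chosen to show the effect of a faster write rate.

```python
ENDURANCE_TB = 182.5   # write endurance cap quoted above (decimal TB)
WRITE_MB_S = 290.0     # sequential write limit quoted above (MB/s)
SECONDS_PER_DAY = 86_400


def days_to_endurance(endurance_tb, write_mb_s):
    """Days of nonstop sequential writing needed to hit the endurance cap."""
    total_mb = endurance_tb * 1_000_000  # assumes 1 TB = 1,000,000 MB
    return total_mb / write_mb_s / SECONDS_PER_DAY


days_actual = days_to_endurance(ENDURANCE_TB, WRITE_MB_S)
# Hypothetical: the same endurance cap with a 2,000 MB/s write speed.
days_hypothetical = days_to_endurance(ENDURANCE_TB, 2000.0)

print(f"at {WRITE_MB_S:.0f} MB/s: {days_actual:.1f} days of continuous writes")
print(f"at 2000 MB/s (hypothetical): {days_hypothetical:.1f} days")
```

Even at the capped speed, about a week of nonstop writing would exhaust the rated endurance, so a faster write path would shorten that window considerably.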

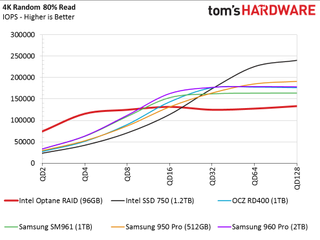

Random Read Performance

We're all here to see random read performance at low queue depths and mixed random performance. Those are the two areas that directly relate to application and multitasking performance. At QD1 and QD2, the Optane RAID 0 array provides roughly a 3x improvement over the NAND-based NVMe SSDs. This is in line with the performance you would see with a single Optane SSD in a cache configuration when the algorithms place all the data on the Optane device. Unfortunately, caching techniques only keep a portion of the data on the device. With this array, all the data is guaranteed to come directly from the Optane Memory SSDs.

Random Write Performance

Intel neutered Optane Memory's random write performance, and that hurts RAID 0 performance. The best we could muster at QD1 was around 30,000 IOPS. That's the equivalent of a good consumer SATA MLC SSD. The real question is whether that is enough to maintain a high level of overall system performance. Random data writes come to the disk system in bursts, so we would like to see lower latency. However, the score is somewhat misleading--30,000 IOPS at QD1 is still very fast.
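To make the QD1 figure more concrete, the sketch below converts an IOPS number into the mean per-request latency it implies, and into throughput. At QD1 only one request is in flight, so the mean latency is simply the inverse of IOPS. The 4 KiB block size is an assumption (a common random-I/O test size, not stated above), and the helper names are illustrative.

```python
def mean_latency_us(iops, queue_depth=1):
    """Mean completion latency in microseconds implied by an IOPS figure."""
    return queue_depth / iops * 1e6


def iops_to_mb_s(iops, block_bytes=4096):
    """Throughput in decimal MB/s; the 4 KiB default block size is assumed."""
    return iops * block_bytes / 1e6


latency = mean_latency_us(30_000)    # QD1 random write result quoted above
bandwidth = iops_to_mb_s(30_000)

print(f"mean latency at QD1: {latency:.1f} us")
print(f"throughput at 4 KiB: {bandwidth:.2f} MB/s")
```

Roughly 33 microseconds per write is short in absolute terms, which is why the score can look modest next to peak IOPS figures and still feel fast.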

80 Percent Mixed Sequential Workload

We describe our mixed workload testing in detail here and describe our steady state tests here.

Optane dominates the mixed workload tests at low queue depths just like SLC NAND did several years ago. The Optane array nearly doubles the performance of the next closest NVMe SSD at QD2.

80 Percent Mixed Random Workload

We see the same results during the mixed random workload test. Optane Memory is simply on a different level. The NAND devices can deliver higher peak performance than Optane in this and many other tests, but they require higher queue depths. Most users simply will never see that type of workload because the drives are too fast to allow it. You can't stack commands that high in the real world because the SSD fulfills data requests very quickly. You can't double-click applications fast enough to place a heavy enough load on the device to reach high queue depths.
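The queue depth argument can be illustrated with Little's law, which says the average number of outstanding requests equals the request arrival rate multiplied by the average time each request takes. The numbers below (a 100-microsecond service latency and 5,000 requests per second from a desktop workload) are hypothetical values chosen for illustration, not measurements from our tests.

```python
def average_queue_depth(requests_per_s, latency_s):
    """Little's law: mean outstanding requests = arrival rate * latency."""
    return requests_per_s * latency_s


def rate_needed_for_depth(target_depth, latency_s):
    """Arrival rate required to keep target_depth requests in flight."""
    return target_depth / latency_s


LATENCY_S = 100e-6     # hypothetical per-request latency (100 us)
DESKTOP_RATE = 5_000   # hypothetical desktop request rate (requests/s)

depth = average_queue_depth(DESKTOP_RATE, LATENCY_S)
rate_for_qd32 = rate_needed_for_depth(32, LATENCY_S)

print(f"average queue depth: {depth:.2f}")
print(f"rate needed to sustain QD32: {rate_for_qd32:,.0f} requests/s")
```

With these illustrative figures the average depth stays below one, and sustaining QD32 would take hundreds of thousands of requests per second. The faster the device, the harder it is for a client workload to build a deep queue.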

Sequential Steady-State

3D XPoint Memory doesn't suffer from the same limitations as NAND, so the write performance doesn't fall off due to the background tasks created by dirty cells.

Random Steady-State

What steady-state conditions, Optane asks? It's as if Optane taunts NAND with its performance, and consistency is also impressive. Optane is a natural for RAID.

PCMark 8 Real-World Software Performance

For details on our real-world software performance testing, please click here.

The Optane Memory array runs over the other products in every test that doesn't incur heavy write traffic, but the performance difference doesn't look like much. Microsoft could help the technology along by giving us a flash-friendly file system.

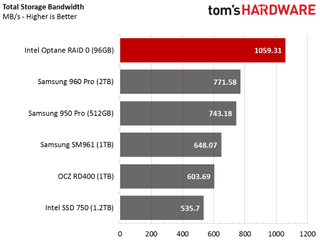

Application Storage Bandwidth

A little goes a long way at these speeds. The Optane Memory array achieves nearly 300 MB/s more than the nearest SSD when we average the results into a simple throughput score.

PCMark 8 Advanced Workload Performance

To learn how we test advanced workload performance, please click here.

Optane Memory provides untouchable application bandwidth, and regular NAND-based SSDs can't keep pace. Before Optane, we would examine slivers of performance differences between the NAND-based SSDs. This set of charts really shows what we've said about 3D XPoint--it puts all NAND in the same category.

Total Access Time

Optane has some competition in the service/access time tests. The Intel SSD 750 Series still delivers amazing performance during this test, but when it comes to consistency, Optane remains the clear winner.

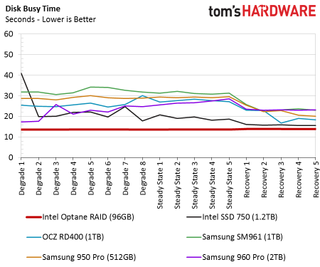

Disk Busy Time

Consumer workloads barely even stress the RAID 0 array. We don't think power consumption is a real worry for the desktop with a trio of Optane Memory SSDs. The drives are still able to complete the task and return to a low power state very quickly.

gasaraki: Impressive but... you should have tested the 960Pro in RAID also as a direct performance comparison.

InvalidError: Other PCH devices sharing DMI bandwidth with M.2 slots isn't really an issue since bandwidth is symmetrical and if you are pulling 3GB/s from your M.2 devices, I doubt you are loading much additional data from USB3 and other PCH ports. It is more likely that you are writing to those other devices.

As for "experiencing the boot time", you wouldn't need to do that if you simply put your PC in standby instead of turning it off. If standby increases your annual power bill by $3, it'll take ~50 years to recover your 2x32GB Optane purchase cost from standby power savings. Standby is quicker than reboot and also spares you the trouble of spending many minutes re-opening all the stuff you usually have open all the time.

takeshi7: Next, get 4 Optane SSDs, put them in this card, and put them in a PCIe x16 slot hooked up directly to the CPU.

http://www.seagate.com/files/www-content/product-content/ssd-fam/nvme-ssd/nytro-xp7200/_shared/_images/nytro-xp7200-add-in-card-row-2-img-341x305.png

gasaraki (quoting takeshi7): "Next, get 4 Optane SSDs, put them in this card, and put them in a PCIe x16 slot hooked up directly to the CPU. http://www.seagate.com/files/www-content/product-conten..."

Still just 4X bus to each M.2 so no different than on board M.2 slots.

takeshi7 (quoting gasaraki): "Still just 4X bus to each M.2 so no different than on board M.2 slots."

But it is different because those M.2 slots are bottlenecked by the DMI connection on the PCH. The CPU slots don't have that issue.

InvalidError (quoting takeshi7): "But it is different because those M.2 slots are bottlenecked by the DMI connection on the PCH. The CPU slots don't have that issue."

The PCH bandwidth is of little importance here as once you set synthetic benchmarks aside and step into more practical matters such as application launch and task completion times, you are left with very little real-world application performance benefits despite the triple Optane setup being four times as fast as the other SSDs, which means negligible net benefits from going even further overboard.

The only time where PCH bandwidth might be a significant bottleneck is when copying files from the RAID array to RAMdisk or a null device. The rest of the time, application processing between accesses is the primary bottleneck.