Nvidia GeForce GTX 260/280 Review

Reworked Streaming Multiprocessors

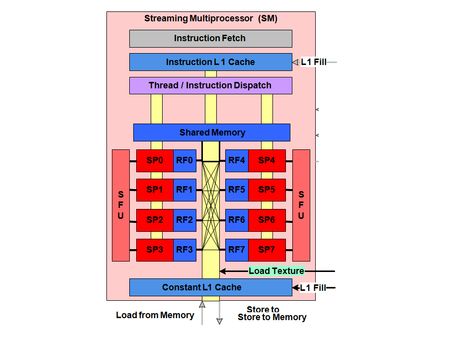

Aside from their increased number, each multiprocessor has undergone several optimizations. The first is the increased number of active threads per multiprocessor – from 768 to 1,024 (from 24 32-thread warps to 32). A larger number of threads is especially useful for masking the latency of texturing operations. Across the GPU as a whole, the increase is from 12,288 active threads to 30,720.
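As a quick sanity check on these totals, the sketch below multiplies the per-multiprocessor thread limit by the number of multiprocessors. The count of 30 for the GT200 follows from its 10 TPCs of 3 multiprocessors each; the count of 16 for the G80 is not stated in this section and is inferred here from 12,288 / 768, so treat it as an assumption. The function name is ours, chosen for illustration.

```python
WARP_SIZE = 32  # threads per warp, as quoted in the text


def active_threads(warps_per_sm, sm_count):
    """Return (threads per multiprocessor, threads across the whole GPU)."""
    per_sm = warps_per_sm * WARP_SIZE
    return per_sm, per_sm * sm_count


# Assumption: 16 multiprocessors on the G80 (inferred from 12,288 / 768).
g80 = active_threads(warps_per_sm=24, sm_count=16)
# 10 TPCs x 3 multiprocessors on the GT200.
gt200 = active_threads(warps_per_sm=32, sm_count=10 * 3)

print("G80:  ", g80)
print("GT200:", gt200)
```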

The number of registers per multiprocessor has doubled – from 8,192 registers to 16,384. Even with the concomitant increase in the number of threads, the number of registers usable simultaneously by each thread has increased from 10 to 16. On the G8x/G9x, our test algorithm used 67% of the processing units; on a GT200 that figure would be 100%. Combined with the two texture units, performance should be substantially higher than with the G80 we used for our test. Unfortunately, CUDA 2.0 requires a driver that’s still in beta and doesn’t recognize the GeForce GTX 200 series. As soon as the main branch of the drivers adds support, we’ll redo the test.
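The relationship between register count and utilization can be sketched with a simplified occupancy calculation. The 16 registers per thread used below is an assumption: the register usage of the test algorithm is not given, and 16 is picked only because it reproduces the 67% and 100% figures. Real hardware allocates registers per thread block with its own granularity, which this sketch ignores.

```python
def resident_threads(regs_per_sm, max_threads_per_sm, regs_per_thread, warp_size=32):
    """Threads that fit on one multiprocessor when registers are the only limit."""
    by_registers = regs_per_sm // regs_per_thread
    by_registers -= by_registers % warp_size  # round down to whole warps
    return min(by_registers, max_threads_per_sm)


KERNEL_REGS = 16  # hypothetical per-thread register usage, not from the article

for name, regs, max_threads in [("G8x/G9x", 8192, 768), ("GT200", 16384, 1024)]:
    budget = regs // max_threads  # registers per thread with every thread slot filled
    threads = resident_threads(regs, max_threads, KERNEL_REGS)
    print(f"{name}: {budget} regs/thread at full load, "
          f"{threads} resident threads ({threads / max_threads:.0%})")
```

Under these assumptions the older parts can keep only 512 of their 768 thread slots busy, while the GT200 fills all 1,024.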

That’s not the only improvement made to the multiprocessors: Nvidia announces that they’ve optimized the dual-issue mode. You’ll recall that since the G80, multiprocessors are supposed to be able to execute two instructions per cycle: one MAD and one floating MUL. We say “supposed to” because at the time we weren’t able to see this behavior in our synthetic tests – not knowing if this was a limitation of the hardware or the drivers. Several months and several driver versions later, we now know that MUL isn’t always easy to isolate on the G80, which led us to believe the problem was at the hardware level.

But how does dual-issue mode operate? At the time of the G80 Nvidia provided no details, but since then, by studying a patent, we’ve learned a little more about the way instructions are executed by the multiprocessors. First of all, the patent clearly specifies that the multiprocessors can only launch execution of a single instruction for each GPU cycle (the “slow” frequency). So where is this famous dual-issue mode? In fact it’s a peculiarity of the hardware: one instruction takes two GPU cycles (four ALU cycles) to execute on a warp (32 threads executed by 8-way SIMD units), but the front end of the multiprocessor can launch execution of one instruction at each cycle, provided that the instructions are of different types: MAD in one case, SFU in the other.

In addition to transcendental operations and interpolation of the values of each vertex, the SFU is also capable of executing a floating-point multiplication. By alternating MAD and MUL instructions, the execution of one instruction overlaps with that of the next. In this way each GPU cycle produces the result of a MAD or a MUL on a warp (that is, 32 scalar values), whereas from Nvidia’s description you might expect to get the result of a MAD and a MUL together every two GPU cycles. In practice, the result is the same, but from a hardware point of view it greatly simplifies the front end, which handles launching execution of the instructions, with one starting at each cycle.
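The overlap described here can be illustrated with a toy timing model. It is only a sketch built from the description in this article, not from the patent itself: it assumes in-order issue, at most one instruction launched per GPU cycle, and each unit (MAD or SFU) busy for two GPU cycles per warp instruction. The function and variable names are ours.

```python
def cycles_to_finish(stream, busy_cycles=2):
    """GPU cycles needed to execute `stream`, a list of unit names in program order."""
    free_at = {"MAD": 0, "SFU": 0}  # first cycle at which each unit accepts new work
    next_issue = 0                  # first cycle at which the front end can issue
    finish = 0
    for unit in stream:
        start = max(next_issue, free_at[unit])  # wait for the issue slot and the unit
        free_at[unit] = start + busy_cycles
        finish = max(finish, start + busy_cycles)
        next_issue = start + 1                  # one launch per GPU cycle
    return finish


mad_only = cycles_to_finish(["MAD"] * 8)
alternating = cycles_to_finish(["MAD", "SFU"] * 4)  # MUL executes on the SFU
print("8 MADs back to back:   ", mad_only, "cycles")
print("4 MAD + 4 MUL, alternating:", alternating, "cycles")
```

In this model a stream of MADs alone completes one warp instruction every two cycles, while an alternating MAD/MUL stream approaches one per cycle, which is the behavior the text attributes to dual issue.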

What was it that limited the ability to do this on the G8x/G9x and has been corrected on the GT200? Nvidia, unfortunately, isn’t specific about that. They simply say that they’ve worked on such points as register allocation and the scheduling and launching of instructions. But you can rely on us to pursue our investigation. Now let’s see if the changes Nvidia has made are useful in practice in a synthetic test – GPUBench.

For purposes of comparison we’ve added the 9800 GTX’s scores to the graph. This time it’s clear: you can see the higher rate for MUL instructions compared to MAD instructions. We’re still a long way from doubled values, with a gain of approximately 32% over the rate for MAD instructions, but that will do for now. We should mention that the results for DP3 or DP4 instructions shouldn’t be taken into account, since the scores aren’t consistent. The same goes for the results for POW instructions, which are probably due to a driver problem.

The last change made to the Streaming Multiprocessors is support for double precision (floating-point numbers on 64 bits instead of 32). Let’s be clear – the additional precision is only moderately useful in graphics algorithms. But as we know, GPGPU is taking on more and more importance for Nvidia, and in certain scientific applications, double precision is a non-negotiable demand!


Nvidia is not the first company to take note of that. IBM recently modified its Cell processor to increase the performance of the SPUs for this type of data. In terms of performance, the GT200 implementation leaves something to be desired: double-precision floating-point calculations are handled by a dedicated unit in each Streaming Multiprocessor. With each unit capable of executing one double-precision MAD per cycle, we get a peak performance of 1.296 (GHz) x 10 (TPC) x 3 (SM) x 2 (Multiply+Add) = 77.76 Gflops, or between 1/8th and 1/12th of the single-precision performance. AMD has introduced support by using the same processing units over several cycles, with noticeably better results – only between two and four times slower than single-precision calculations.
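The peak figures can be recomputed in a few lines. The sketch below uses the clock and unit counts quoted in this article; the two single-precision cases (MAD only, and MAD plus the extra MUL) are our reading of where the 1/8th and 1/12th ratios come from, and the variable names are illustrative.

```python
SHADER_CLOCK_GHZ = 1.296
TPC_COUNT = 10
SM_PER_TPC = 3
SP_PER_SM = 8  # 8-way SIMD units per multiprocessor

sm_count = TPC_COUNT * SM_PER_TPC

# One double-precision MAD (2 flops) per multiprocessor per cycle.
dp_gflops = SHADER_CLOCK_GHZ * sm_count * 2
# Single precision: MAD only (2 flops) or MAD + MUL (3 flops) per processor per cycle.
sp_mad_gflops = SHADER_CLOCK_GHZ * sm_count * SP_PER_SM * 2
sp_mad_mul_gflops = SHADER_CLOCK_GHZ * sm_count * SP_PER_SM * 3

print(f"DP peak:           {dp_gflops:.2f} Gflops")
print(f"SP peak (MAD):     {sp_mad_gflops:.2f} Gflops, {sp_mad_gflops / dp_gflops:.0f}x DP")
print(f"SP peak (MAD+MUL): {sp_mad_mul_gflops:.2f} Gflops, {sp_mad_mul_gflops / dp_gflops:.0f}x DP")
```

The product for double precision comes to 77.76 Gflops, and the two single-precision cases land at 8 and 12 times that value.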


Lunarion what a POS, the 9800gx2 is $150+ cheaper and performs just about the same. Let's hope the new ATI cards coming actually make a difference

foxhound009 woow,.... that's the new "high end" gpu????

lolz.. 3870 x2 will get cheaper... and nvidia gtx200 lies on the shelves providing space for dust........

(I really expected more from this one... :/ )

thatguy2001 Pretty disappointing. And here I was thinking that the gtx 280 was supposed to put the 9800gx2 to shame. Not too good.

cappster Both cards are priced out of my price range. Mainstream decently priced cards sell better than the extreme high priced cards. I think Nvidia is going to lose this round of "next gen" cards and price to performance ratio to ATI. I am a fan of whichever company will provide a nice performing card at a decent price (sub 300 dollars).

njalterio Very disappointing, and I had to laugh when they compared the prices for the GTX 260 and the GTX 280, $450 and $600, calling the GTX 260 "nearly half the price" of the GTX 280. Way to fail at math. lol.

NarwhaleAu It is going to get owned by the 4870x2. In some cases the 3870x2 was quicker - not many, but we are talking 640 shaders total vs. 1600 total for the 4870x2.

MooseMuffin Loud, power hungry, expensive and not a huge performance improvement. Nice job nvidia.

compy386 This should be great news for AMD. The 4870 is rumored to come in at 40% above the 9800GTX so that would put it at about the 260GTX range. At $300 it would be a much better value. Plus AMD was expecting to price it in the $200s so even if it hits low, AMD can lower the price and make some money.

vochtige i think i'll get a 8800ultra. i'll be safe for the next 5 generations of nvidia! try harder nv crew