Intel Presents Its AMD EPYC Server Test Results

Intel has released its internal benchmarking results with AMD's EPYC server platforms. Intel originally fired a preemptive salvo at AMD in the form of a controversial slide deck with a number of EPYC performance projections. At the time, Intel did not have any working EPYC silicon to test, and AMD had not officially launched its products.

A few weeks later, AMD pulled back the veil on its new processors and shared more details. Many of the things we learned from AMD countered Intel's projections, which we covered in depth in our Intel Plays Defense: Inside Its EPYC Slide Deck article. Later, AMD took to the stage at HotChips 2017 and addressed several of Intel's other claims (though not directly) and outlined even more details.

Now, Intel has finally sourced AMD's EPYC server silicon. Like many other companies, Intel conducts competitive performance analysis and presents the results of those benchmarks publicly. This isn't unlike AMD's similar tactic of posting internal benchmarks of Intel's hardware during the EPYC launch.

We have to caution that these results are from Intel's internal testing. Intel admitted that further optimizations for the EPYC processors could result in better performance in some scenarios. That means we'll have to take the results with the obligatory grain of salt. We're posting all of the slides from Intel’s presentation. We also include the test notes at the bottom of our article in a click-to-expand format.

We've also reached out to AMD for comment. As usual, if AMD has counterpoints or benchmark data, we are happy to cover it and/or include it in this article.

Publicly Available Performance Measurements

Intel's Datacenter Marketing Group notes that Intel has published 22 third-party auditable performance results for its two-socket Xeon Scalable servers, while AMD has published four for its EPYC lineup.

Intel also pushed a few of its key marketing messages, such as Xeon's 110+ world records, broad OEM ecosystem support with 200 OEM systems, Skylake-SP's fast adoption rate in HPC applications, and the continued development of the Xeon ecosystem. Notably, the company points out that Xeon D is coming in "early 2018" and that the Xeon Scalable processors will soon come with an integrated FPGA.

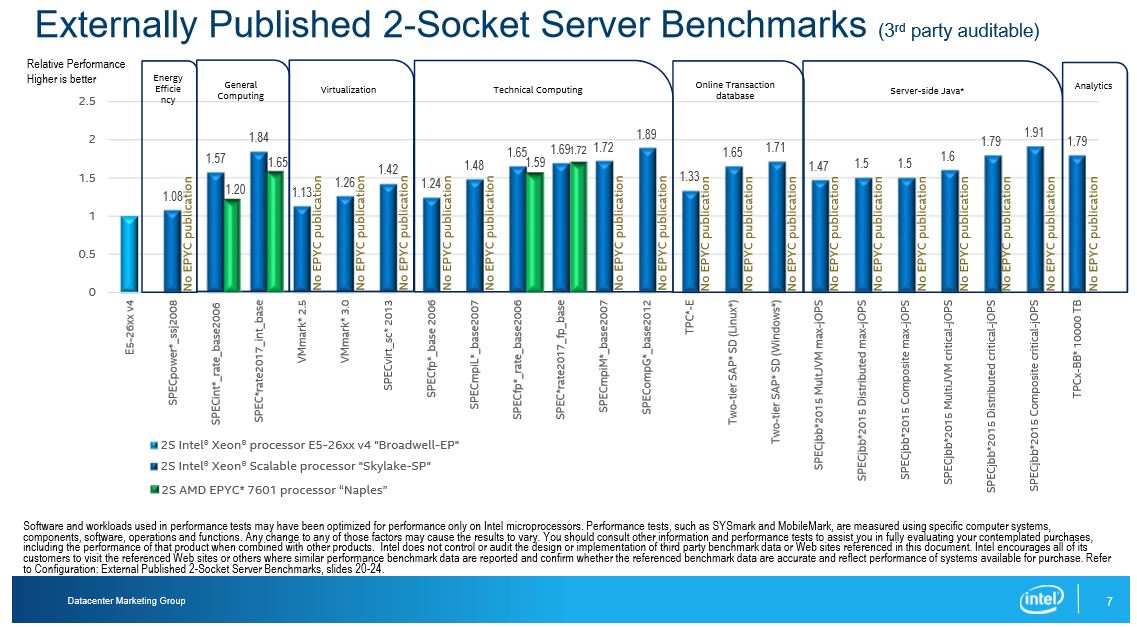

Intel provided performance comparisons between its Broadwell-EP, Skylake-SP, and AMD's EPYC processors. These results all come from publicly available SPEC (Standard Performance Evaluation Corporation) benchmarks. SPEC is a third-party organization that audits and posts results from standardized benchmarks. Only the hardware manufacturer can submit SPEC test results, so it's natural to assume that these results are generated on highly optimized systems that might not reflect a normal server deployment. Intel does have EPYC hardware in-house, which we'll get to shortly, but it cannot post results of its internal testing with SPEC benchmarks.

Intel highlighted that AMD hadn't submitted many auditable benchmarks (noted by the 18 "No EPYC Publication" entries). AMD's two-socket server with EPYC 7601 processors is represented with the green bars. Intel normalizes the test results against a two-socket Broadwell-EP server (far left), which highlights its own gen-on-gen performance improvement with the Skylake-SP (aka Purley/Xeon Scalable) parts.

These tests pit Intel's fastest processors against AMD's fastest, and while Intel takes the lead in most tests, the price-to-performance ratio is very lopsided. For instance, Intel's Skylake-SP 8180 carries an MSRP of $10,000, while AMD's EPYC 7601 is $4,000.

AMD's EPYC servers are just beginning to come to market in OEM systems. For instance, HPE announced its new ProLiant DL385 Gen10 server last week and revealed that it had set floating point world records in SPECrate2017_fp_base and SPECfp_rate2006. We will see more auditable benchmark results as more OEM EPYC systems come to market.

Intel did include AMD's world record SPEC submissions, but Intel focuses on the base test results. AMD's world records were measured with the peak test. The SPECfp_rate2006 suite consists of 17 sub-tests, while SPECrate2017 has 12. The base test requires that the same compiler flags be used for all of the sub-tests, while the peak test allows different compiler options for each sub-test. Intel doesn't feel that peak scores are representative of real-world performance, stating "Peak scores can be used to promote perceived higher performance, but don’t reflect the real-world where static compiler configurations are commonly used."

So, Intel's chart only includes base results. In either case, AMD leads the Intel Skylake-SP platform in the base SPECrate2017 test with a score of 257 compared to Intel's score of 252.
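To put the price gap next to the score gap, here is a rough back-of-the-envelope sketch. It only uses figures quoted in this article (the rounded list prices and the two base SPECrate2017 scores), and it assumes that those scores belong to two-socket EPYC 7601 and Xeon 8180 systems and that processor cost is the only cost that matters. The function name `score_per_thousand_dollars` is our own and is not part of any SPEC tooling.

```python
# Rough price/performance arithmetic using figures quoted in this article.
# Assumptions: rounded list prices, two CPUs per server, CPU cost only,
# and that the 257/252 scores map to the EPYC 7601 and Xeon 8180 systems.

def score_per_thousand_dollars(score: float, cpu_price: float, sockets: int = 2) -> float:
    """Return the benchmark score per $1,000 spent on processors."""
    total_cpu_cost = cpu_price * sockets
    return score / (total_cpu_cost / 1000)

epyc_7601 = score_per_thousand_dollars(score=257, cpu_price=4_000)
xeon_8180 = score_per_thousand_dollars(score=252, cpu_price=10_000)

print(f"EPYC 7601: {epyc_7601:.1f} points per $1,000 of CPU")
print(f"Xeon 8180: {xeon_8180:.1f} points per $1,000 of CPU")
print(f"EPYC advantage: {epyc_7601 / xeon_8180:.2f}x")
```

On these numbers, the EPYC system delivers roughly 2.5 times the score per processor dollar. Large customers rarely pay list price, so treat this as an illustration of the gap at MSRP rather than a purchasing guide.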

Intel contends that AMD hasn't released any application performance results for non-NUMA applications, like databases and virtualized environments, which could be a pain point due to AMD's unique architecture. Intel has its own results for those types of applications below.

Intel's Internal Two-Socket Server Testing

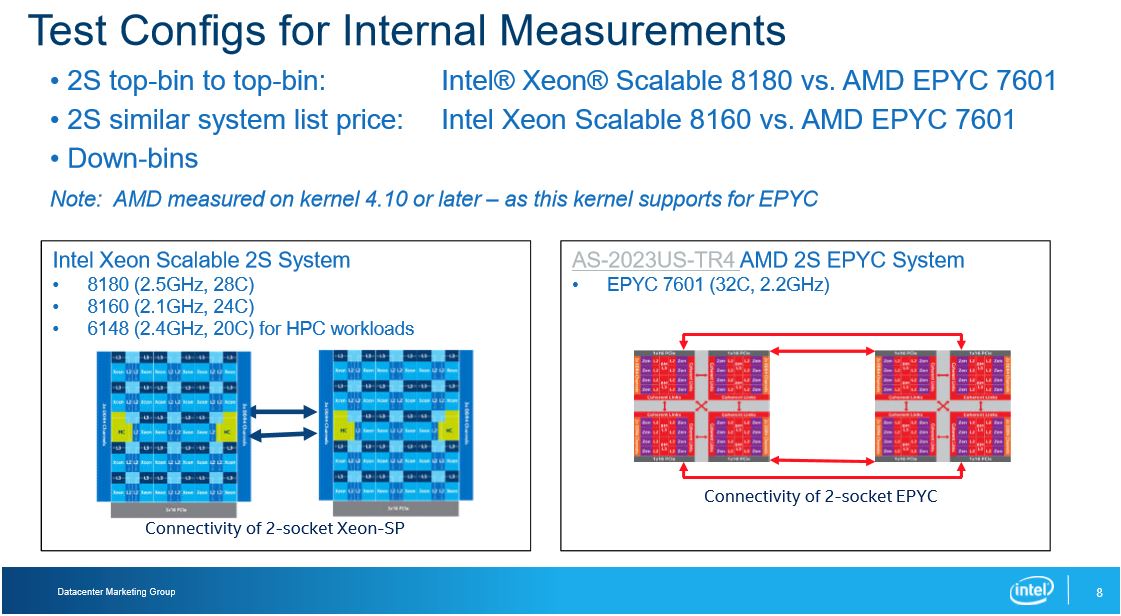

Intel also presented test results from its own internal two-socket server testing. Intel stresses that it is using both top-bin (Xeon 8180) and price-comparable (Xeon 8160) systems for these comparisons. Intel hasn't been able to source lower-priced EPYC SKUs yet, so it used the EPYC 7601 processor. The company says it will provide comparisons with the less-expensive down-bin EPYC SKUs soon.

For testing, Intel used new Linux kernels (4.1 or later) that are compatible with AMD's EPYC processors. Intel used its own compiler for its processors but used GCC, AOCC/LLVM, and Intel compilers for EPYC testing. Intel claims it uses the score from the compiler that provided the best result for EPYC (on a test-by-test basis).

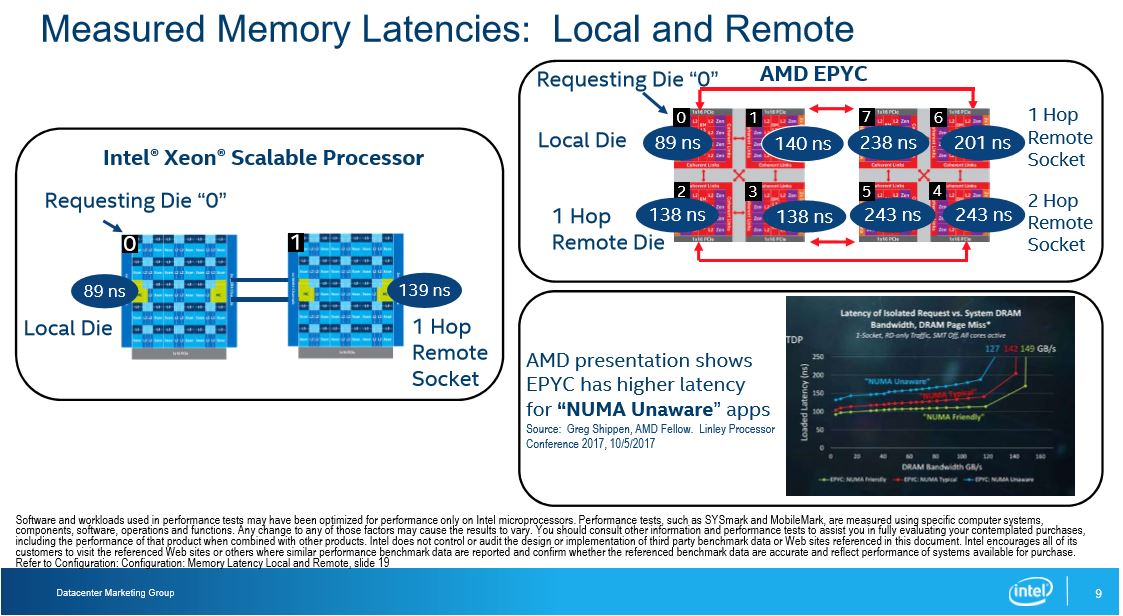

First, Intel provided memory latency tests with the Intel Memory Latency Checker. This utility measures memory bandwidth and latency, and while it is an Intel utility, the company claims it is architecture-agnostic and should provide fair latency measurements. Each EPYC die has its own memory and I/O controllers. AMD combines four dies into a single package and connects them with multiple layers of Infinity Fabric. We’ve covered the topology extensively, and it does result in higher memory latency for 'remote' memory access.

In a two-socket server, Intel measured Xeon's peak memory latency at 89ns on a local socket and 139ns when requesting data from the remote socket. Intel claims that EPYC peaks at 140ns on a local socket and 243ns when requesting data from the remote socket. Intel feels these characteristics explain many of the benchmark results on the following slides. Intel also says that EPYC's higher remote memory latency isn't as desirable in non-NUMA environments, which AMD also acknowledged in its HotChips presentation.
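The relative cost of a remote access is easier to see as a percentage. The short sketch below uses only the four peak latency figures Intel reported above; the helper name `remote_penalty` is ours, and the calculation says nothing about how Intel configured either system.

```python
# Remote-vs-local memory latency, using the peak figures Intel reported (ns).
latencies_ns = {
    "Xeon": {"local": 89, "remote": 139},
    "EPYC": {"local": 140, "remote": 243},
}

def remote_penalty(local_ns: float, remote_ns: float) -> float:
    """Fractional latency increase when data comes from the other socket."""
    return (remote_ns - local_ns) / local_ns

for name, lat in latencies_ns.items():
    penalty = remote_penalty(lat["local"], lat["remote"])
    print(f"{name}: local {lat['local']} ns, remote {lat['remote']} ns, +{penalty:.0%}")
```

By Intel's measurements, EPYC's local-socket latency (140ns) is about the same as Xeon's remote-socket latency (139ns), which is the crux of Intel's argument for latency-sensitive workloads.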

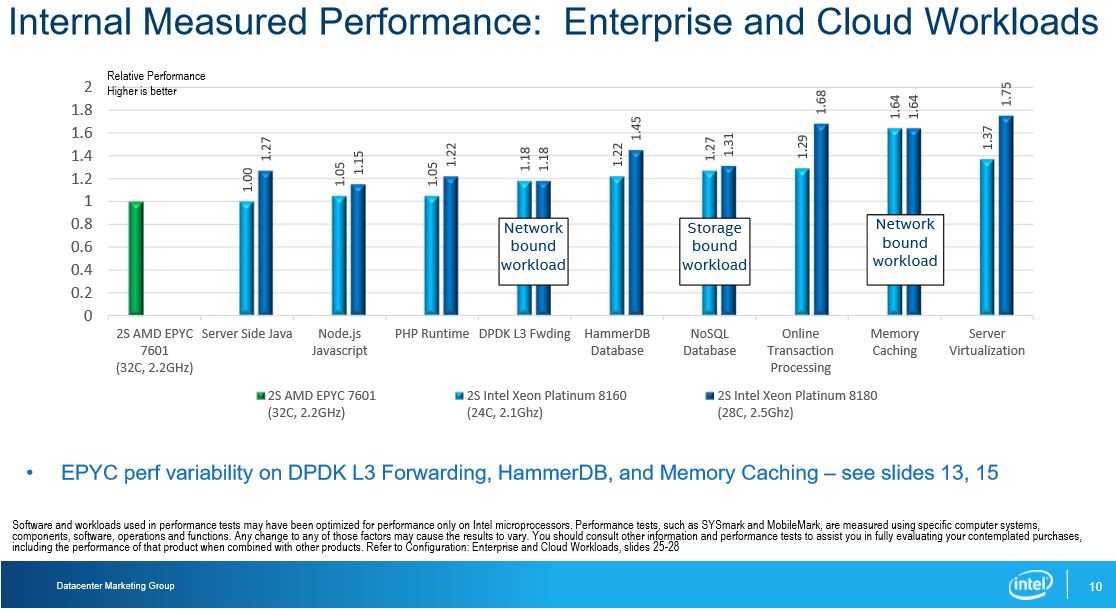

The Enterprise and Cloud Workloads slide consists of a range of common applications in a data center environment. A two-socket AMD system with 32C/64T EPYC 7601s serves as the baseline on the far left. The light blue bars are Intel's price-comparable 24C/48T Xeon 8160s, while the dark blue bars are the top-end 28C/56T Xeon 8180s.

As we can see, Intel claims it leads the EPYC server in nearly every one of the CPU-bound tests (one test is a tie). Other tests are network and storage bound, as indicated in the slides. Intel also claims a substantial advantage in those workloads. Memory-bound workloads are one of AMD's key targets, so it's noteworthy that Intel did not include two-socket memory-bound workloads like the ones we'll see in the single-socket server tests.

Intel's Internal Single-Socket Server Testing

AMD's single-socket server is also a key value play. Intel restricts several key features, such as memory and PCIe channels (among many others), in its down-bin SKUs. That is a particular weakness for Intel in single-socket servers. AMD offers more PCIe lanes and memory channels/capacity than Intel, and it enables those features on every one of its processors. That poses a big threat to Intel in the single-socket space.

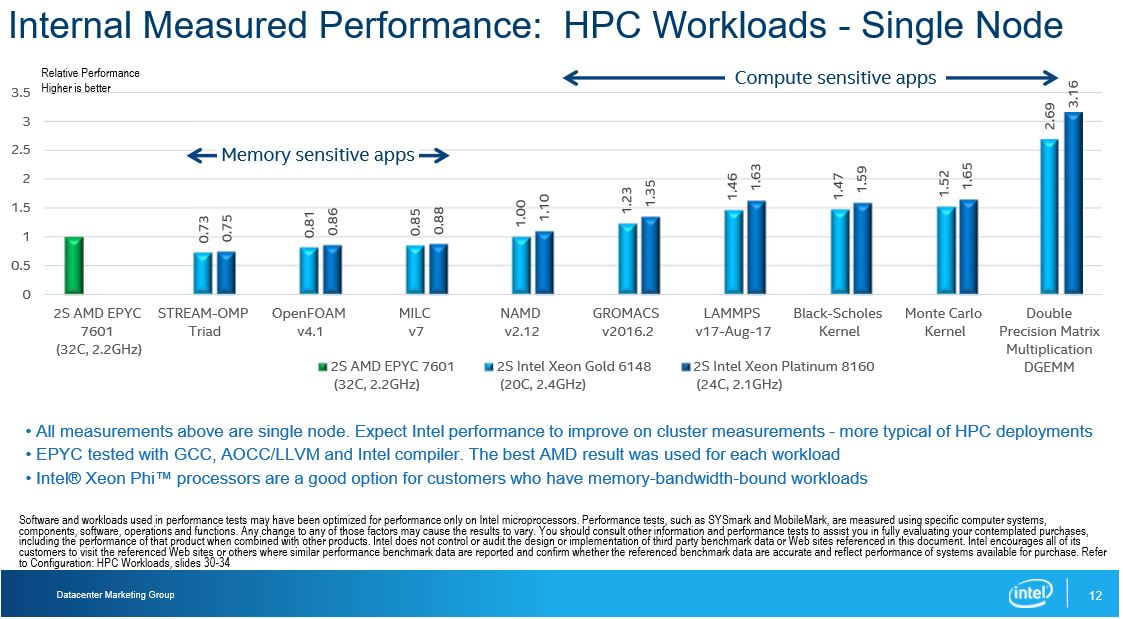

Intel's own "HPC Workloads - Single Node" results show some of that advantage. Intel uses its 20C/40T Xeon Gold 6148 and 24C/48T Platinum 8160 as the comparison processors. The STREAM-OMP Triad test measures memory throughput. AMD's EPYC led with 272.48 GB/s compared to Intel's ~200 GB/s. That extra memory performance equated to AMD wins in the memory-bound OpenFOAM and MILC benchmarks. Intel claims it leads the compute-bound workloads across the board.

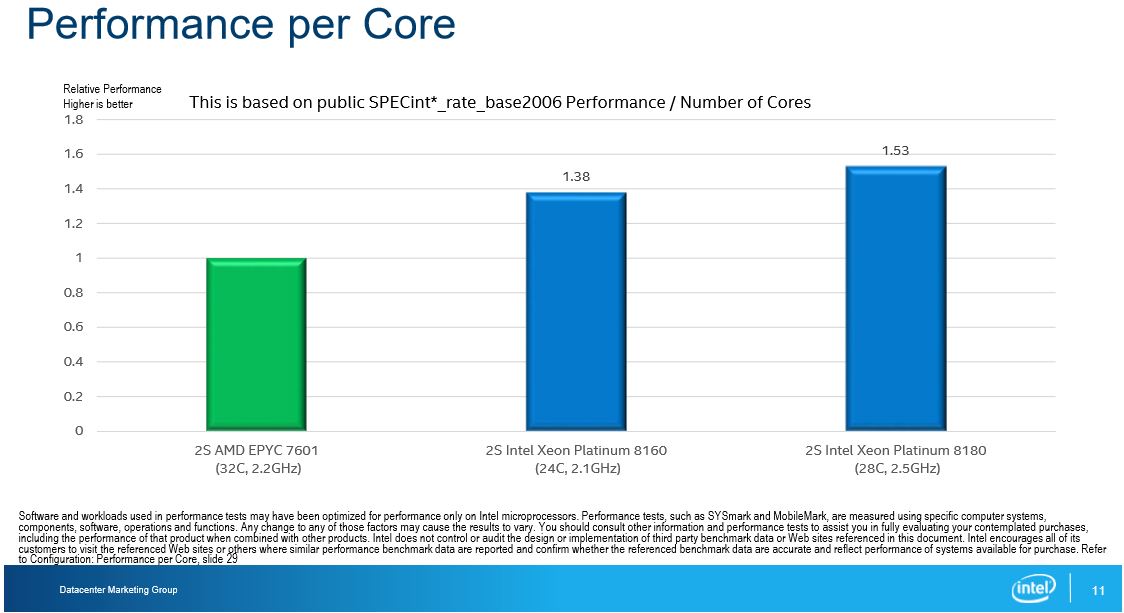

Intel also compared its single-core performance to EPYC by simply dividing the SPECint_rate_base2006 score by the number of cores. This value isn't as useful as a more direct single-threaded performance comparison, but it does serve as a general indicator to Intel's customers.

Many applications are licensed on a per-core/per-socket basis. For instance, a single-processor license for Oracle's NoSQL Database Enterprise Edition carries a $23,000 MSRP, and that's not even the most extreme example. That means a user has to pay $23,000 per processor to use the database. The cost of the processor often isn't as important as licensing fees, so buying several less-expensive processors to achieve the same performance as a single processor is not a winning solution in many cases. The same concepts apply to applications that are licensed on a per-core basis.
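A quick calculation shows why per-processor licensing can outweigh the hardware price. The sketch below uses the $23,000 license figure and the two list prices quoted earlier in this article. The scenario itself is hypothetical: we simply assume that two of the cheaper processors are needed to match one of the more expensive ones, which is not something Intel's or AMD's data establishes.

```python
# Per-processor licensing: total cost = CPUs x (CPU price + license per CPU).
LICENSE_PER_CPU = 23_000  # per-processor license figure cited in the article

def total_cost(cpu_count: int, cpu_price: float,
               license_per_cpu: float = LICENSE_PER_CPU) -> float:
    """Hardware plus licensing cost for a given number of processors."""
    return cpu_count * (cpu_price + license_per_cpu)

# Hypothetical scenario: two $4,000 CPUs match the throughput of one $10,000 CPU.
one_expensive_cpu = total_cost(cpu_count=1, cpu_price=10_000)
two_cheaper_cpus = total_cost(cpu_count=2, cpu_price=4_000)

print(f"One $10,000 CPU plus license:   ${one_expensive_cpu:,.0f}")
print(f"Two $4,000 CPUs plus licenses:  ${two_cheaper_cpus:,.0f}")
```

Under that assumption, the "cheaper" configuration costs more overall because the second license dwarfs the savings on silicon. If a single less-expensive processor is enough for the workload, the comparison flips, so the outcome depends entirely on how many processors the job needs.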

Intel's Performance Variability Claims

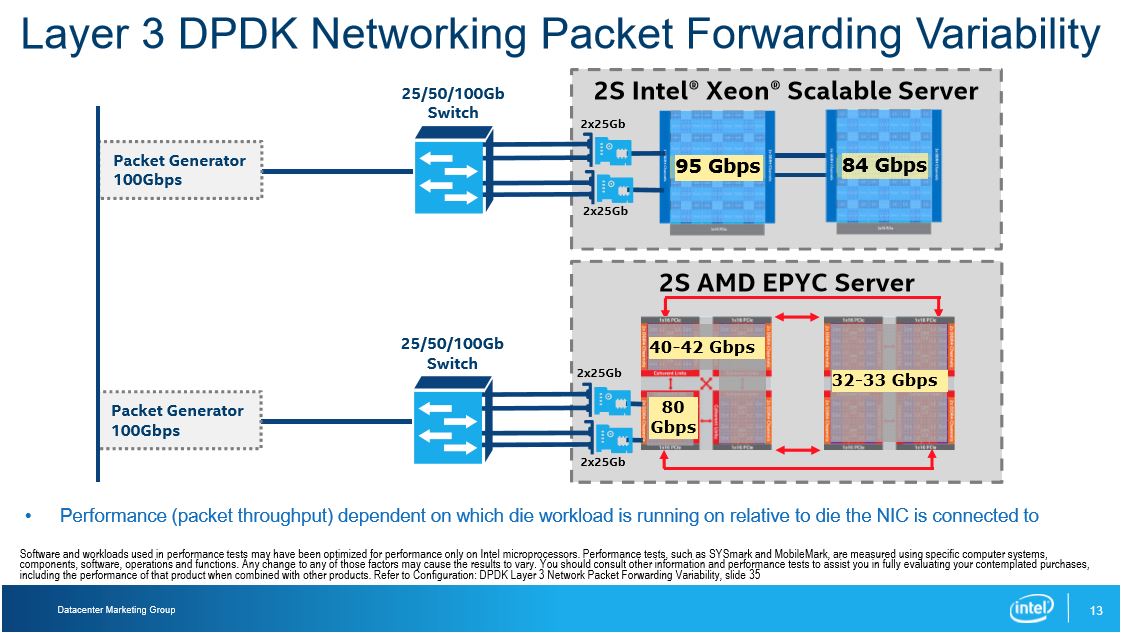

Intel feels that some workloads running on EPYC processors, particularly networking applications that access NICs connected to a remote die, could suffer from reduced Infinity Fabric bandwidth between the dies and sockets. Workloads tend to migrate among cores, which Intel theorizes causes the impact of increased latency and reduced throughput to vary. It's easy to imagine this could apply to other remotely-attached PCIe devices, such as storage.

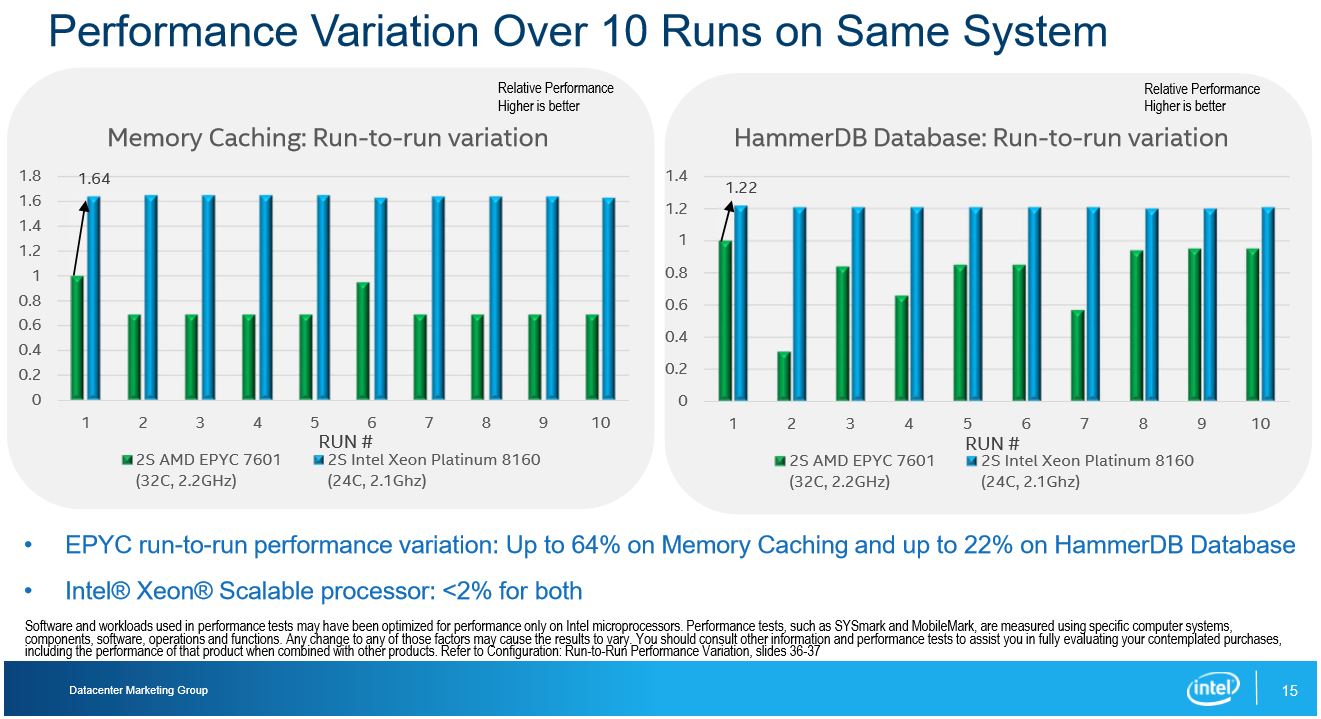

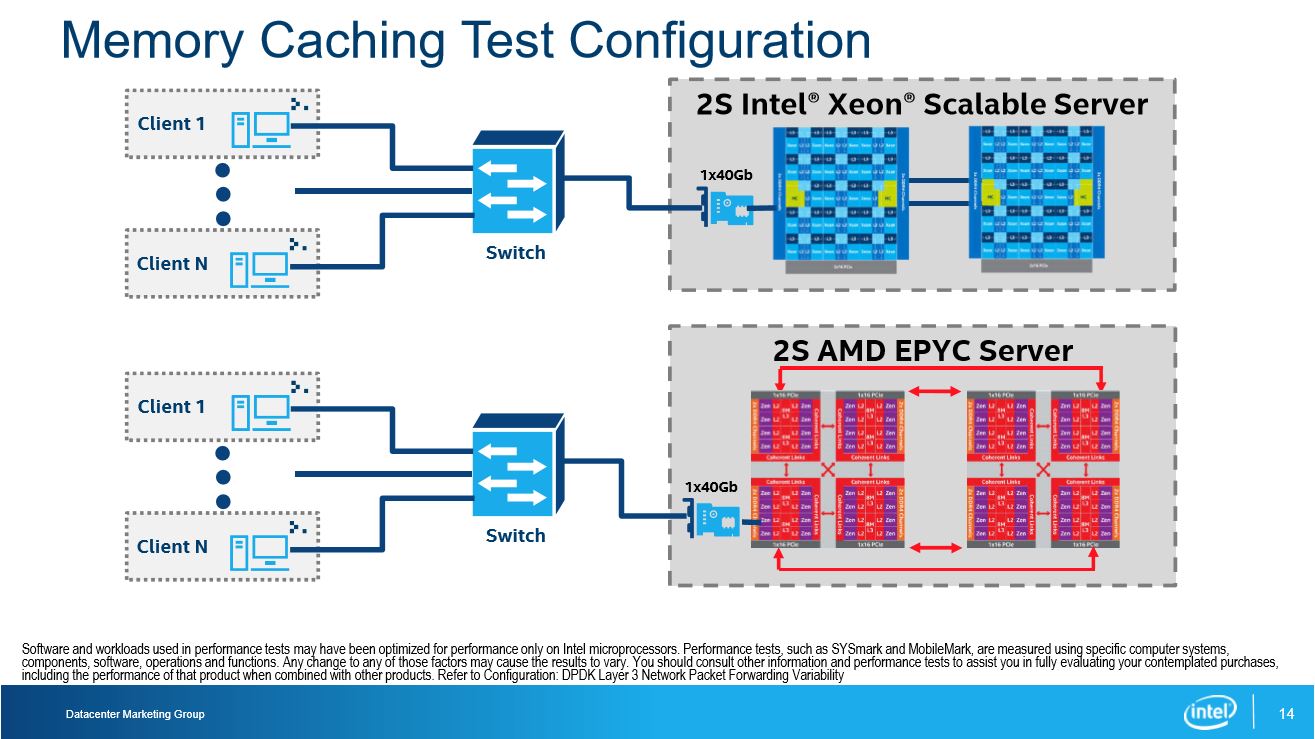

Intel claims it encountered significant performance variation during its testing with AMD's EPYC processors. The company claimed to have recorded up to 64% variation on a run-to-run basis for memory caching workloads and up to 22% variation with HammerDB, among others. Performance consistency is an absolutely critical requirement in most enterprise environments, so these results could be particularly discouraging.

However, there are several caveats. Intel said that it tried to rectify the consistency issues and did achieve some measure of success with some applications. The company acknowledges that it might be able to achieve better performance consistency through more targeted tuning and that it doesn't have access to more information that might help address the situation.

AMD also plainly states that its unique architecture is well-suited for some applications, but it isn't as impressive with others. The company plans to target the applications where it excels with the first generation of EPYC processors, and then improve the architecture as it evolves to make it more potent in a wider gamut of applications.

Thoughts

For the last five years Intel has largely owned the data center; currently, its processors power a whopping ~99.6% of the world's servers. Intel is currently restructuring and has bet a substantial portion of its future on data center-driven revenue, and while the company also faces threats from ARM-based competitors, such as Qualcomm's Centriq and Cavium's ThunderX2, AMD's processors have the advantage of the x86 instruction set architecture.

While the ARM-based competitors are attractive, it will take much more time for the software ecosystem to evolve, even with recent Linux support enhancements. That means EPYC-powered servers are the biggest near-term threat to Intel's dominance because they could serve as a simple and easy replacement.

Even if EPYC is successful, it could take AMD quite some time to regain more than a single-digit market share in the data center. That gives Intel plenty of time to adjust its product stack to counter the threat, but Intel's full-court marketing press highlights a certain amount of urgency. That's because Intel's data center products are the epitome of its high-margin ambitions, and none of its large customers, such as the Super Seven (+1), pay anything remotely near MSRP.

EPYC CPUs are a threat to Intel's margins because they give Xeon customers another option. Consequently, Intel might have to get more price-competitive in key portions of its product stack, especially with high-volume customers. That means EPYC could affect Intel's bottom line, even if it doesn't gain significant market share.

There's also some importance in AMD's return to HPE's ProLiant lineup. AMD hasn't been present in that lineup for several years, so it's been an all-Intel affair. Now HPE, one of the top OEMs, is embracing EPYC, and there are more to come.

Intel says it will share more test results with down-bin EPYC processors in the future, so the assault on EPYC will continue. AMD's EPYC is clearly setting off alarm bells, and it will be interesting to see if Intel takes the fight to the ARM-based competitors, like Qualcomm's Centriq, in the future.

EDIT: 11/28/17 12:15PST: Corrected Skylake-SP SPECfp2017 score in the text (from 149 to 252).

Paul Alcorn is the Editor-in-Chief for Tom's Hardware US. He also writes news and reviews on CPUs, storage, and enterprise hardware.

DerekA_C: Could you guys imagine what AMD could make with Intel money and resources I mean really they are so small compared to Intel in both finance and fab capabilities, researches engineers and developers are a fraction of Intel and yet AMD still can pack a punch for what they are.

cmvrgr: We should thank that AMD gave price/performance in all markets. Intel still very expensive for what they are giving.

berezini: both companies started out with the similar budget? its about what you do with your investment that counts. Thus the difference between them two!

soulg1969: I've read the AMD employees created Threadripper in their spare time! Think and write about that.

samopa: "We should thank that AMD gave price/performance in all markets. Intel still very expensive for what they are giving."

For that, I thank AMD very much.

PS: I'm an Intel fanboy, all my rigs are Intel's, all my servers are also Intel's

popoooper: You have to read between the lines, the fact that Intel is so desperate to FIND a benchmark where they outperform amd show's how scared they are.

Plus they are saying a 22 core xeon beats a 32 core epyc in "technical computing"? Bull$hit, I'm not sure what benchmark they are using but they must have dug deep to find one that shows that. also that 22 core is 7 thousand dollars.

screw you intel, amd is winning.

Adam_153: i only have 8c 190x thread ripper , but im not getting no high latencies from die2die and my chip has two and only 4 cores each, 2 dies, and to sepaerate CCXs for both dies, and max latecy i see is like 107ns but thats on like a very large memory caching. 4gb+ not acuall but i forgot the resuts, but intels "INTERNAL" (FAKED) Tests///theyve don this before? and i now it?

Adam_153: any i bet 100M $$$ that intel isnt even running the Ram @ anything besides 2133Mhz, i bet my life on it

Adam_153: so the infinity fabric Link would only be operating @ 1067Mhz, mine is @ 1667* RAM is @ DDR4 Quad channel 3333Mhz, beat that intel?? try those RAMS settings assholoes