AMD Radeon RX 7900 XT Board Design Revealed: 24GB and 450W

AMD's flagship Navi 31 graphics board looks big and power hungry

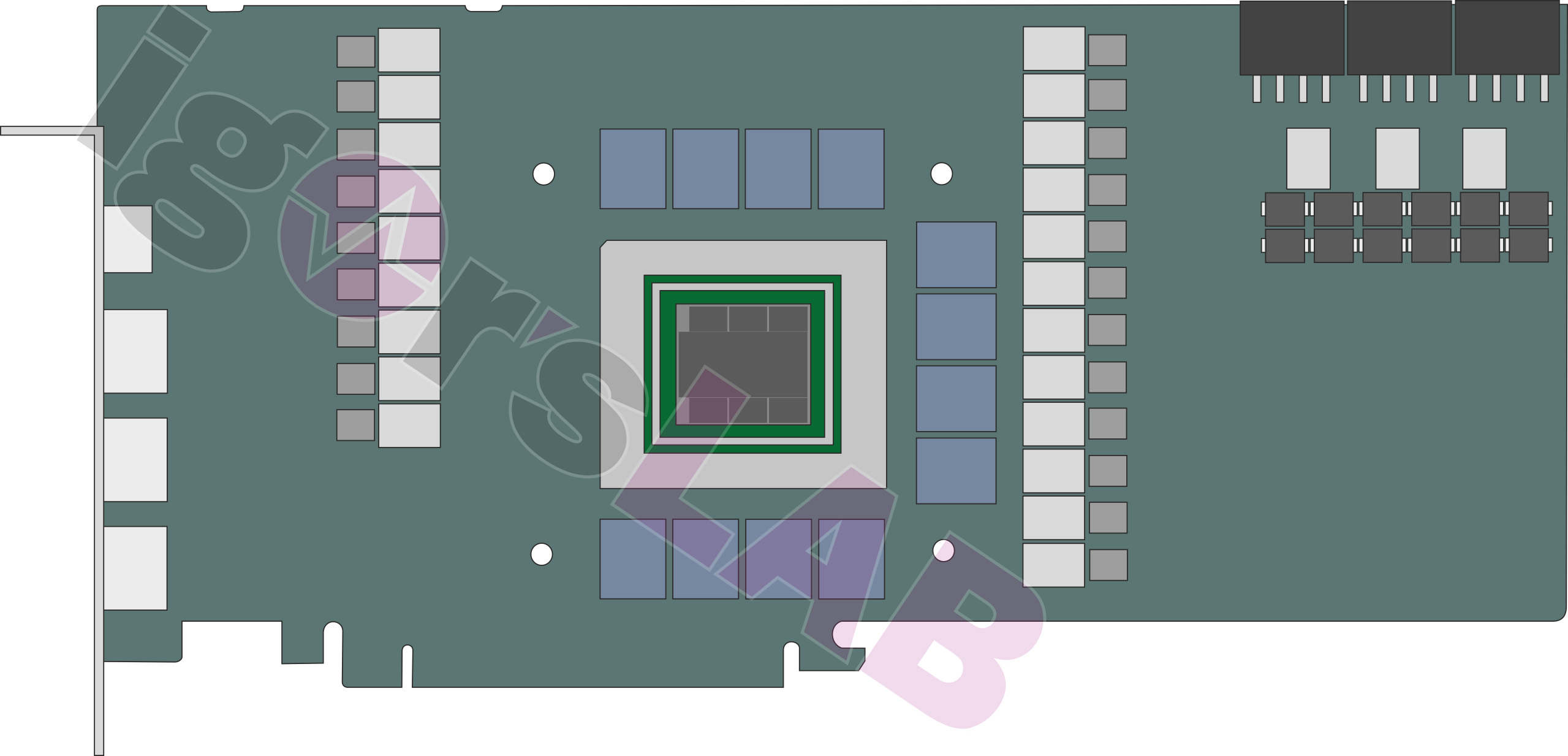

AMD's upcoming Radeon RX 7900 XT design has been dissected by our colleagues from Igor's Lab, who published (sanitized) schematics of the board. Expected to launch in the coming months as AMD's flagship RX 7000-series RDNA 3 GPU, it is set to be one of the best graphics cards. GPU designers tend to maximize performance on their flagship offerings, which is why modern high-end GPUs are large, complex, and power hungry, and require a very sophisticated power delivery subsystem coupled with an advanced cooling system. AMD's new halo part is no exception.

The Radeon RX 7900 XT will use the GPU codenamed Navi 31, based on the RDNA 3 architecture. The GPU reportedly uses a chiplet design and connects to 24GB of GDDR6 memory over a 384-bit interface. It is rumored to consist of one graphics chiplet die (GCD) and six memory chiplet dies (MCDs), interconnected using AMD's Infinity Fabric. The GCD will be more complex than AMD's current-generation Navi 21 GPU and deliver substantially more performance; as a result, it will require more power than the existing part.
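For readers wondering how 24GB and a 384-bit bus fit together, here is a rough back-of-the-envelope sketch. It assumes (our assumption, not something in Igor's documents) that each GDDR6 chip has a standard 32-bit interface with one chip per channel (no clamshell mode), and that the bus is split evenly across the six rumored MCDs. The `memory_layout` helper is purely illustrative.

```python
# Back-of-the-envelope memory layout for the rumored Navi 31 configuration.
# Assumptions (not from the leak): 32-bit GDDR6 devices, one device per
# 32-bit channel (no clamshell), and the bus split evenly across the MCDs.
GDDR6_DEVICE_BUS_BITS = 32


def memory_layout(bus_bits: int, capacity_gb: int, num_mcds: int) -> dict:
    """Return chip count, per-chip capacity, and per-MCD bus width."""
    if bus_bits % GDDR6_DEVICE_BUS_BITS:
        raise ValueError("bus width is not a multiple of 32 bits")
    chips = bus_bits // GDDR6_DEVICE_BUS_BITS
    if capacity_gb % chips:
        raise ValueError("capacity does not split evenly across chips")
    if chips % num_mcds:
        raise ValueError("chips do not split evenly across MCDs")
    return {
        "chips": chips,
        "gb_per_chip": capacity_gb // chips,
        "bits_per_mcd": bus_bits // num_mcds,
        "chips_per_mcd": chips // num_mcds,
    }


if __name__ == "__main__":
    # 384-bit bus, 24GB, six MCDs, as reported
    print(memory_layout(384, 24, 6))
```

Under those assumptions, the numbers work out to 12 chips of 2GB (16Gb) each, with each MCD driving a 64-bit slice of the bus and two memory chips.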

According to Igor's Lab, AMD's reference Radeon RX 7900 XT will feature three 8-pin auxiliary PCIe power connectors capable of delivering up to 450W of power, plus another 75W from the PCIe slot, though the slot's share will likely go largely untapped. This comes from a printed circuit board schematic received by Igor and then "sanitized" to remove any distinctive markings that could hint at the manufacturer(s) of the board.
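The headline figures follow from the standard connector ratings: the PCIe specification rates each 8-pin auxiliary connector at 150W and an x16 slot at 75W. A minimal sketch of that arithmetic follows; `board_power_budget` is a hypothetical helper, and the results are nominal limits, not measured or expected power draw.

```python
# Nominal board power budget from PCIe auxiliary connectors plus the slot.
# Ratings: 150W per 8-pin connector, 75W per x16 slot. These are spec
# limits, not what the card will actually draw.
EIGHT_PIN_W = 150
SLOT_W = 75


def board_power_budget(num_eight_pin: int, include_slot: bool = True) -> int:
    """Return the nominal maximum power available to the board in watts."""
    total = num_eight_pin * EIGHT_PIN_W
    if include_slot:
        total += SLOT_W
    return total


if __name__ == "__main__":
    print(board_power_budget(3, include_slot=False))  # 450 (three 8-pin)
    print(board_power_budget(3))                      # 525 (three 8-pin + slot)
    print(board_power_budget(2))                      # 375 (two 8-pin + slot)
```

The last case matches a two-connector layout like the reference Radeon RX 6900 XT, so the extra 8-pin input is worth 150W of additional headroom on paper.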

Igor says he has seen multiple board design schematics, and all of them have several things in common. The boards will reportedly use 21 voltage regulator modules (VRMs), with eight 'main' phases spread across the GPU core, GPU system-on-chip, GPU memory controller, and GDDR6 memory. To some degree, AMD's reference design for the Radeon RX 7900 XT resembles that of the Radeon RX 6900 XT, but it has one extra 8-pin power input and is optimized for higher currents and power.

Speaking of auxiliary PCIe power plugs, it is noteworthy that this design uses three 8-pin connectors rather than a single 12VHPWR (12+4-pin) power connector, which is part of the new PCIe 5.0 standard and is set to be used by Nvidia's upcoming flagship solutions. It is unclear whether AMD will stick with this design on its final boards, but some of its partners with custom PCBs could certainly adopt the latest PCIe 5.0 auxiliary connector.

As far as display outputs are concerned, AMD reportedly plans to install three DisplayPort connectors and one HDMI connector on its reference card, and it will no longer provide a USB-C connector for VirtualLink. It's still unclear whether AMD's upcoming graphics cards will support DisplayPort 2.0 and/or HDMI 2.1 48G. We suspect the answer is "yes," though, as with Intel's Arc Alchemist, exact capabilities might fall short of the maximum spec.

Since AMD's Radeon RX 7900 XT and other RDNA 3-based graphics cards are set to hit the market this fall, add-in board makers are probably finalizing the PCB and cooling system designs for their upcoming products. Igor points out that final products might differ somewhat from the current PCB schematics, but at least we now know that AMD is not afraid of high power consumption for its next-generation flagship graphics product.

It's also entirely possible for AMD to continue to alter specifications at this point. The board designs might be more or less complete, but GPU shader core counts, clock speeds, and memory speeds are all potentially in a state of flux — and pricing "leaks" are basically just guessing at this stage. As with all unofficial information, apply a dash of skepticism, though Igor didn't actually provide any hard numbers on the core specs.


Anton Shilov is a contributing writer at Tom’s Hardware. Over the past couple of decades, he has covered everything from CPUs and GPUs to supercomputers and from modern process technologies and the latest fab tools to high-tech industry trends.


Giroro: You keep using that word "schematic"... I don't think it means what you think it means.

BILL1957: Nvidia has always been crucified for their high power draw numbers; many claimed they would not buy Nvidia for that reason, and now AMD joins them.

Will there be the same outcry from AMD fans now that they are in the same situation?

Eximo: Um, triple 8-pin isn't exactly new to AMD... the 6900XT and 6950XT both have versions with that. Not to mention power profiles similar to the 3080 and 3080 Ti.

The Fury X was pushing 275W, the Fury Duo clocked in at 350W, and the R9 295X2 was a whopping 500W. The 8990 was 375W...

Nvidia wasn't any better: they had plenty of 250W-plus cards, and their dual-GPU cards could demand 375W as well.

JamesJones44:

> BILL1957 said: Nvidia has always been crucified for their high power draw numbers; many claimed they would not buy Nvidia for that reason, and now AMD joins them.
>
> Will there be the same outcry from AMD fans now that they are in the same situation?

Based on the comments about what's happening with the 7000-series CPUs, I doubt it. The sidestepping and twisting to fit fanboyisms on all sides (AMD, Intel, and Nvidia) is always a popcorn-worthy event, though.

blacknemesist: Good to see there will be alternatives to the 12-pin BS. Some of us already have good, expensive, and reliable PSUs. Dropping another sack of money on something you will only use for the GPU (considering CPU and mobo prices for AM5, which most gamers don't even need and which are extremely expensive) is just a plain bad choice, especially because in as little as a couple of months affordable PSUs and other "new" components will drop.

The power draw is inevitably going to go higher and higher; thinking otherwise when the technology hasn't made a 180° on the architecture is just wishful thinking. In fact, with ATX 3.0 we are going the opposite way, delivering 600W+ to a GPU in less than 10ms, otherwise the system crashes.

BILL1957:

> blacknemesist said: Good to see there will be alternatives to the 12-pin BS. Some of us already have good, expensive, and reliable PSUs. Dropping another sack of money on something you will only use for the GPU (considering CPU and mobo prices for AM5, which most gamers don't even need and which are extremely expensive) is just a plain bad choice, especially because in as little as a couple of months affordable PSUs and other "new" components will drop.
>
> The power draw is inevitably going to go higher and higher; thinking otherwise when the technology hasn't made a 180° on the architecture is just wishful thinking. In fact, with ATX 3.0 we are going the opposite way, delivering 600W+ to a GPU in less than 10ms, otherwise the system crashes.

When I did my most recent Alder Lake build, I oversized the PSU relative to what I thought I "needed" and made sure it had ample amps on the 12V output and three separate 8-pin connectors available for plugging in the 12-pin adapter for the GPU.

I also bought a solid, well-respected name brand and spent a little more for a Platinum unit over the Gold model, mostly because these models are generally supposed to use slightly better-grade components, which contribute to the better efficiency ratings.

I was originally waiting for the 4080 to drop, but with the recent news that there will now be a lower-performing 12GB 4080 (which of course will be the cheapest model), open questions about release dates and initial product availability, and pricing still unknown, last week I got off the GPU merry-go-round and purchased one of the 3090 Ti cards at their new price point.

Now, with yesterday's news that EVGA is leaving the GPU market, I think initial 40-series availability may be affected, because, at least here in the U.S.A., reports say EVGA cards represent about 40% of the Nvidia cards sold in North America.

It will be interesting going forward.

My computer upgrades are done for at least the next two to three years, so it does feel better just being a spectator now.

TerryLaze:

> blacknemesist said: The power draw is inevitably going to go higher and higher; thinking otherwise when the technology hasn't made a 180° on the architecture is just wishful thinking...

There is no 180 to be had here; there is no magical way to make things go faster without using more power.

If you want a 180, YOU will have to make it; don't wait for the companies to do it for you.

Get a 1080p card instead of a 4K card and you will be using far less power.

Upscaling has become much better, so use it. If you want max ultra settings at 4K, high power draw is the only way there is.

blacknemesist:

> TerryLaze said: There is no 180 to be had here; there is no magical way to make things go faster without using more power.
>
> If you want a 180, YOU will have to make it; don't wait for the companies to do it for you.
>
> Get a 1080p card instead of a 4K card and you will be using far less power.
>
> Upscaling has become much better, so use it. If you want max ultra settings at 4K, high power draw is the only way there is.

There is a thing called efficiency, which lets something that required 500W 20 years ago use 100W now and be better. TVs are a good example: plasma TVs were power-sucking monsters compared to LED. That won't happen with GPUs or CPUs until there is no way left to cool the cards or the system; that's when they will have to start using a different approach to make them efficient. The predicted jump in power, and the fast power-response demands that only ATX 3.0 can meet, do not sound even a little bit efficient if the leaked specs are to be taken as a possibility.

Geef:

> and pricing "leaks" are basically just guessing at this stage.

I know the price. It is more than I can afford!