AMD Engineers Show Off 'Infinitely' Stackable AM5 Chipset Cards

All the IO you can stack

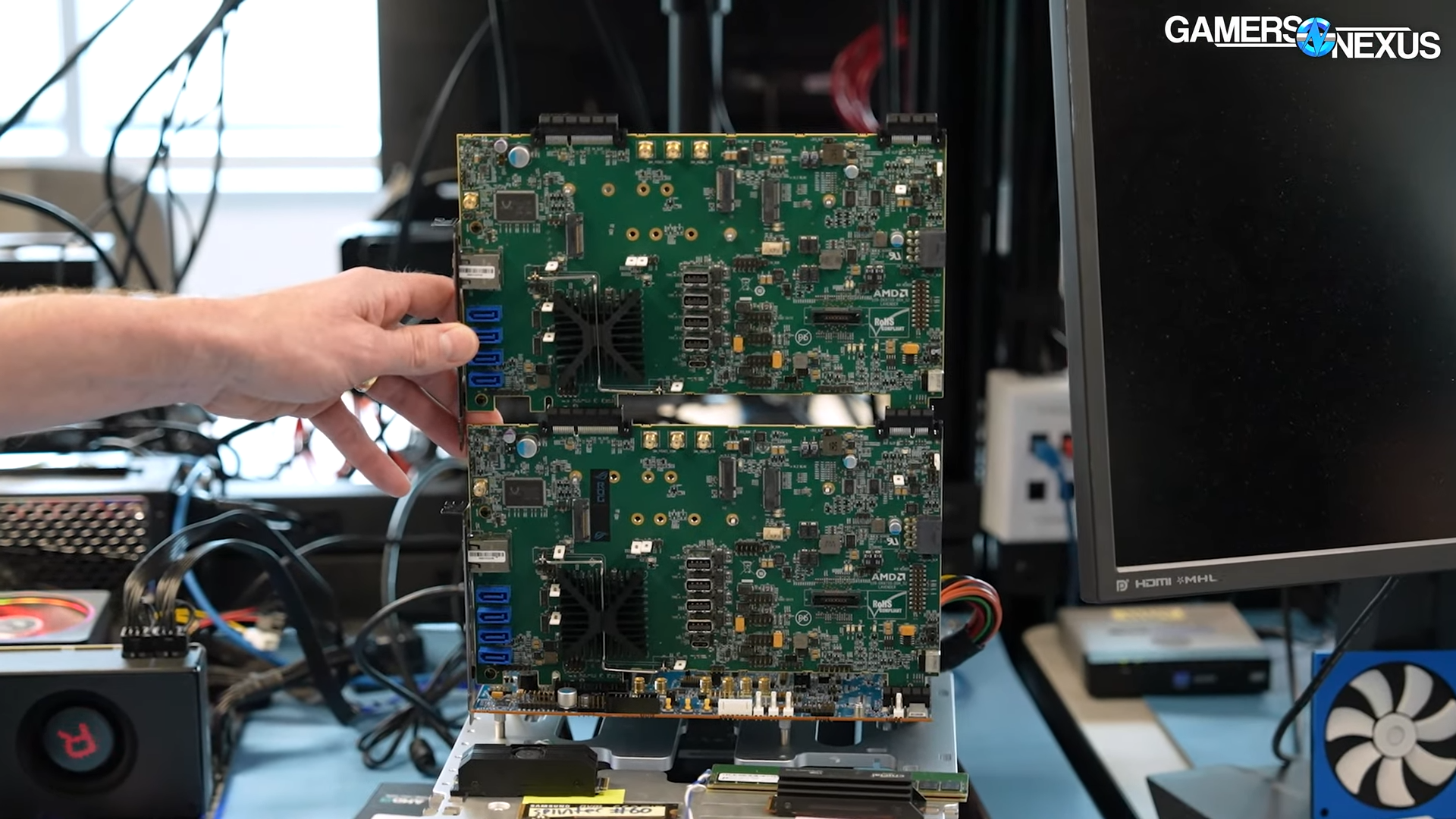

In a recent AMD lab tour documented by Gamers Nexus, AMD engineers showed off some wild equipment used to test chipset functionality on AM5 motherboards. The equipment takes the form of slottable, stackable chipset cards that can simulate all of AMD's AM5 chipsets, from B650 all the way up to X670E, and can replicate any I/O configuration motherboard manufacturers might put on their AM5 boards.

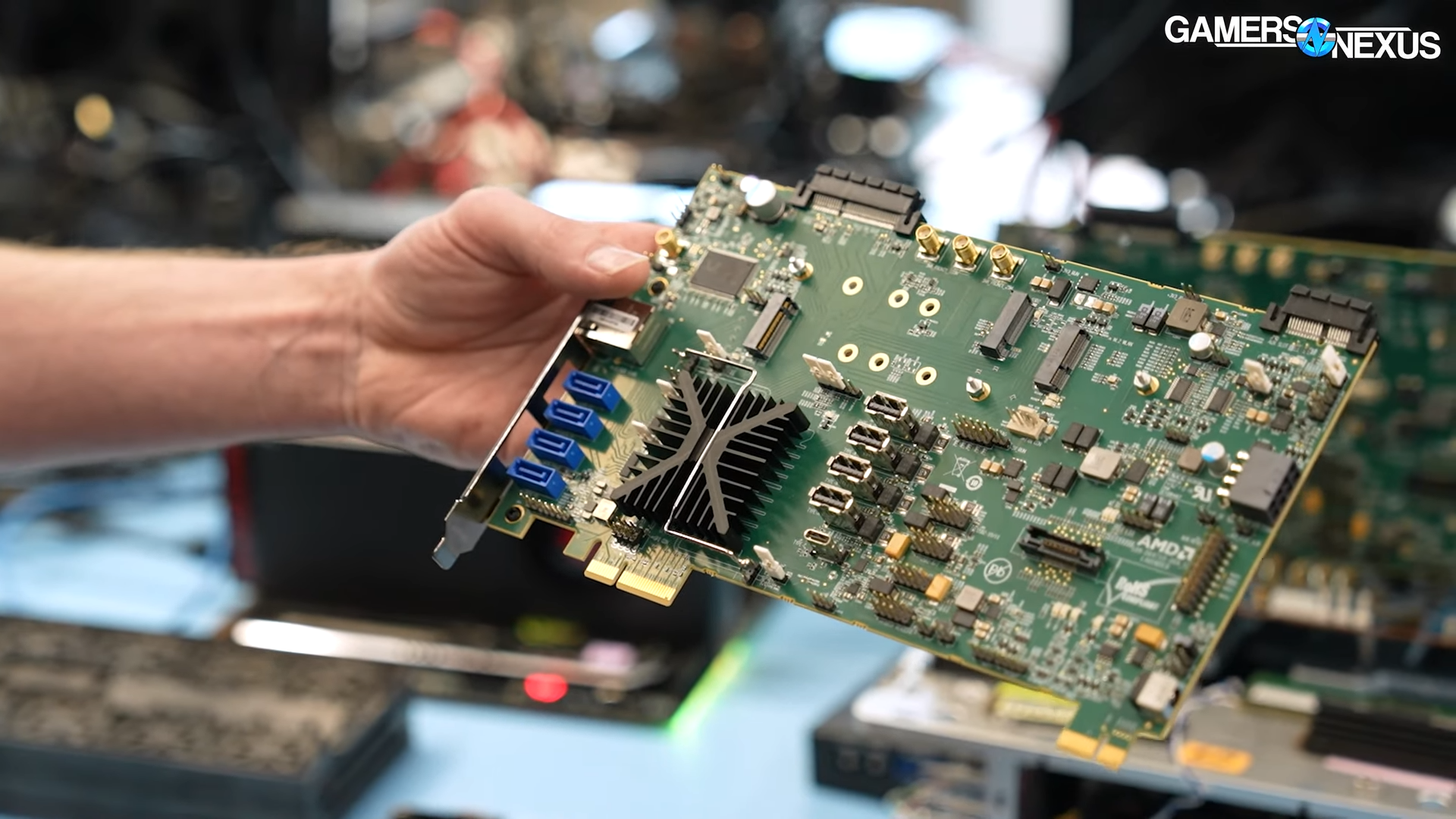

According to AMD's engineers, the chipset cards run on a reference AM5 motherboard that has no chipset of its own but has additional PCIe slots for housing the custom chipset cards. The cards come with a plethora of additional I/O connectivity, including SATA ports, several different versions of USB, M.2 storage, Ethernet, and more. AMD's chipset cards can also be stacked on top of each other to add even more connectivity.

AMD's custom chipset cards make it super convenient to test the capabilities of its latest AM5 platform and Ryzen 7000 series processors. The cards can simulate any number of I/O configurations, including B650, B650E, X670, and X670E motherboards, and verify that the PCIe slots, controllers, and other I/O circuitry are all functioning properly. They also allow AMD to test new chipset designs without replacing its reference (test) boards, which lowers costs.

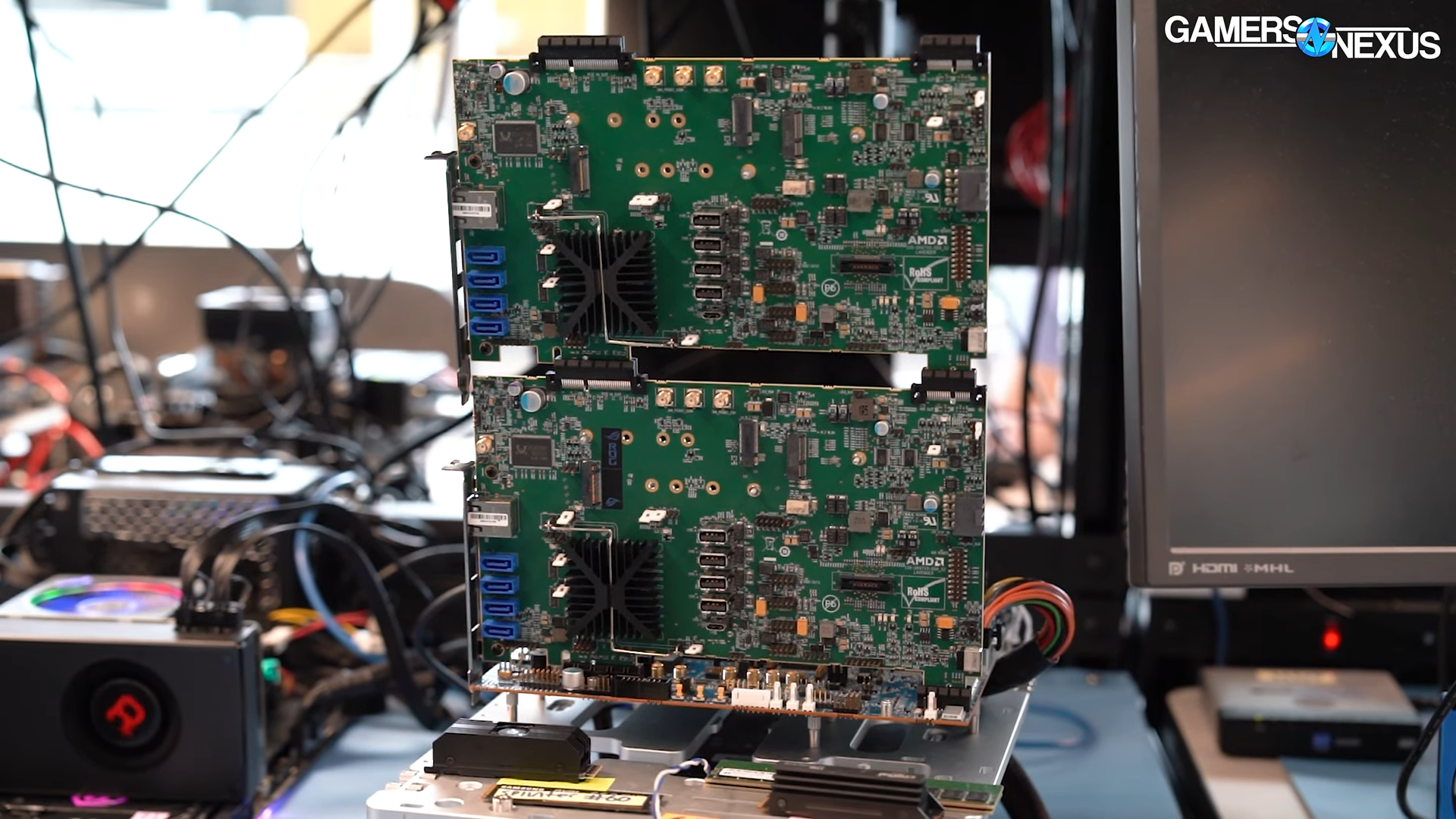

The wildest part of AMD's chipset cards is that they can be "infinitely" stacked to create more I/O than a standard AM5 chipset would offer. AMD's engineers did not say how many cards they were able to stack, but it appears AMD can chain an unlimited number of cards together, as long as they stay within the limits of the motherboard's PCIe Gen 4 interface.

AMD's stackable cards really show off the strengths of its multi-chipset design, which it introduced with its AM5 platform. Stacking multiple chipsets is not only cost-effective for consumer AM5 motherboards but also useful for testing. We've even seen some AMD motherboard partners take inspiration from AMD's stackable solution and create their own X670 expansion kits that can turn B650 motherboards into their higher-end counterparts.


Aaron Klotz is a contributing writer for Tom's Hardware, covering news related to computer hardware such as CPUs and graphics cards.

-

Kamen Rider Blade

With the popularity of PCIe x16 Riser cards that allow a user to move the Video Card to a different location, why don't we save the end user some $$$ by just moving the PCIe x16 Slot to the bottom of the MoBo, regardless of MoBo Form Factor?

This way the HeatSink & Fans will hang off the MoBo area w/o blocking any existing MoBo PCIe Expansion Card Slots and you can start routing the cooling out of the case through the sides and back.

It's a Win/Win situation for everybody.

All you need is a slightly larger form factor case to handle your existing MoBo Form Factor.

With the advent of 2-5 slot Video Cards, I think it's a good way to get back the Expansion Card slots that are traditionally blocked by your Video Card due to its THICC by design nature.

brandonjclark

Are these just to test functionality? Seems like the bus speeds wouldn't be the same, so performance testing would be out of the question, right?

Correct me if I'm wrong, nerds!

TerryLaze

> Kamen Rider Blade said:
>
> With the popularity of PCIe x16 Riser cards that allow a user to move the Video Card to a different location, why don't we save the end user some $$$ by just moving the PCIe x16 Slot to the bottom of the MoBo, regardless of MoBo Form Factor?
>
> This way the HeatSink & Fans will hang off the MoBo area w/o blocking any existing MoBo PCIe Expansion Card Slots and you can start routing the cooling out of the case through the sides and back.
>
> It's a Win/Win situation for everybody.
>
> All you need is a slightly larger form factor case to handle your existing MoBo Form Factor.
>
> With the advent of 2-5 slot Video Cards, I think it's a good way to get back the Expansion Card slots that are traditionally blocked by your Video Card due to its THICC by design nature.

Same as with riser cards: longer distance from CPU = more connectivity issues and more things that the maker has to do to make the connection stable.

It's a big added cost.

I mean, look at the card in the article: that's all the stuff needed to make it happen. Granted, that is for a bunch of IO, but even for a GPU alone you would need quite a bit of that.

TerryLaze

> brandonjclark said:
>
> Are these just to test functionality? Seems like the bus speeds wouldn't be the same, so performance testing would be out of the question, right?
>
> Correct me if I'm wrong, nerds!

Depends on what's under that heat sink... If there is a tiny CPU there that can handle the pressure, you would get full speed. Probably not for everything connected to it at the same time, but one card at a time should be relatively easy.

PEnns

> Kamen Rider Blade said:
>
> With the popularity of PCIe x16 Riser cards that allow a user to move the Video Card to a different location, why don't we save the end user some $$$ by just moving the PCIe x16 Slot to the bottom of the MoBo, regardless of MoBo Form Factor?
>
> This way the HeatSink & Fans will hang off the MoBo area w/o blocking any existing MoBo PCIe Expansion Card Slots and you can start routing the cooling out of the case through the sides and back.
>
> It's a Win/Win situation for everybody.
>
> All you need is a slightly larger form factor case to handle your existing MoBo Form Factor.
>
> With the advent of 2-5 slot Video Cards, I think it's a good way to get back the Expansion Card slots that are traditionally blocked by your Video Card due to its THICC by design nature.

Honestly, I am baffled by how little motherboard design has changed in the last, what, 25-30 years?? Of course, it changed (barely) to make use of advancements in PCI speeds or to add an SSD, for example, but one can't really call it a "re-design" in any way! But everything else, from GPU / CPU / RAM / PSU, etc., still follows the same old tired design.

And especially now, when GPUs expect to take over 3-5 slots, or however many slots they wish, and they (and the CPU) need more cooling at the expense of other mobo real estate.

There are so many ways those engineers, busy churning out billions of the same old mobo design, could have made major changes to enhance and streamline the functionality, instead of looking at the same old tired layout, calling it a day, and leaving the end user to deal with the obsolete insanity!

Kamen Rider Blade

> TerryLaze said:
>
> Same as with riser cards: longer distance from CPU = more connectivity issues and more things that the maker has to do to make the connection stable.
>
> It's a big added cost.
>
> I mean, look at the card in the article: that's all the stuff needed to make it happen. Granted, that is for a bunch of IO, but even for a GPU alone you would need quite a bit of that.

I trust the MoBo Maker over the PCIe x16 Riser manufacturer to implement the connectivity correctly & reliably.

If somebody is going to make it work correctly & reliably, it's the MoBo makers; they have the most incentive to do it correctly the first time.

I've seen too many people complain about "Dodgy" PCIe x16 Risers when plugging directly into the MoBo's PCIe x16 slot would've solved the issue.

Kamen Rider Blade

> PEnns said:
>
> Honestly, I am baffled by how little motherboard design has changed in the last, what, 25-30 years?? Of course, it changed (barely) to make use of advancements in PCI speeds or to add an SSD, for example, but one can't really call it a "re-design" in any way! But everything else, from GPU / CPU / RAM / PSU, etc., still follows the same old tired design.

That's why it's called the "ATX Standard".

Common Design Standards allow interchangeable parts from the past to the future.

It's a HUGE benefit to the PC ecosystem.

Proprietary Crap = Middle Finger and should be "OUTLAWed" from being manufactured.

> PEnns said:
>
> And especially now, when GPUs expect to take over 3-5 slots, or however many slots they wish, and they (and the CPU) need more cooling at the expense of other mobo real estate.

But the GPU can easily be moved to the "Bottom Slot".

It's the only device that seems to have an ever-increasing Thermal & Power Consumption rate over time and takes up ever more slots.

Since 5-slot designs have become somewhat common amongst High End Video Cards, we might as well make a slight change to the Placement of the PCIe x16 slot to accommodate them and improve thermals for our PC design.

> PEnns said:
>
> There are so many ways those engineers, busy churning out billions of the same old mobo design, could have made major changes to enhance and streamline the functionality, instead of looking at the same old tired layout, calling it a day, and leaving the end user to deal with the obsolete insanity!

Those engineers' time is being wasted on superficial crap like RGB and stupidly designed Heat Sinks made to look pretty.

Things that aren't really necessary when good ole Basic HeatSinks have worked for ages.

We don't need to include bullets in our Heat Sinks or put fancy art on them.

We need Tried & True reliability, consistency, & flexibility in connections.

TerryLaze

> PEnns said:
>
> Honestly, I am baffled by how little motherboard design has changed in the last, what, 25-30 years?? Of course, it changed (barely) to make use of advancements in PCI speeds or to add an SSD, for example, but one can't really call it a "re-design" in any way! But everything else, from GPU / CPU / RAM / PSU, etc., still follows the same old tired design.
>
> And especially now, when GPUs expect to take over 3-5 slots, or however many slots they wish, and they (and the CPU) need more cooling at the expense of other mobo real estate.
>
> There are so many ways those engineers, busy churning out billions of the same old mobo design, could have made major changes to enhance and streamline the functionality, instead of looking at the same old tired layout, calling it a day, and leaving the end user to deal with the obsolete insanity!

Desktop motherboards have stayed the way they are because it's the most efficient way to do things.

If you want a new design mobo, get a new design PC...

This is the "mobo" of the Intel NUC 9, which is a whole PC on a PCI slot card.

https://www.anandtech.com/show/15720/intel-ghost-canyon-nuc9i9qnx-review

TerryLaze

> Kamen Rider Blade said:
>
> I trust the MoBo Maker over the PCIe x16 Riser manufacturer to implement the connectivity correctly & reliably.
>
> If somebody is going to make it work correctly & reliably, it's the MoBo makers; they have the most incentive to do it correctly the first time.
>
> I've seen too many people complain about "Dodgy" PCIe x16 Risers when plugging directly into the MoBo's PCIe x16 slot would've solved the issue.

Yes, I'm just saying that it would be a pretty big cost, not that they wouldn't do it well.