Nvidia's DGX-2, the 'World's Largest GPU,' Just Got Faster

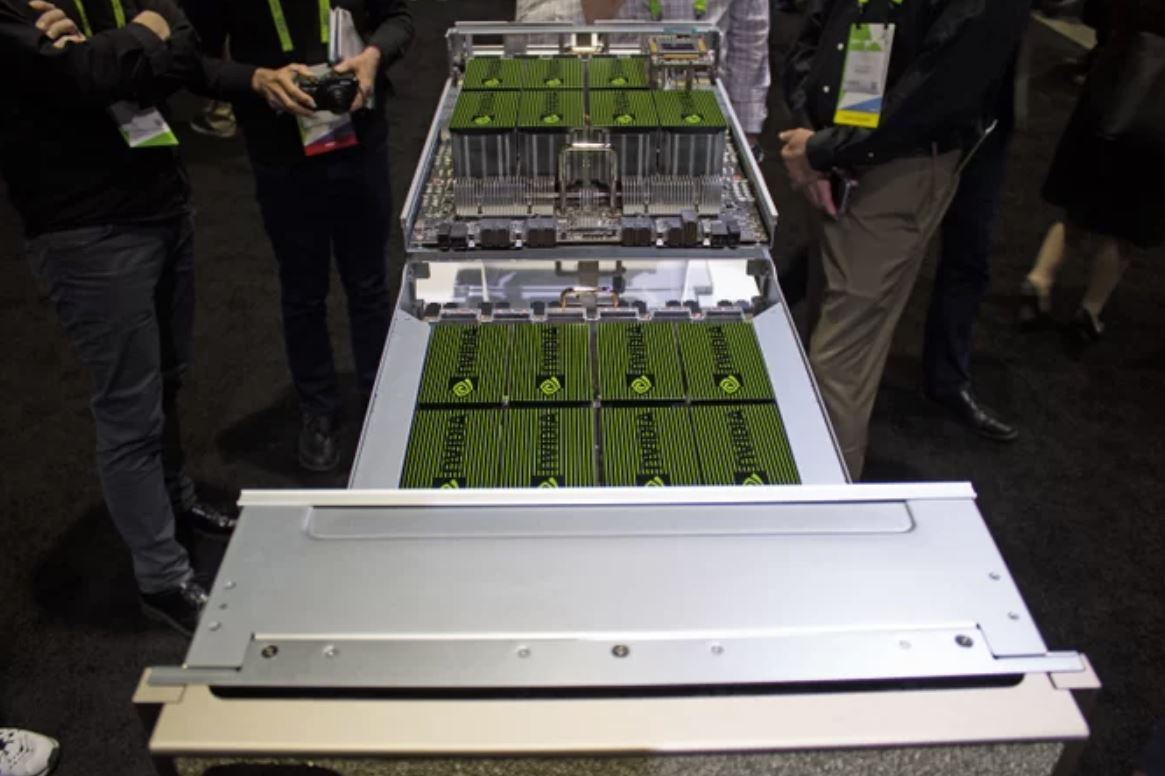

Nvidia CEO Jensen Huang famously introduced the DGX-2 server as the "World's Largest GPU" at GTC 2018. Huang based his fantastic claim on the fact that the system, which actually has 16 powerful Tesla V100 GPUs and a whopping total of 512GB of HBM2 memory, presents itself to the host system as one large GPU with a unified memory space. This massive collection of computing power is used in data centers to churn through demanding machine learning workloads. Now the company has raised the GPUs' power limits to eke out yet more performance.

Our friends at Servethehome.com noticed that Nvidia released a new DGX-2H model with little fanfare. This new model features the same ludicrous allotment of 16 GPUs, wielding a total of 81,920 CUDA cores and 10,240 Tensor cores, but the company has kicked performance up a notch by raising the power limits of the V100 GPUs.
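The system-wide totals follow directly from the per-GPU figures. Here is a quick sketch of that arithmetic, assuming the publicly listed Tesla V100 specifications of 5,120 CUDA cores, 640 Tensor cores, and 32GB of HBM2 per GPU (the per-GPU numbers are assumptions and are not stated in this article):

```python
# Per-GPU figures are assumptions based on Nvidia's public Tesla V100 (32GB) specs.
GPUS = 16
CUDA_CORES_PER_GPU = 5120
TENSOR_CORES_PER_GPU = 640
HBM2_GB_PER_GPU = 32

# Multiply each per-GPU figure by the number of GPUs in the chassis.
total_cuda_cores = GPUS * CUDA_CORES_PER_GPU
total_tensor_cores = GPUS * TENSOR_CORES_PER_GPU
total_hbm2_gb = GPUS * HBM2_GB_PER_GPU

print(f"CUDA cores:   {total_cuda_cores:,}")
print(f"Tensor cores: {total_tensor_cores:,}")
print(f"HBM2 memory:  {total_hbm2_gb}GB")
```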

Nvidia originally bumped the Tesla V100 from 300W to 350W when it launched the DGX-2. Now the company has raised the V100's power limit further to 450W per GPU and also swapped in faster Intel Xeon Platinum 8174 processors, a notable upgrade over the original Xeon Platinum 8168 models.

The higher power limits raise the system's power consumption from 10kW to 12kW and increase performance from 2 petaflops to 2.1 petaflops. That is a small gain, roughly 5%, for a 20% increase in power consumption. However, the performance gains from running the GPUs at higher frequencies may impact some workloads more than others, so the tests used for performance measurements may not fully quantify the benefit of two extra kilowatts of power consumption.
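To put the trade-off in numbers, here is a rough back-of-the-envelope sketch using only the system-level figures quoted above. It assumes the petaflops ratings and power figures are directly comparable peak values, which may not hold for real workloads; the helper name `pct_change` is purely illustrative.

```python
def pct_change(old, new):
    """Return the percentage change from old to new."""
    return (new - old) / old * 100

# System-level figures quoted in the article.
POWER_KW_OLD, POWER_KW_NEW = 10.0, 12.0
PERF_PFLOPS_OLD, PERF_PFLOPS_NEW = 2.0, 2.1

power_increase = pct_change(POWER_KW_OLD, POWER_KW_NEW)
perf_increase = pct_change(PERF_PFLOPS_OLD, PERF_PFLOPS_NEW)

# Peak efficiency in petaflops per kilowatt, before and after.
eff_old = PERF_PFLOPS_OLD / POWER_KW_OLD
eff_new = PERF_PFLOPS_NEW / POWER_KW_NEW
eff_change = pct_change(eff_old, eff_new)

print(f"Power increase:       {power_increase:.1f}%")
print(f"Performance increase: {perf_increase:.1f}%")
print(f"Efficiency change:    {eff_change:.1f}%")
```

By this rough measure, peak performance per kilowatt drops by about an eighth, which is the usual cost of pushing silicon further up its voltage/frequency curve.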

Cramming 12kW of power consumption into an 8U chassis is quite the feat: The original DGX-2 even uses a 48V power distribution subsystem to reduce the amount of current needed to drive the system. The extra power also means more heat to dissipate, but Nvidia hasn't commented on whether or not the system will need a beefed-up cooling system to handle it.
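The benefit of 48V distribution comes down to I = P / V. The sketch below is a simplified illustration that assumes the full 12kW flows over a single rail with no conversion losses; the 12V comparison is a hypothetical baseline, not a description of any actual Nvidia design.

```python
def rail_current_amps(power_watts, rail_volts):
    """Current needed to deliver power_watts at rail_volts (I = P / V)."""
    return power_watts / rail_volts

SYSTEM_POWER_W = 12_000  # 12kW, as quoted for the DGX-2H

current_48v = rail_current_amps(SYSTEM_POWER_W, 48)
current_12v = rail_current_amps(SYSTEM_POWER_W, 12)  # hypothetical 12V baseline

# Resistive loss in a conductor scales with the square of the current (P = I^2 * R),
# so for the same wiring resistance the loss ratio is the current ratio squared.
loss_ratio = (current_12v / current_48v) ** 2

print(f"Current at 48V: {current_48v:.0f}A")
print(f"Current at 12V: {current_12v:.0f}A")
print(f"Relative resistive loss at 12V vs. 48V: {loss_ratio:.0f}x")
```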

Either way, Nvidia's choice to push the voltage/frequency curve higher to wring more performance out of its GPUs is one of the few easy steps left to increase performance for the now-mature design. The company already increased HBM2 capacity from 16GB to 32GB per GPU earlier this year. Even after the debut of the newer Turing architecture, Nvidia has reiterated several times that the Volta V100 GPUs will continue to be its solution for AI workloads, so this may be a stopgap measure until it introduces a new architecture for machine learning workloads.

The original DGX-2 retails for $400,000, and Nvidia hasn't yet responded to our request for updated pricing for the DGX-2H. But as the saying goes, if you have to ask, you probably can't afford it.

Paul Alcorn is the Editor-in-Chief for Tom's Hardware US. He also writes news and reviews on CPUs, storage, and enterprise hardware.