Supermicro 1024US-TRT Server Review: 128 Cores in a 1U Chassis

Rack 'em and stack 'em

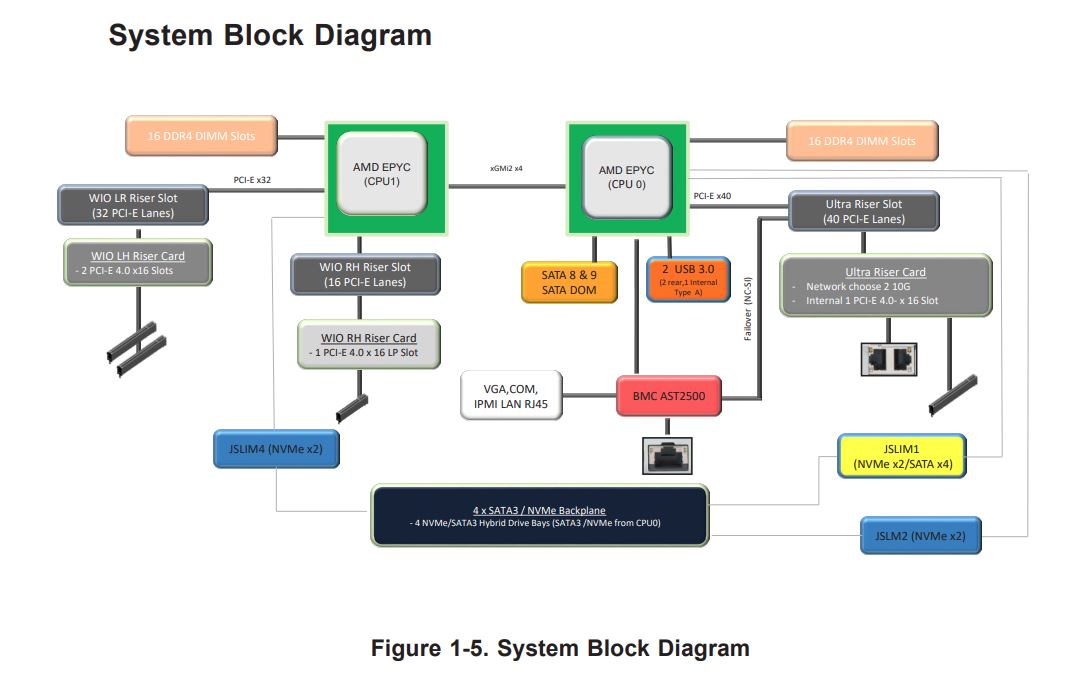

The push for enhanced compute density continues, and servers like Supermicro's 1024US-TRT, which hails from the company's 'A+ Ultra' family, are designed to answer that call with generous compute capabilities paired with copious connectivity options. Supermicro designed this slim 1U dual-socket server for high-density environments in enterprise applications, high-end cloud computing, virtualization, and technical computing workloads. The platform supports up to 8TB of DDR4 memory spread across 32 DIMMs along with plenty of PCIe 4.0 connectivity, dual 10 GbE LAN ports, and up to four NVMe devices in the front bays.

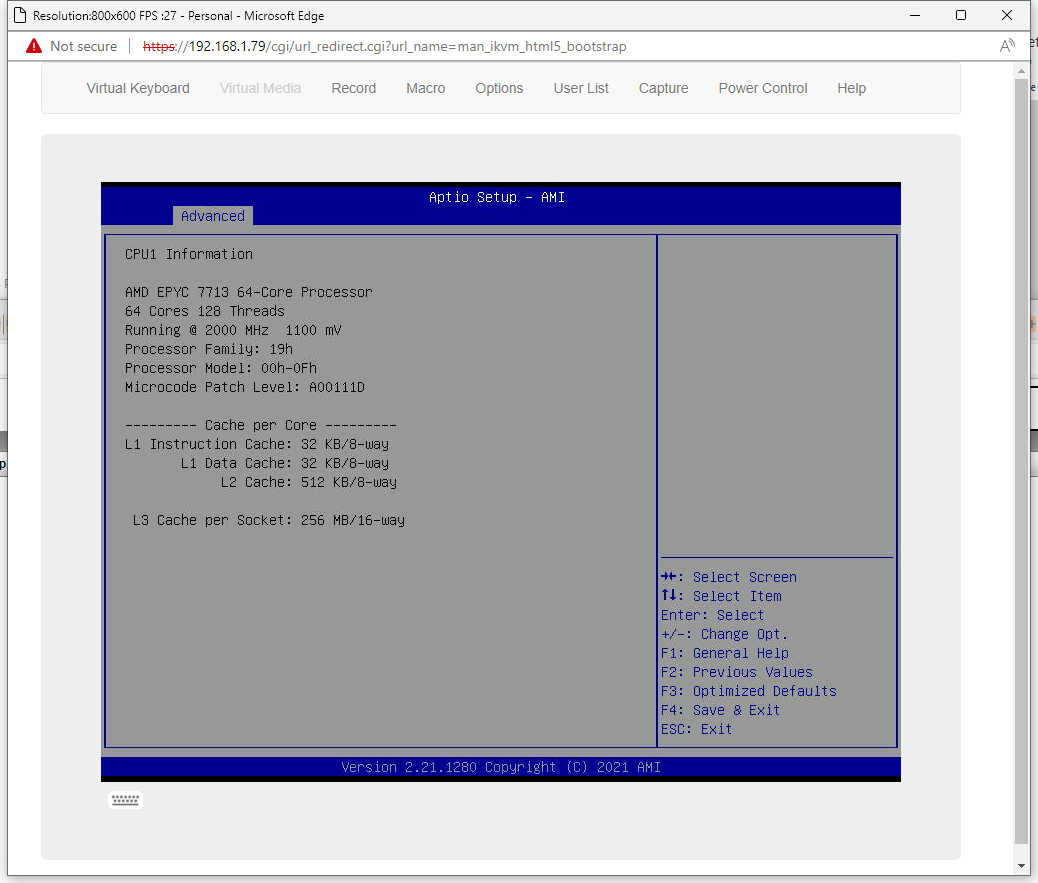

The platform supports dual AMD EPYC 7003 and 7002 processors, meaning each socket can host up to 64 cores and 128 threads with EPYC Milan, Milan-X, or EPYC Rome processors. Supermicro's Intel Ice Lake X12 servers can't match that number of cores and threads in a single platform, so the 1024US-TRT offers the utmost density in its portfolio. Naturally, Supermicro competes with other OEM server vendors, like Lenovo, Dell/EMC, and HPE, in the high-volume general-purpose 1U realm with the 1024US-TRT.

AMD's EPYC Genoa will launch later this year to compete with Intel's incessantly delayed Sapphire Rapids, setting the stage for either AMD's continued dominance or an Intel resurgence. As we wait for those launches, here's a look at some of our benchmarks and the current state of the data center CPU performance hierarchy in several hotly contested price ranges.

Supermicro 1024US-TRT Server

The Supermicro 1024US-TRT server comes in the 1U form factor, enabling incredible density. The server supports AMD's EPYC 7002 and 7003 processors that top out at 64 cores apiece, translating to 128 cores and 256 threads spread across the dual sockets. The platform also supports AMD's Milan-X chips (BIOS version 2.3 or newer), which come with up to 64 cores and 128 threads paired with a once-unthinkable 768MB of L3 cache. These chips extend the 1024US-TRT's reach beyond its traditional target markets into technical computing workloads like Electronic Design Automation (EDA), Computational Fluid Dynamics (CFD), Finite Element Analysis (FEA), and structural analysis.
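To put those figures in perspective, here's a quick sketch that totals the per-socket specs across both sockets. It assumes two-way SMT on every core and uses the Milan-X layout of eight CCDs, each pairing 32MB of on-die L3 with 64MB of stacked 3D V-Cache; the `EpycSku` and `platform_totals` names are ours, purely for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class EpycSku:
    name: str
    cores: int                  # physical cores per socket
    l3_mb: int                  # L3 cache per socket, in MB
    threads_per_core: int = 2   # SMT: two hardware threads per core


def platform_totals(sku: EpycSku, sockets: int = 2) -> dict[str, int]:
    """Aggregate per-socket figures across every populated socket."""
    cores = sku.cores * sockets
    return {
        "cores": cores,
        "threads": cores * sku.threads_per_core,
        "l3_mb": sku.l3_mb * sockets,
    }


# Milan-X per socket: eight CCDs, each with 32MB of on-die L3
# plus 64MB of stacked 3D V-Cache.
MILAN_X_L3_MB = 8 * (32 + 64)  # 768

epyc_7713 = EpycSku("EPYC 7713", cores=64, l3_mb=256)
milan_x_64c = EpycSku("64-core Milan-X", cores=64, l3_mb=MILAN_X_L3_MB)

print(platform_totals(epyc_7713))    # {'cores': 128, 'threads': 256, 'l3_mb': 512}
print(platform_totals(milan_x_64c))  # {'cores': 128, 'threads': 256, 'l3_mb': 1536}
```

Note that the 768MB figure is per socket, so a dual-socket Milan-X configuration exposes twice that across the whole server.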

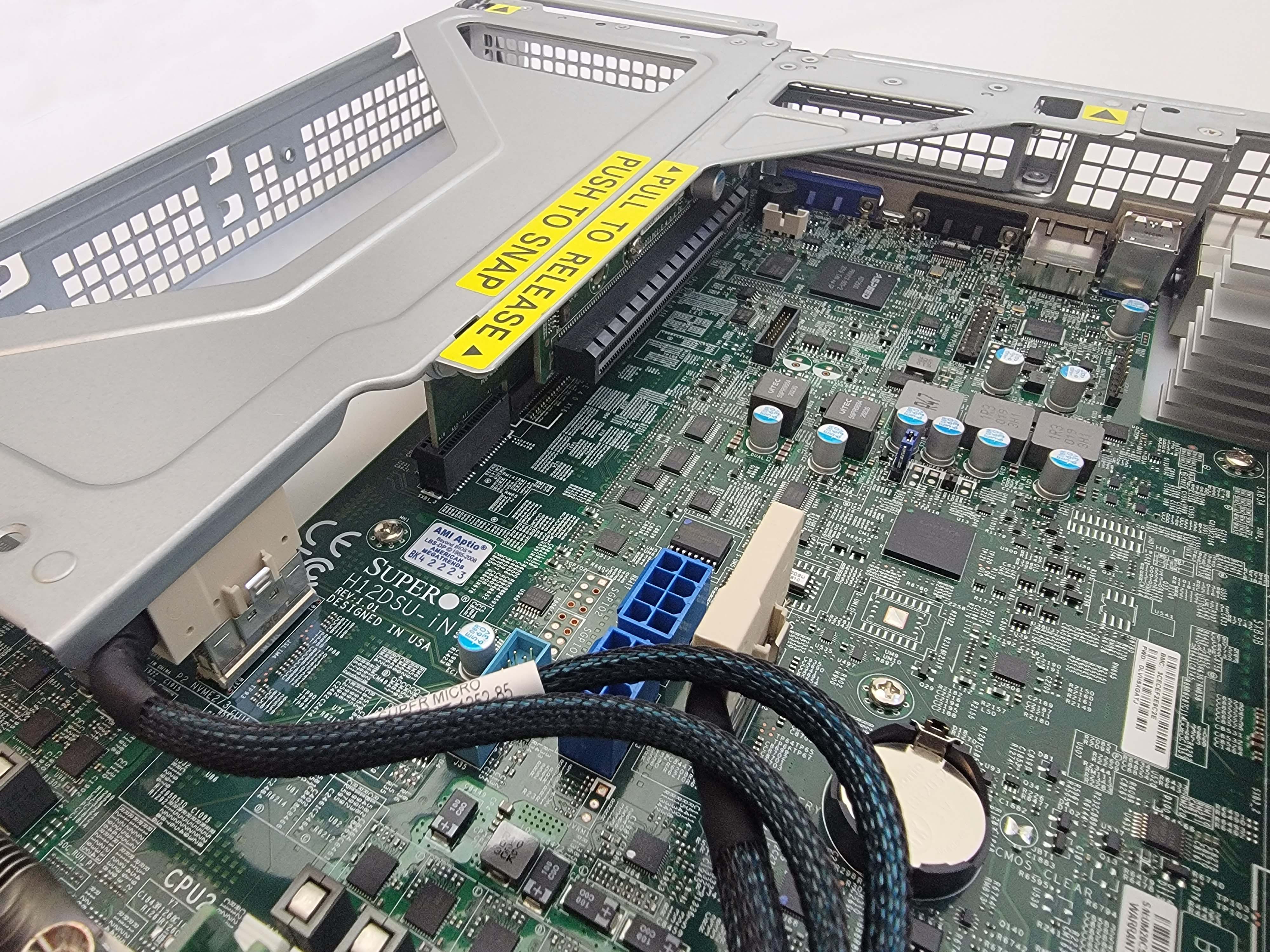

The 1024US-TRT has a tool-less rail mounting system with square pegs that eases installation into server racks, and the CSE-819UTS-R1K02P-A chassis measures 1.7 x 17.2 x 29 inches and slides into 19" racks.

The server accommodates CPU TDPs that stretch up to 280W, but using chips beyond 225W requires special accommodations. As such, the server can technically support the most powerful EPYC processors, like the 7Hxx-series models, but you'll need to verify those configurations with Supermicro.
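The CPU support rules boil down to a few checks. The following is a hypothetical helper, not a Supermicro tool. It encodes only what's stated in this review: EPYC 7002/7003 support, the BIOS 2.3 requirement for Milan-X, the 280W ceiling, and the 225W threshold for special accommodations. It also assumes BIOS versions are plain dotted numbers like "2.3".

```python
from __future__ import annotations

from enum import Enum


class Support(Enum):
    SUPPORTED = "supported"
    VERIFY = "verify the configuration with Supermicro"
    UNSUPPORTED = "unsupported"


def parse_bios(version: str) -> tuple[int, ...]:
    """'2.3' -> (2, 3); assumes purely numeric, dot-separated versions."""
    return tuple(int(part) for part in version.split("."))


def check_cpu(series: str, tdp_w: int, milan_x: bool, bios: str) -> Support:
    if series not in {"7002", "7003"}:
        return Support.UNSUPPORTED          # only EPYC 7002/7003 are listed
    if milan_x and parse_bios(bios) < (2, 3):
        return Support.UNSUPPORTED          # Milan-X needs BIOS 2.3 or newer
    if tdp_w > 280:
        return Support.UNSUPPORTED          # above the 280W ceiling
    if tdp_w > 225:
        return Support.VERIFY               # 225W-280W needs special accommodations
    return Support.SUPPORTED


print(check_cpu("7003", 225, milan_x=False, bios="2.0").value)  # supported
print(check_cpu("7003", 280, milan_x=True, bios="2.3").value)   # verify the configuration with Supermicro
print(check_cpu("7003", 280, milan_x=True, bios="2.1").value)   # unsupported
```

Comparing versions as integer tuples rather than strings keeps a hypothetical "2.10" from sorting below "2.3".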

The front panel comes with standard indicator lights, like a color-coded information light that indicates various types of failures and overheating while also serving as a unit identification LED. It also includes hard drive activity, system power, and two LAN activity LEDs. Power, reset, and unit identification (UID) buttons are also present at the upper right of the front panel, with the latter illuminating a light on the rear of the server for easy location of the unit in a packed rack.

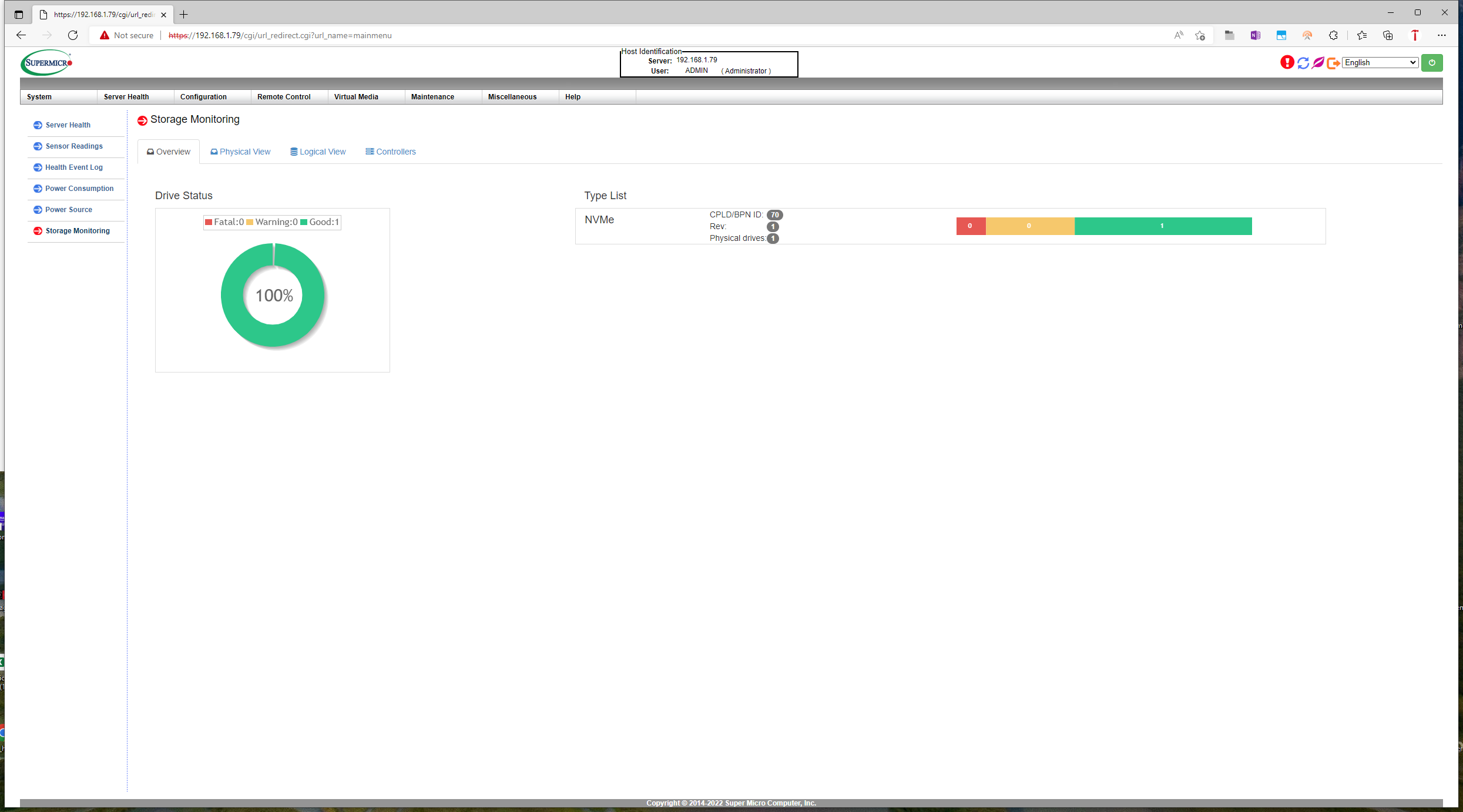

By default, the system has four tool-less 3.5-inch hot-swap SATA 3 drive bays, but you can configure the server to accept four NVMe drives on the front panel (you use 2.5" adaptors for the SSDs). We tested with a PCIe 4.0 Kioxia 1.92TB KCD6XLUL1T92 SSD. You can also add an optional SAS card to enable support for SAS storage devices and an optical drive. The front of the system also houses a slide-out service/asset tag identifier card to the upper left. This is important as it holds the default BMC user ID and password, enabling access to the remote management features.


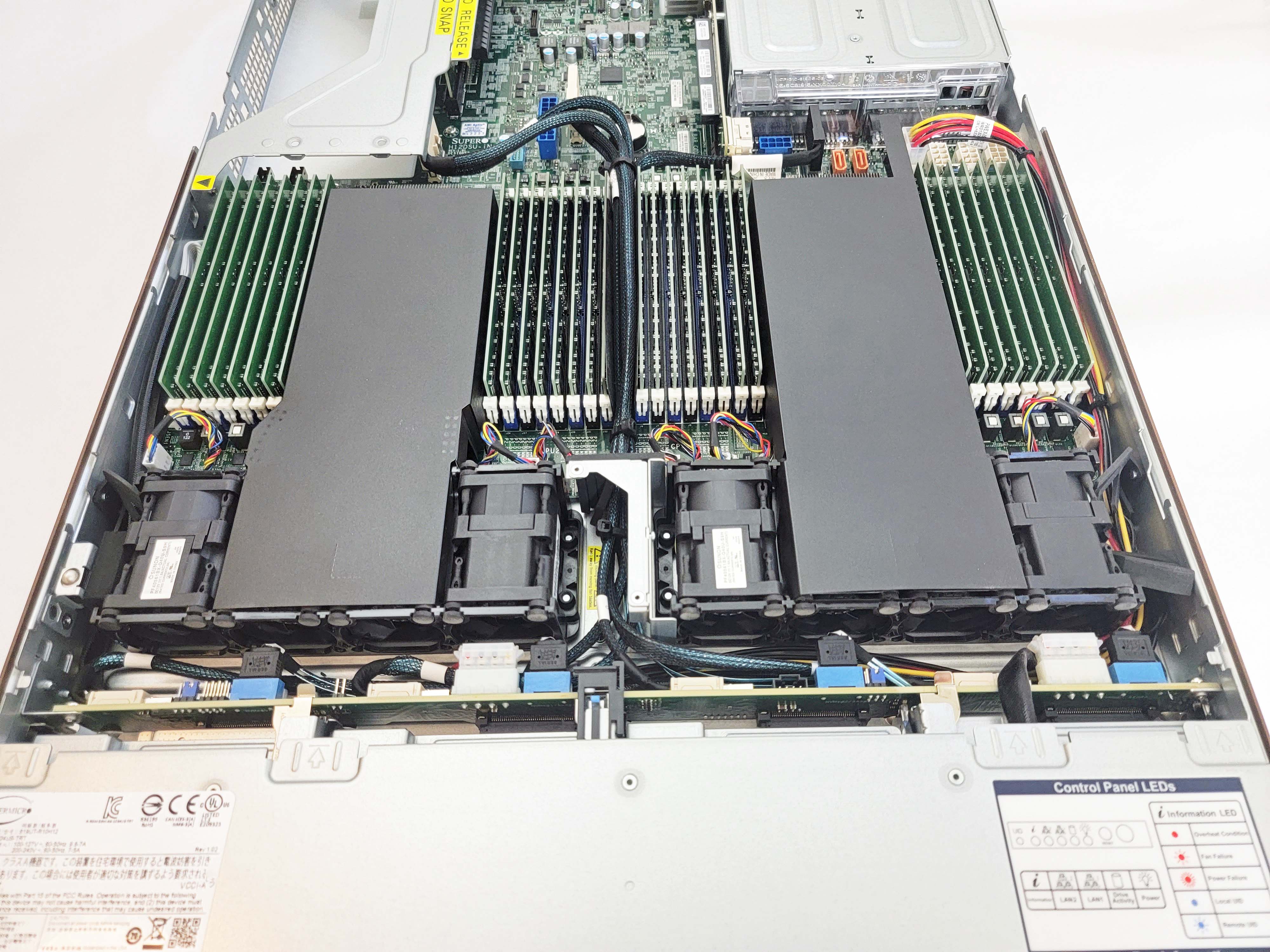

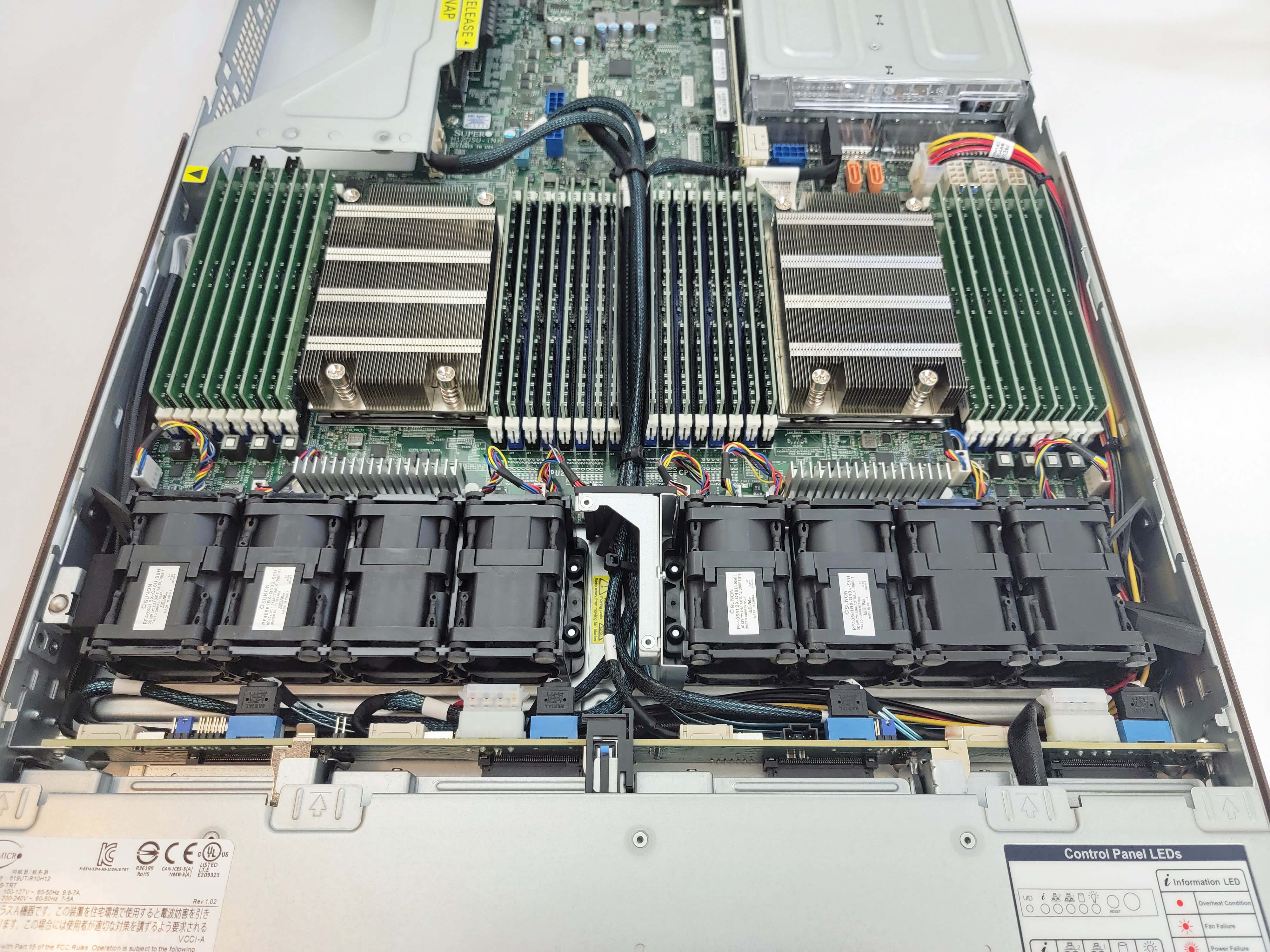

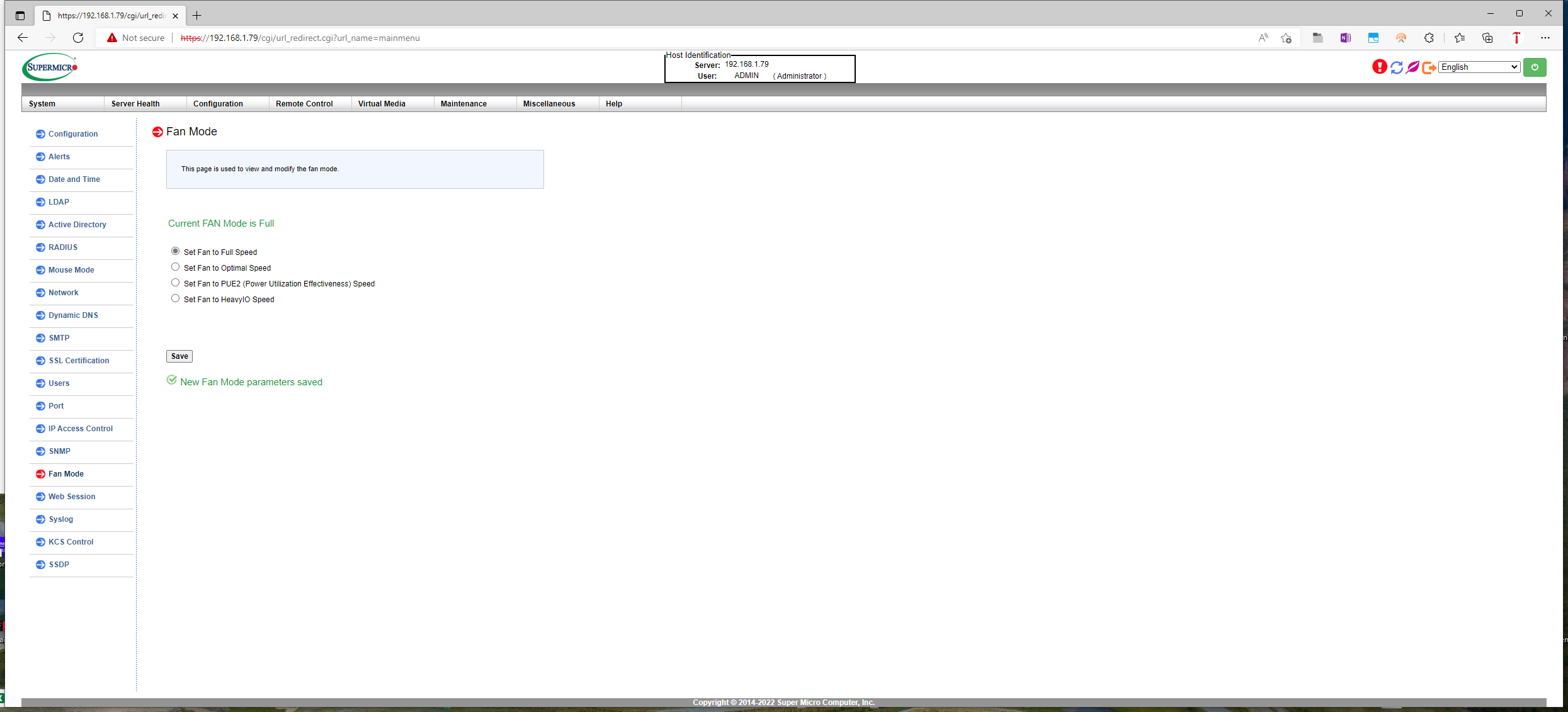

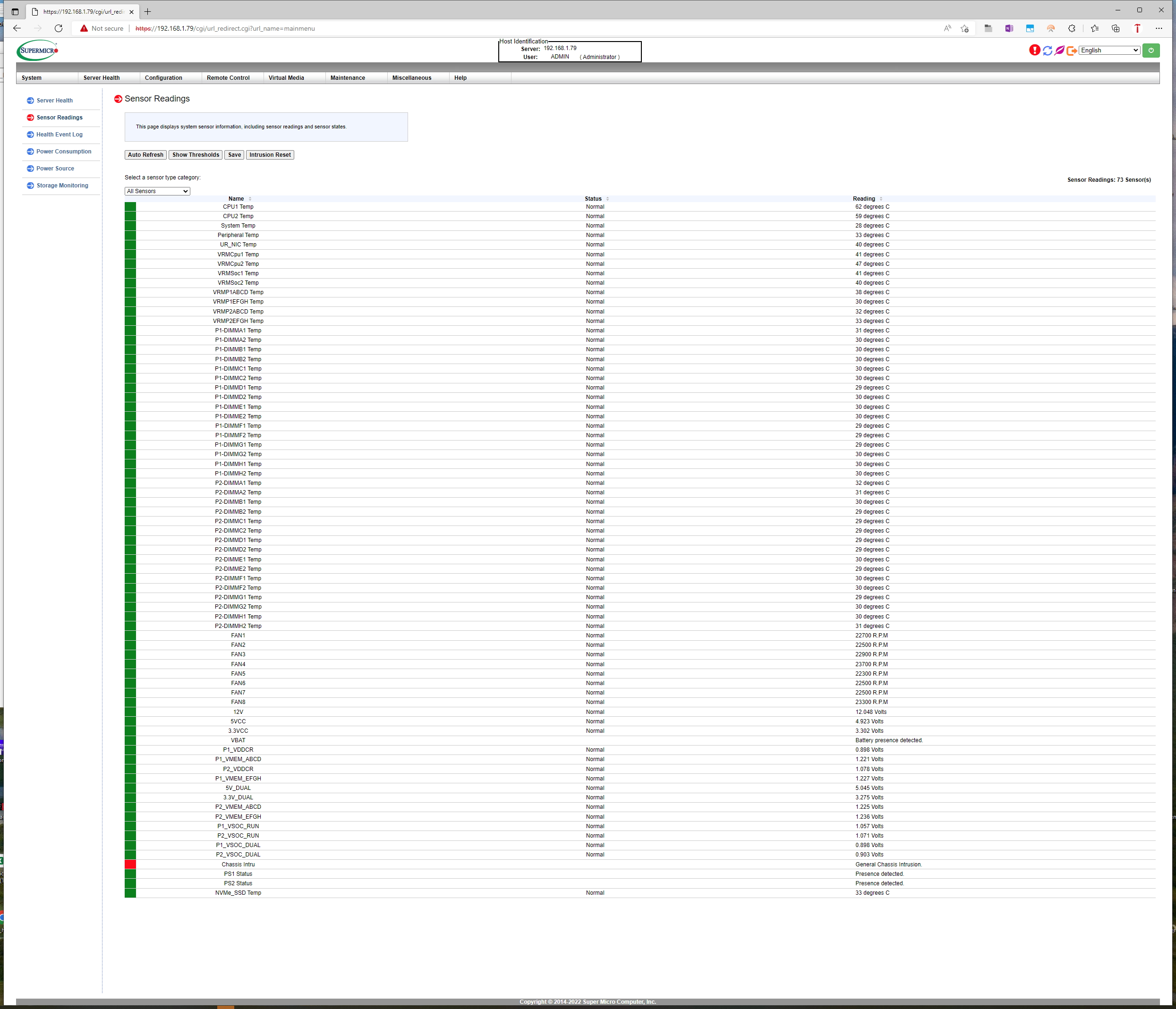

Popping the top off the chassis reveals two housings that hold fans. A total of eight fans feed air to the system, and each housing includes four Sunon 23,300 RPM counter-rotating 40 x 40 x 56 mm fans for maximum static pressure and reduced vibration. As expected with servers intended for 24/7 operation, the system can continue to function in the event of a fan failure. However, the remaining fans will automatically run at full speed if the system detects a failure. Naturally, these fans are loud, but that's not a concern in a data center. You manage the fan speed and profiles via the BMC (not the BIOS).
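The failure behavior can be sketched as a simple policy. This is an illustration of the idea, not the BMC's actual firmware logic. The `fan_duties` function and the 1,000 RPM failure threshold are hypothetical.

```python
from __future__ import annotations


def fan_duties(rpms: list[int], profile_duty: int, min_ok_rpm: int = 1000) -> list[int]:
    """Return a duty cycle (percent) for each fan.

    Any fan spinning below min_ok_rpm is treated as failed, and every
    fan is then driven at 100%; otherwise the profile's duty is applied.
    """
    if not 0 <= profile_duty <= 100:
        raise ValueError("profile_duty must be 0-100")
    if any(rpm < min_ok_rpm for rpm in rpms):
        return [100] * len(rpms)
    return [profile_duty] * len(rpms)


healthy = [9000] * 8            # eight fans, all spinning
one_failed = [9000] * 7 + [0]   # one fan stopped
print(fan_duties(healthy, 40))     # [40, 40, 40, 40, 40, 40, 40, 40]
print(fan_duties(one_failed, 40))  # [100, 100, 100, 100, 100, 100, 100, 100]
```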

Four fans cool each CPU, and a simple black plastic shroud directs air to the heatsinks underneath. Dual SP3 sockets house the two processors, which are covered by standard CPU heatsinks optimized for linear airflow.

Sixteen memory slots flank each processor, for a total of 32 slots that support up to an incredible 8TB of ECC DDR4-3200 memory (via 256GB DIMMs), easily outstripping the memory capacity available with competing Intel platforms. We tested the EPYC Milan processors with 16x 16GB SK hynix DDR4-3200 modules for a total memory capacity of 256GB. In contrast, the Ice Lake Xeon comparison platform came with 16x 32GB SK hynix ECC DDR4-3200 modules for a total capacity of 512GB.
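The capacity figures are straightforward multiplication, and the same arithmetic gives a theoretical bandwidth ceiling. This sketch assumes EPYC's eight DDR4 channels per socket and a 64-bit (8-byte) data path per channel, and real-world throughput will land below the computed peak. With 16 DIMMs split across two sockets, our EPYC test configuration places one DIMM in each of the eight channels per socket.

```python
DDR4_BYTES_PER_TRANSFER = 8   # 64-bit data bus per channel (ECC bits excluded)
EPYC_CHANNELS_PER_SOCKET = 8  # EPYC 7002/7003 memory channels per socket


def capacity_gb(dimms: int, dimm_gb: int) -> int:
    return dimms * dimm_gb


def peak_bandwidth_gbs(mt_per_s: int, channels: int) -> float:
    """Theoretical peak in GB/s: MT/s x bytes per transfer x channels / 1000."""
    return mt_per_s * DDR4_BYTES_PER_TRANSFER * channels / 1000


print(capacity_gb(32, 256))  # 8192 -> 8TB with all 32 slots filled by 256GB DIMMs
print(capacity_gb(16, 16))   # 256  -> the EPYC 7713 test configuration
print(capacity_gb(16, 32))   # 512  -> the Xeon Platinum 8380 test configuration
print(peak_bandwidth_gbs(3200, EPYC_CHANNELS_PER_SOCKET))  # 204.8 GB/s per socket
```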

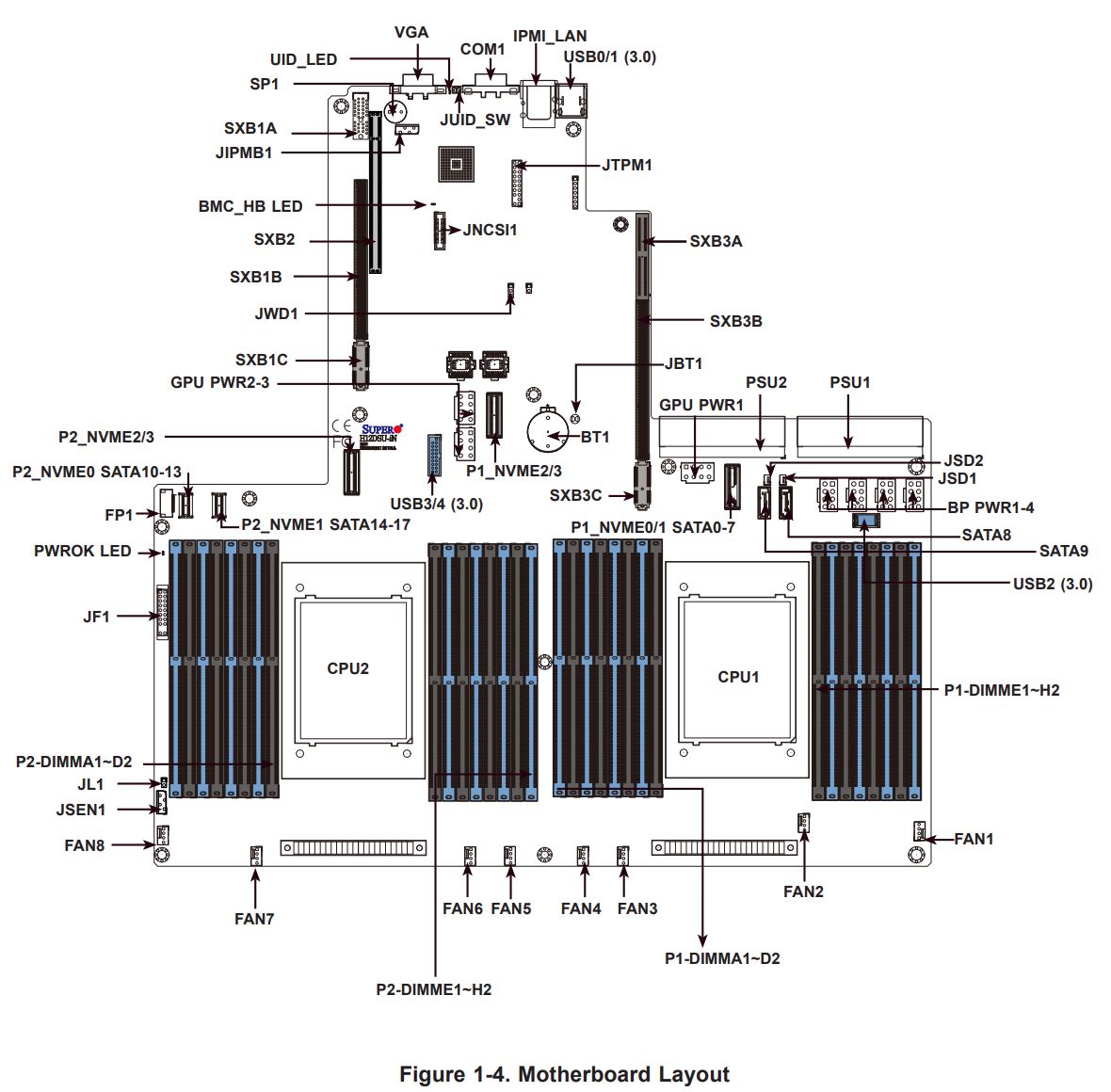

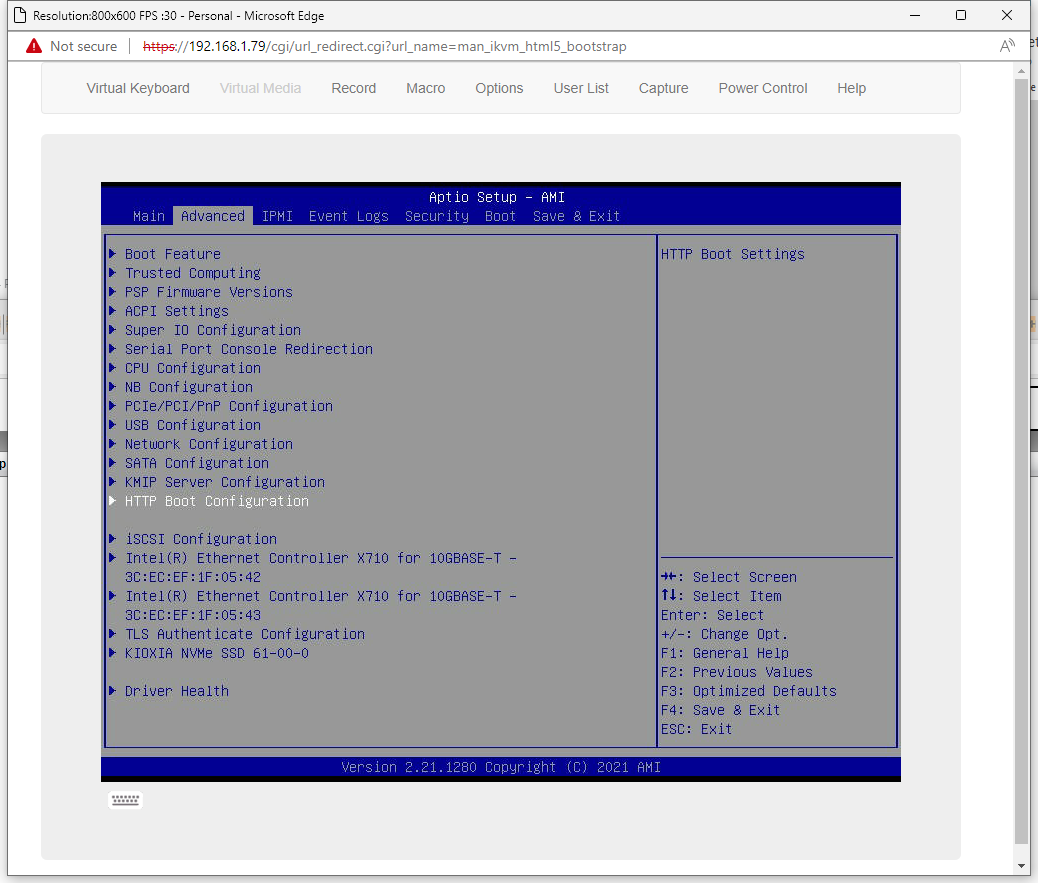

The H12DSU-iN motherboard's expansion slots consist of two full-height 9.5-inch PCIe 4.0 x16 slots and one low-profile PCIe 4.0 x16 slot, all mounted on riser cards. An additional internal PCIe 4.0 x16 slot is also available, but this slot only accepts proprietary Supermicro cards.

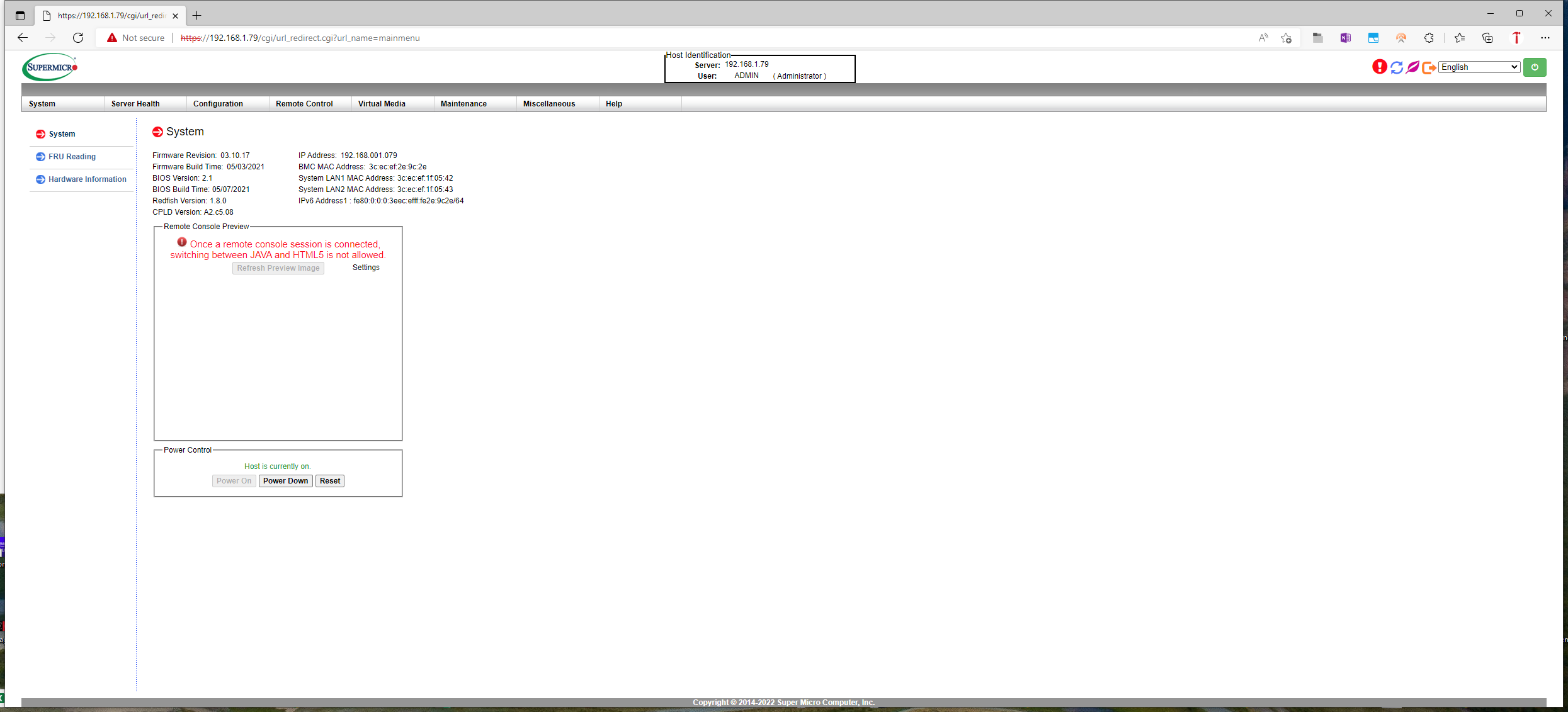

The rear I/O panel includes two 10 gigabit RJ45 LAN ports powered by an Intel X710-AT2 NIC, along with a dedicated RJ45 IPMI LAN port for management. Here we also find the only USB ports on the machine, two USB 3.0 ports (it's a pity there isn't a USB port on the front), along with a COM port and a VGA port.

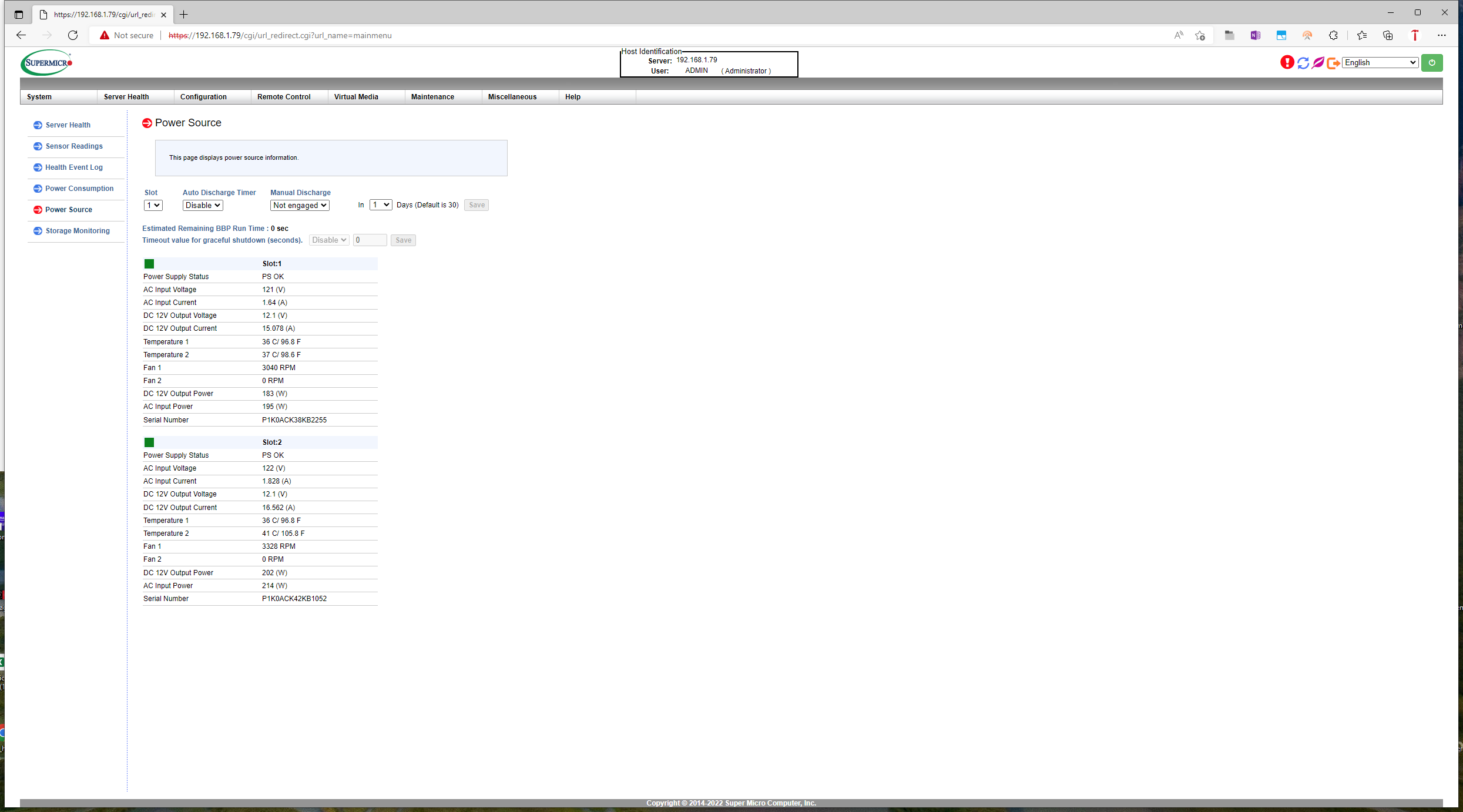

Two 1000W 80 Plus Titanium-level redundant power supplies with PMBus provide power to the server, with automatic failover in the event of a failure and hot-swappability for easy servicing.

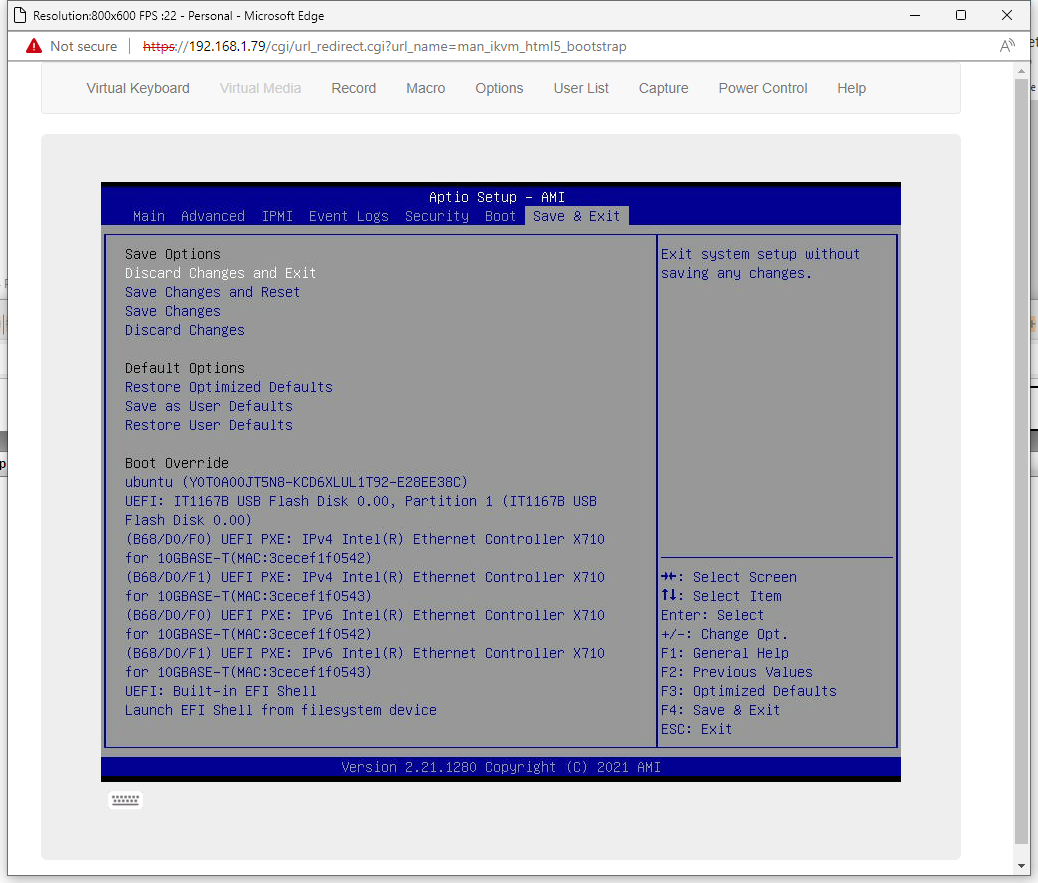

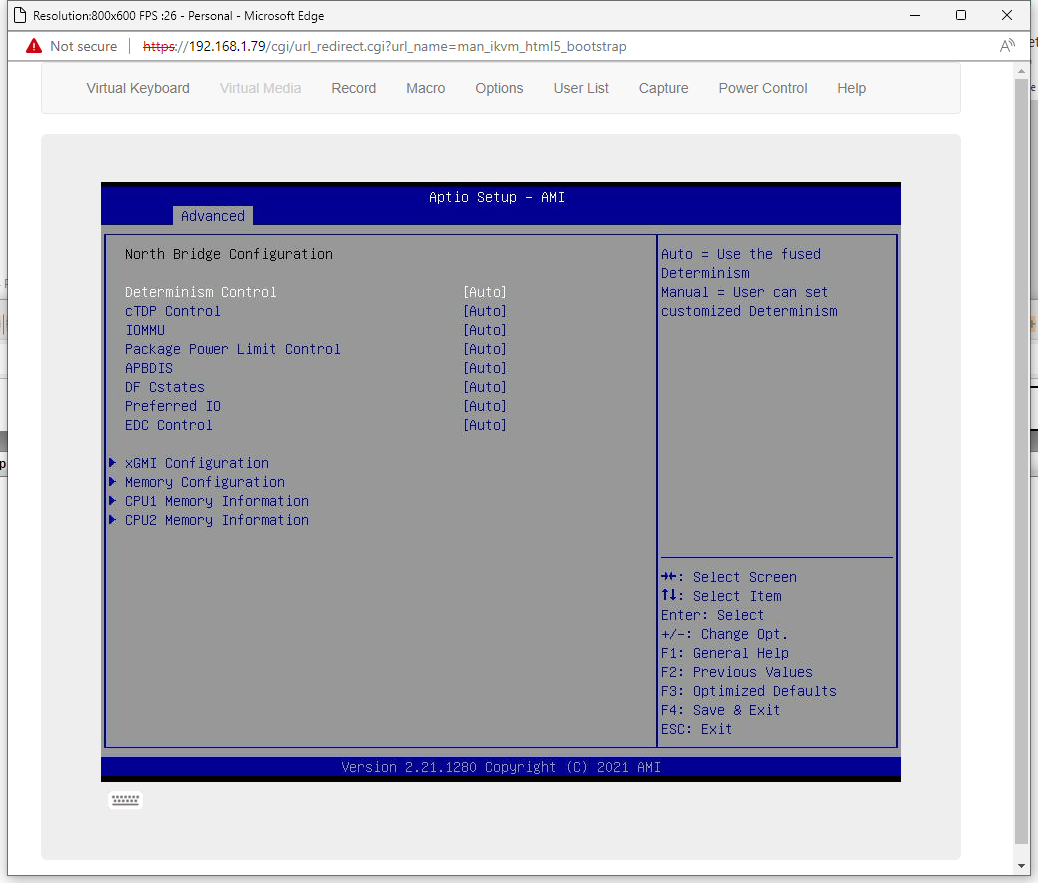

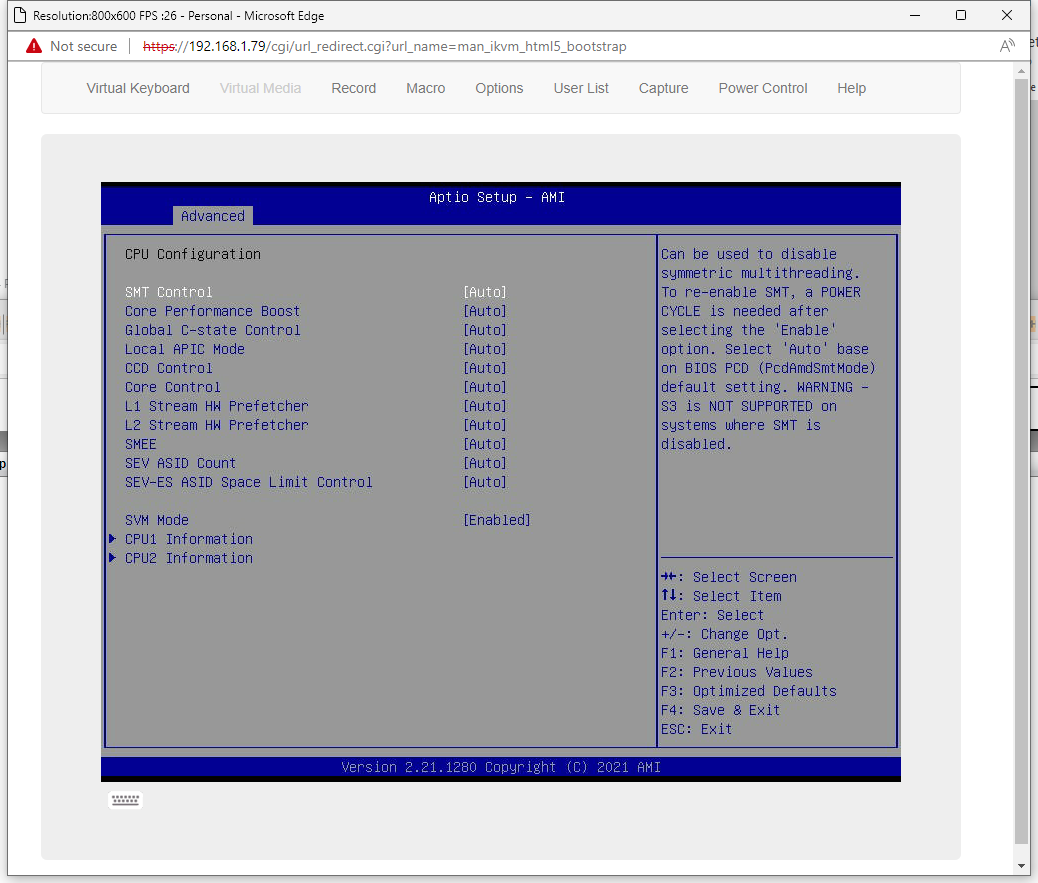

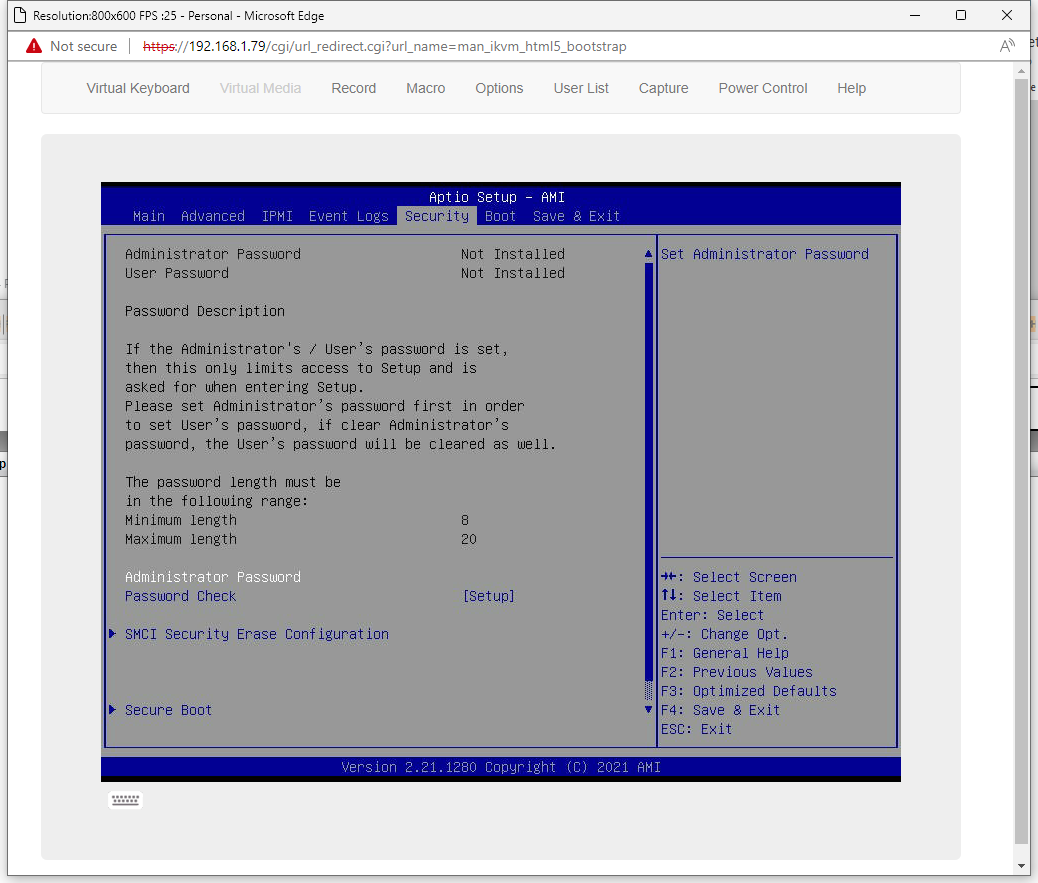

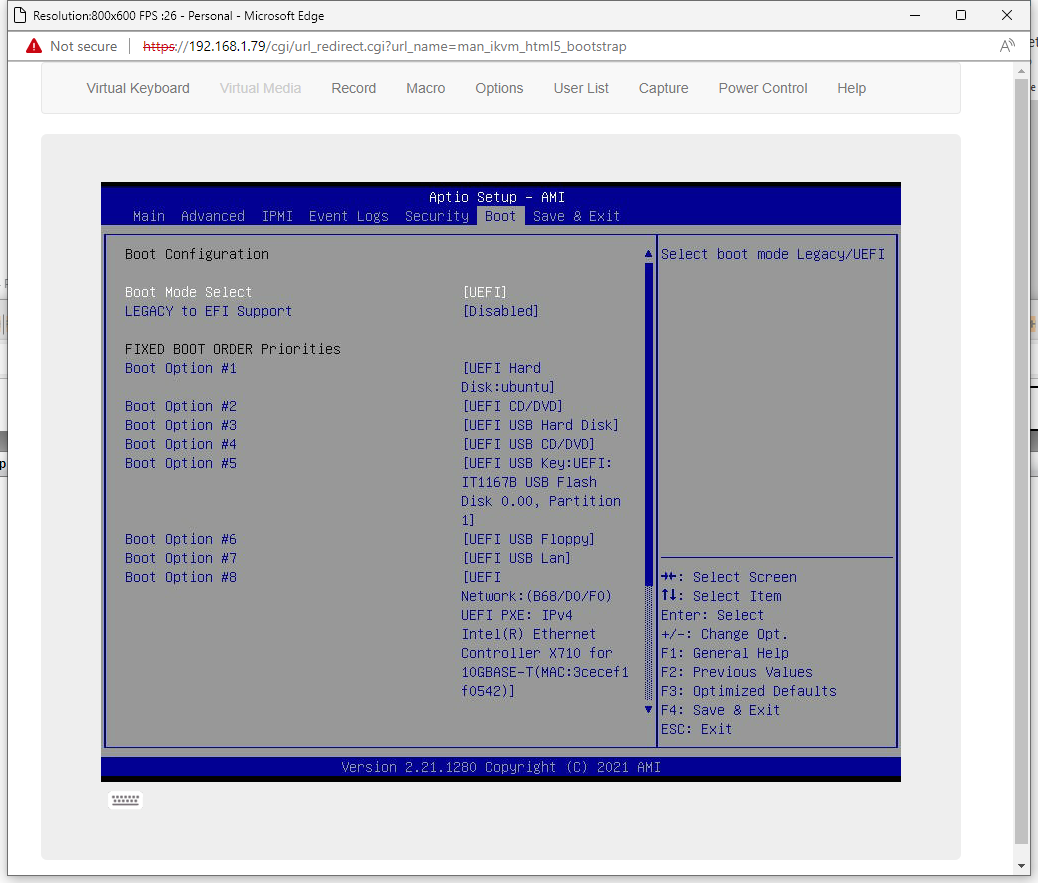

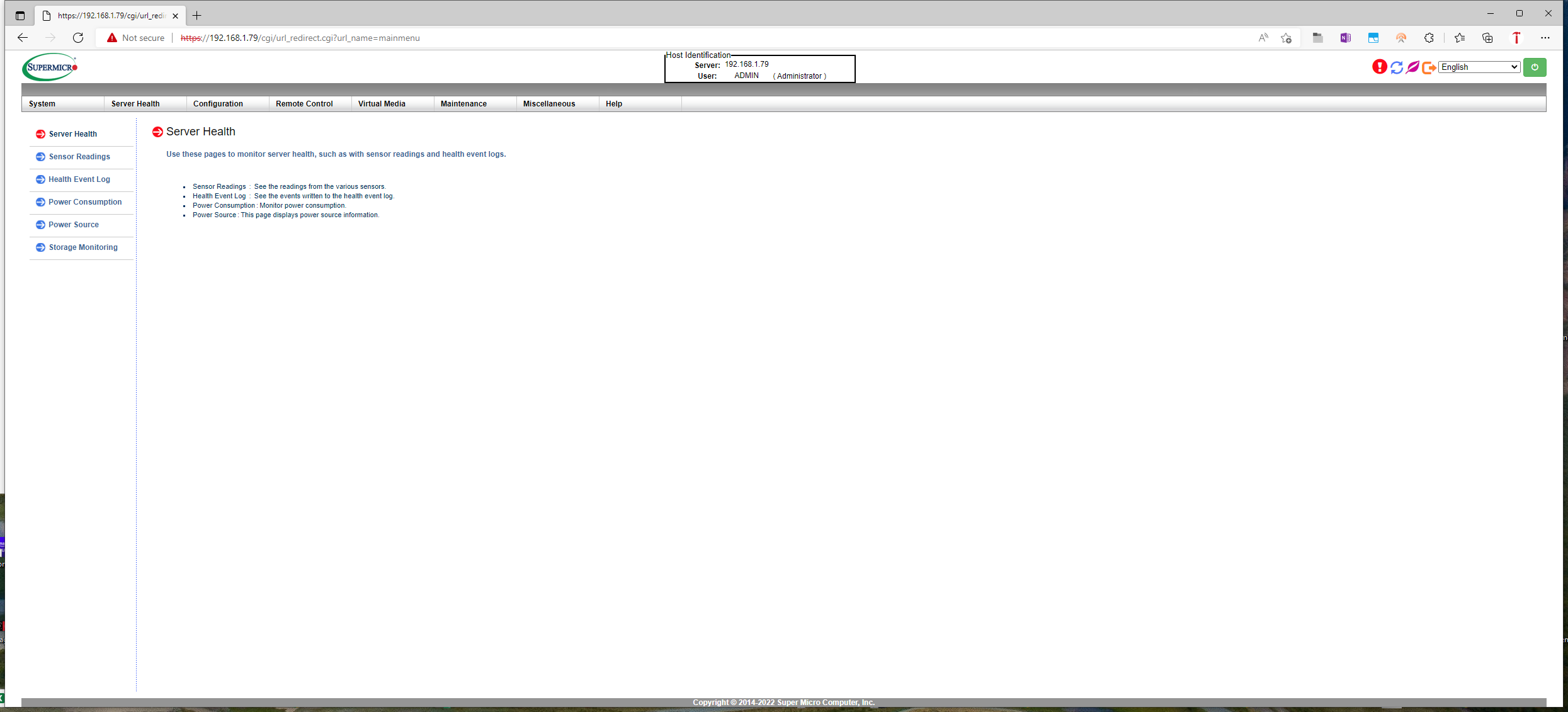

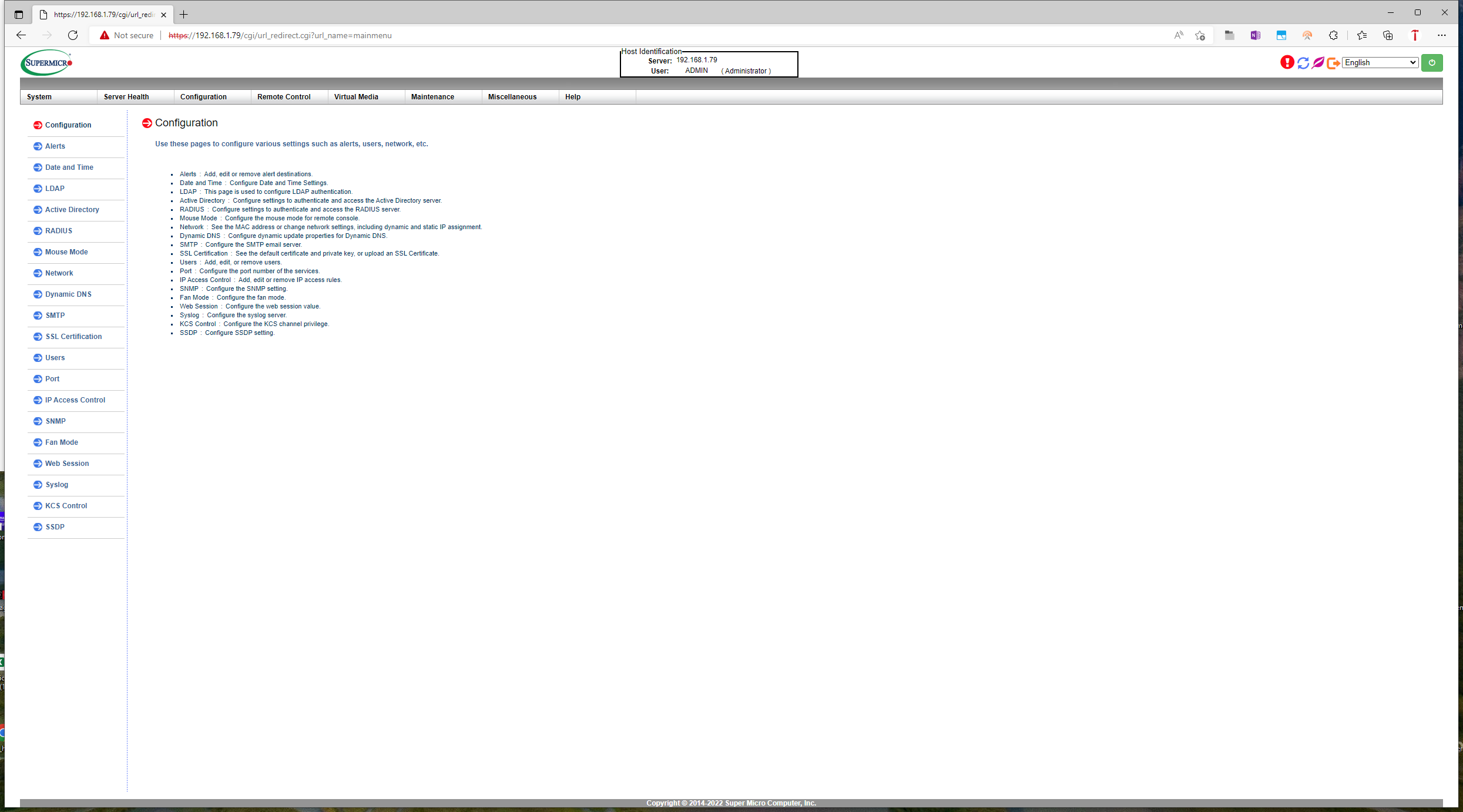

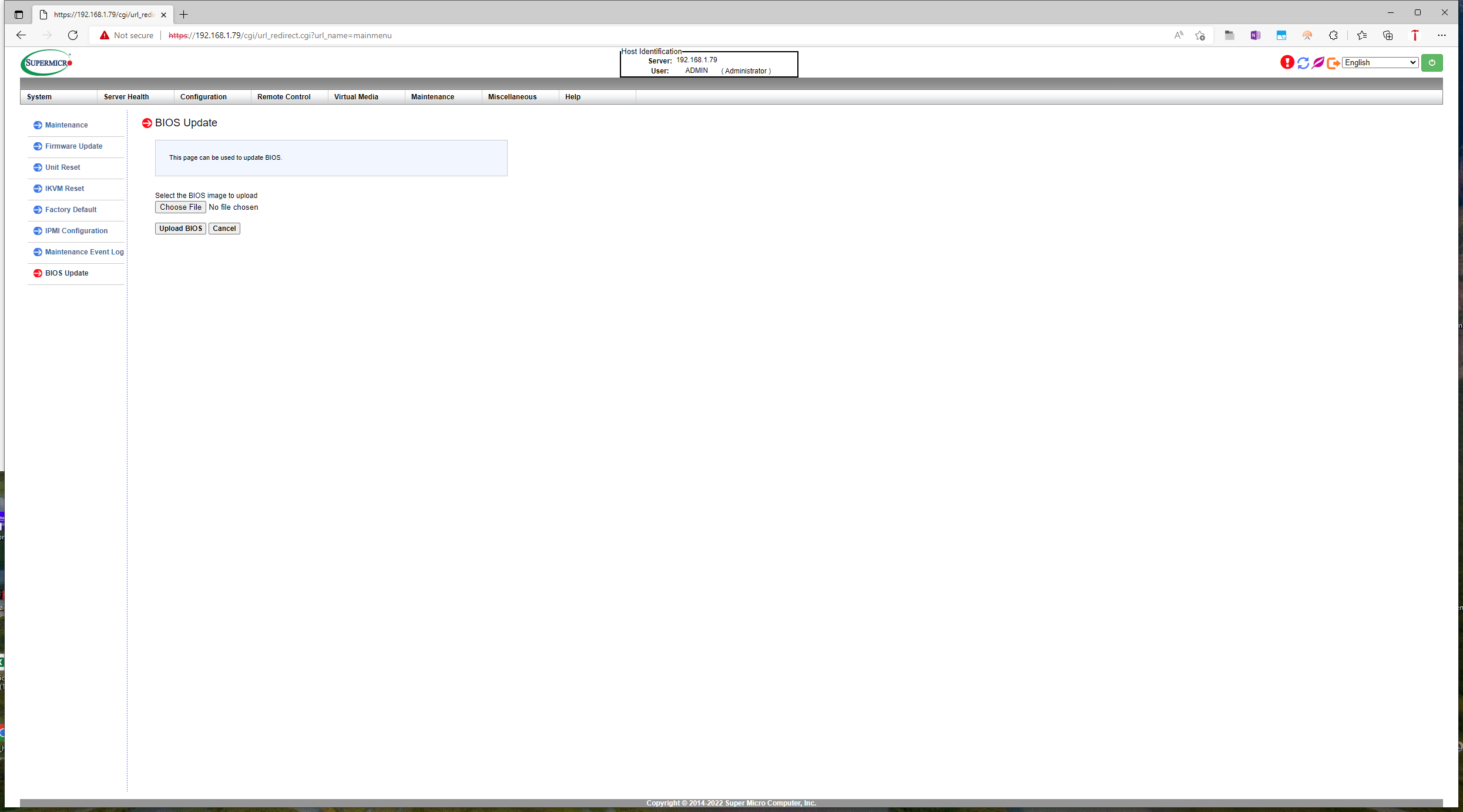

The BIOS is easy to access and use and offers plenty of tunable parameters, including CPU power threshold adjustments, while the IPMI web interface provides a wealth of monitoring capabilities and easy remote management on par with what you'll find on other server platforms. Among many options, you can update the BIOS, use the KVM-over-LAN remote console, monitor power consumption, access health event logs, monitor and adjust fan speeds, and monitor the CPU, DIMM, and chipset temperatures and voltages. Supermicro's remote management suite is polished and easy to use, and we can say the same about the BIOS.
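The web interface is the friendliest route, but the same BMC data is typically reachable from a script with the open-source `ipmitool` utility. The sketch below only builds the commands. The BMC address and user name are placeholders. Whether a given sensor type or the DCMI power reading is exposed depends on the BMC firmware, so treat the subcommand list as an assumption to verify on your unit. It passes `-E` so `ipmitool` reads the password from the `IPMI_PASSWORD` environment variable rather than from the command line.

```python
from __future__ import annotations

import os
import shlex
import subprocess


def ipmitool_cmd(host: str, user: str, *subcommand: str) -> list[str]:
    """ipmitool over IPMI v2.0 (lanplus); -E reads the password from IPMI_PASSWORD."""
    return ["ipmitool", "-I", "lanplus", "-H", host, "-U", user, "-E", *subcommand]


CHECKS = {
    "temperatures": ("sdr", "type", "Temperature"),
    "fans": ("sdr", "type", "Fan"),
    "power": ("dcmi", "power", "reading"),
    "event_log": ("sel", "list"),
}

BMC_HOST = "192.0.2.10"  # hypothetical BMC address (documentation range)
BMC_USER = "ADMIN"       # hypothetical; use the credentials from the service tag

for name, sub in CHECKS.items():
    print(f"{name:>12}: {shlex.join(ipmitool_cmd(BMC_HOST, BMC_USER, *sub))}")


def run_check(name: str) -> str:
    """Run one check; requires ipmitool on PATH and IPMI_PASSWORD in the environment."""
    if "IPMI_PASSWORD" not in os.environ:
        raise RuntimeError("set IPMI_PASSWORD first")
    result = subprocess.run(
        ipmitool_cmd(BMC_HOST, BMC_USER, *CHECKS[name]),
        capture_output=True, text=True, check=True,
    )
    return result.stdout
```

Once `ipmitool` is installed and `IPMI_PASSWORD` is set, `run_check("power")` returns the raw text output for parsing or logging.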

Test Setup

| Processor | Cores/Threads | 1K Unit Price | Base / Boost (GHz) | L3 Cache (MB) | TDP (W) |
|---|---|---|---|---|---|
| AMD EPYC 7713 | 64 / 128 | $7,060 | 2.0 / 3.675 | 256 | 225 |
| AMD EPYC 7742 | 64 / 128 | $6,950 | 2.25 / 3.4 | 256 | 225 |
| Intel Xeon Platinum 8380 | 40 / 80 | $8,099 | 2.3 / 3.2 - 3.0 | 60 | 270 |
| Intel Xeon Platinum 8280 (6258R) | 28 / 56 | $10,009 | 2.7 / 4.0 | 38.5 | 205 |
| Intel Xeon Gold 6258R | 28 / 56 | $3,651 | 2.7 / 4.0 | 38.5 | 205 |
| AMD EPYC 7F72 | 24 / 48 | $2,450 | 3.2 / ~3.7 | 192 | 240 |
| Intel Xeon Gold 5220R | 24 / 48 | $1,555 | 2.2 / 4.0 | 35.75 | 150 |
| AMD EPYC 7F52 | 16 / 32 | $3,100 | 3.5 / ~3.9 | 256 | 240 |
| Intel Xeon Gold 6226R | 16 / 32 | $1,300 | 2.9 / 3.9 | 22 | 150 |
| Intel Xeon Gold 5218 | 16 / 32 | $1,280 | 2.3 / 3.9 | 22 | 125 |
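List price per core is one quick way to read the table, though it ignores clock speeds, cache, and platform costs. The sketch below computes it for a subset of the rows. The dictionary simply restates the table's core counts and 1K-unit prices.

```python
# (cores, 1K-unit price in USD) for a subset of the processor table's rows
CPUS = {
    "AMD EPYC 7713": (64, 7060),
    "AMD EPYC 7742": (64, 6950),
    "Intel Xeon Platinum 8380": (40, 8099),
    "Intel Xeon Gold 6258R": (28, 3651),
    "AMD EPYC 7F72": (24, 2450),
    "Intel Xeon Gold 5220R": (24, 1555),
}

# Price divided by core count for each processor
price_per_core = {name: price / cores for name, (cores, price) in CPUS.items()}

# Print cheapest-per-core first
for name, ppc in sorted(price_per_core.items(), key=lambda item: item[1]):
    print(f"{name:<26} ${ppc:,.2f} per core")
```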

Bear in mind that the 225W 64-core EPYC 7713s we're testing aren't the highest-frequency 64-core chips in AMD's EPYC Milan arsenal. That distinction falls to the 64-core EPYC 7763, with a 2.45 GHz base and 3.5 GHz boost clock rate, along with a much heftier 280W TDP. Keep that in mind when you compare this EPYC chip to the Ice Lake flagship, the Xeon Platinum 8380, which also comes with a much higher 270W TDP.

| System | Memory | Tested Processors |
|---|---|---|
| Supermicro AS-1024US-TRT | 16x 16GB SK hynix ECC DDR4-3200 | EPYC 7713 |
| Intel S2W3SIL4Q | 16x 32GB SK hynix ECC DDR4-3200 | Intel Xeon Platinum 8380 |
| Supermicro AS-1023US-TR4 | 16x 32GB Samsung ECC DDR4-3200 | EPYC 7742, 7F72, 7F52, 7F32 |
| Dell/EMC PowerEdge R460 | 12x 32GB SK hynix DDR4-2933 | Intel Xeon 8280, 6258R, 5220R, 6226R, 6250 |

We used the Supermicro 1024US-TRT server to test the EPYC 7713 Milan processors and the Supermicro 1023US-TR4 server to test four different EPYC Rome configurations.

We used the Intel 2U Server System S2W3SIL4Q Software Development Platform with the Coyote Pass server board to test the Ice Lake Xeon Platinum 8380 processors. This system is designed primarily for validation purposes, so it doesn't have too many noteworthy features. However, the system is heavily optimized for airflow, with the eight 2.5" storage bays flanked by large empty bays that allow for plenty of air intake. You can read more about this configuration in our Intel Ice Lake Xeon Platinum 8380 Review. We used a Dell/EMC PowerEdge R460 server to test the other Xeon processors in our test group.

Our test configurations don't have balanced memory capacities, but that comes as an unavoidable side effect of the capabilities of each platform and the systems we've been sampled. As such, bear in mind that memory capacity disparities may impact the results below.

We used the Phoronix Test Suite for testing. This automated test suite, maintained by Phoronix, simplifies running complex benchmarks in the Linux environment. It installs all needed dependencies, and its test library includes 450 benchmarks and 100 test suites (and counting). Phoronix also maintains openbenchmarking.org, an online repository for uploading test results into a centralized database. We used Ubuntu 20.04 LTS and the default Phoronix test configurations with the GCC compiler for all tests below. We also tested both platforms with all available security mitigations.
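If you'd like to run a similar set of tests yourself, the sketch below builds one `phoronix-test-suite benchmark` invocation per test profile. The profile names follow openbenchmarking.org's `pts/<name>` convention, but they are our assumption, not the exact list behind our charts. The `run` wrapper is a hypothetical helper. The `benchmark` command is interactive, so it prompts for test options and whether to save results.

```python
from __future__ import annotations

import shutil
import subprocess

# Assumed profile names; check openbenchmarking.org before relying on them.
TESTS = [
    "pts/build-linux-kernel",
    "pts/build-llvm",
    "pts/namd",
    "pts/stockfish",
    "pts/compress-7zip",
    "pts/compress-gzip",
    "pts/openssl",
]


def pts_commands(tests: list[str]) -> list[list[str]]:
    """One `phoronix-test-suite benchmark <profile>` invocation per test."""
    return [["phoronix-test-suite", "benchmark", test] for test in tests]


def run(tests: list[str], dry_run: bool = True) -> list[list[str]]:
    commands = pts_commands(tests)
    if dry_run:
        return commands  # just show what would run
    if shutil.which("phoronix-test-suite") is None:
        raise FileNotFoundError("phoronix-test-suite is not on PATH")
    for cmd in commands:
        subprocess.run(cmd, check=True)  # interactive: PTS prompts for options
    return commands


for cmd in run(TESTS):
    print(" ".join(cmd))
```

For unattended runs, PTS also offers `batch-setup` and `batch-benchmark` modes.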

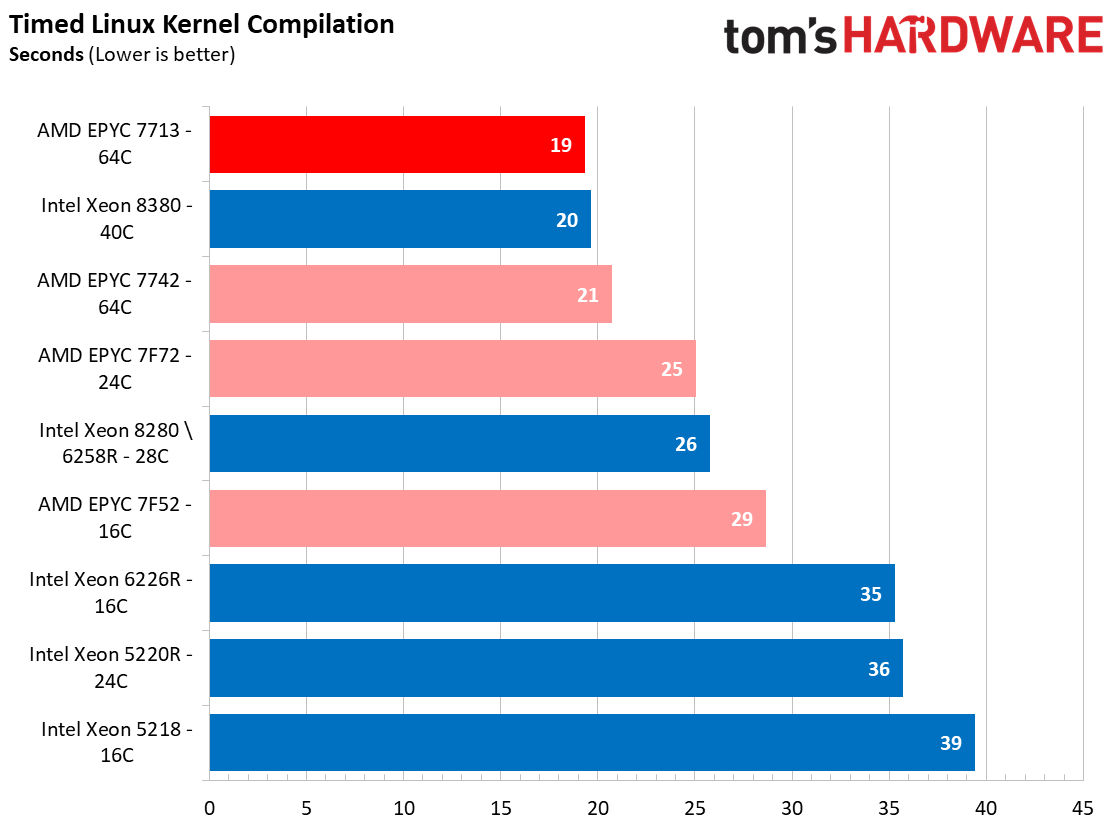

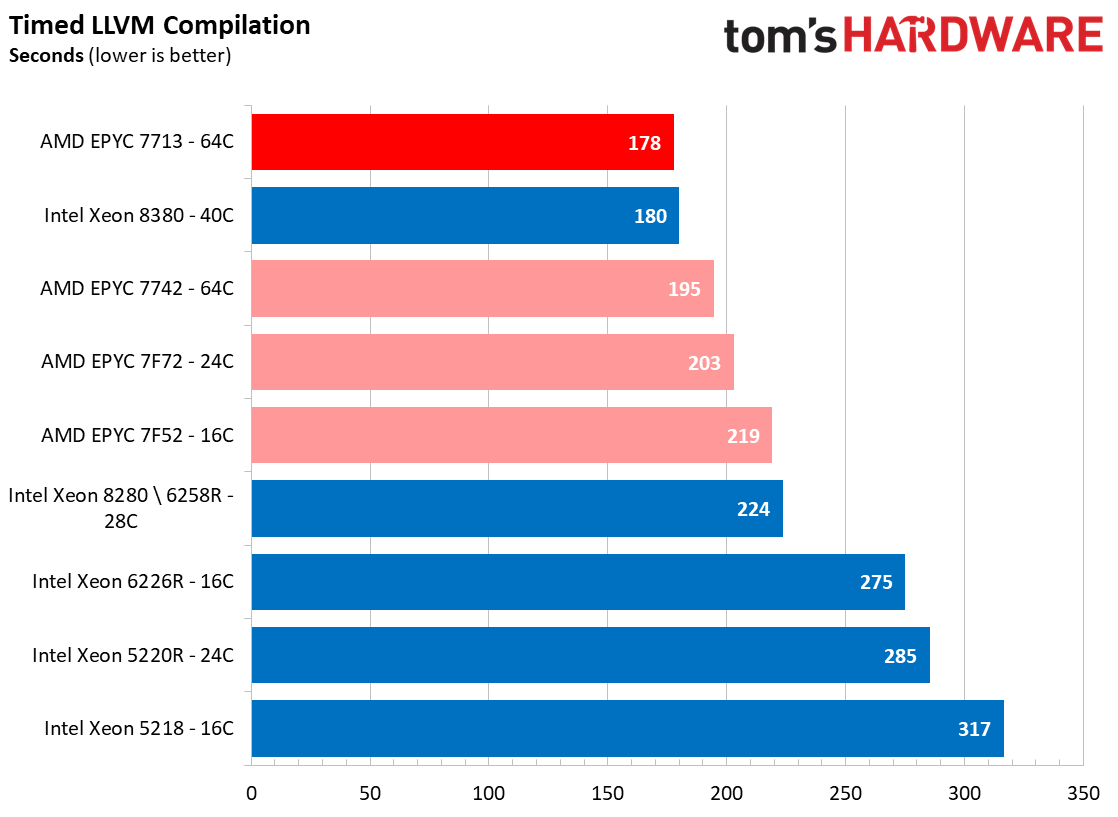

Linux Kernel and LLVM Compilation Benchmarks

The dual EPYC 7713 processors in the 1024US-TRT complete the Timed Linux Kernel Compilation benchmark, which builds the Linux kernel at default settings, in a mere 19 seconds, edging out the Xeon Platinum 8380s. That's incredibly impressive. Remember, the 7713s operate at a 225W TDP, making them the lower-frequency 64-core EPYC variant, while the Ice Lake chips carry a 270W TDP rating. AMD's Milan flagship, the 7763, would likely extend that lead.

AMD's EPYC 7713 also carves out a win against the 8380s in the Timed LLVM Compilation workload, completing the test in 178 seconds. Performance scaling from the EPYC 7742 to the 7713 is also more pronounced than we expected, given that the latter is the lower-frequency variant: the 7713s complete the benchmark 10% faster than the previous-gen flagship.
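Percentages like "10% faster" can be computed two ways from completion times, and the two don't match. Here's a short sketch with hypothetical times (not our measured results) showing the difference. The function names are ours.

```python
def percent_faster(baseline_s: float, new_s: float) -> float:
    """Throughput gain of the new result over the baseline, in percent."""
    return (baseline_s / new_s - 1) * 100


def percent_less_time(baseline_s: float, new_s: float) -> float:
    """Reduction in wall-clock time relative to the baseline, in percent."""
    return (1 - new_s / baseline_s) * 100


# Hypothetical completion times in seconds, not measured results.
baseline, new = 200.0, 180.0
print(f"{percent_faster(baseline, new):.1f}% faster")       # 11.1% faster
print(f"{percent_less_time(baseline, new):.1f}% less time")  # 10.0% less time
```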

Molecular Dynamics and Parallel Compute Benchmarks

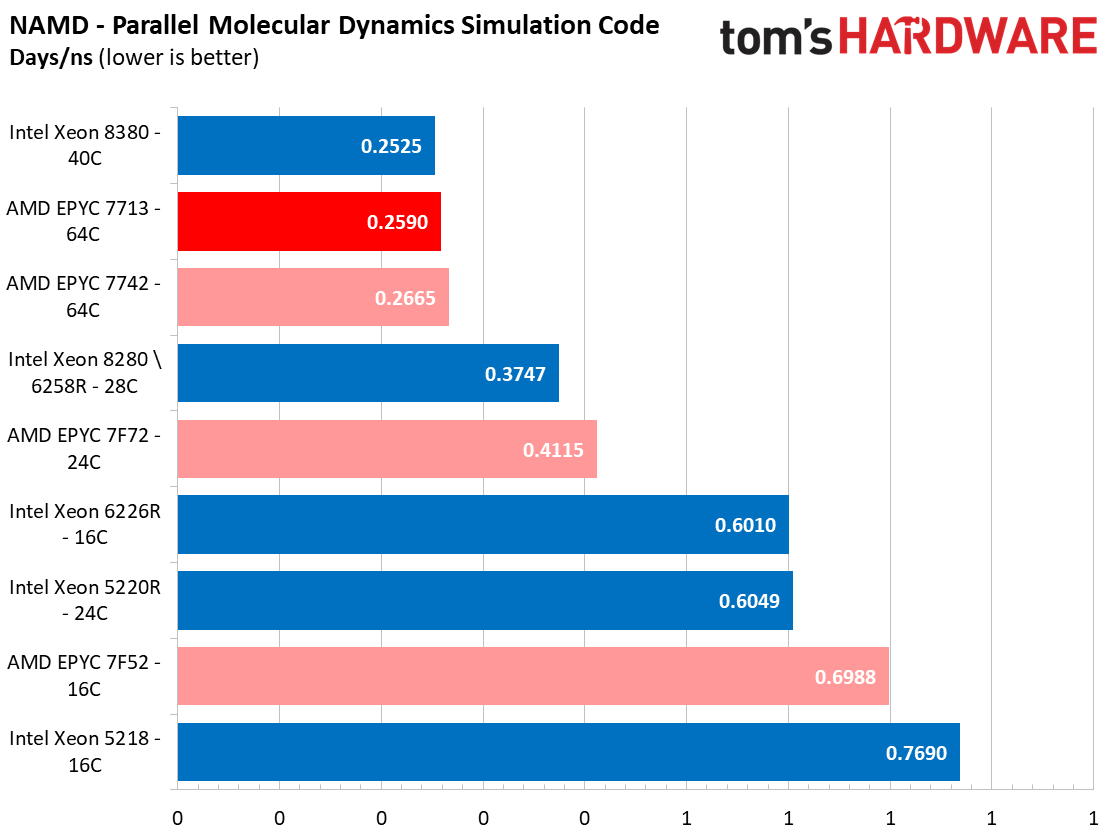

NAMD is a parallel molecular dynamics code designed to scale well with additional compute resources; it scales up to 500,000 cores and is one of the premier benchmarks used to quantify performance with simulation code. The EPYC processors are well-suited for these types of highly parallelized workloads due to their prodigious core counts, but Intel's Ice Lake is also a force to be reckoned with. This test fully saturates the cores for an extended period, generating a heavy thermal load, but the Supermicro 1024US-TRT didn't show any signs of thermals impacting performance. Once again, the EPYC 7713 impresses against Intel's much higher-TDP flagship.

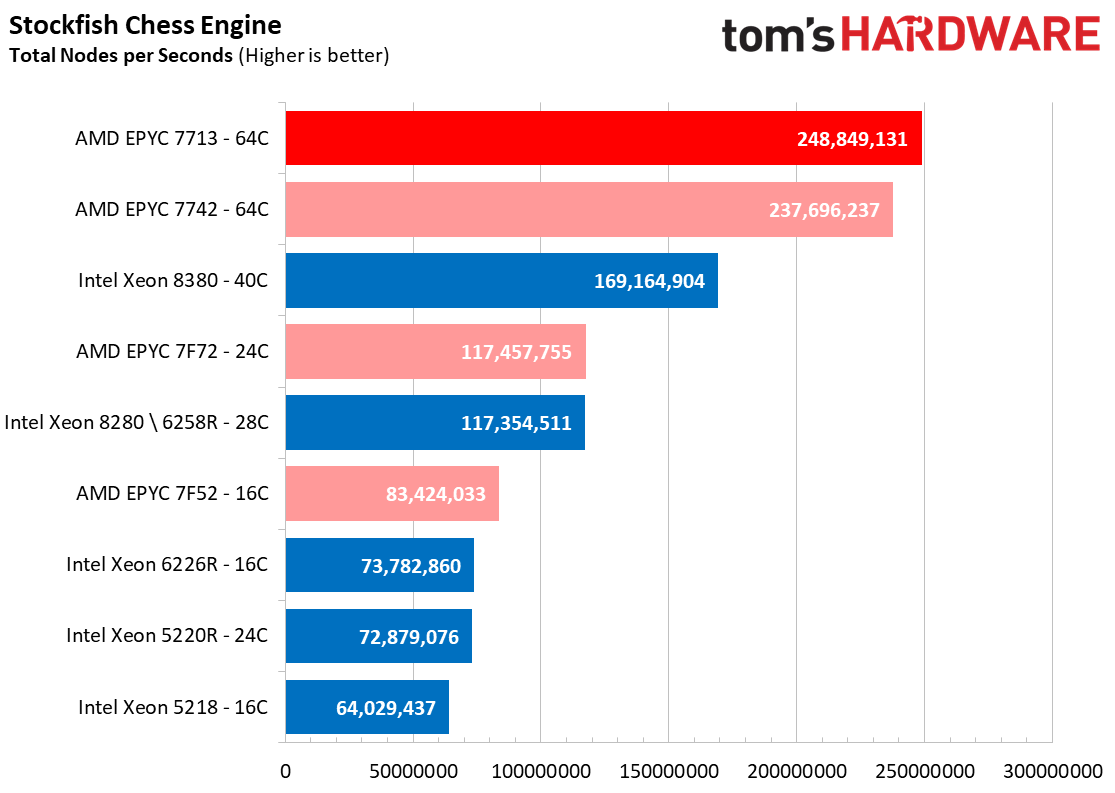

Stockfish is a chess engine designed for the utmost in scalability across increased core counts — it can scale up to 512 threads. Here we can see that this massively parallel code scales incredibly well, with the EPYC 7713s taking the top of the benchmark charts.

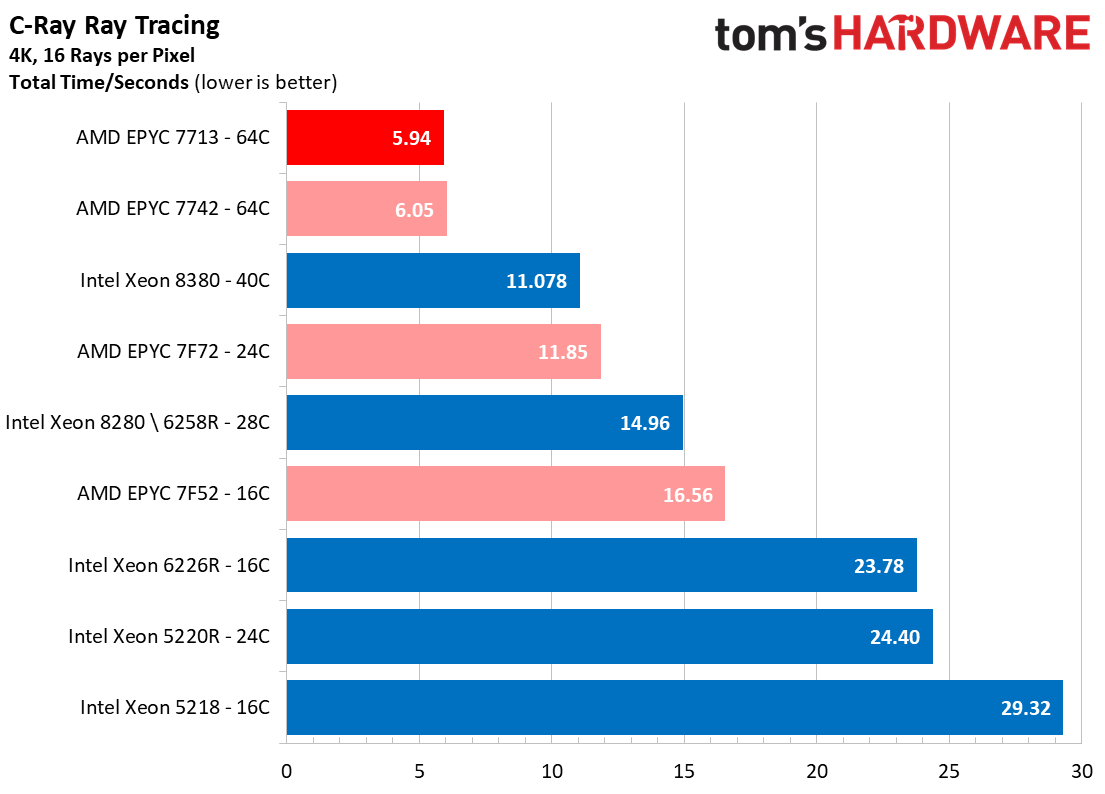

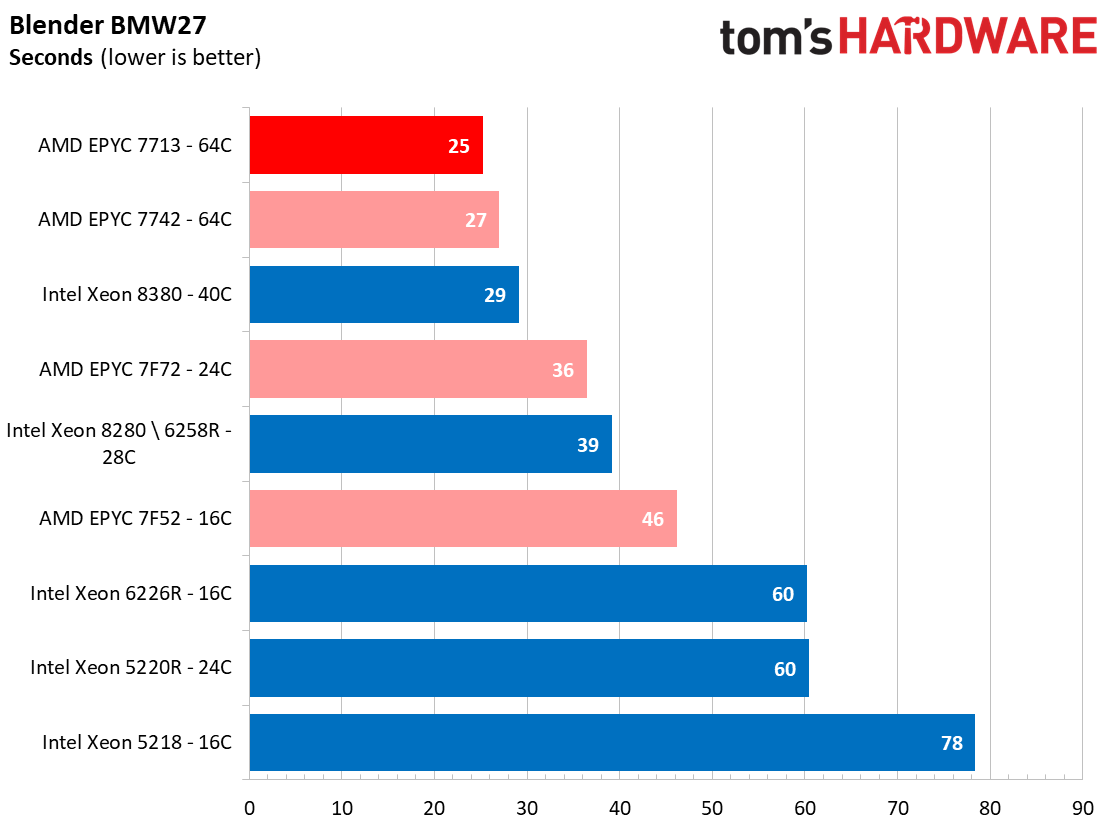

Rendering Benchmarks

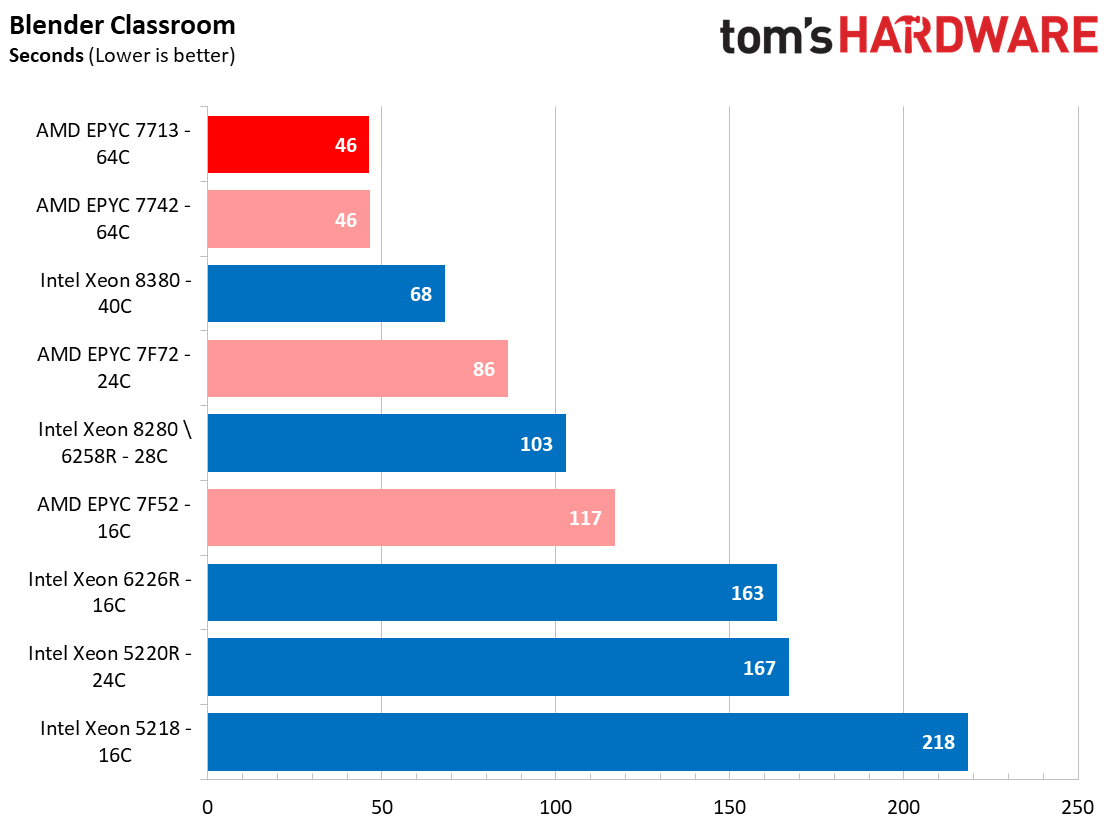

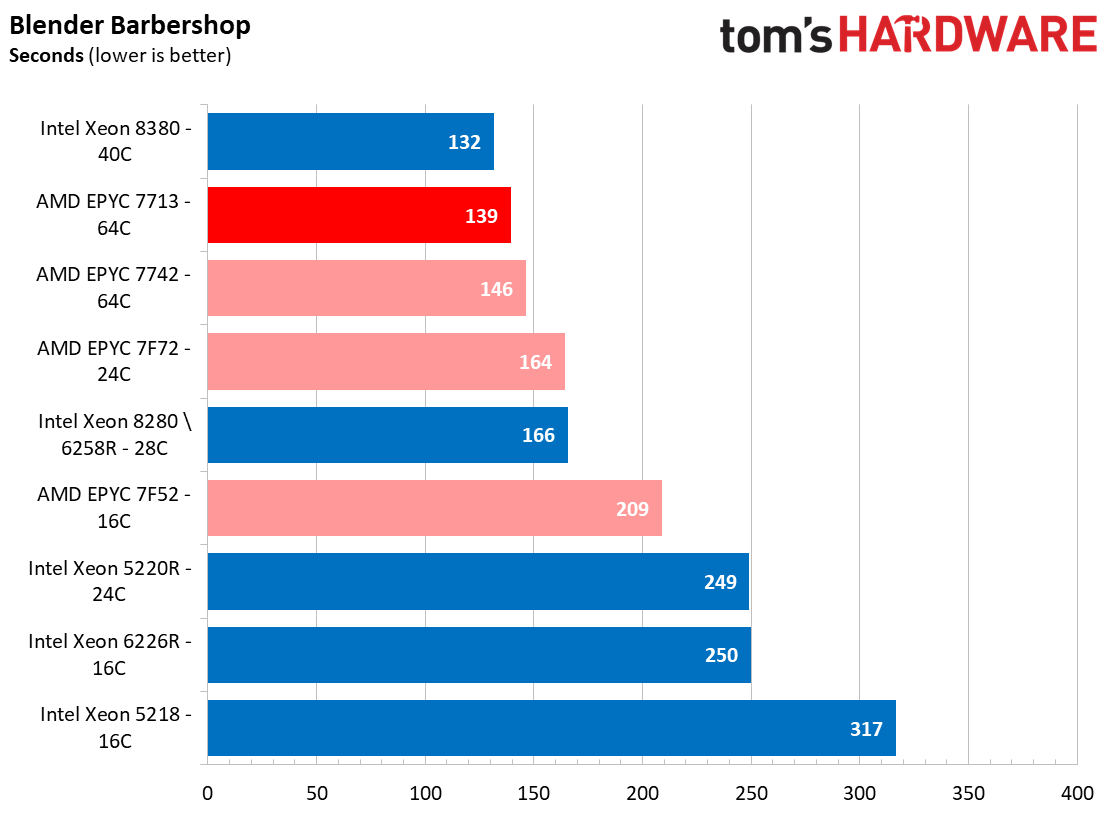

Provided you can keep the cores fed with data, most modern rendering applications also fully utilize the compute resources. The 64-core EPYC Milan and EPYC Rome processors lead this series of benchmarks convincingly, though Intel's Ice Lake narrows the gap in a few of the Blender renders and leads in others.

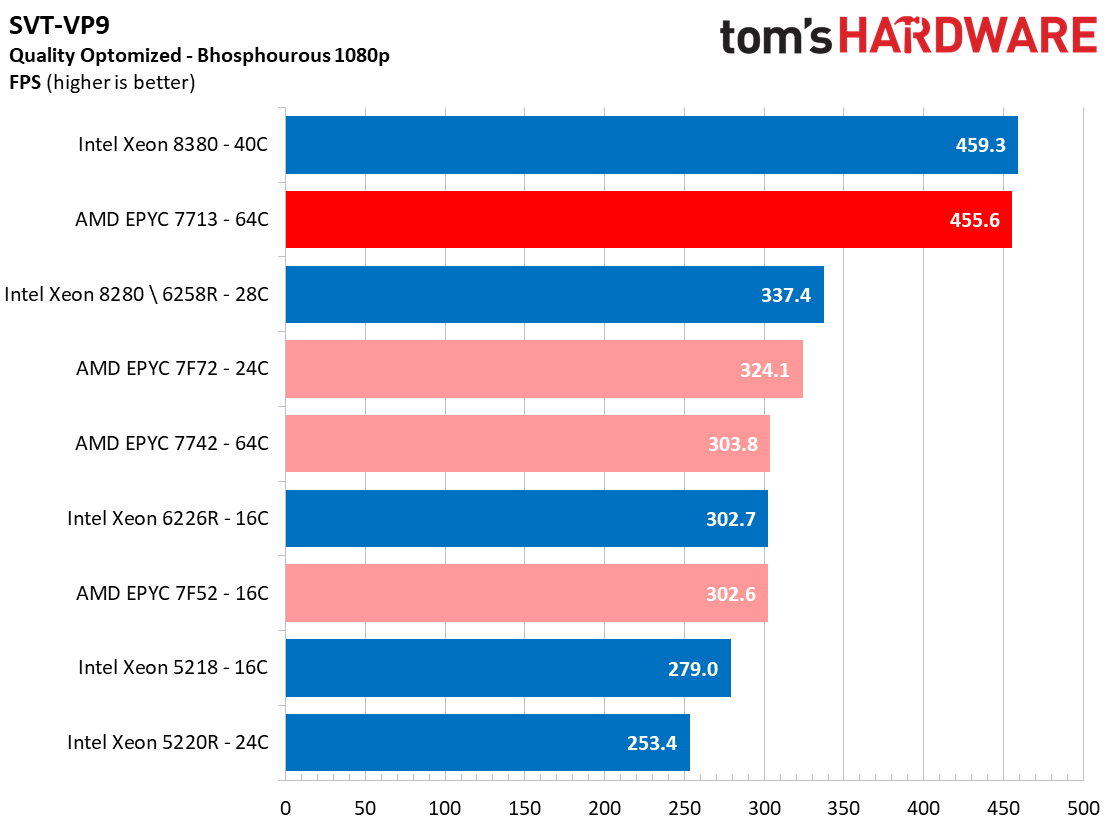

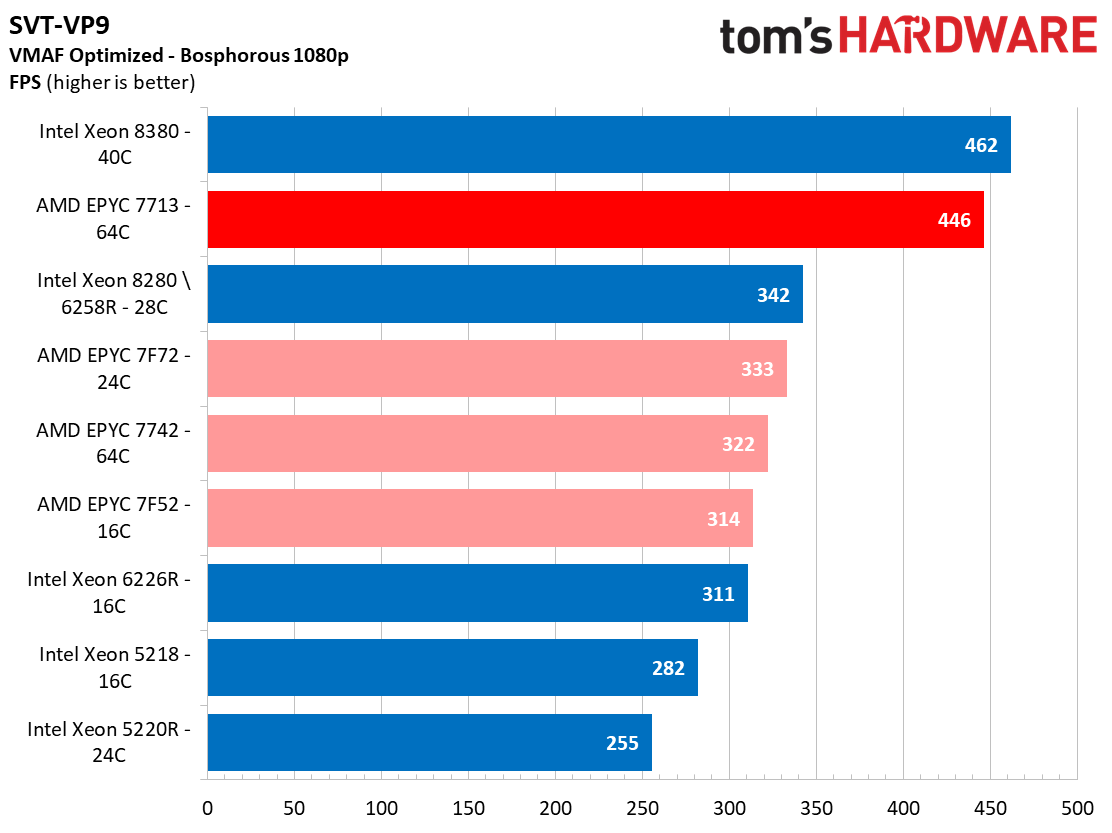

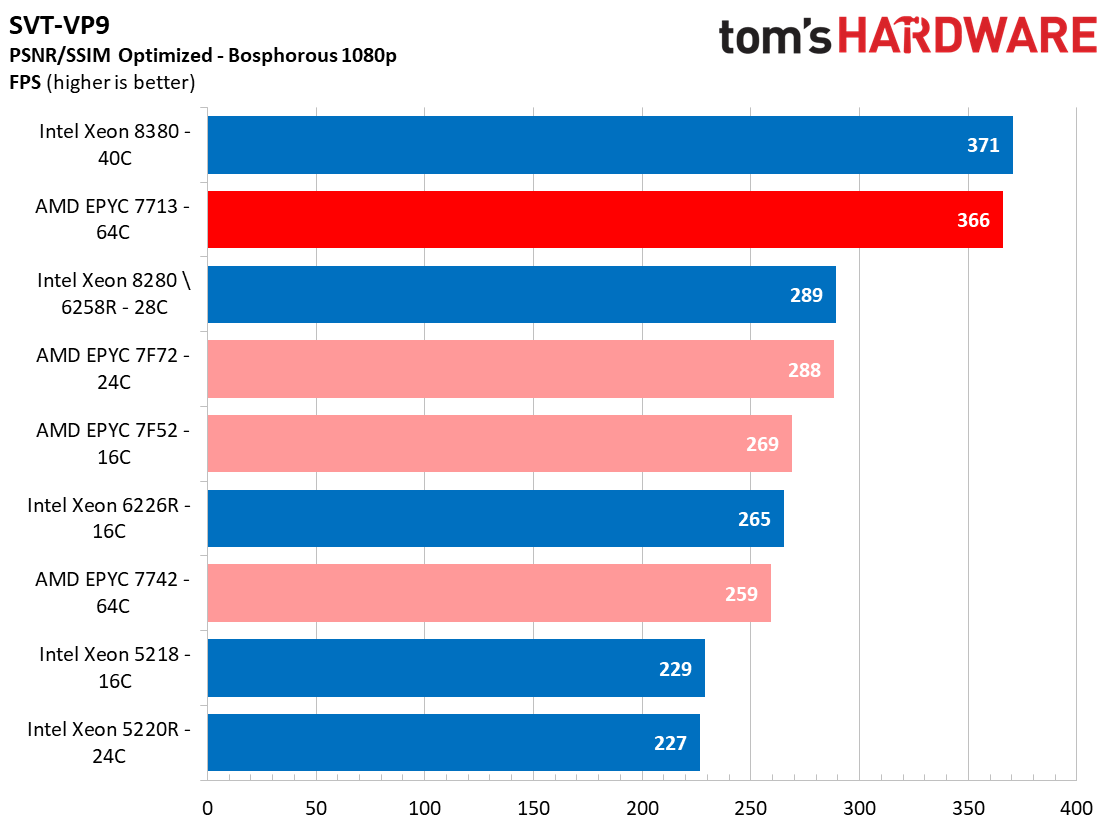

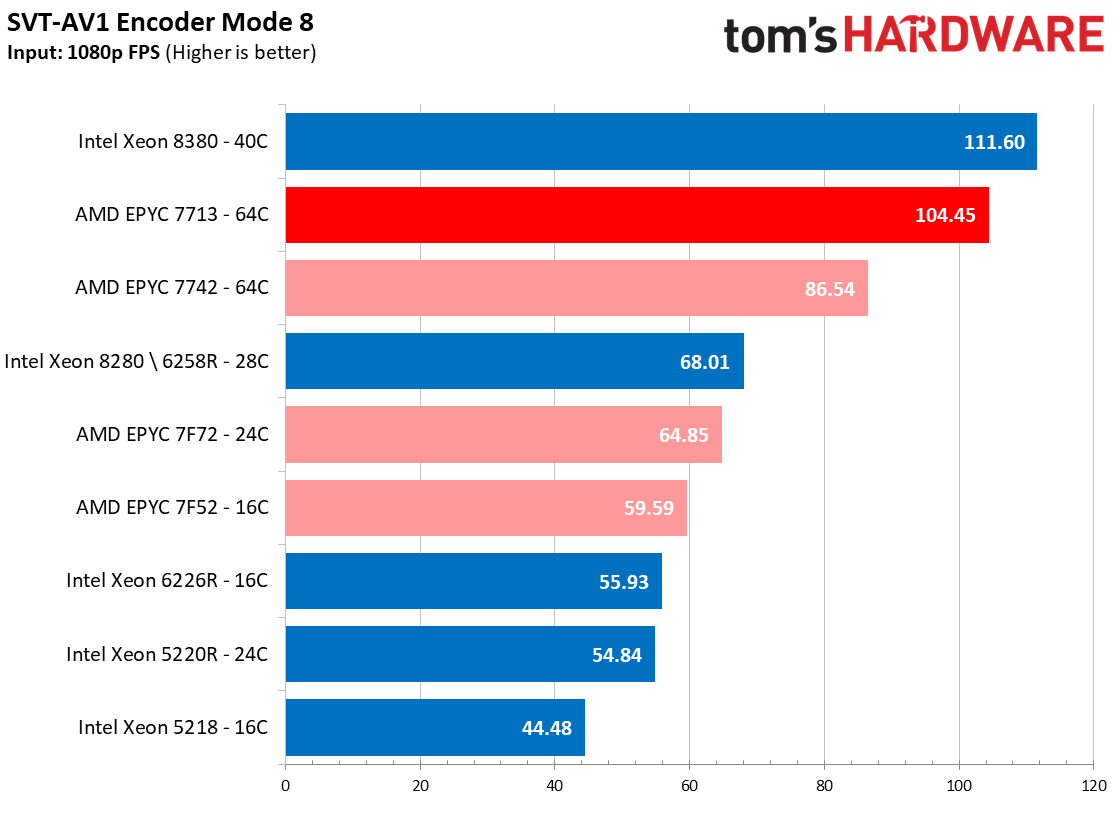

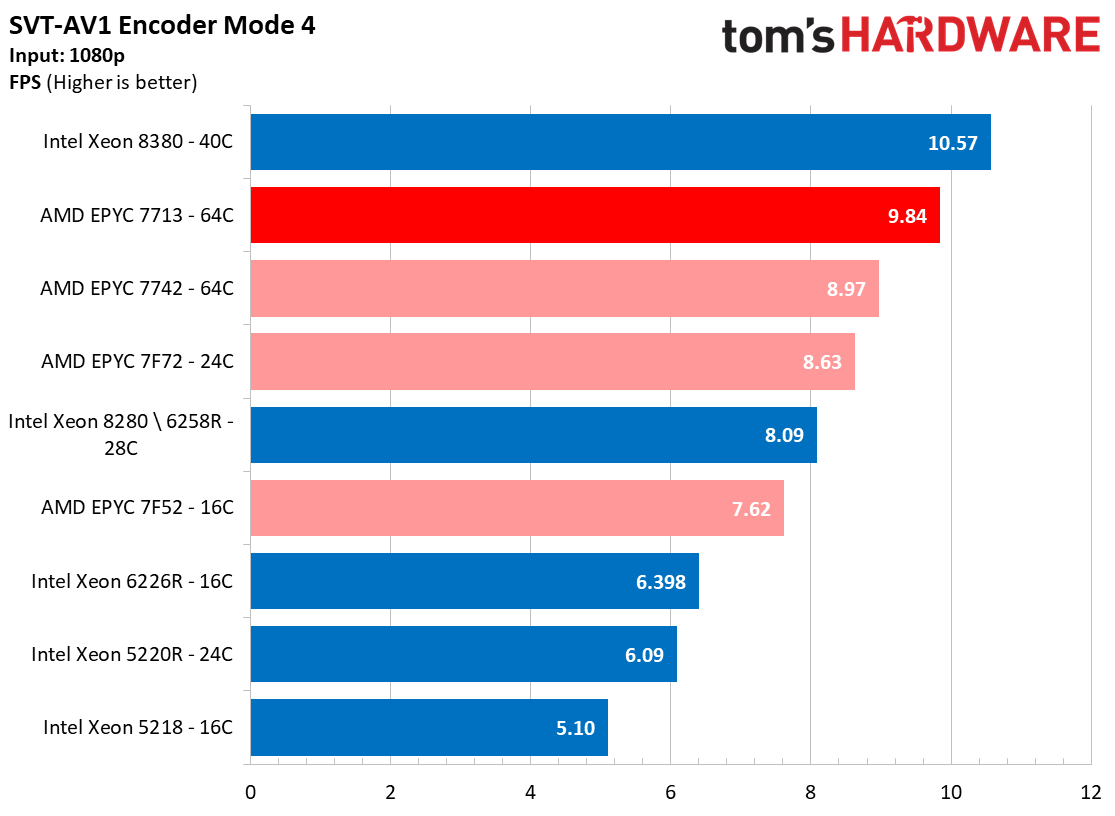

Encoders tend to present a different challenge: As we can see with the VP9 libvpx benchmark, they often don't scale well with increased core counts. Instead, they often benefit from per-core performance and other factors, like cache capacity. Here we can see that Intel's 8380 takes the lead by slim margins, but AMD's EPYC Milan 7713 is incredibly impressive given its much lower power and thermal budget.
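Amdahl's law is a simplified model we're borrowing for illustration, not one derived from these benchmarks. It shows why extra cores stop helping once part of a workload runs serially: speedup on N workers is 1 / ((1 - p) + p / N), where p is the parallel fraction. The parallel fractions below are hypothetical.

```python
def amdahl_speedup(parallel_fraction: float, workers: int) -> float:
    """Ideal speedup on `workers` cores when only `parallel_fraction` of the work parallelizes."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if workers < 1:
        raise ValueError("workers must be at least 1")
    serial = 1.0 - parallel_fraction
    return 1.0 / (serial + parallel_fraction / workers)


# Hypothetical parallel fractions, from a lightly threaded encoder to a renderer
for p in (0.50, 0.90, 0.99):
    row = ", ".join(f"{n} cores: {amdahl_speedup(p, n):.1f}x" for n in (16, 64, 128))
    print(f"p={p:.2f} -> {row}")
```

With p = 0.9, going from 64 to 128 workers barely moves the needle, which mirrors how a lightly threaded encoder behaves. Real encoders are further shaped by per-core performance and cache.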

Compression and Security

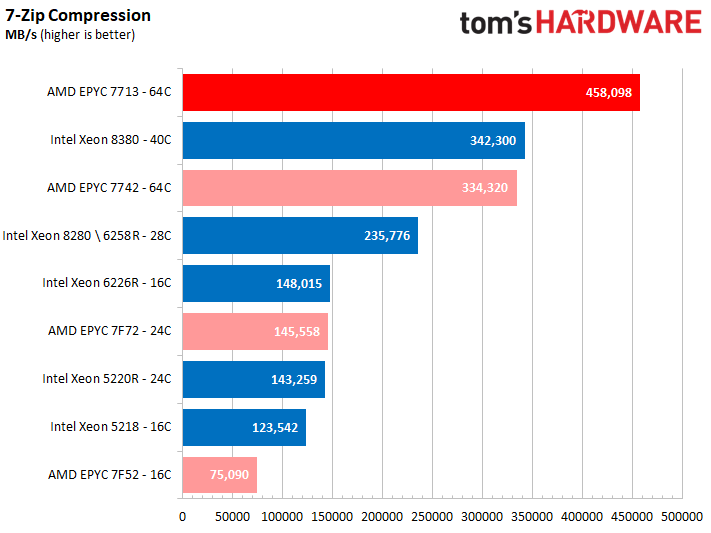

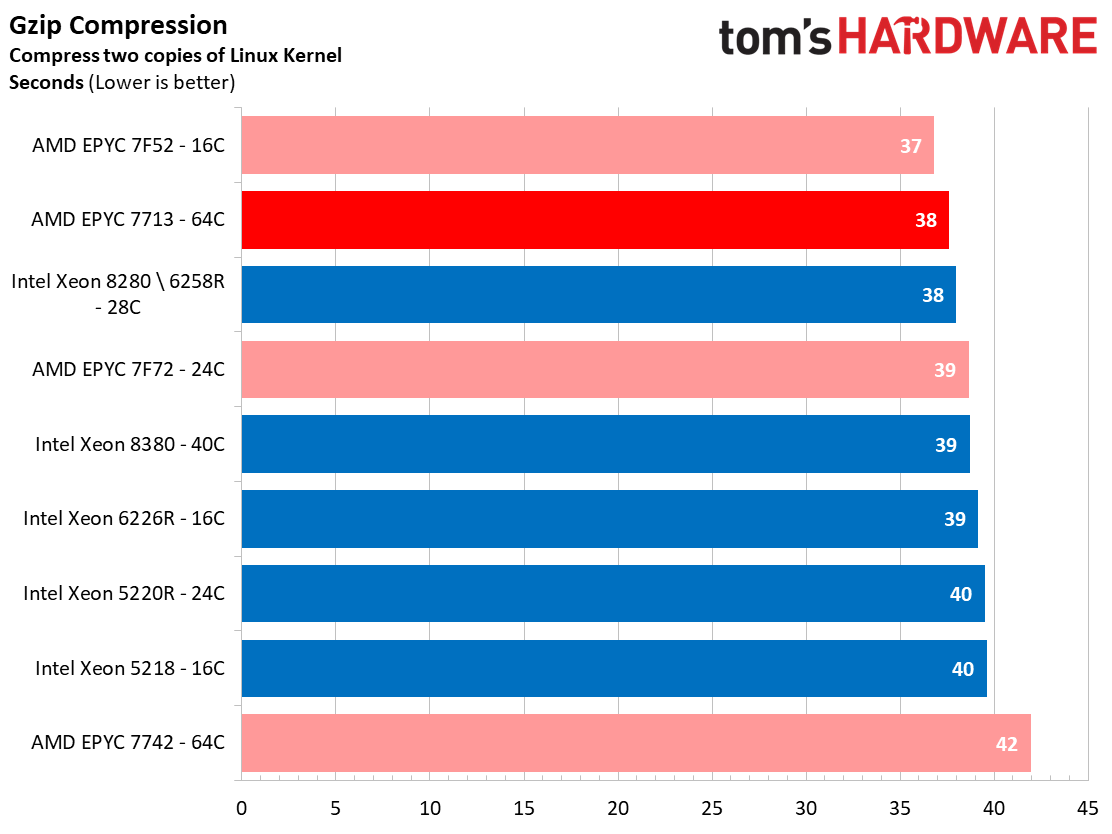

Compression workloads also come in many flavors. For example, the 7-Zip (p7zip) benchmark exposes the heights of theoretical compression performance because it runs directly from main memory, allowing both memory throughput and core counts to impact performance heavily. Here we can see that this benefits the EPYC 7713s tremendously, as they take the lead over the 8380s. In contrast, the gzip benchmark, which compresses two copies of the Linux 4.13 kernel source tree, tends to respond well to speedy clock rates, giving the 16-core EPYC 7F52s the lead. The 7713s are still impressive, though, as they take second place.
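A single gzip stream is compressed sequentially, which is why clock speed matters more than core count here. The usual workaround is to split the input and compress the pieces concurrently. This sketch uses only Python's standard library and writes each chunk as an independent gzip member. The `parallel_gzip` helper and the 1MB chunk size are hypothetical. Because chunks don't share a compression dictionary, the output is typically a little larger than a single-stream file. Whether the threads actually overlap depends on the interpreter releasing the GIL during compression, so measure before relying on it.

```python
from __future__ import annotations

import gzip
from concurrent.futures import ThreadPoolExecutor


def parallel_gzip(data: bytes, chunk_size: int = 1 << 20, workers: int = 4) -> bytes:
    """Compress fixed-size chunks concurrently, emitting one gzip member per chunk.

    Concatenated gzip members form a valid multi-member gzip stream, and
    gzip.decompress() reads every member back in order.
    """
    if not data:
        return gzip.compress(data)
    chunks = [data[i:i + chunk_size] for i in range(0, len(data), chunk_size)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        members = list(pool.map(gzip.compress, chunks))  # map preserves input order
    return b"".join(members)


payload = b"Supermicro 1024US-TRT " * 200_000  # ~4.4MB of compressible text
packed = parallel_gzip(payload)
print(gzip.decompress(packed) == payload)  # True
print(len(payload), len(packed))
```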

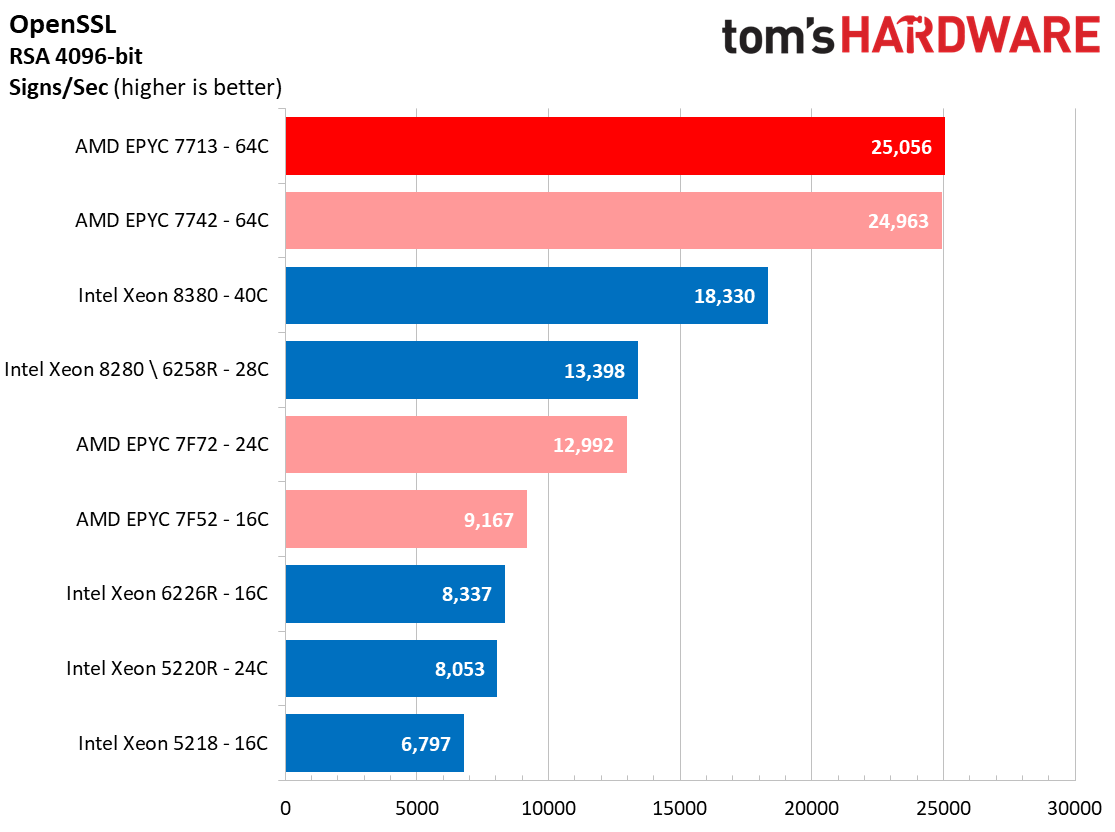

The open-source OpenSSL toolkit implements the SSL and TLS protocols, and this benchmark measures its RSA 4096-bit performance. As we can see, this test favors the EPYC processors due to its parallelized nature, but offloading this type of workload to dedicated accelerators is becoming more common for environments with heavy requirements.
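For a rough do-it-yourself comparison, OpenSSL's built-in `speed` tool can run the RSA 4096-bit test across many processes at once via its `-multi` option. The sketch below builds that command. The helper names are ours, and we don't claim this matches the exact invocation the Phoronix test profile uses.

```python
from __future__ import annotations

import os
import shutil
import subprocess


def rsa4096_speed_cmd(processes: int | None = None) -> list[str]:
    """`openssl speed -multi N rsa4096`: N forked processes run the RSA test in parallel."""
    n = processes or os.cpu_count() or 1  # default to every logical CPU
    return ["openssl", "speed", "-multi", str(n), "rsa4096"]


def run_rsa4096_speed(processes: int | None = None) -> None:
    """Run the benchmark; requires the openssl binary on PATH."""
    if shutil.which("openssl") is None:
        raise FileNotFoundError("openssl is not on PATH")
    subprocess.run(rsa4096_speed_cmd(processes), check=True)


print(" ".join(rsa4096_speed_cmd(8)))  # openssl speed -multi 8 rsa4096
```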

Conclusion

AMD's forward-thinking SP3 socket design has given server builders plenty of flexibility as the EPYC lineup matures, and that capability is on full display with the Supermicro 1024US-TRT server. With support for EPYC 7002 and 7003 processors, high-frequency H- and F-Series, and even the Milan-X CPUs, the platform has continued to evolve to support the growing EPYC roster.

Supermicro's fully validated systems come with the hardware fully configured, and rack installation with the tool-less rail kit is simple and quick. We found the system to be robust during our tests, with plenty of power for the processors fed by the high-quality Titanium power supplies and ample cooling provided by eight fans. We didn't notice any adverse thermal impact during a bevy of extended-duration all-core workloads, signifying that the cooling system is well designed.

We'd like to see a few USB ports added to the front panel, as this eases setup and maintenance. That said, the easily-accessible IPMI interface provides comprehensive monitoring and maintenance options, not to mention a polished remote management interface. On the hardware side, the dual 10GbE LAN and PCIe 4.0 interfaces provide plenty of connectivity options, while the support for up to 8TB of ECC memory allows the server to exploit EPYC Milan's tremendous throughput while providing enough capacity to address even the most memory-hungry applications. The server's slim 1U chassis does limit you to four drive bays, but Supermicro supports plenty of high-capacity storage options to maximize the available bays.

The Supermicro 1024US-TRT server performed well in our tests, packing quite the threaded heft into a small form factor that can address general-purpose, enterprise, cloud, and virtualization roles well. The addition of Milan-X support also opens the door to more diverse technical computing workloads, making the 1024US-TRT a robust server fit for a wide variety of roles.


Paul Alcorn is the Editor-in-Chief for Tom's Hardware US. He also writes news and reviews on CPUs, storage, and enterprise hardware.


kanewolf

Admin said: Supermicro's 1023US-TR4 platform serves up powerful performance in a slim package, with up to 128 cores packed into a slim 1U footprint. Supermicro 1024US-TRT Server Review: 128 Cores in a 1U Chassis

Hearing protection NOT included, but required ...

jeremyj_83

kanewolf said: Hearing protection NOT included, but required ...

It's in a data center. You always need hearing protection in a place like that.

kanewolf

jeremyj_83 said: It's in a data center. You always need hearing protection in a place like that.

Many members of this forum think that hand-me-down 1U servers are a good idea for at home. They have never "lived" in a data center (as I have). Many times the CRAC (or CRAH) units are the noisiest, but 1U servers have a high-pitched noise that is REALLY annoying.

jeremyj_83

The ability to have 4 NICs, all with a PCIe 4 x16 connection, in a 1U chassis is amazing. Sadly they only included 4 NVMe storage slots. I personally have been interested in the AS-1124US-TNRP. Dual socket, 32 DIMMs, 10 NVMe storage slots, and 4x PCIe 4 x16 expansion slots. Allows for a nice hyperconverged server with up to 8 ports at 100Gb or 4 ports at 200Gb.

jeremyj_83

kanewolf said: Many members of this forum think that hand-me-down 1U servers are a good idea for at home. They have never "lived" in a data center (as I have). Many times the CRAC (or CRAH) units are the noisiest but 1U servers have a high pitched noise that is REALLY annoying.

I know how loud those things are. Those 40mm Delta fans that spin at 15,000RPM are insanely loud.

domih

Buying a NEW DC/Corporate server for usage at home brings too many issues:

a. You only buy one, so the provider will not give you any discount. DC/Corporate hardware prices are always hyper-inflated so that during the deal negotiations the provider will give a significant discount as a last nudge toward a deal. Prices are also higher because the manufacturer and provider guarantee support and availability for 10 years, as an example.

b. For the noise, unless you want to transform your living room into an airport (sound-wise), the best solution is a sound-proof basement or attic. Great for making the lives of rats a pita.

c. After you spend your hard won kopeks on a new shiny 100 GbE capable server, be aware that the rest of your computers will have to be PCIe 3.0 or PCIe 4.0 with a PCIe x16 slot dedicated to the NIC plus something like a local NVMe RAID 0 or 10. Anything under this will result in very disappointing results during file transfers. You end up with the local buses being slower than the network (!) A PC with PCIe 2.x will choke at around 20 GbE.

d. You'll want to run the thing with a beefy UPS.

e. You'll want to run the thing 24 x 7. The power bill at the end of the month will reflect that...

CONCLUSION: Don't do it. A more "valid" path is to buy used DC/Corporate hardware on eBay or alike. But you have to know what you're doing and do your home work, pun intended.

kanewolf

domih said: A more "valid" path is to buy used DC/Corporate hardware on eBay or alike.

Every 1U server I have ever dealt with is horrible for noise. Don't buy 1U servers, IMO. For a non-commercial space, workstation hosts are much more appropriate. Same basic motherboard as a 1 or 2U server but in a much friendlier packaging.

domih

kanewolf said: Every 1U server I have ever dealt with is horrible for noise. Don't buy 1U servers, IMO. For a non-commercial space, workstation hosts are much more appropriate. Same basic motherboard as a 1 or 2U server but in a much friendlier packaging.

I agree, but with customization you can make them silent. I have a few Mellanox 1U switches (QDR, FDR10, FDR): I just remove the top panel and replace all the fans with silent ones, obviously placed differently (*). The fan LED is expectedly always red, but the unit is functional and I don't care. The 1U is no longer a 1U, but as a result I can use the switches inside the home; they are silent. I guess that would be the same thing with servers. But you have to do your home work, find the OEM docs of the fans for the schematics, and then do some soldering. Without the top panel, the slower silent fans do the job.

(*) The overall cost of the switches + silent fans (Noctua) is still significantly less than the new shiny equivalent "prosumer" 10 GbE, e.g. 36-port 56Gbps (FDR) for $200 plus $200 of fans = $400. Same thing for the PCIe NICs inside the PCs, e.g. $65 + $20 fan to "replace" the original air flow from the 1U. As a result I get from 30 to 45 GbE depending on the PC. Much nicer than loser 10GbE.

Otherwise, I concur that at home, the vertical workstation form is much easier. No esoteric customization required.