Why you can trust Tom's Hardware

All systems are tested in a dual-socket (2P) configuration.

| Test Platform | Memory | Tested Processors |
|---|---|---|
| Intel Software Development Platform (Quanta Rack Mount 2S 2U) | EMR: 16x 64GB (1TB) DDR5-5600 ECC; SPR: 16x 64GB (1TB) DDR5-4800 ECC | Intel Xeon 8592+ (EMR); 8480+, 8490H, 8462Y+ (SPR) |
| Supermicro SYS-621C-TN12R | 16x 32GB (512GB) Supermicro ECC DDR5-4800 | Intel Xeon Platinum 8468 (SPR) |
| 2P AMD Titanite Reference Platform | 24x 64GB (1.5TB) Samsung ECC DDR5-4800 | AMD EPYC Genoa 9654, 9554, 9374F; Bergamo 9754 |

AI Workloads

Generative AI is grabbing the headlines, not to mention the hearts and minds of marketing departments, but there's an entire universe of different types of AI models employed in the data center. For now, training large AI models remains the purview of GPUs, and the same can be said for running most large generative AI models. However, most AI inference continues to run on data center CPUs, and we expect that trend to not only continue but also intensify.

The rapidly changing AI landscape makes it difficult to characterize performance in a way that is meaningful for the average data center application. Batch sizes and other test parameters will also vary in real deployments. As such, treat these benchmarks as a rough guide: these tests are not optimized to the level we would expect in actual deployments. Conversely, some data centers and enterprises will deploy off-the-shelf AI models with only light tuning, so while these results work as a general litmus test of performance, the relative positioning of the contenders will vary with the models employed.

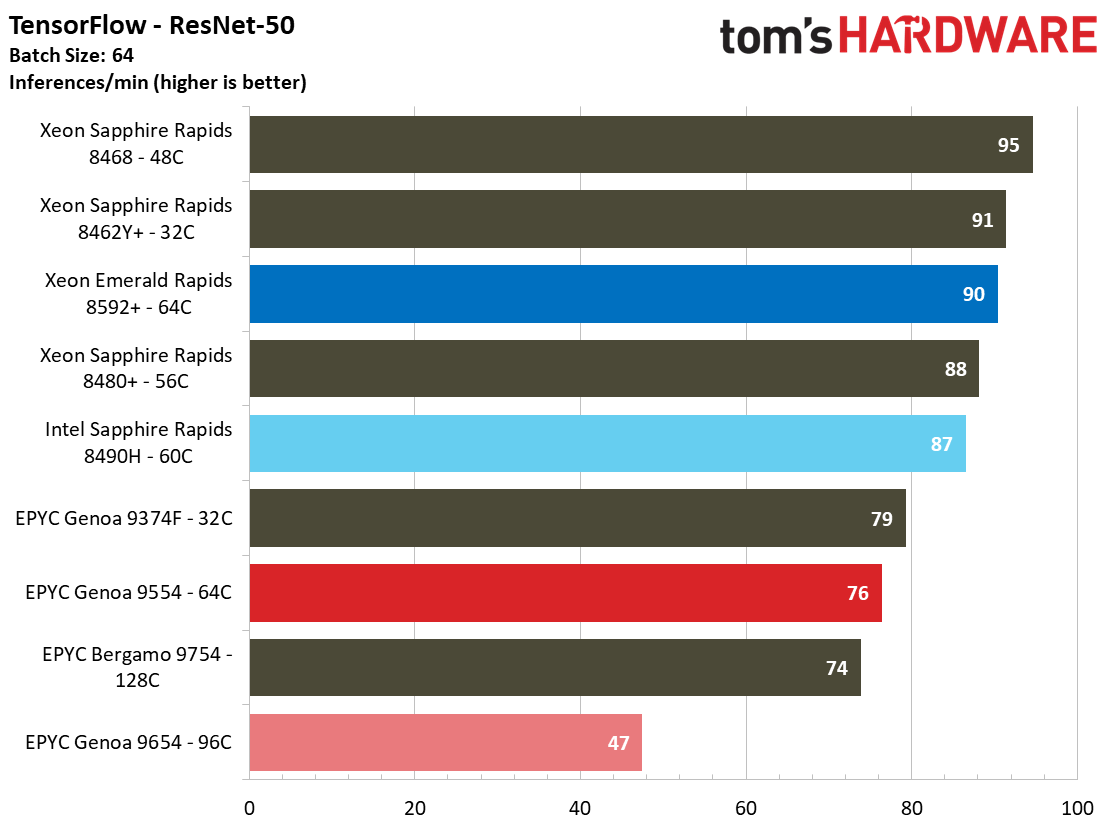

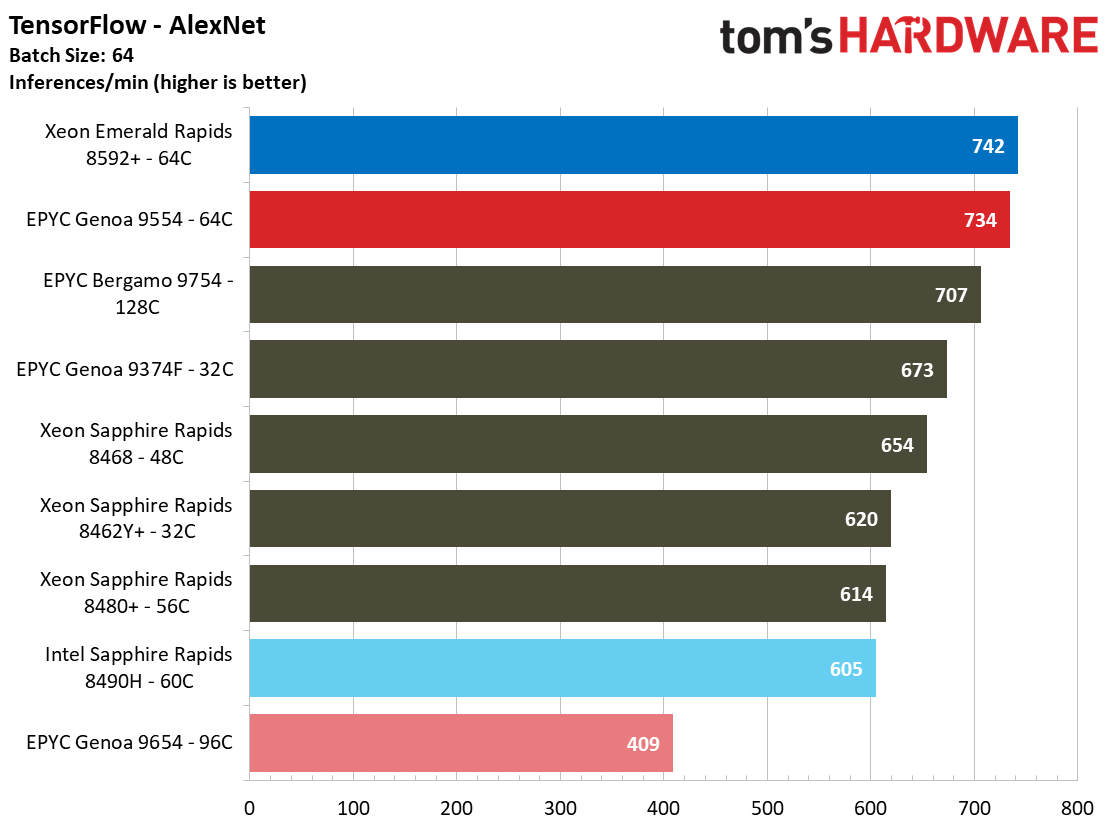

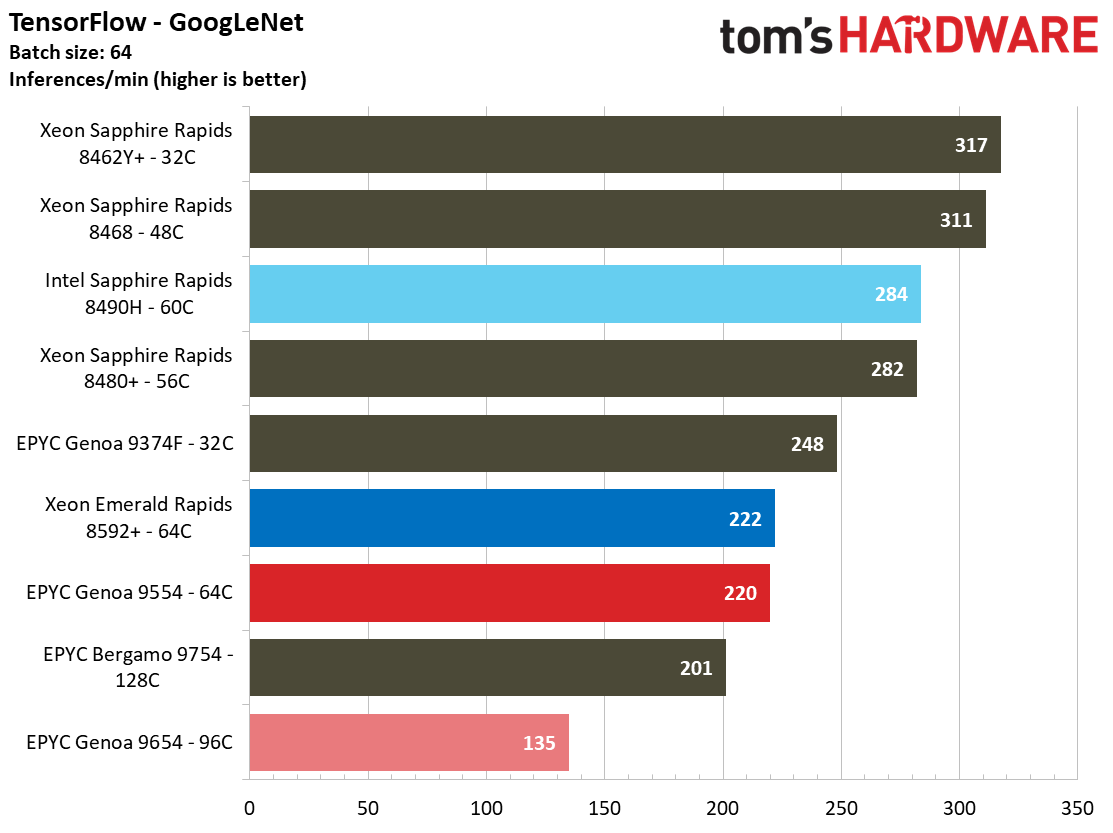

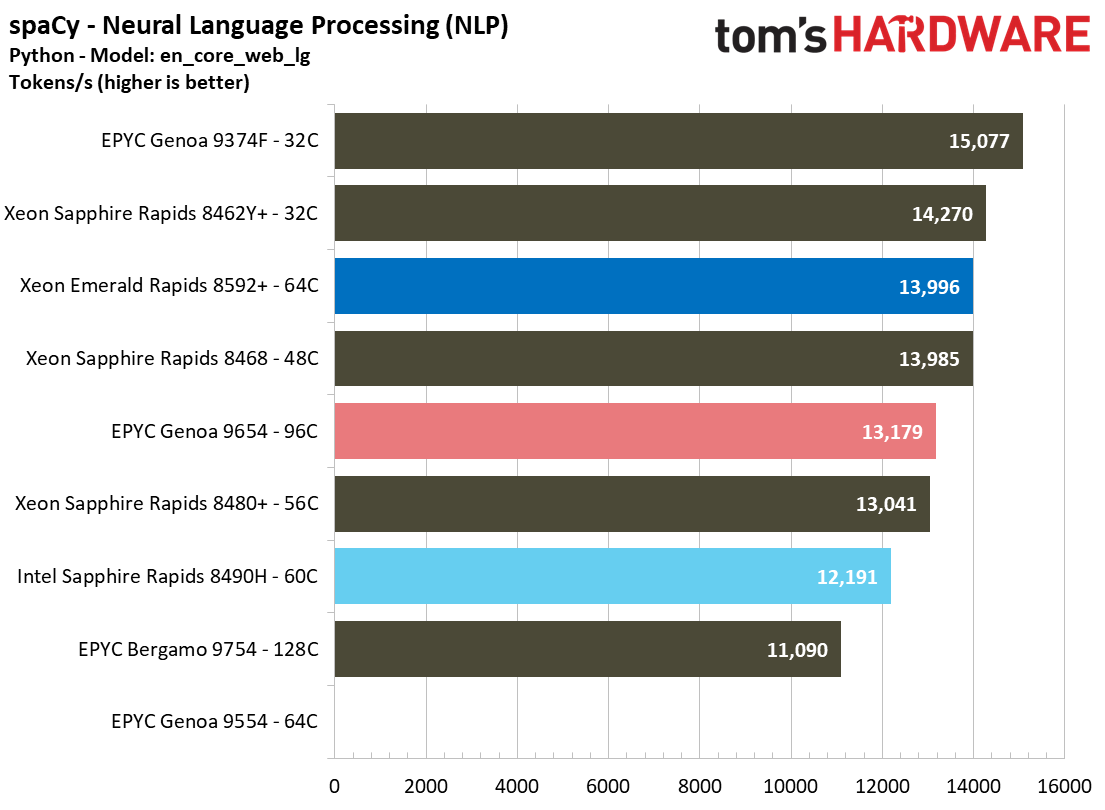

It's clear that Intel's approach of enabling AI-boosting features like AMX, AVX-512, VNNI, and Bfloat16 has yielded a strong foundation for AI users to build upon. The Emerald Rapids 8592+ is 18% faster than the 64-core EPYC Genoa 9554 in the TensorFlow ResNet-50 test, but the chips effectively tie in the AlexNet and GoogLeNet models. The 96-core EPYC Genoa 9654 surprisingly falls to the bottom of the chart in all three of the TensorFlow workloads, implying that its incredible array of chiplets might not offer the best latency and scalability for this type of model.

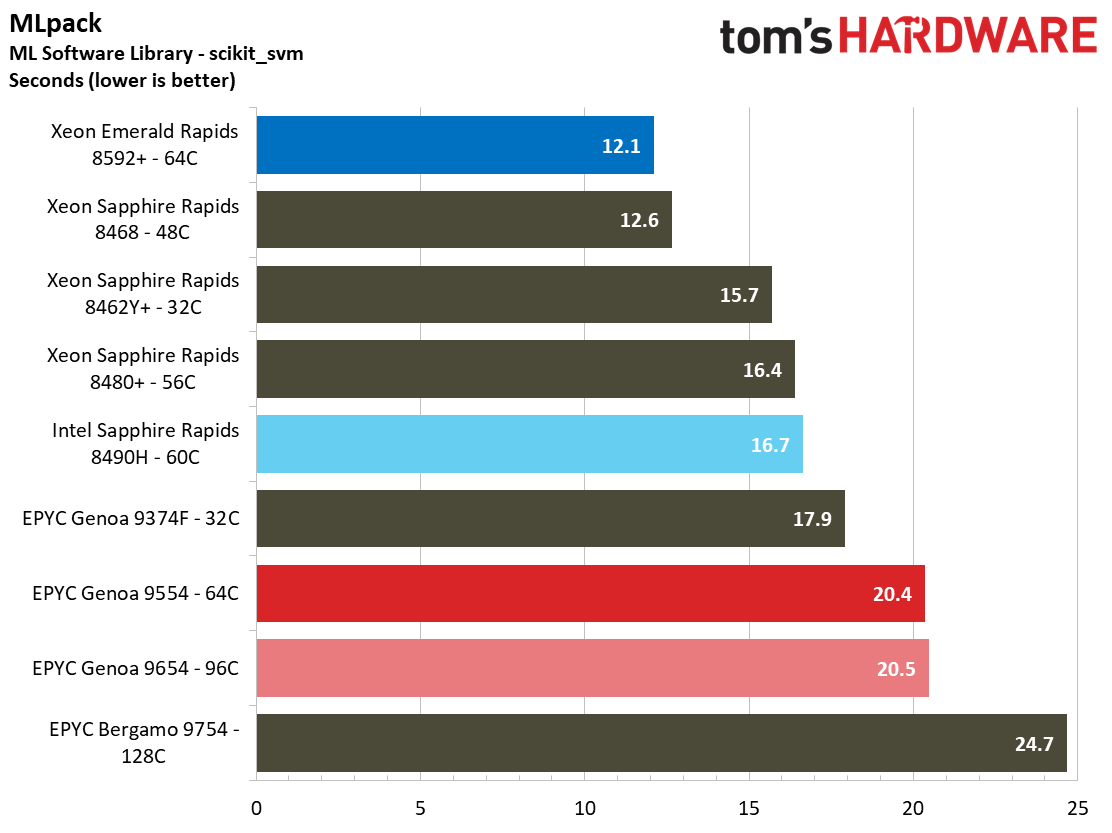

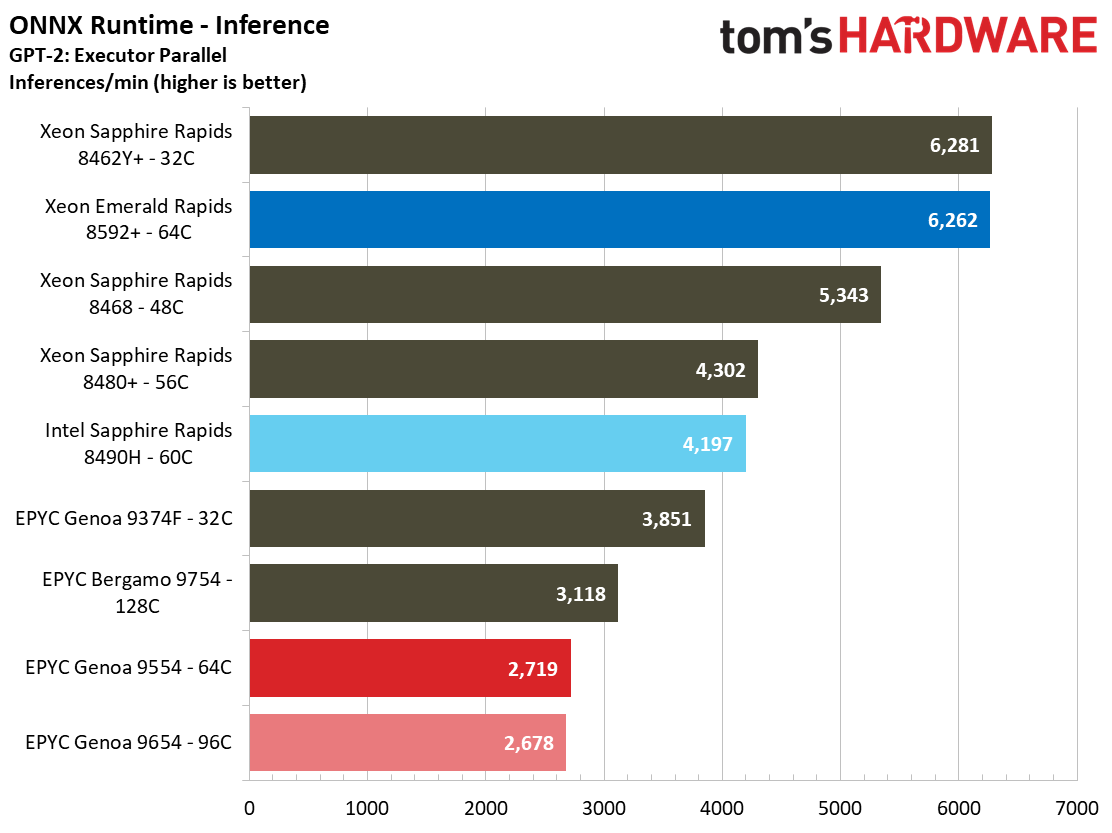

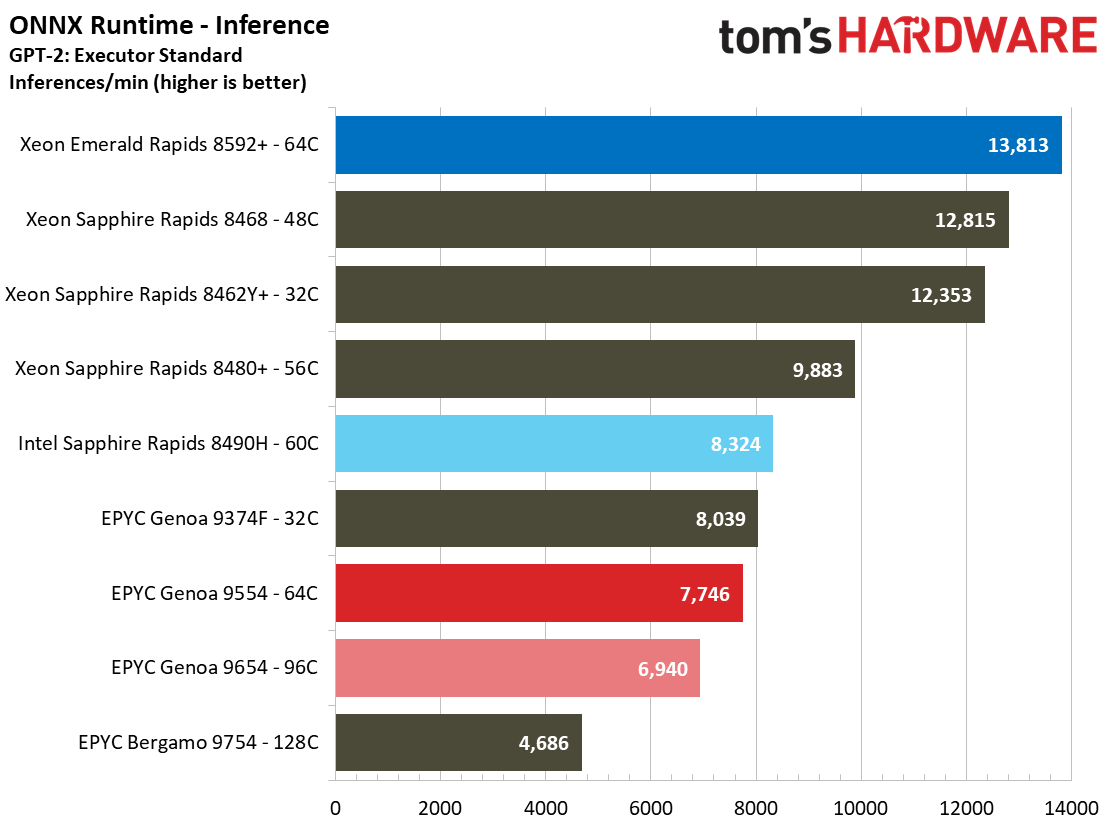

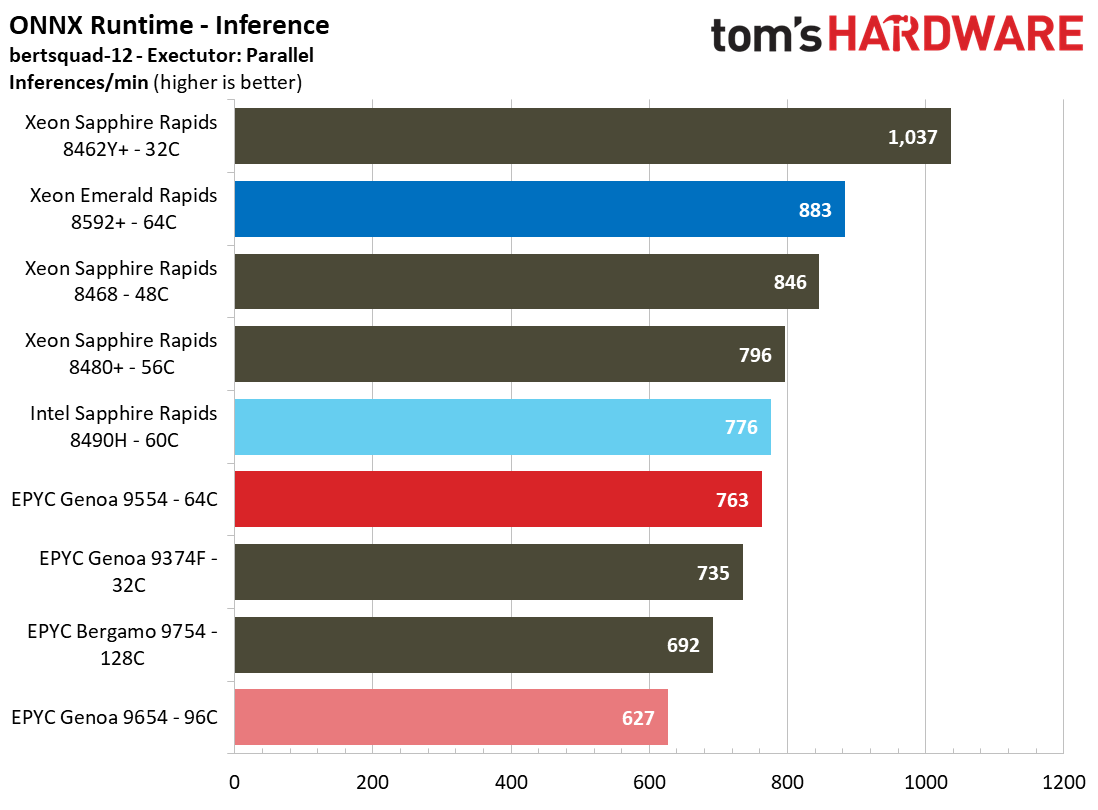

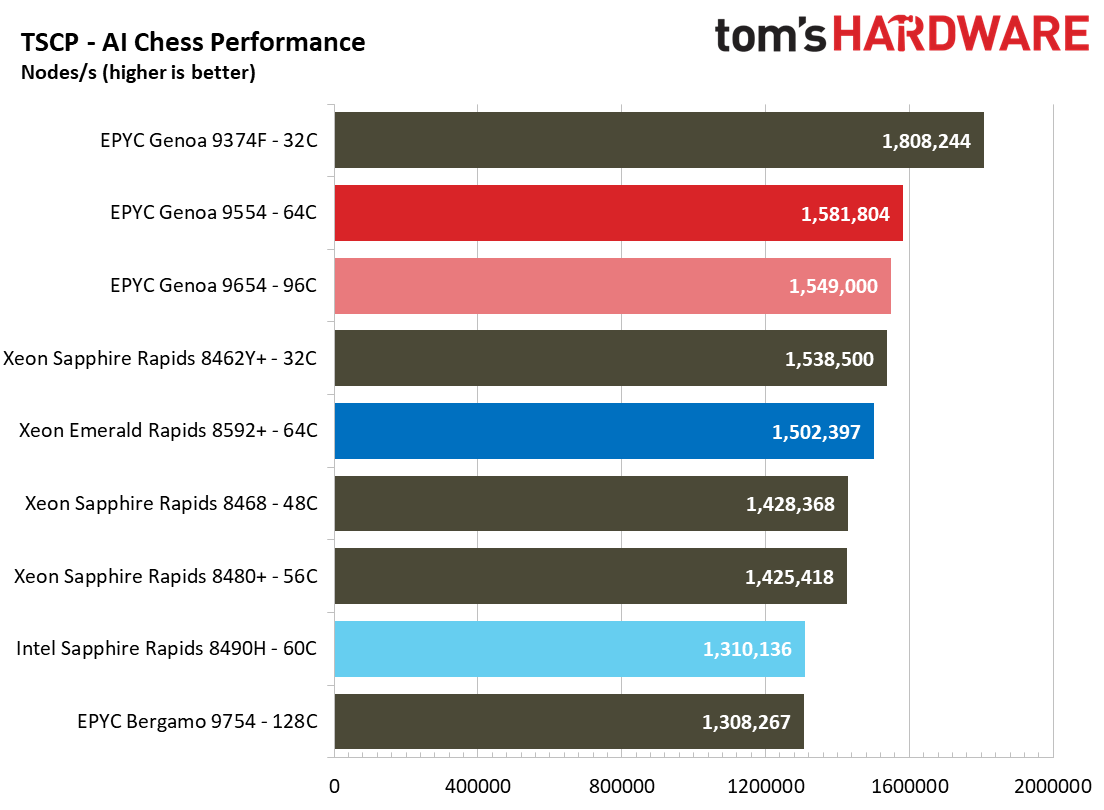

MLpack finds the 8592+ taking a strong lead over both comparable AMD processors, completing the task 40% faster than the competition. All three ONNX inference benchmarks also highlight the advantage of Emerald Rapids' built-in acceleration. The Emerald Rapids 8592+ is impressive in many of these benchmarks, but the competing 64-core EPYC 9554 is roughly 5% faster in the TSCP AI chess benchmark.

Critically, we notice a big gain for Emerald Rapids over the prior-gen 60-core 8490H in nearly every workload except the GoogLeNet model. Overall, this is an impressive show from Emerald Rapids.

As impressive as these benchmarks are, we didn't have time to fully test some of the models that experience the most uplift from AMX acceleration. We're working to address those challenges, but from other third-party benchmarks that we've seen, it's clear that AMX gives Intel an incredible lead in models that leverage the instruction set.

Molecular Dynamics and Parallel Compute Benchmarks

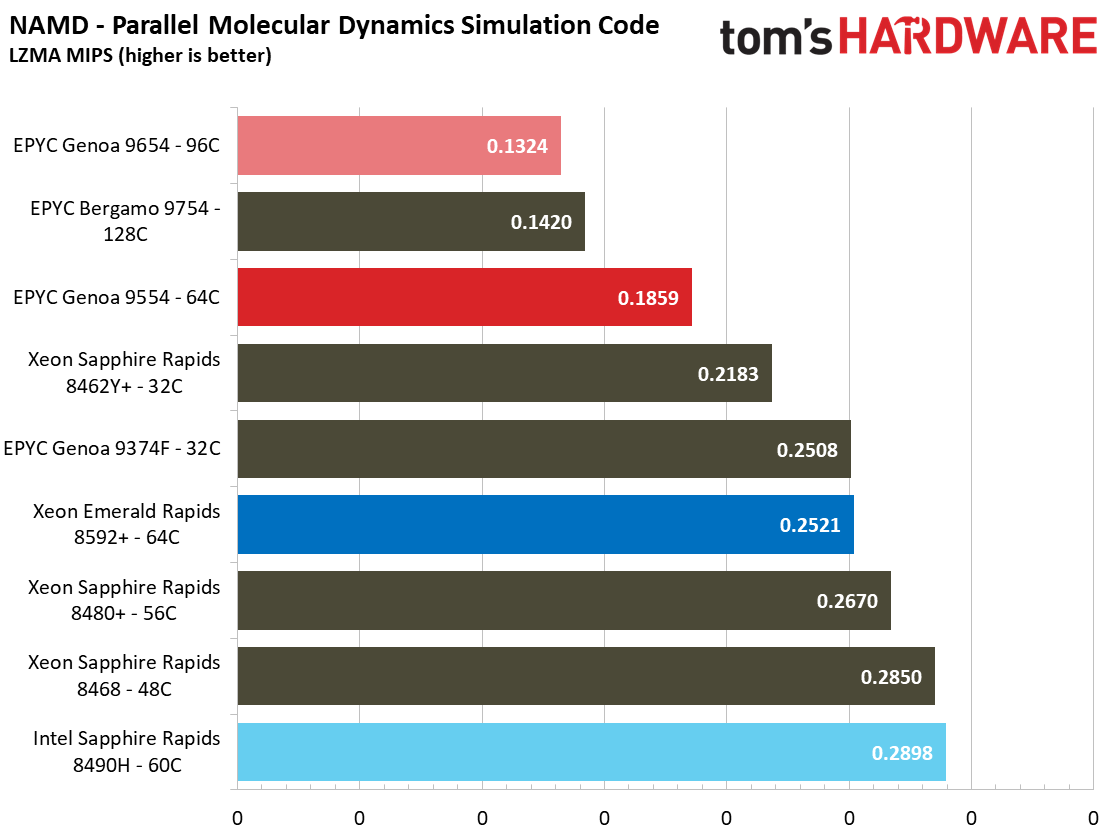

NAMD is a parallel molecular dynamics code designed to scale well with additional compute resources; it scales up to 500,000 cores and is one of the premier benchmarks used to quantify performance with simulation code. The 96-core Genoa 9654 is the hands-down winner here, but the 64-core 9554 is also impressive, with a strong win over Emerald Rapids.

You'll notice we also included the 128-core Bergamo 9754 in the test pool, but be aware that many of the benchmarks in our suite don't specifically target the types of workloads this chip is designed for. It does make for interesting viewing, though. We'll be able to add Intel's competing Sierra Forest early next year, but for now, Bergamo's presence highlights that Intel lags AMD in density-optimized chips.

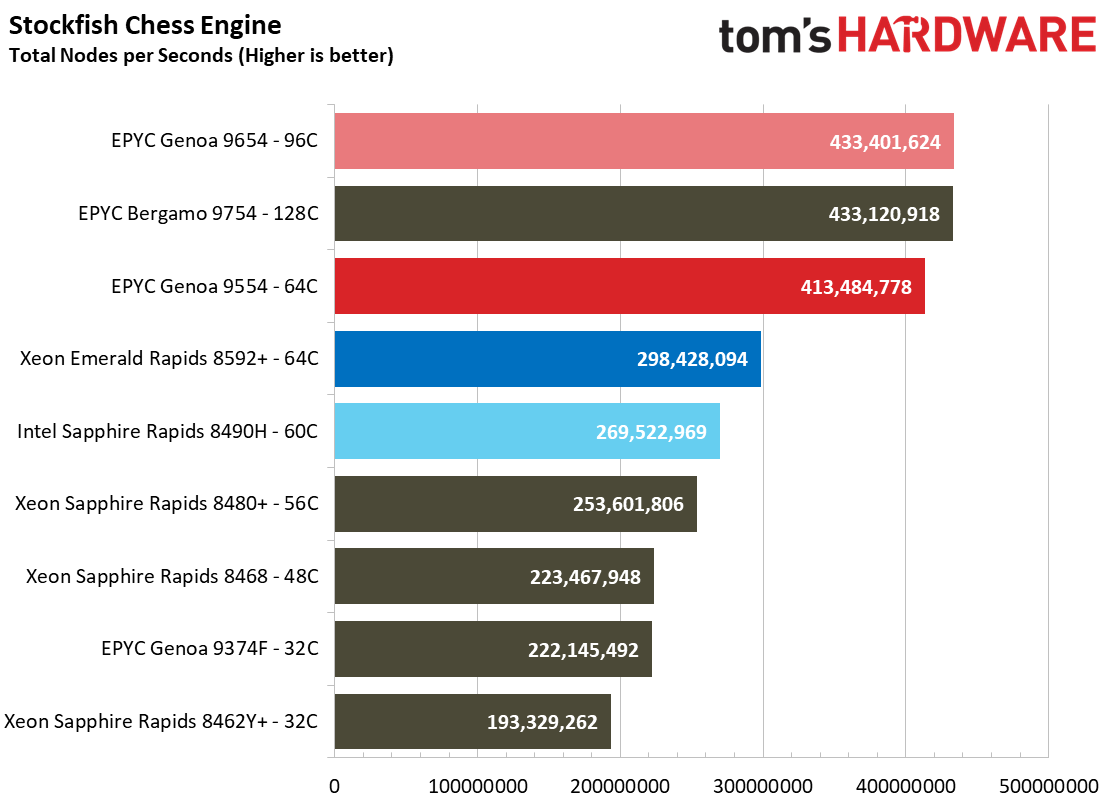

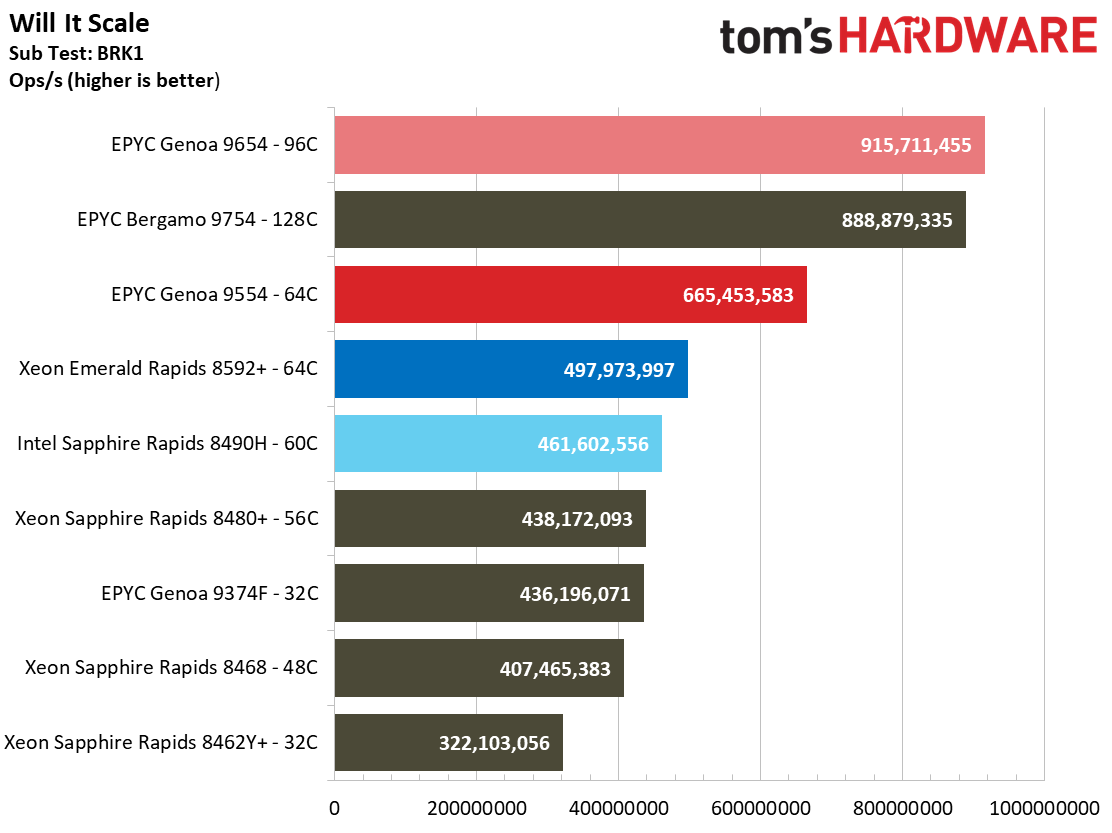

The award-winning Stockfish chess engine is designed for the utmost scalability across core counts; it can scale up to 512 threads. The Genoa 9654 effectively ties the Bergamo 9754 for the lead. This massively parallel code scales well with EPYC's core counts, and the 64-core EPYC 9554 is 39% faster than the 64-core 8592+.

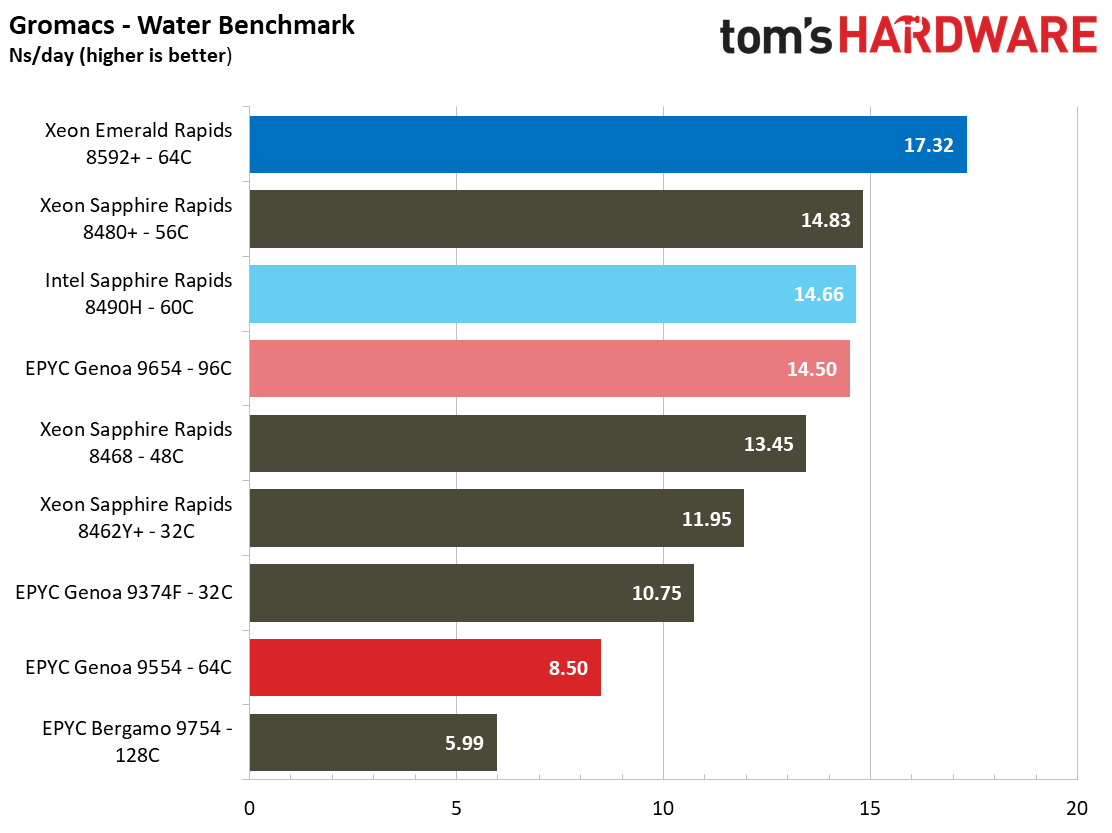

The Gromacs water benchmark simulates Newtonian equations of motion with hundreds of millions of particles. This workload scales well, but many workloads hit other bottlenecks, like memory throughput, the network-on-chip (NoC), or power constraints, before they can fully leverage the highest core counts. Here, the 8592+ is 19% faster than AMD's 96-core 9654 and roughly twice as fast as the 64-core 9554.
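Comparisons like this one pit chips with different core counts against each other. The minimal Python sketch below gives a rough per-core view by scaling a whole-chip speedup by the core-count ratio. It assumes throughput scales linearly with cores, which, given the bottlenecks mentioned above, real workloads often don't. Treat the output as a back-of-the-envelope figure, not a measured result. The function name is our own illustration.

```python
def per_core_ratio(speedup: float, cores_a: int, cores_b: int) -> float:
    """Per-core throughput of chip A relative to chip B.

    speedup: whole-chip throughput of A divided by that of B
             (1.19 means A is 19% faster).
    Assumes throughput scales linearly with core count (a simplification).
    """
    return speedup * cores_b / cores_a


# Gromacs figures from the text: the 64-core 8592+ is 19% faster than the
# 96-core 9654 and roughly twice as fast as the 64-core 9554.
vs_9654 = per_core_ratio(1.19, cores_a=64, cores_b=96)
vs_9554 = per_core_ratio(2.0, cores_a=64, cores_b=64)
print(f"8592+ per-core vs 9654: {vs_9654:.3f}x")
print(f"8592+ per-core vs 9554: {vs_9554:.3f}x")
```

By this crude measure, the 8592+ delivers roughly 1.8x the 9654's Gromacs throughput per core. The equal-core comparison with the 9554 stays at about 2x because the core counts cancel out.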

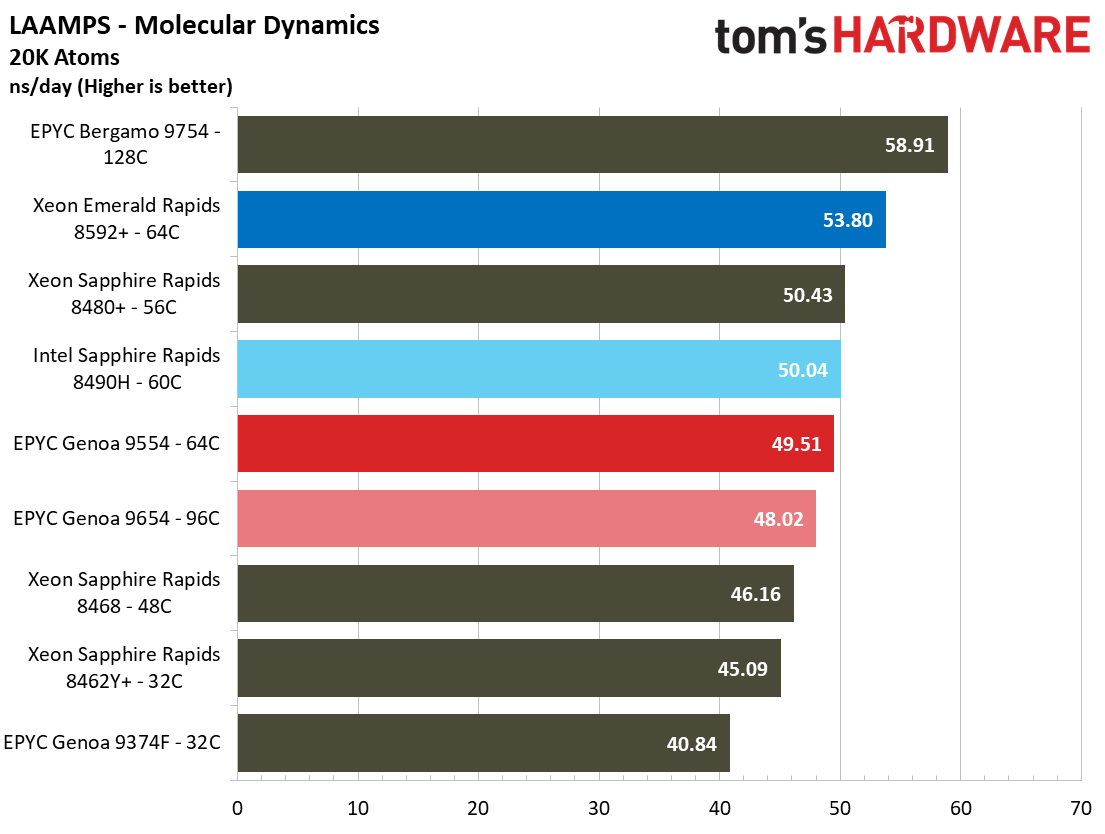

We can say much the same about the LAMMPS molecular dynamics code. While this code is inherently scalable, we're clearly hitting other bottlenecks before the full might of the 9654's cores can be unleashed. The 8592+ carves out another surprising win in this benchmark.

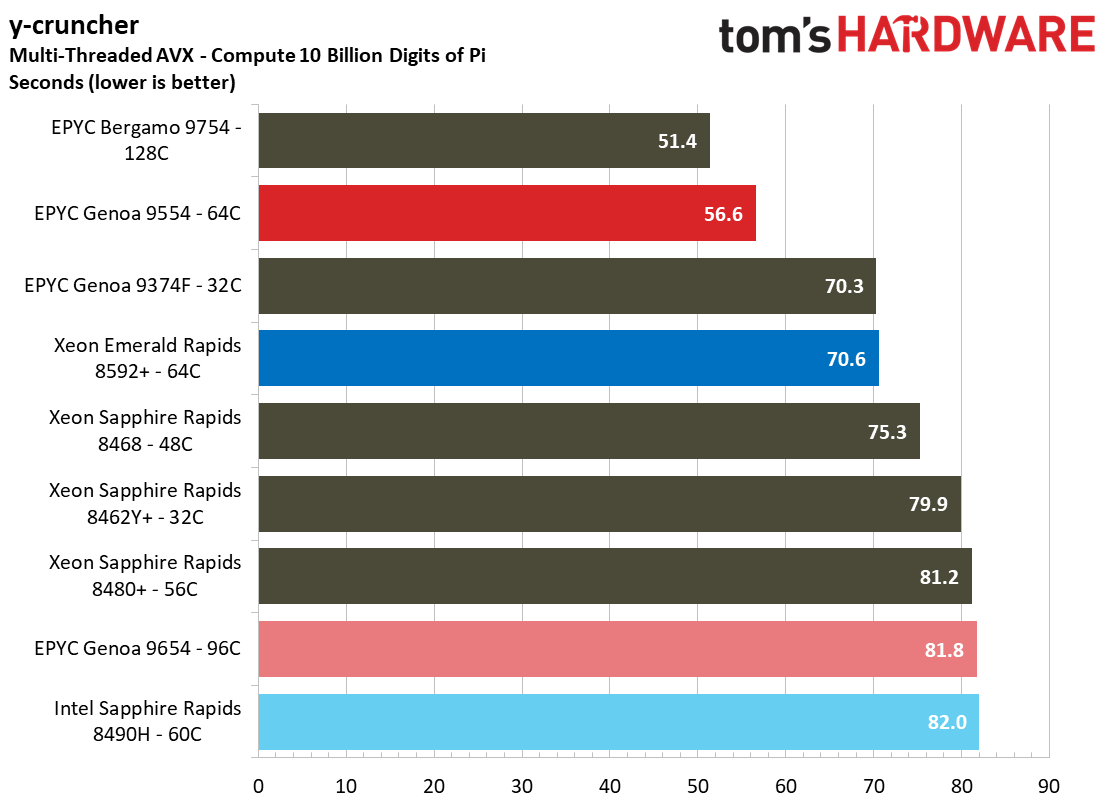

y-cruncher is incredibly sensitive to memory throughput. Here, the per-core memory throughput of the 96-core Genoa 9654 is stretched to its limits, while its slimmer 64-core counterpart, the Genoa 9554, tops the chart with a solid win over Emerald Rapids in this multi-threaded AVX test.

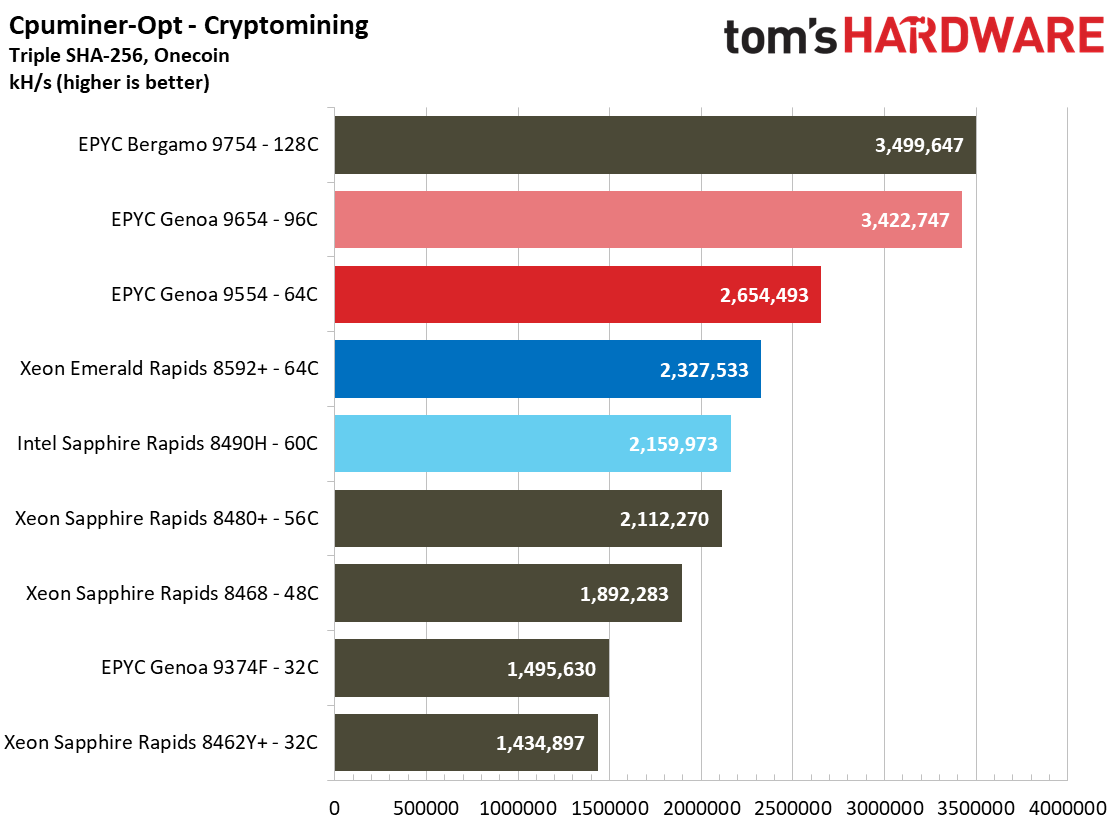

Finally, if you're foolhardy enough to run cryptomining on server CPUs that weigh in over $10,000 apiece and price-to-performance is no concern, the Bergamo 9754 is your chip.

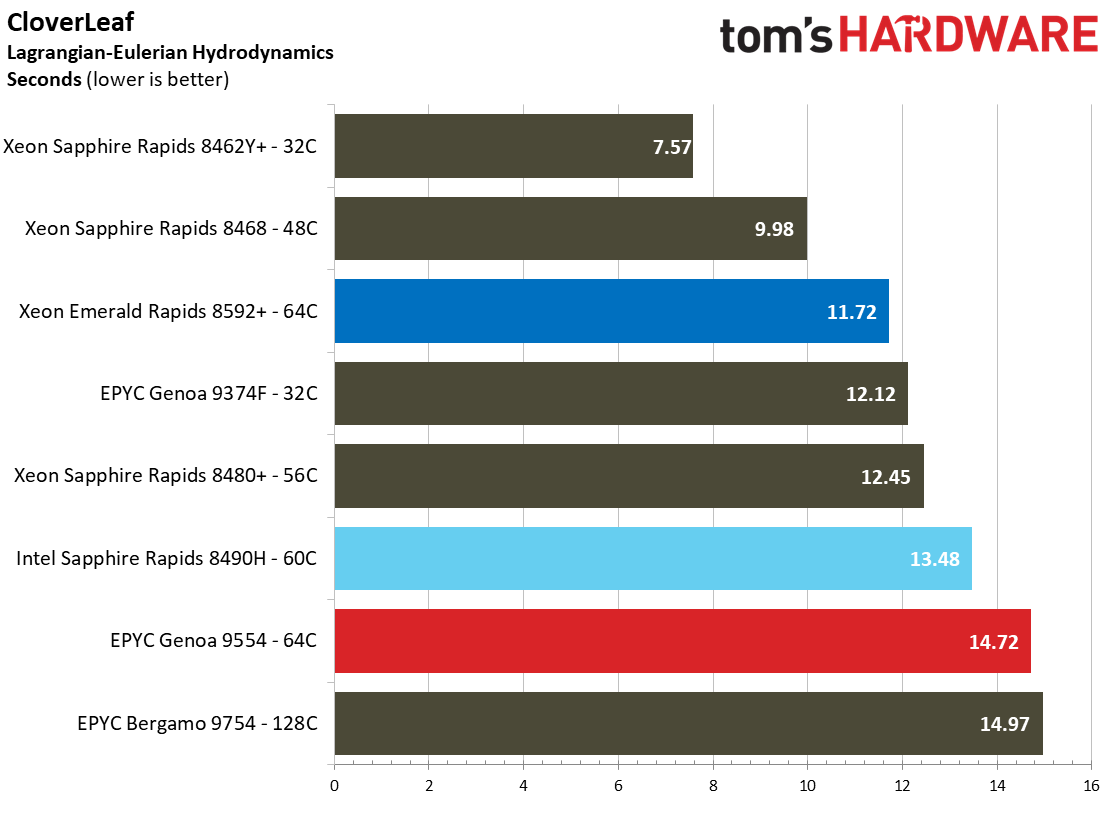

Scientific and Computational Fluid Dynamics

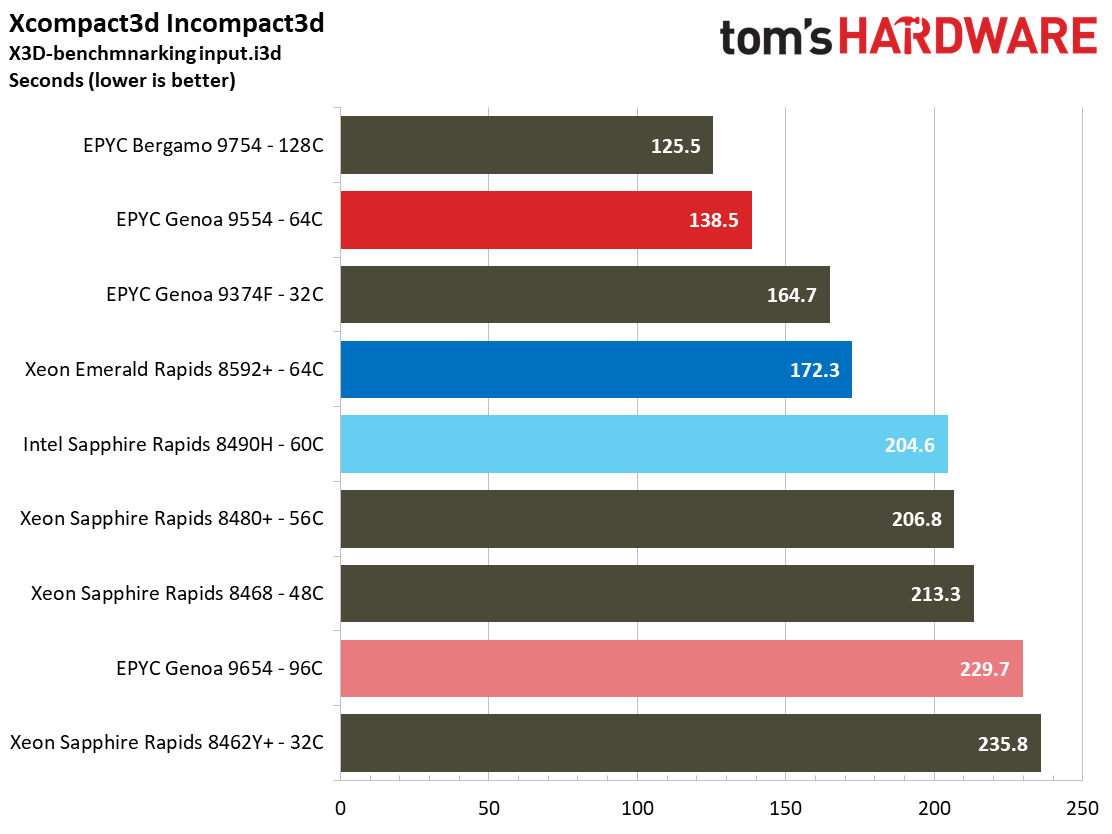

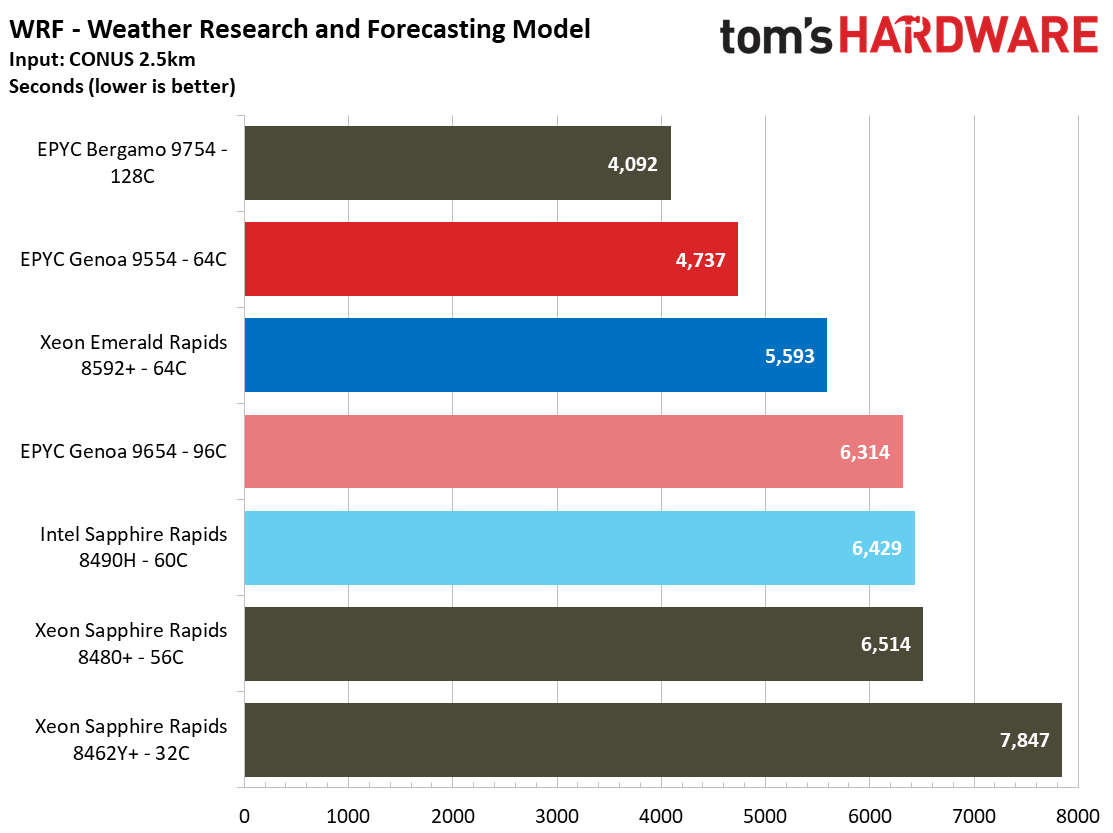

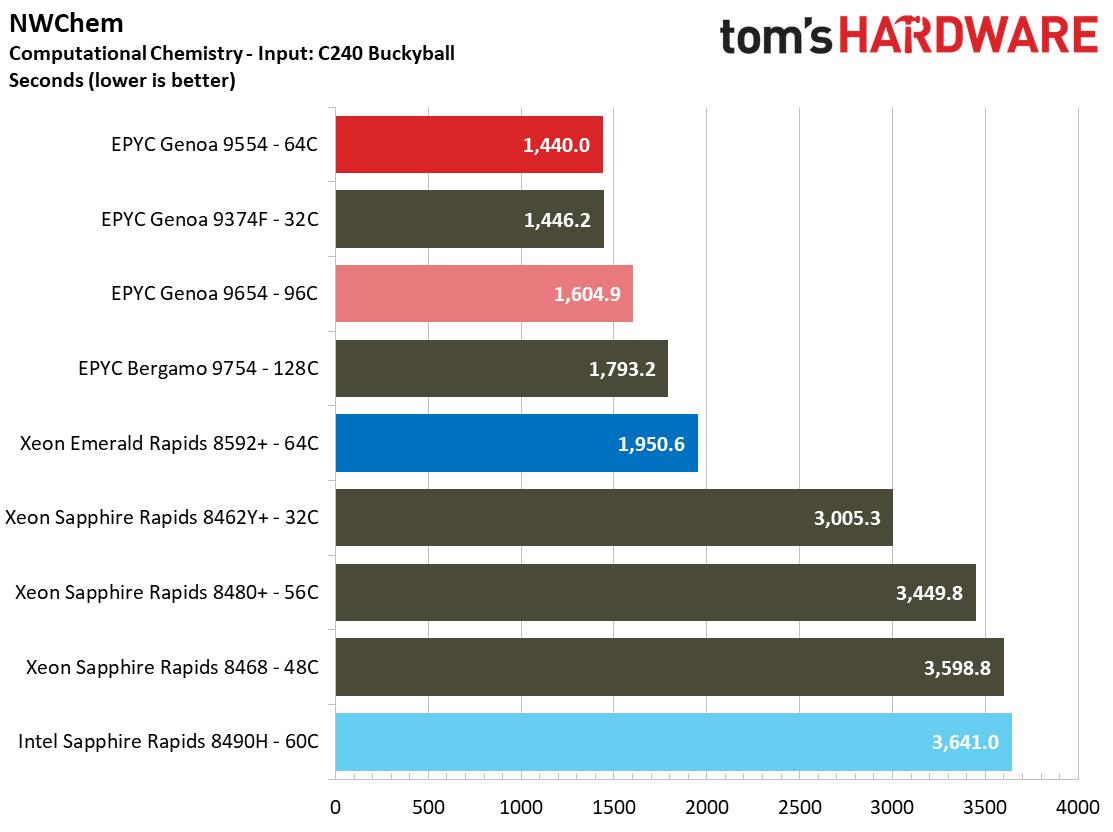

The Weather Research and Forecasting model (WRF) is memory bandwidth sensitive, so it's no surprise to see the Genoa 9554 with its 12-channel memory controller outstripping the eight-channel Emerald Rapids 8592+. Again, the 9654's lackluster performance here is probably at least partially due to lower per-core memory throughput. We see a similar pattern with the NWChem computational chemistry workload, though the 9654 is more impressive in this benchmark.
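The memory-channel argument can be made concrete by calculating theoretical peak bandwidth from the DDR5 speeds and channel counts of our test platforms. Genoa uses 12 channels of DDR5-4800 per socket, and Emerald Rapids uses eight channels of DDR5-5600 per socket, with one DIMM per channel. The sketch below assumes the standard 64-bit (8-byte) data path per DDR5 channel, excluding ECC bits, and divides bandwidth evenly across cores. These are paper peaks; sustained bandwidth in real workloads is lower.

```python
def peak_bandwidth_gbs(mts: int, channels: int, bus_bytes: int = 8) -> float:
    """Theoretical peak DRAM bandwidth per socket in GB/s (decimal units).

    mts: transfer rate in MT/s (e.g. 4800 for DDR5-4800)
    bus_bytes: data bytes per transfer per channel (64-bit channel = 8 bytes)
    """
    return mts * bus_bytes * channels / 1000


configs = {
    # name: (DDR5 speed in MT/s, channels per socket, cores per socket)
    "EPYC 9654": (4800, 12, 96),
    "EPYC 9554": (4800, 12, 64),
    "Xeon 8592+": (5600, 8, 64),
}

for name, (mts, channels, cores) in configs.items():
    bw = peak_bandwidth_gbs(mts, channels)
    print(f"{name}: {bw:.1f} GB/s per socket, {bw / cores:.2f} GB/s per core")
```

On paper, that works out to 4.8 GB/s per core for the 9654, 7.2 GB/s for the 9554, and 5.6 GB/s for the 8592+. This ordering is consistent with the 9554 outpacing its 96-core sibling in bandwidth-bound tests like WRF and y-cruncher.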

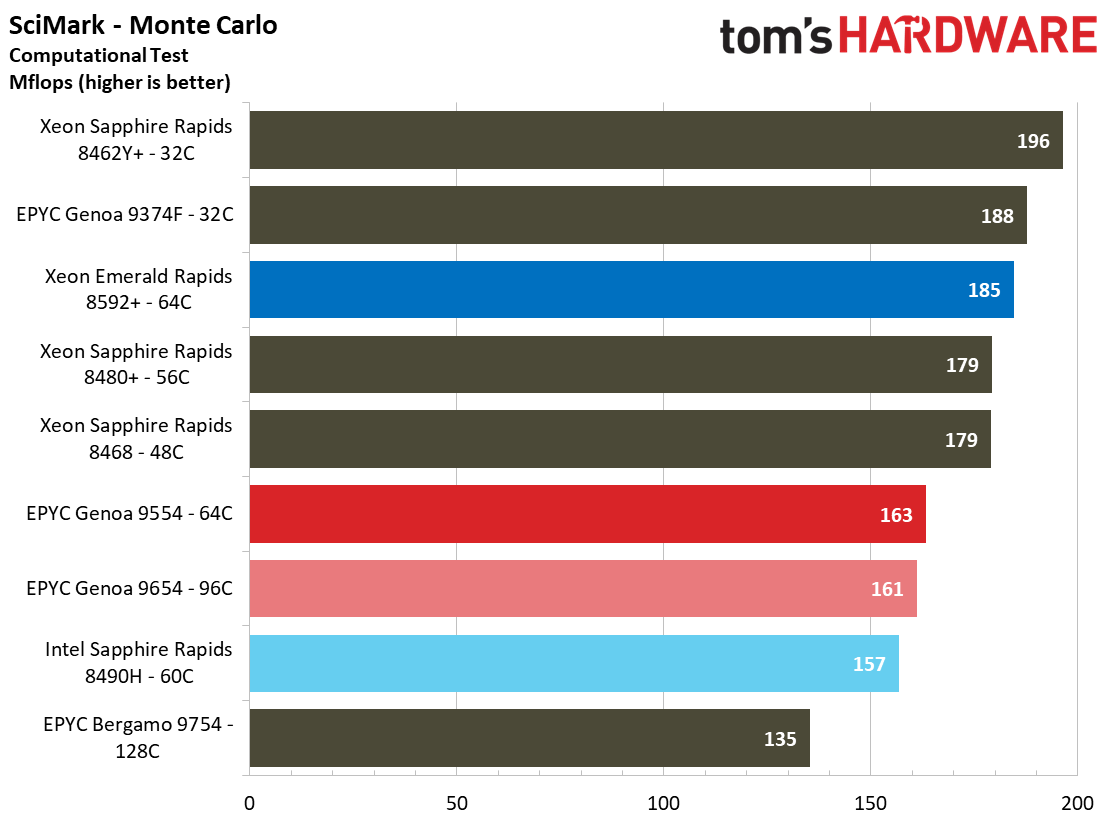

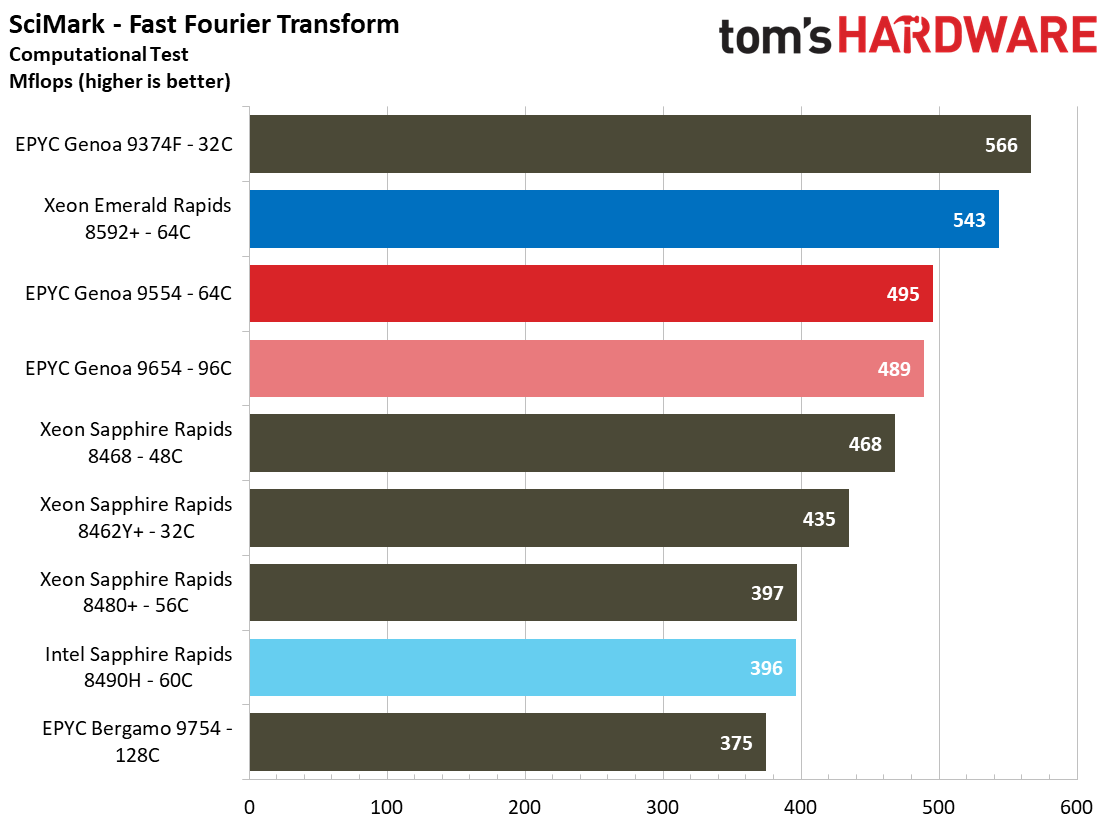

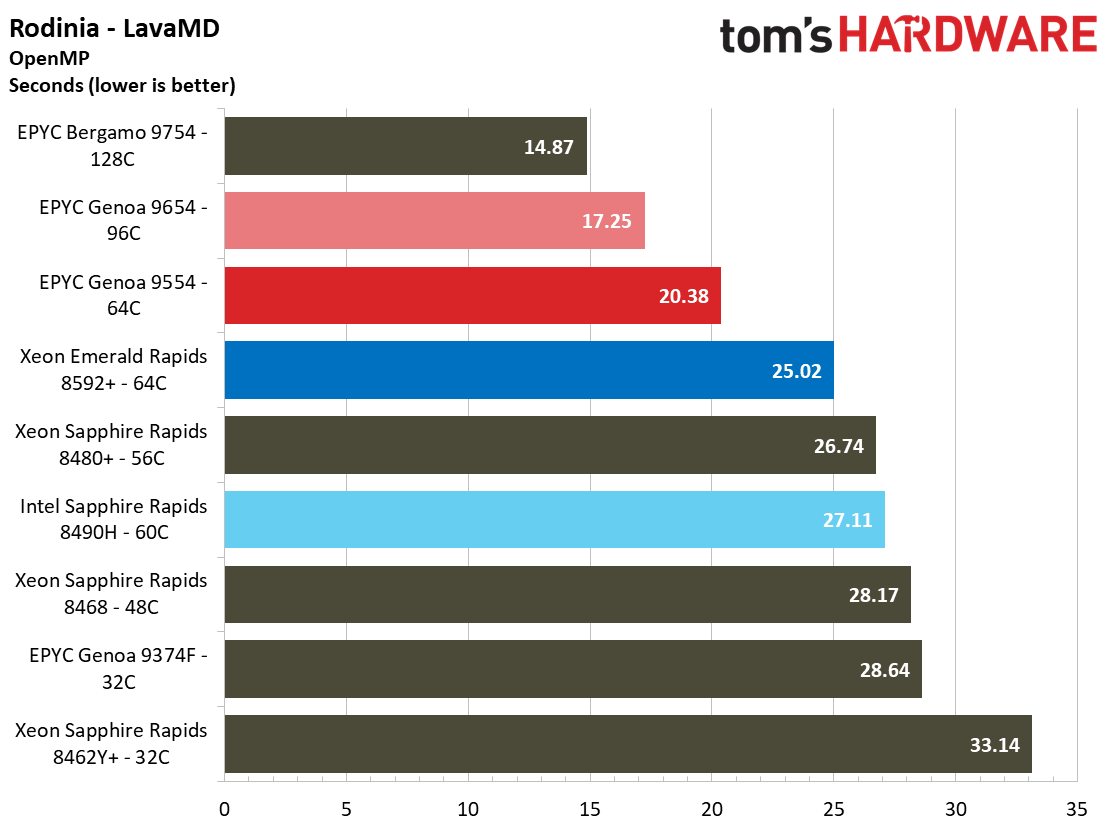

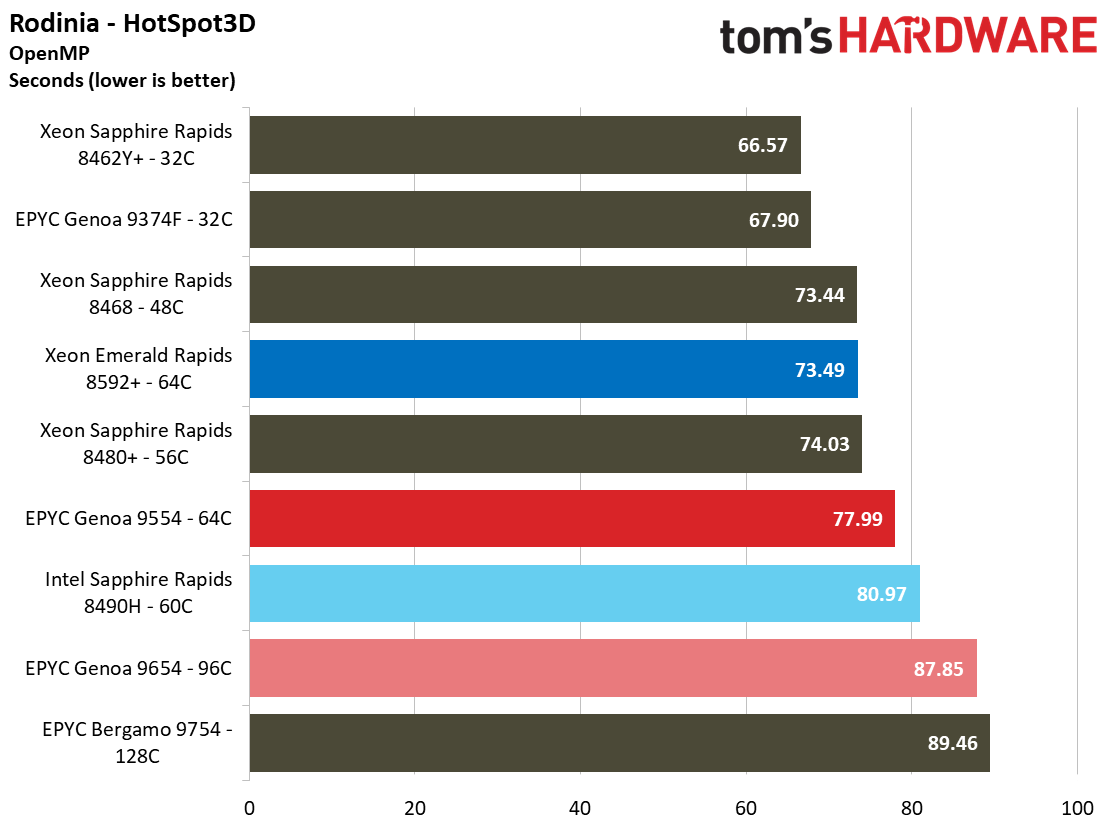

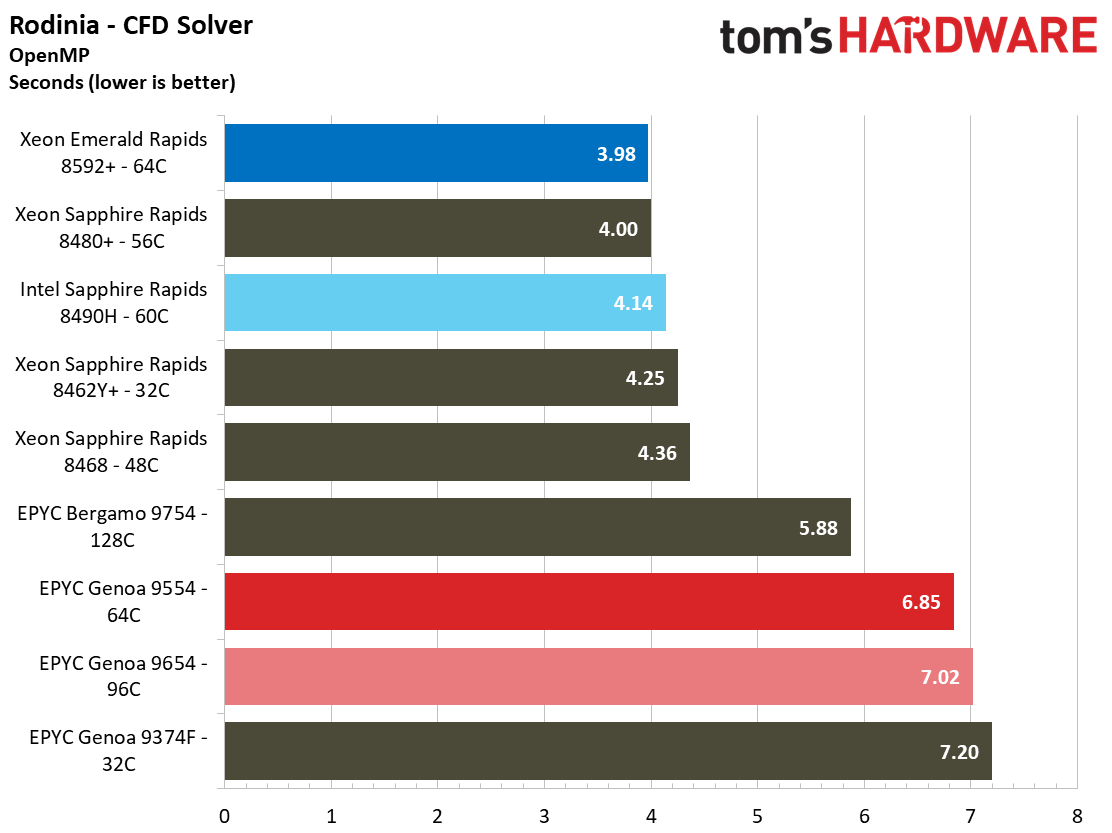

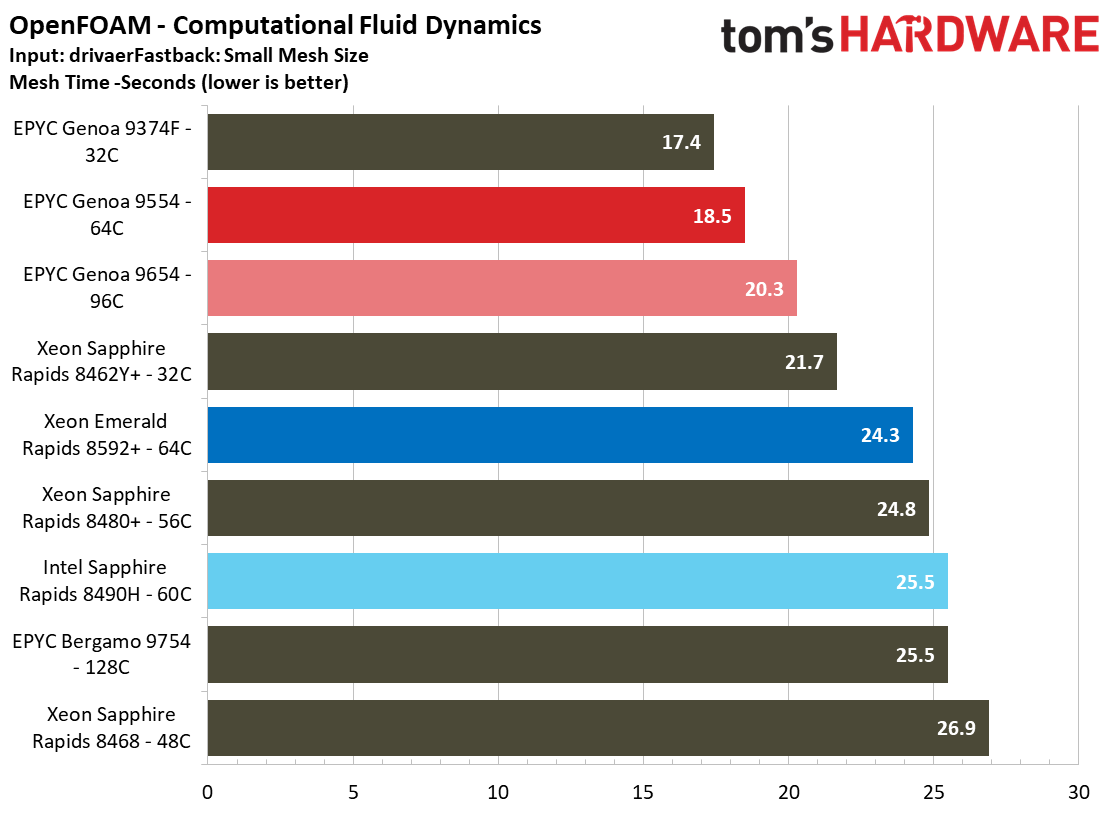

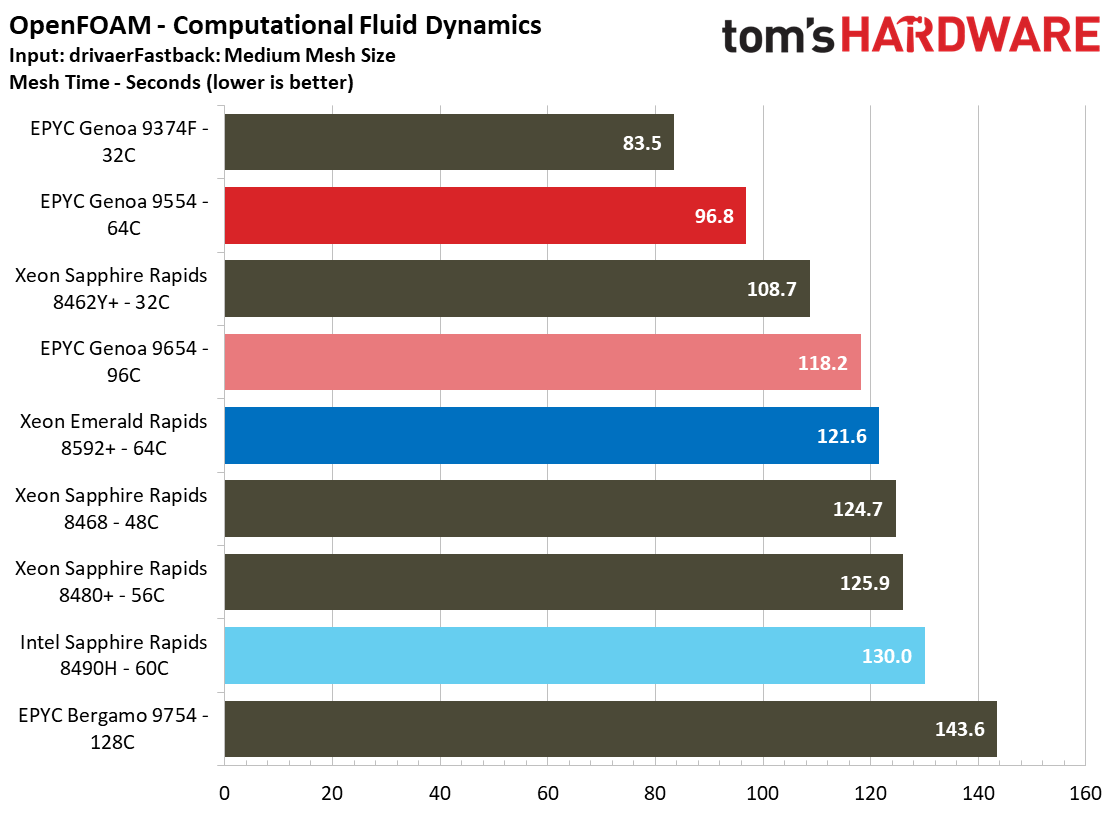

The 8592+ is impressive in the SciMark benchmarks, but falls behind in the Rodinia LavaMD test. It makes up for that shortcoming with the Rodinia HotSpot3D and CFD Solver benchmarks, with the latter showing a large delta between the 8592+ and competing processors. The 64-core Genoa 9554 fires back in the OpenFOAM CFD workloads, though.

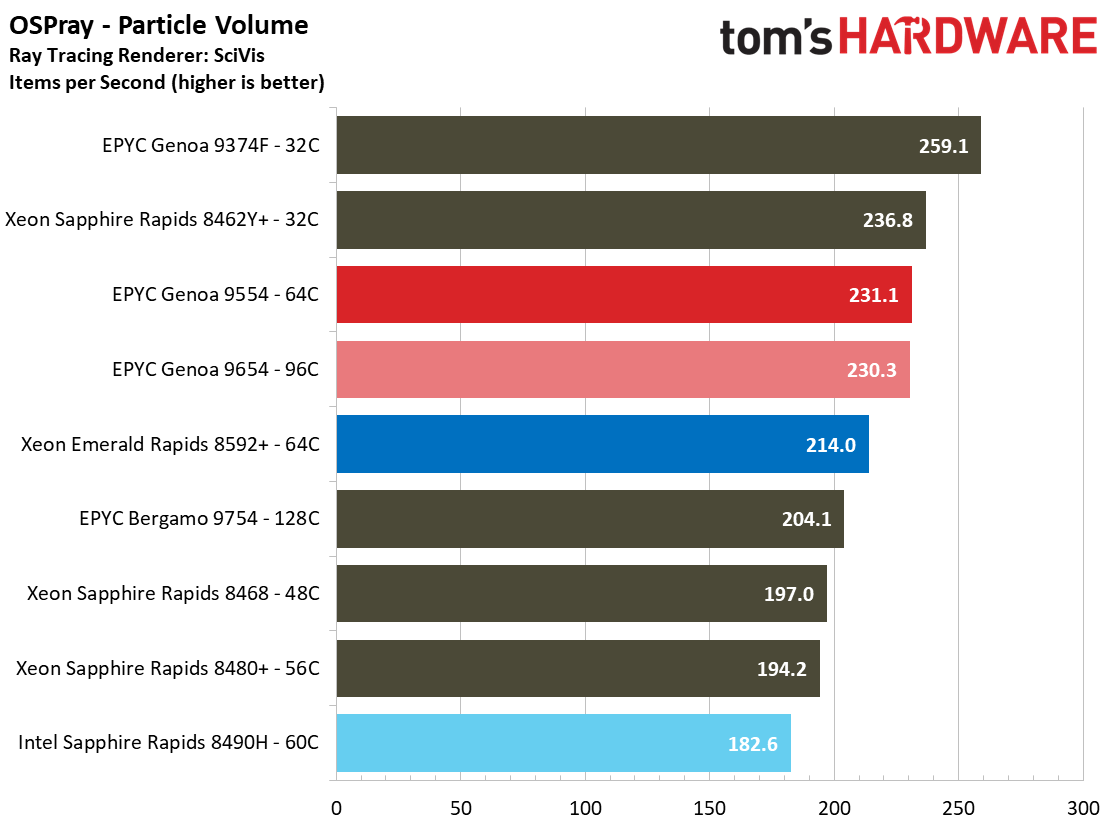

Rendering Benchmarks

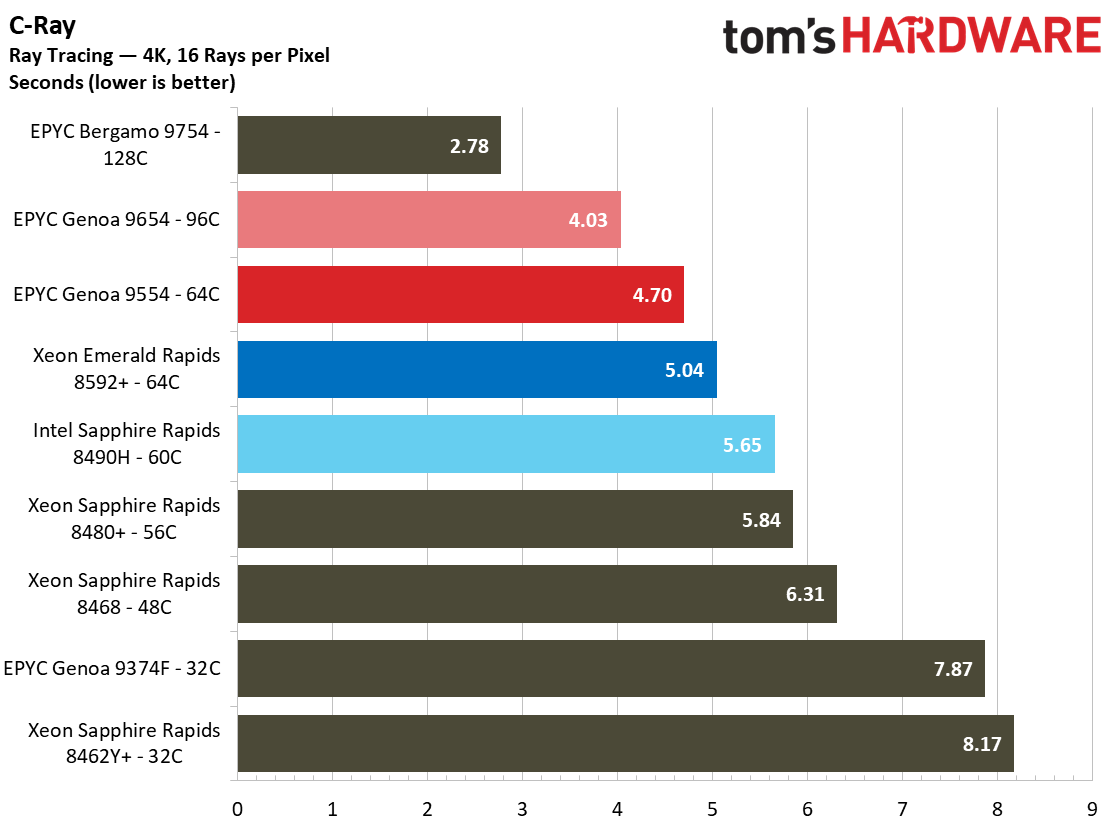

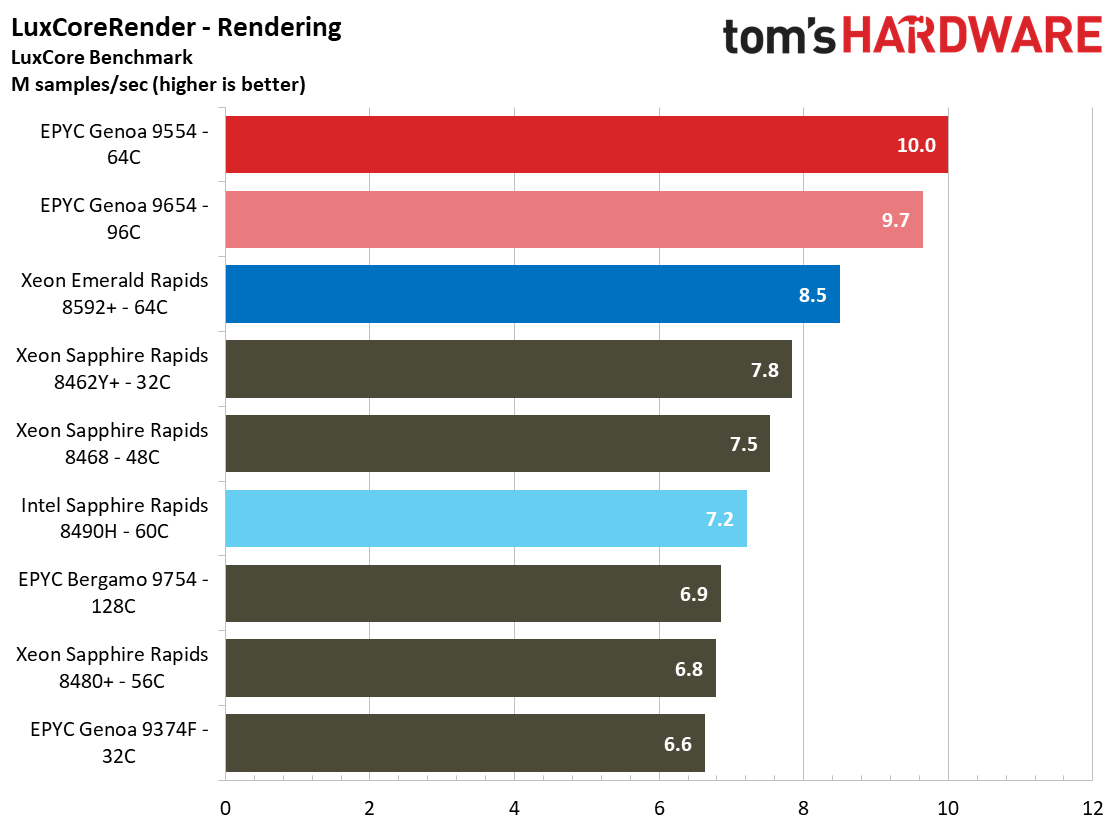

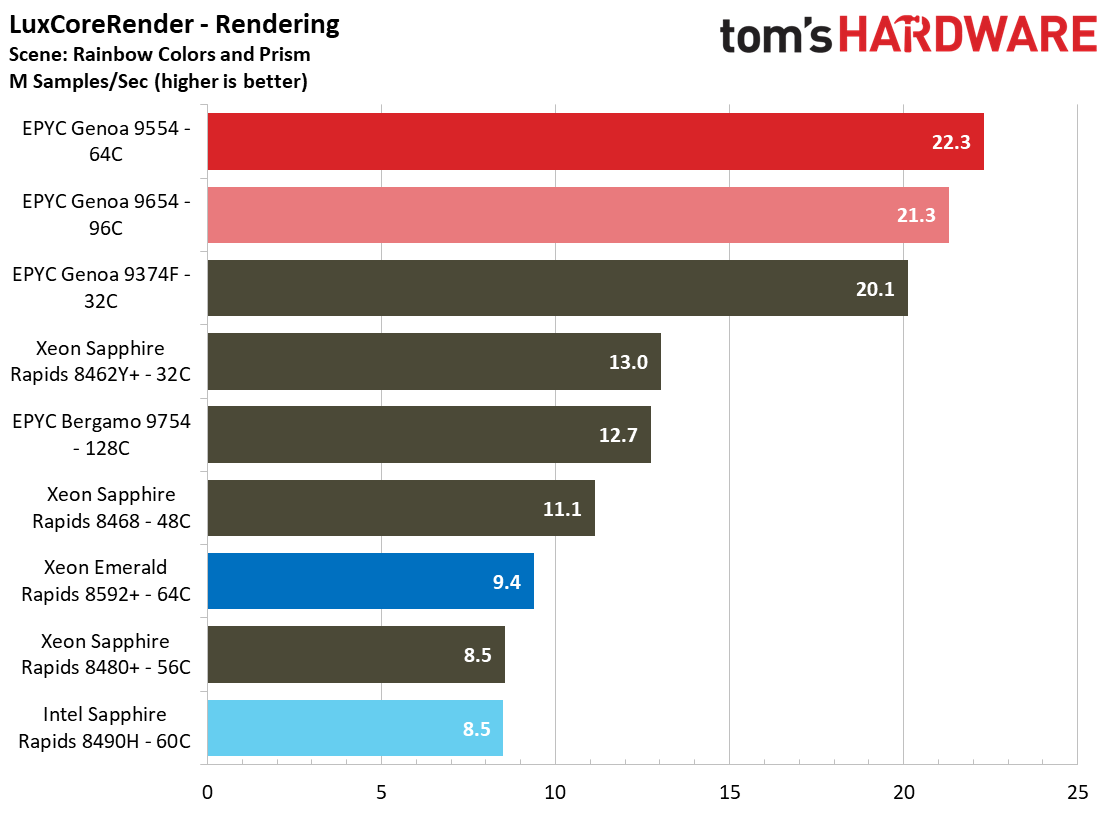

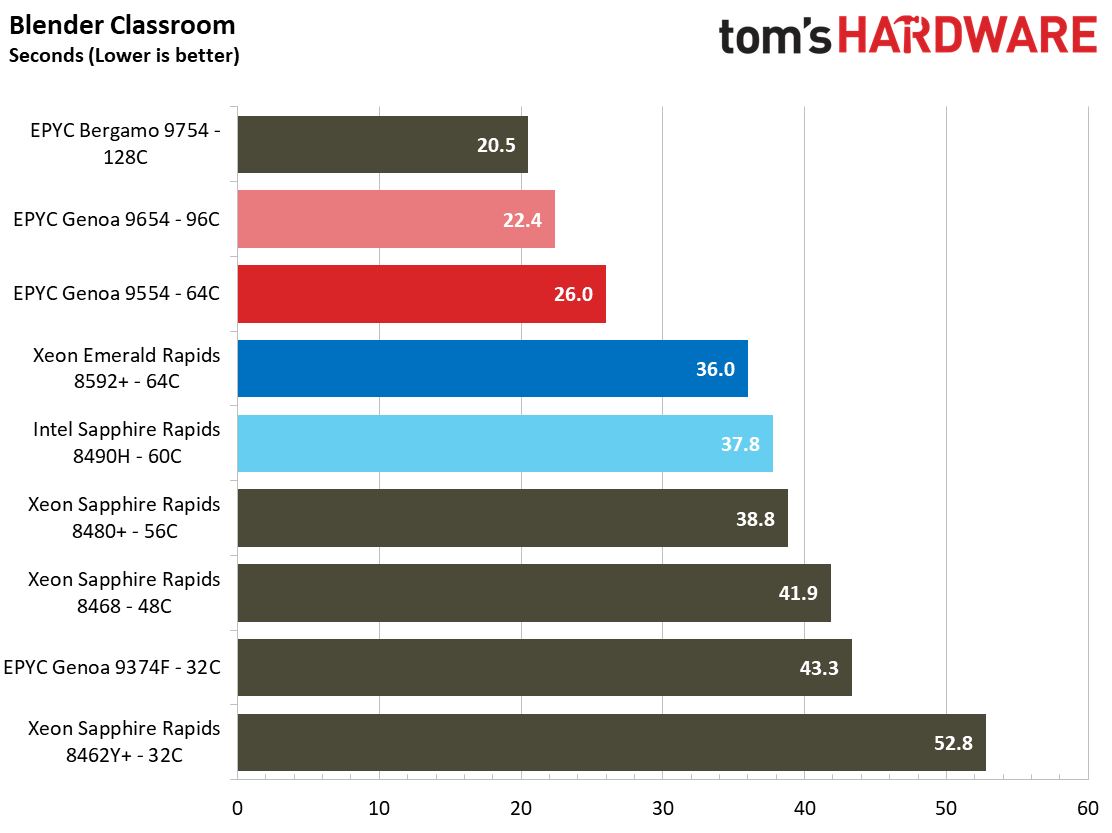

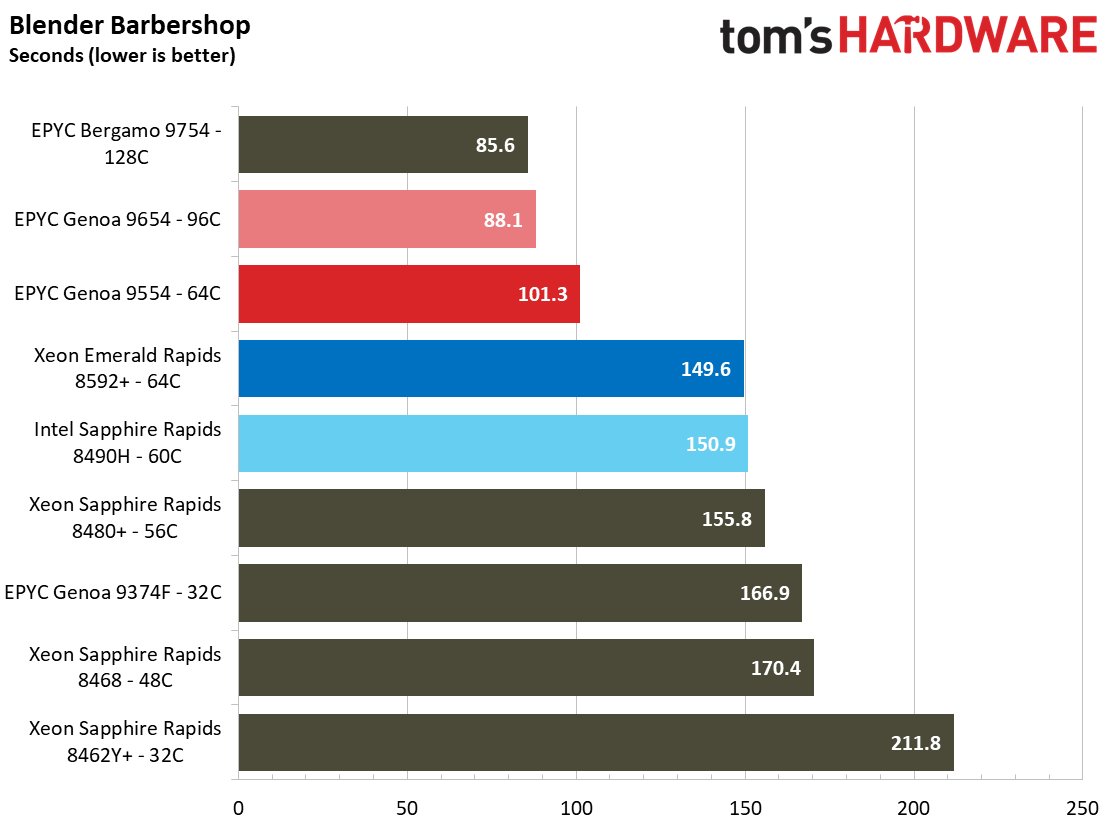

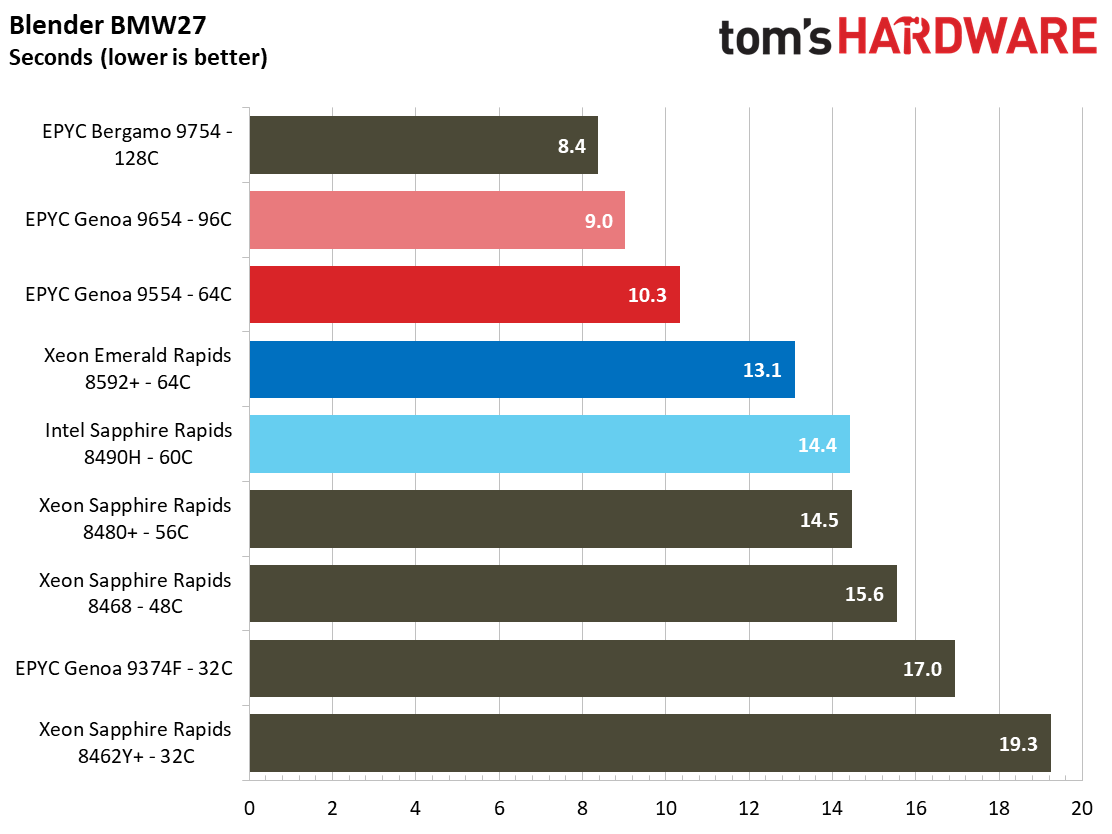

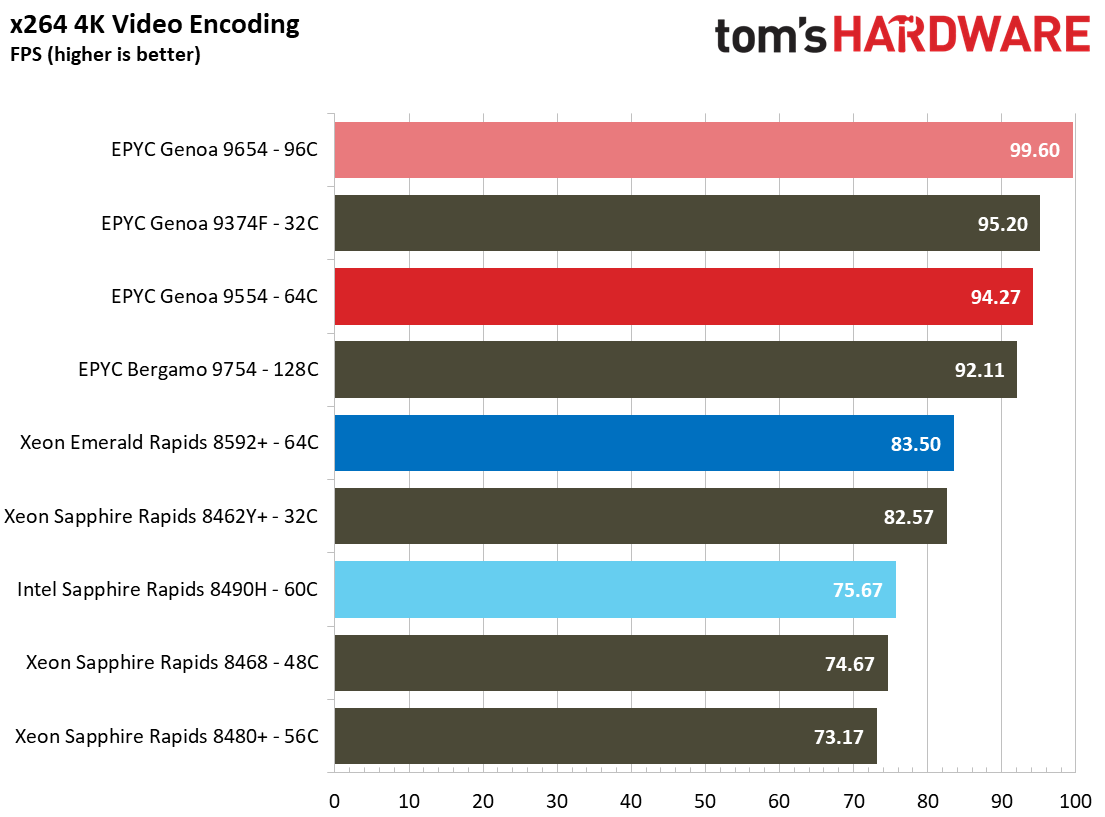

Turning to more standard fare, most modern rendering applications take full advantage of the available compute resources, provided you can keep the cores fed with data. This type of workload has long been AMD's forte, and that trend continues here.

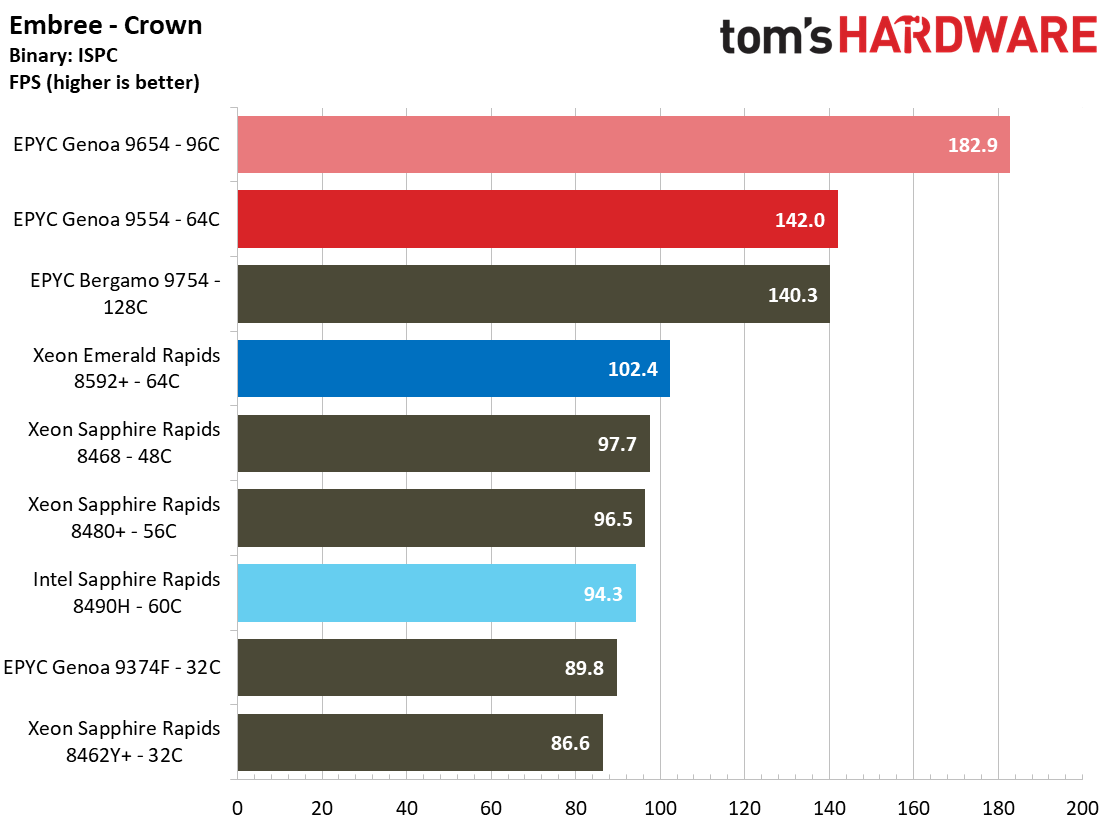

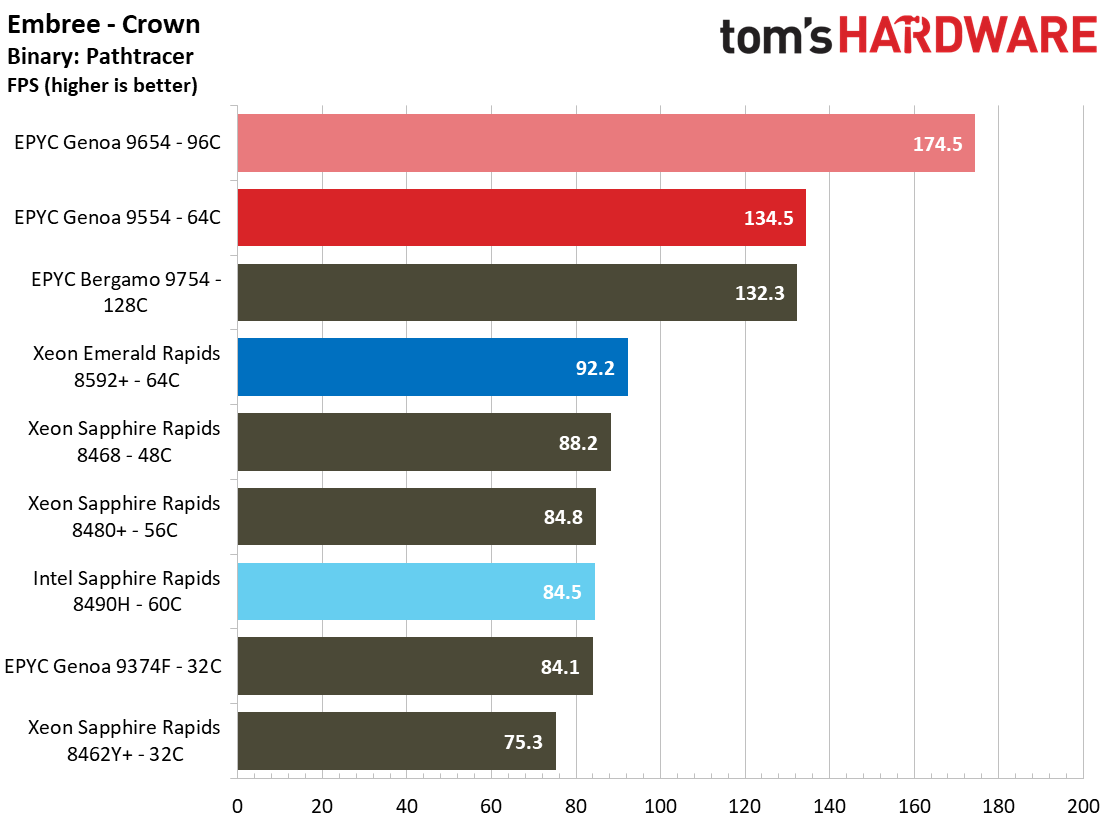

Intel developed Embree, a library of ray tracing kernels for CPUs that leverages the AVX-512 instruction set. Nonetheless, the EPYC Genoa processors dominate both of these Intel-designed benchmarks.

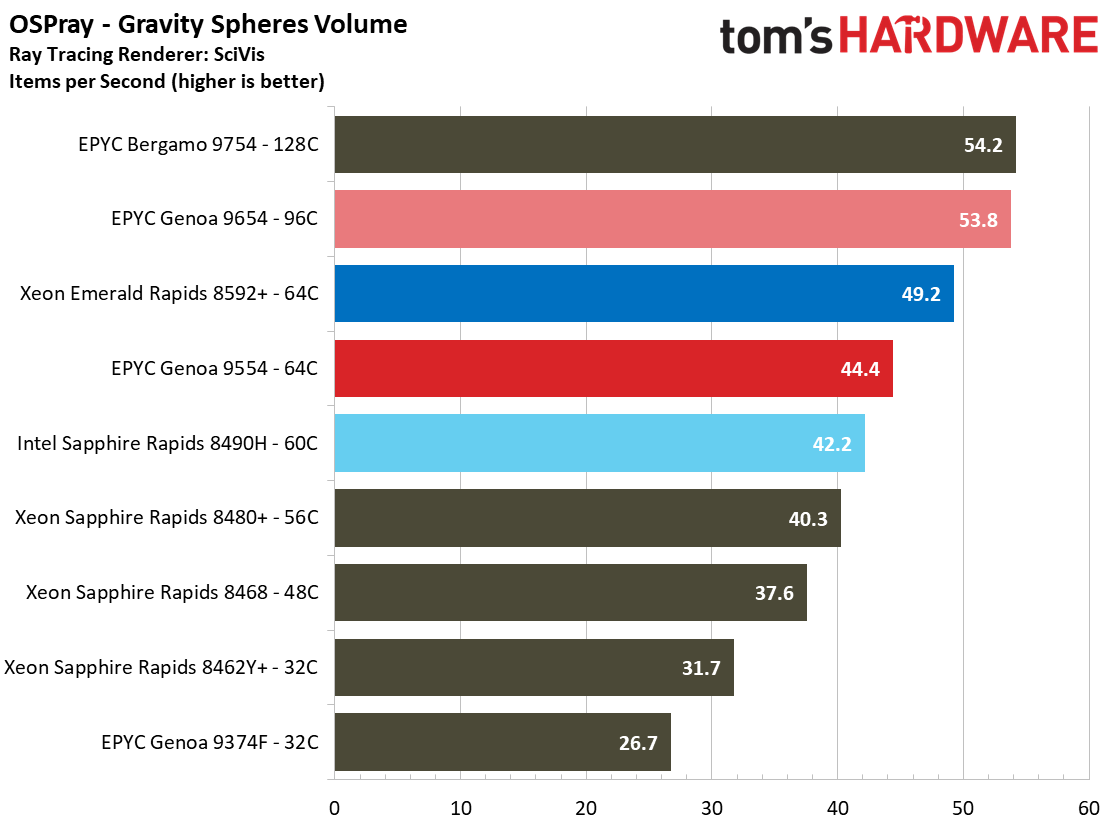

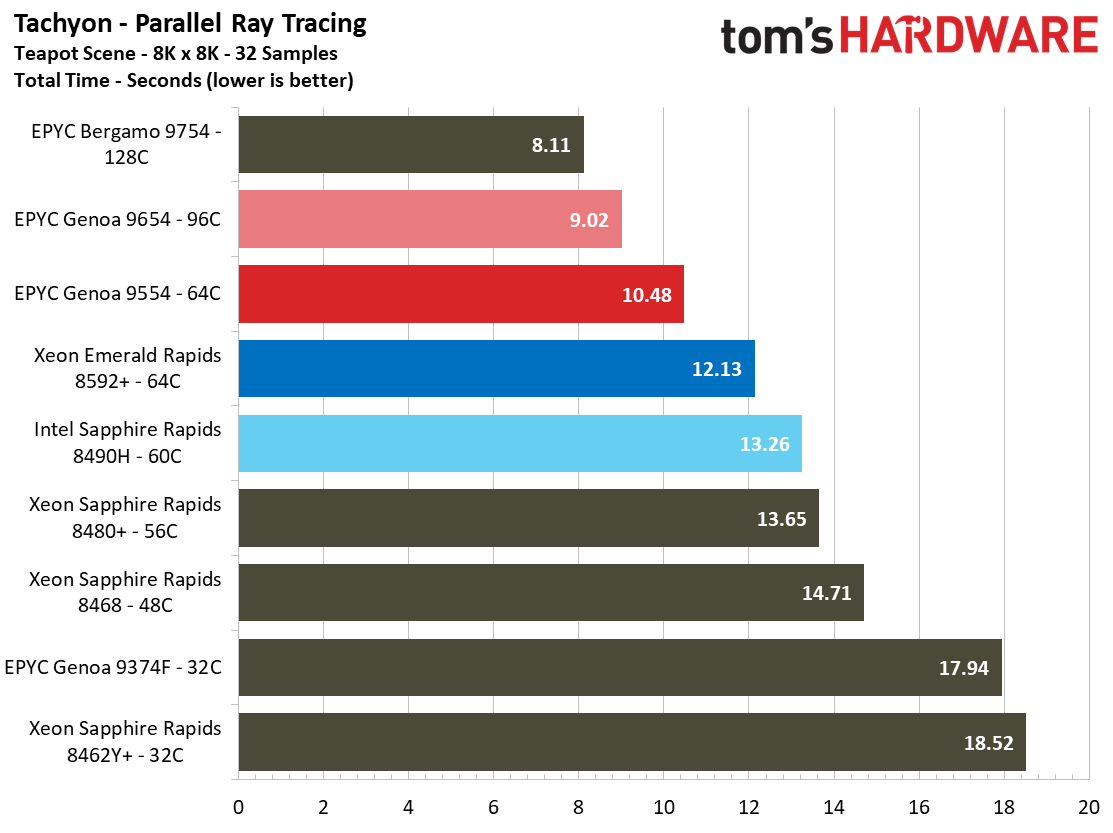

Moving on to compute-intensive ray tracing benchmarks, the Genoa chips stretch their legs in the Embree-based OSPRay benchmark and in Tachyon, again taking impressive leads.

Overall, AMD's Genoa wins this group of benchmarks, even when judged on a core-to-core basis.

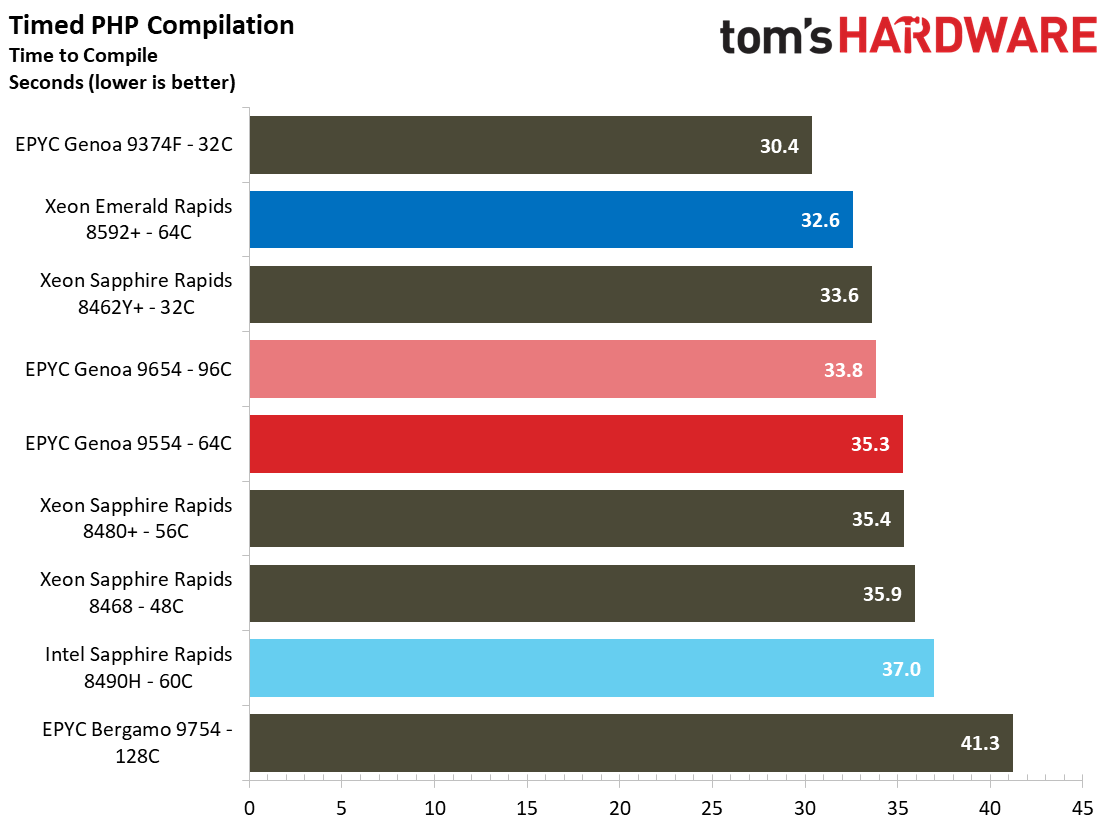

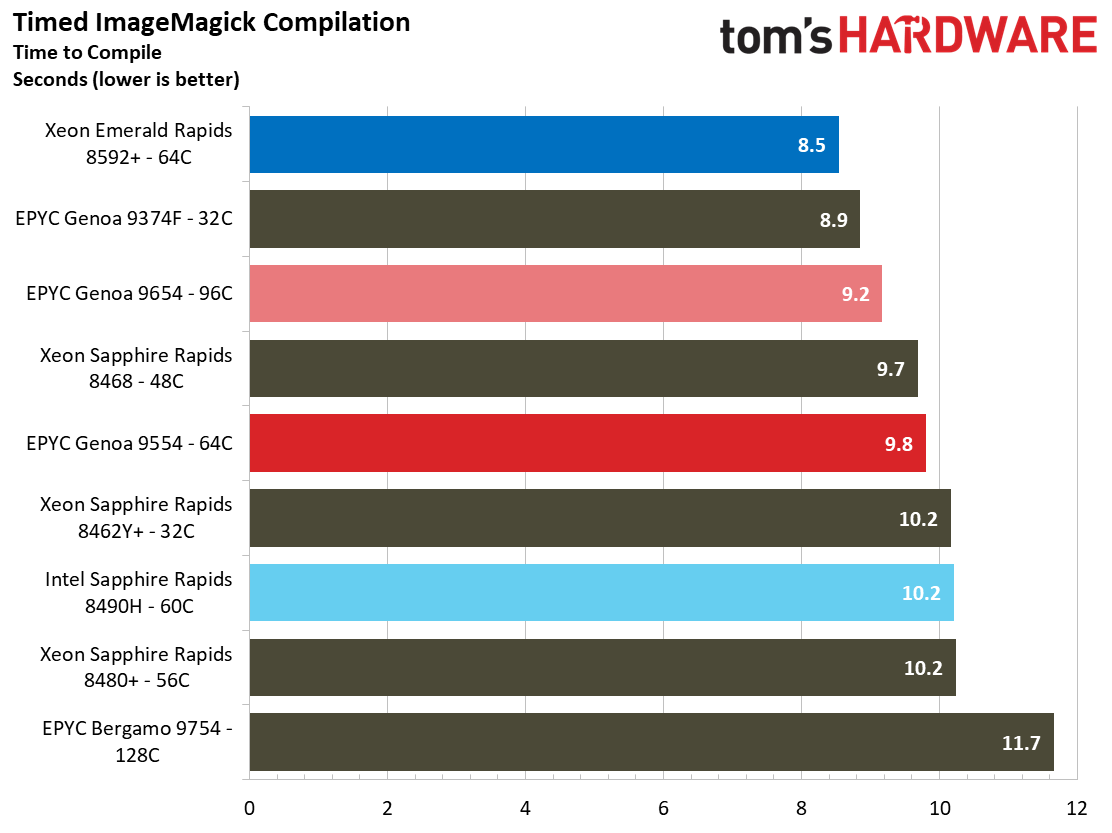

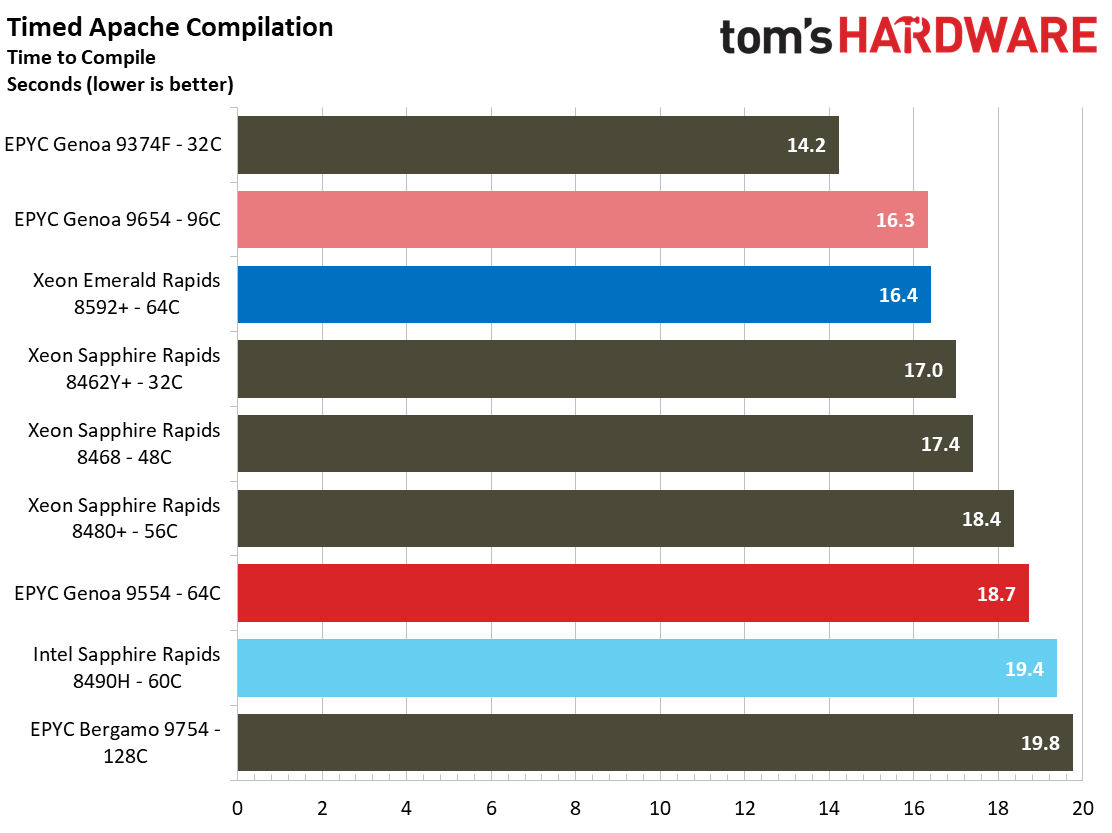

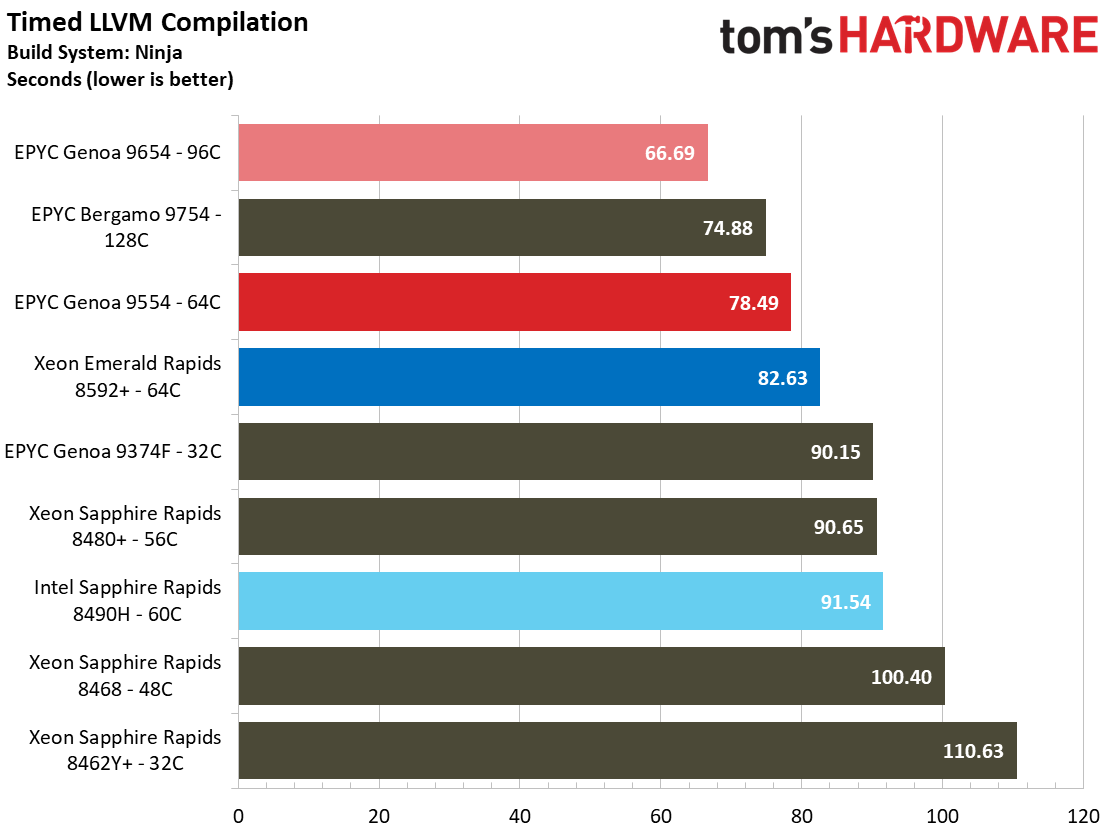

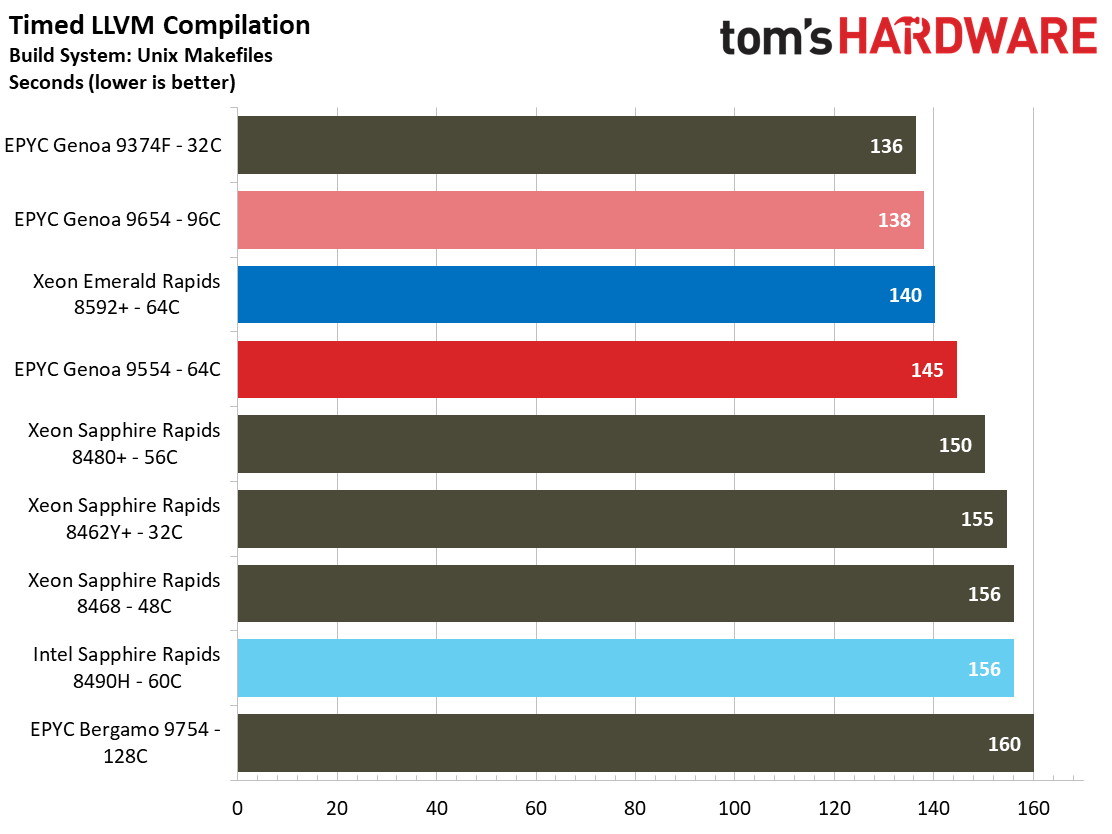

Compilation Benchmarks

AMD's Genoa is brutal in multi-threaded workloads, so we shifted gears to compilation to see how the chips handle more varied work. Intel has made considerable gains in these types of workloads, likely born of the lower latency of its pared-back dual-tile architecture. Of course, faster memory and larger L3 caches also lift all boats in compilation workloads.

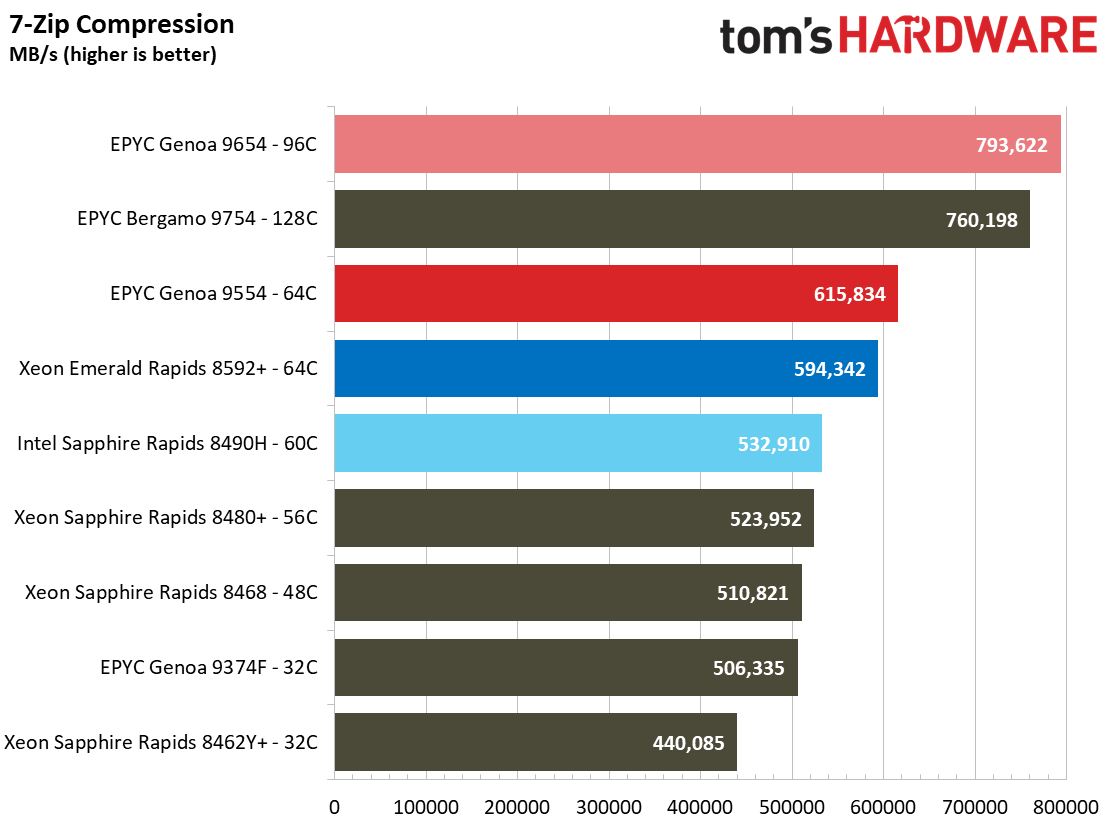

Compression, Security, Webserver, NumPy and Python Benchmarks

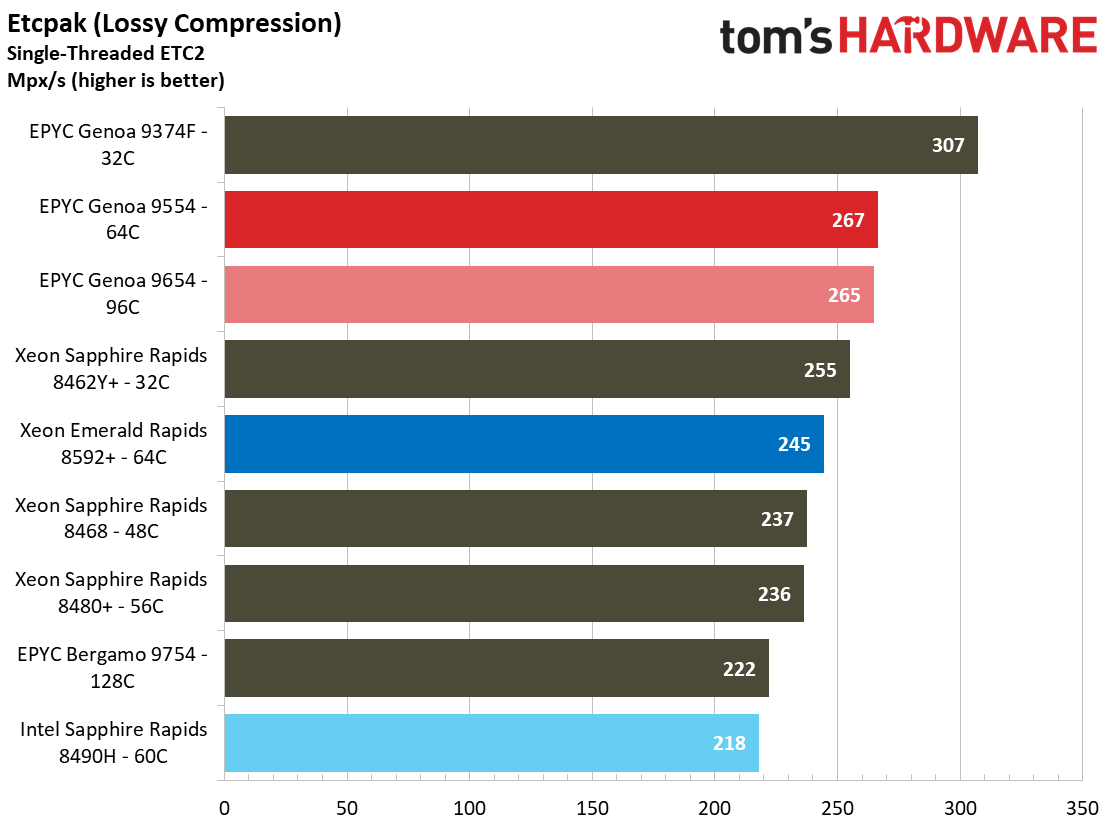

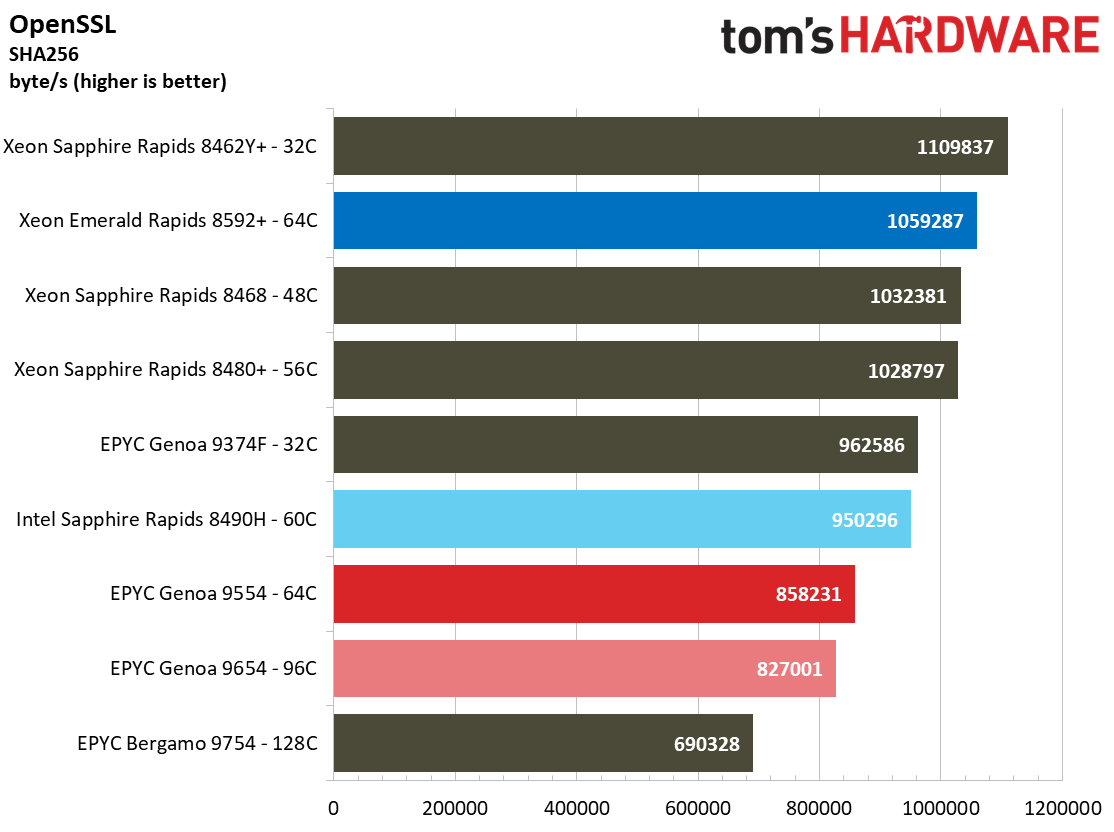

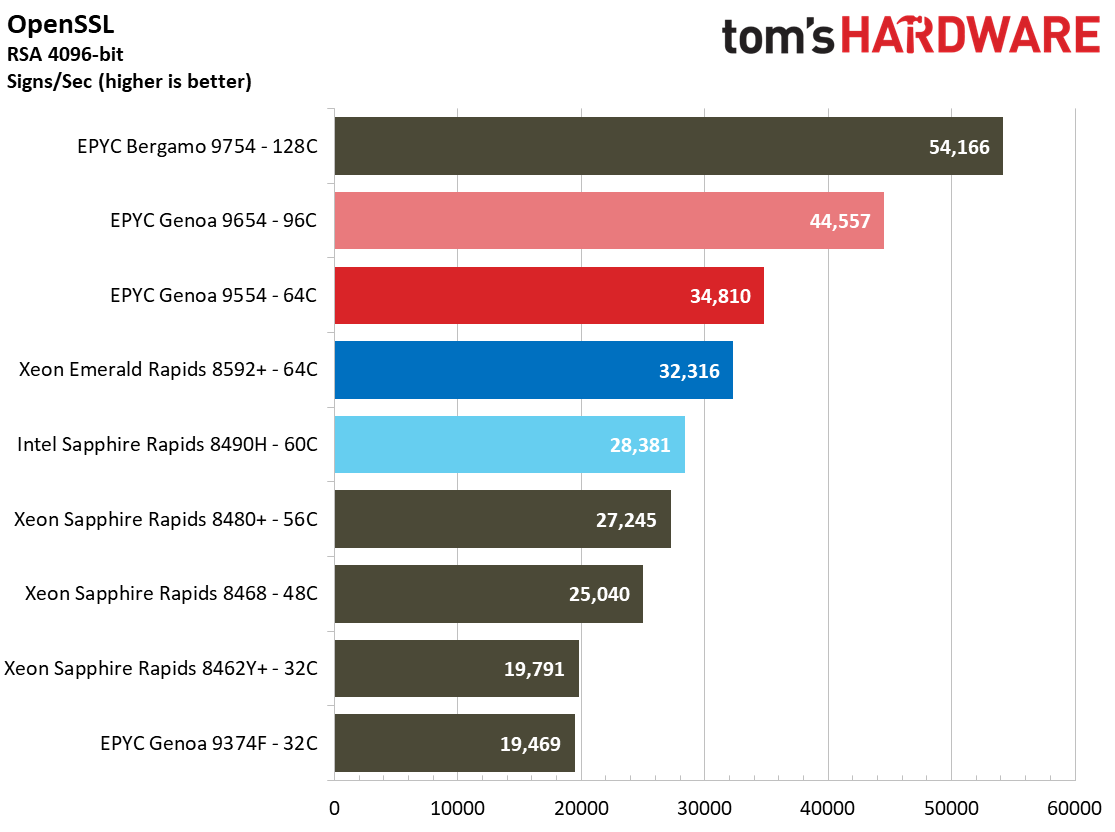

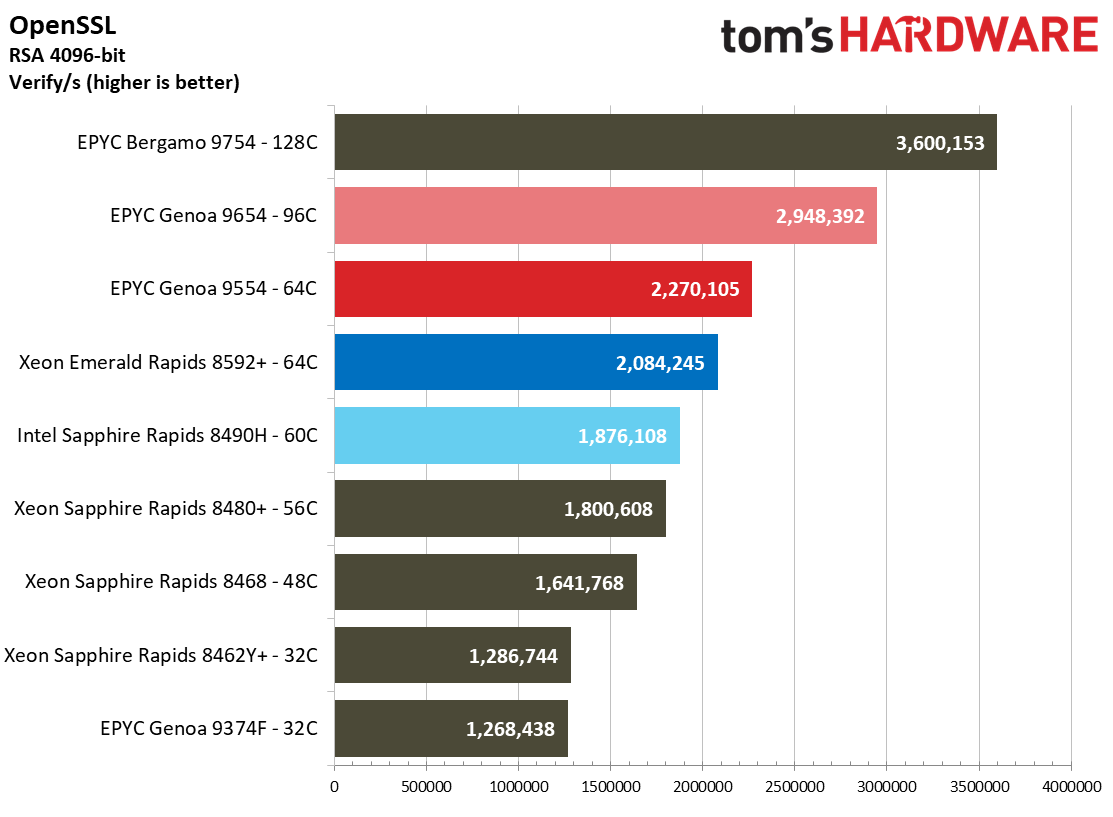

OpenSSL is an open-source toolkit that implements the SSL and TLS protocols, and we use it to measure RSA 4096-bit sign and verify performance along with SHA-256 throughput. AMD dominates the RSA 4096-bit sign and verify operations, but Intel takes the lead in the SHA-256 workload.
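The minimal single-threaded Python sketch below, built on the standard library's `hashlib`, illustrates how a hashing-throughput figure like this is produced: hash a fixed amount of data, time it, and divide bytes by seconds. It is not the OpenSSL benchmark we ran. The 1 MiB block size and 64 rounds are arbitrary assumptions; OpenSSL's own `openssl speed sha256` test sweeps several block sizes.

```python
import hashlib
import time


def sha256_throughput_mbs(block_size: int = 1 << 20, rounds: int = 64) -> float:
    """Hash `rounds` buffers of `block_size` bytes on one thread; return MB/s."""
    data = bytes(block_size)  # zero-filled; SHA-256 cost does not depend on content
    start = time.perf_counter()
    for _ in range(rounds):
        hashlib.sha256(data).digest()
    elapsed = time.perf_counter() - start
    return block_size * rounds / elapsed / 1e6  # decimal megabytes per second


print(f"SHA-256: {sha256_throughput_mbs():.0f} MB/s on one core")
```

A multi-core figure would run one such loop per core. This is roughly what `openssl speed -multi N` does with N parallel processes.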

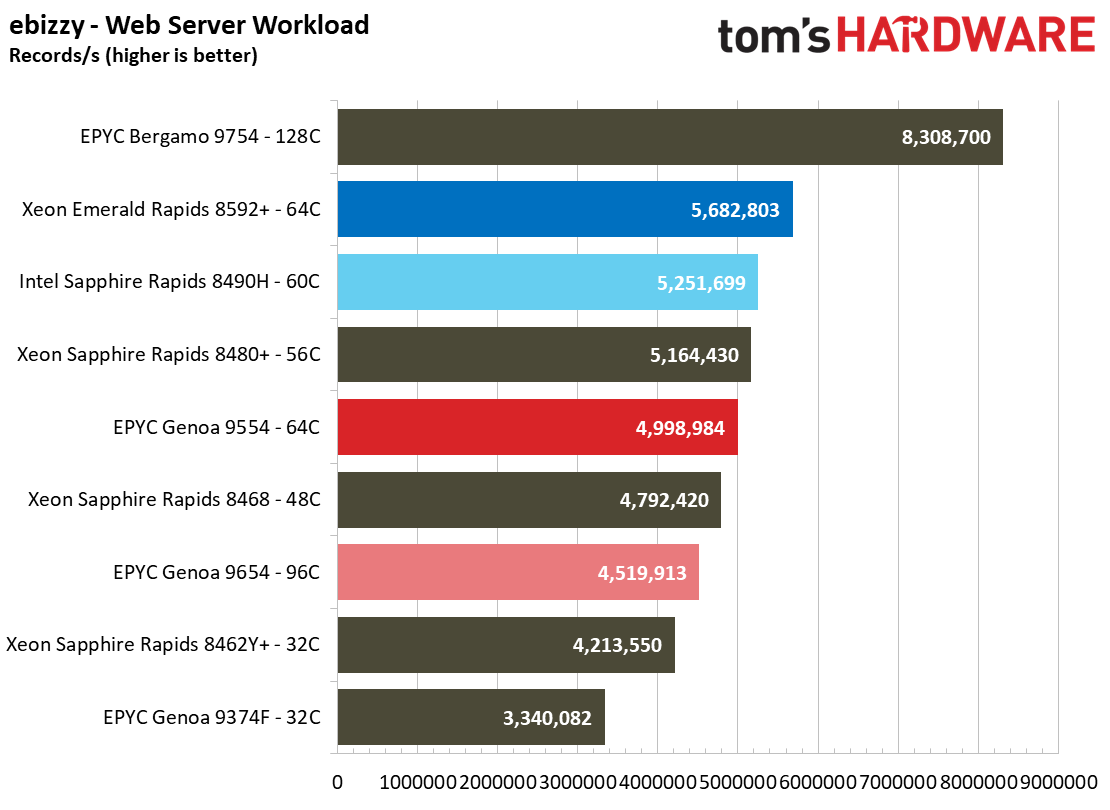

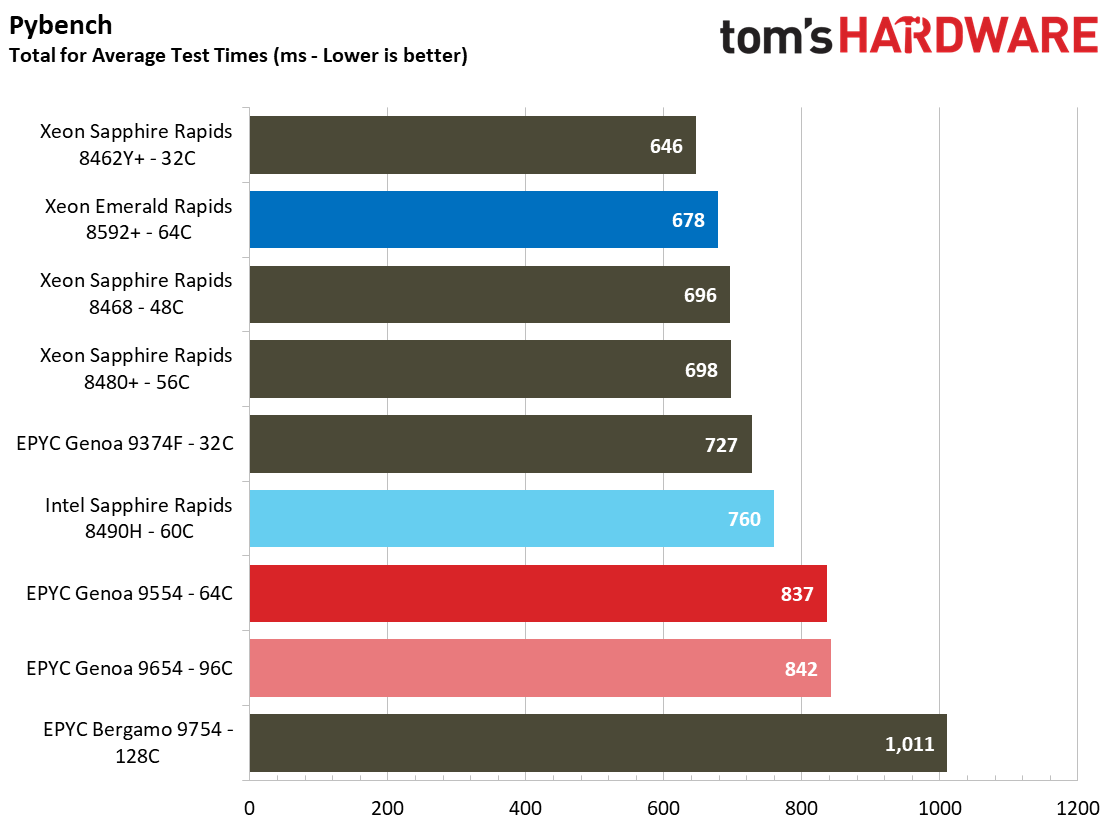

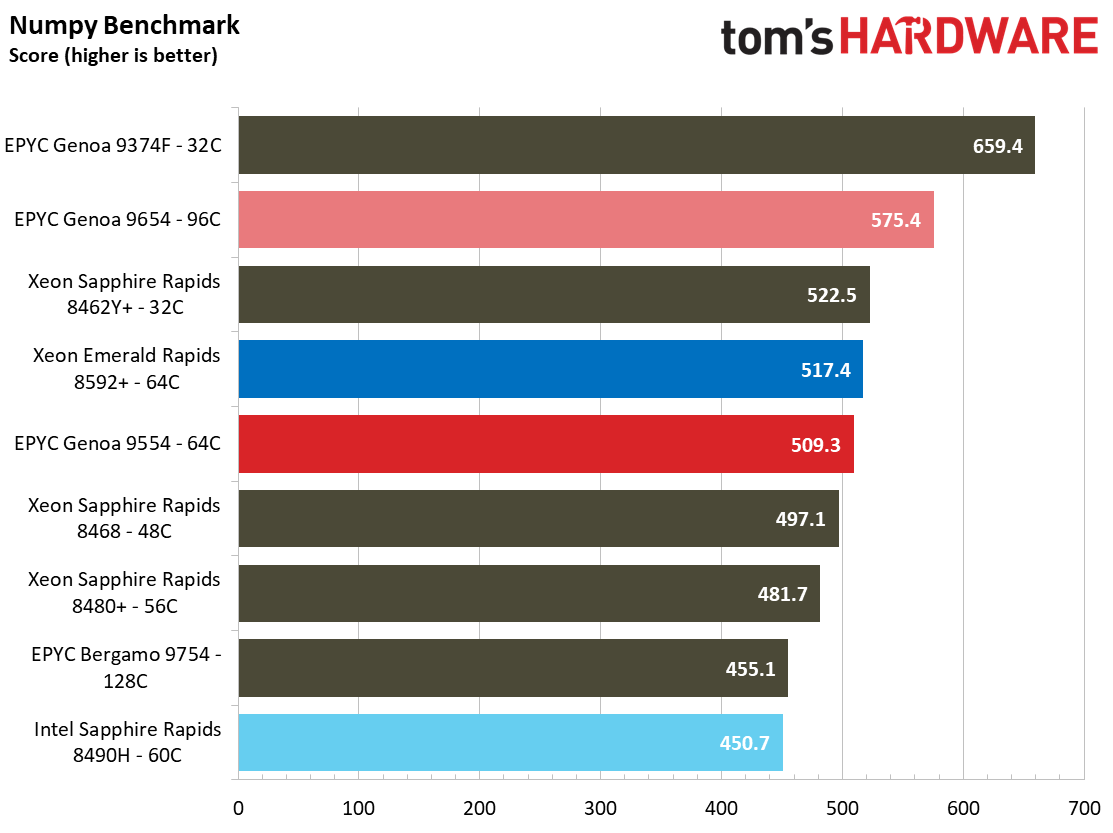

Take note of the ebizzy web server benchmark, as Emerald Rapids delivers strong performance in this test. We use the Pybench and NumPy benchmarks as a general litmus test of Python performance. As we can see, these tests typically don't scale linearly with increased core counts, instead prizing per-core performance. Intel has made impressive gen-on-gen gains here, matching the 64-core Genoa processor in NumPy and taking an easy lead in Pybench.
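Amdahl's law offers a simplified way to see why tests that prize per-core performance stop responding to extra cores. If only part of the work runs in parallel, the serial remainder caps the speedup no matter how many cores you add. The sketch below uses hypothetical parallel fractions chosen purely for illustration; we did not measure these values for Pybench, NumPy, or any other test in this review.

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Ideal speedup over a single core under Amdahl's law.

    parallel_fraction: share of the runtime that parallelizes perfectly (0.0-1.0).
    Ignores memory, cache, and clock-speed effects.
    """
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / cores)


# Hypothetical parallel fractions, for illustration only.
for p in (0.50, 0.90, 0.99):
    speedups = {n: round(amdahl_speedup(p, n), 1) for n in (64, 96, 128)}
    print(f"{p:.0%} parallel: {speedups}")
```

For example, with 90% of the work running in parallel, doubling from 64 to 128 cores lifts the ideal speedup only from about 8.8x to 9.3x. In that situation, a chip with faster individual cores can come out ahead.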

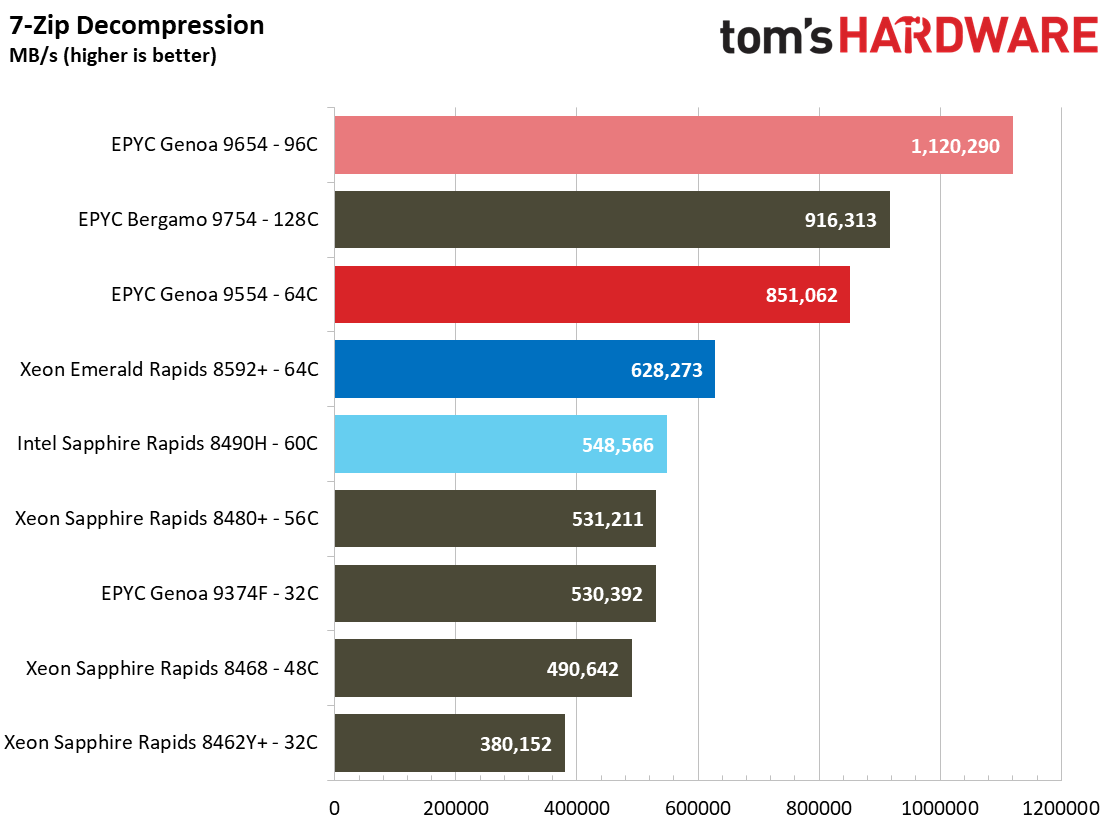

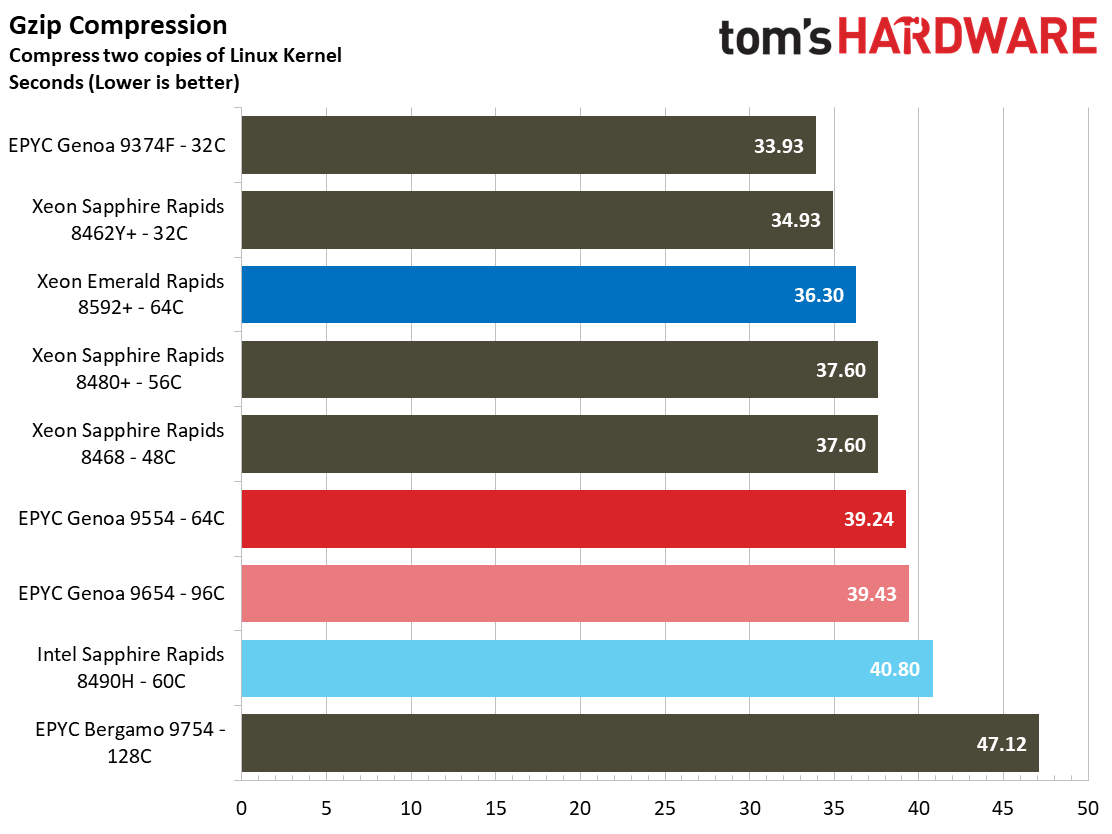

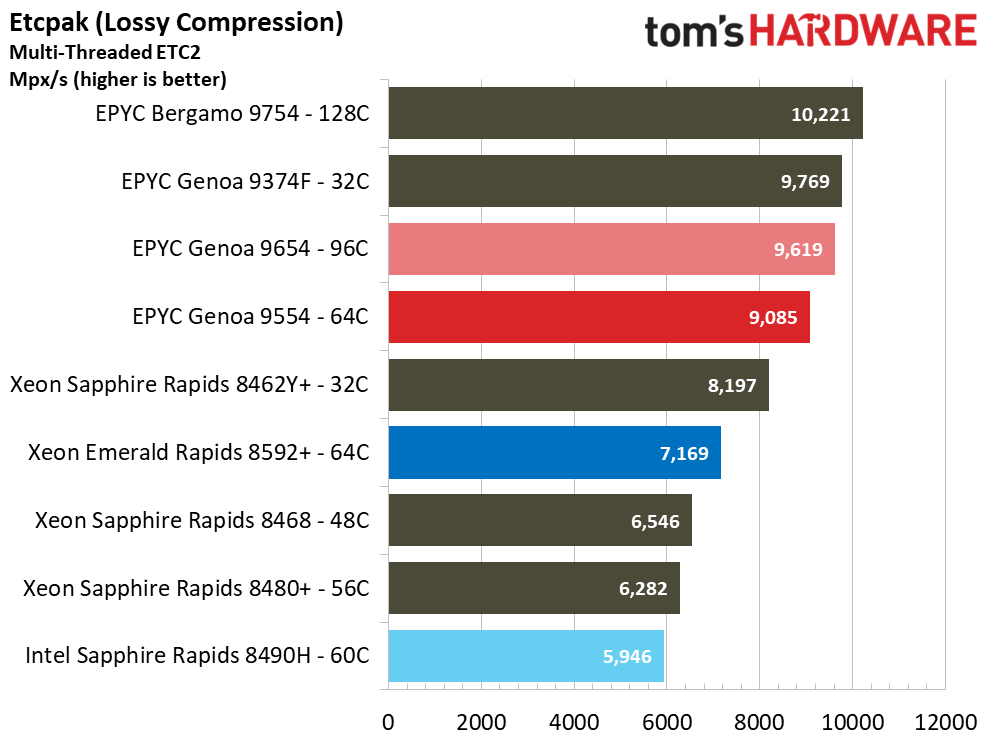

Compression workloads come in many flavors. The 7-Zip (p7zip) benchmark exposes the heights of theoretical compression performance because it runs directly from main memory, so both memory throughput and core counts heavily impact performance. As we can see, this benefits the core-heavy chips, which easily dispatch those with fewer cores, but AMD once again wins on a core-to-core basis when we compare the 64-core Intel and AMD chips. Gzip, which we use to show compression performance with a more frequency-sensitive engine, finds the 8592+ taking the slightest of leads.


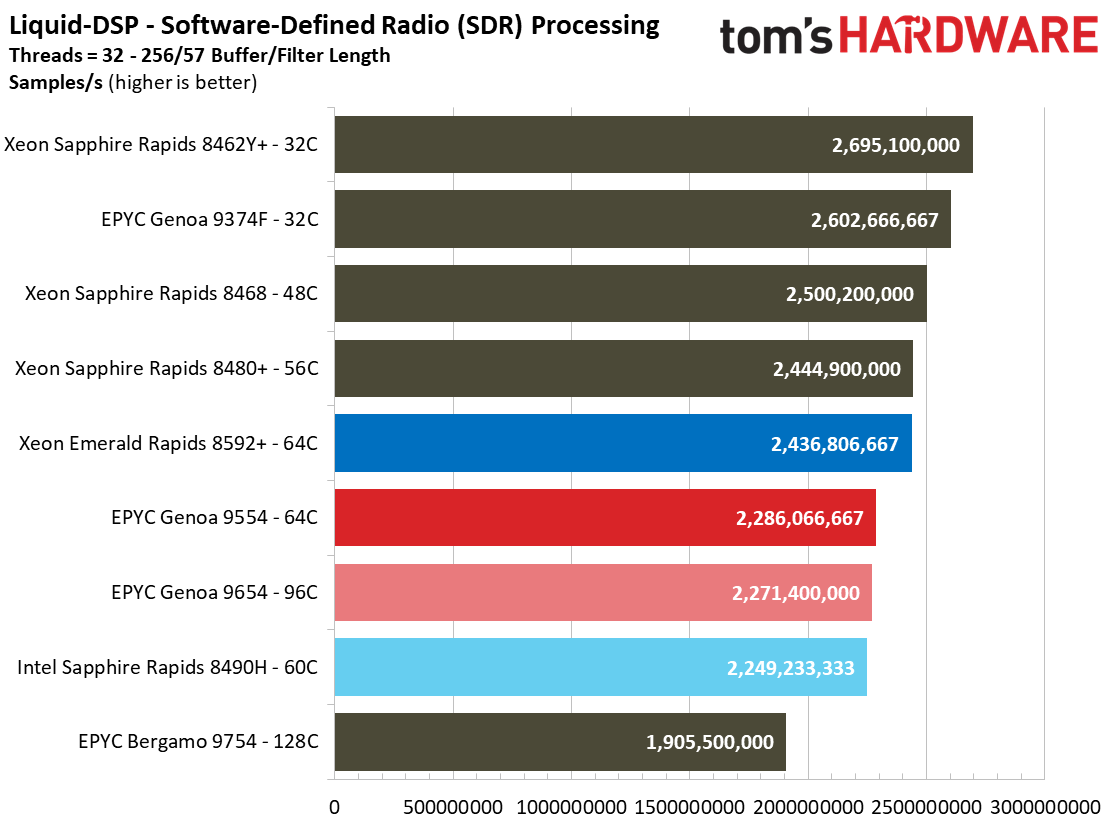

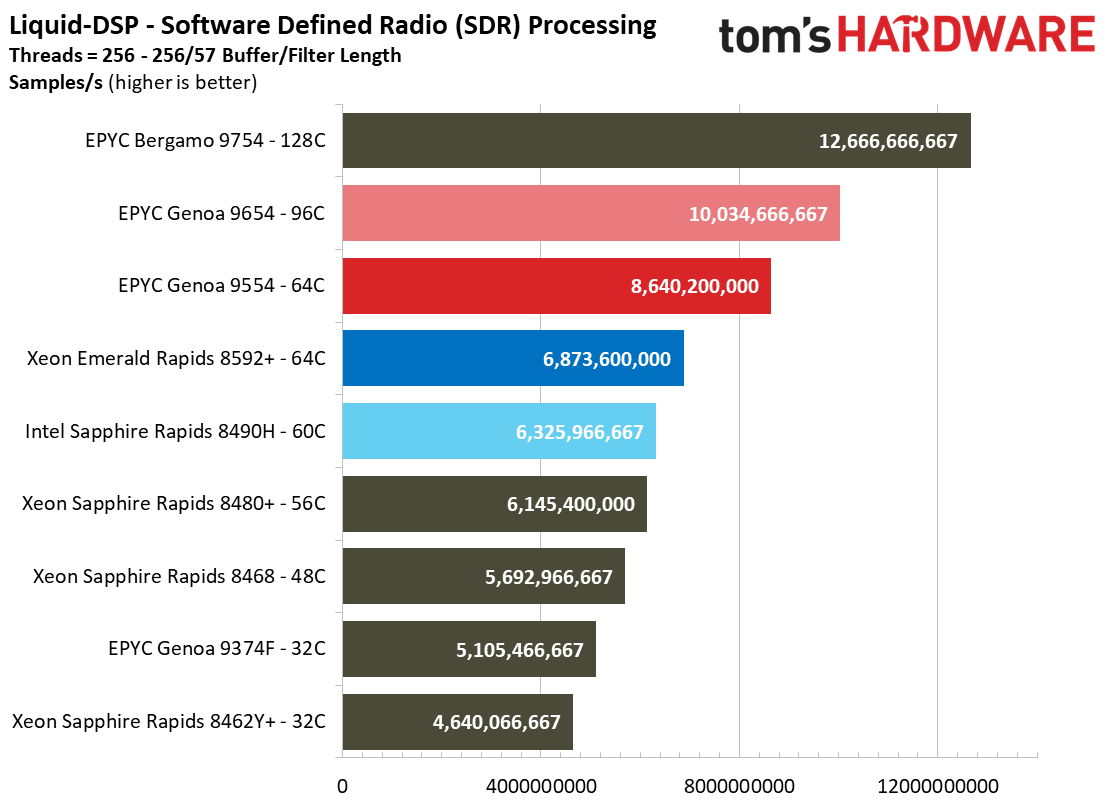

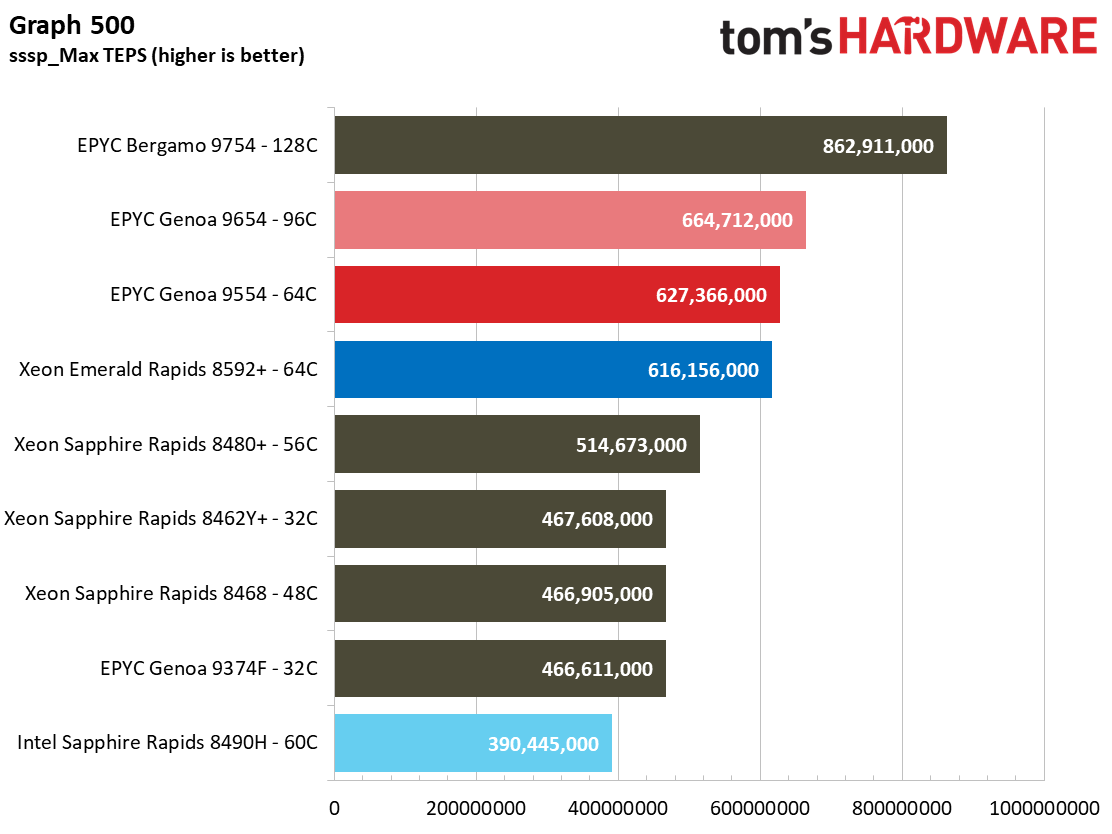

Supercomputing Benchmarks

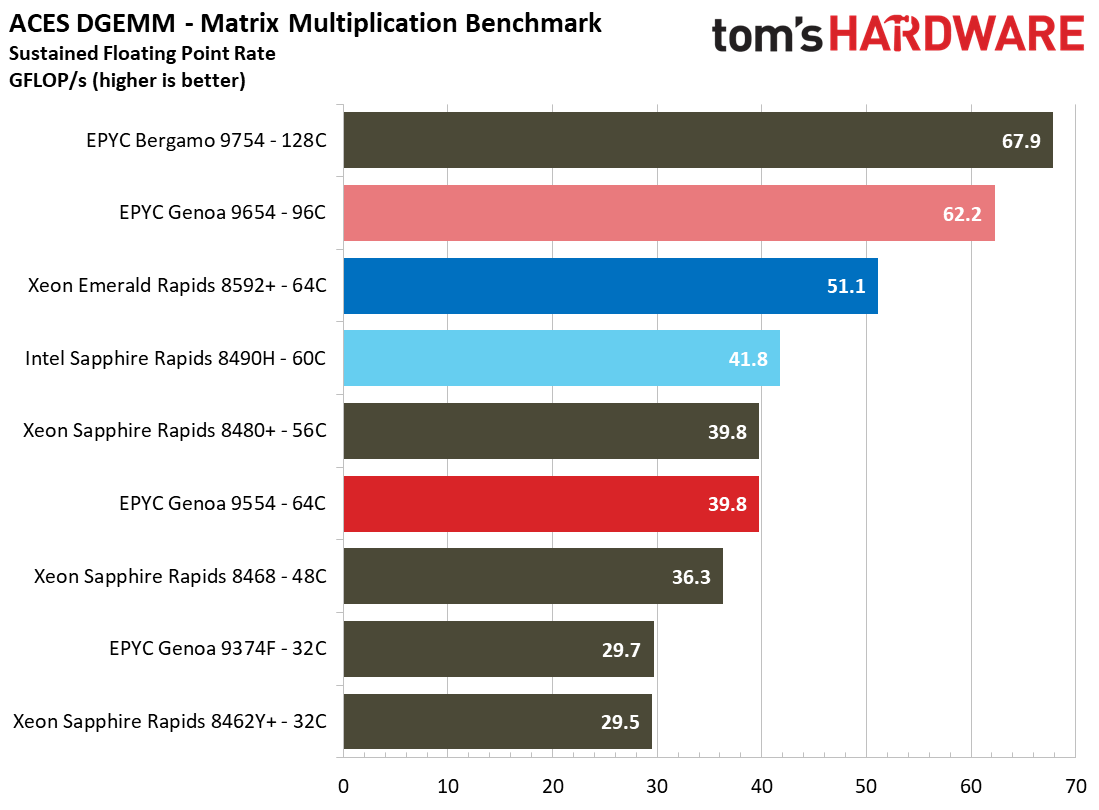

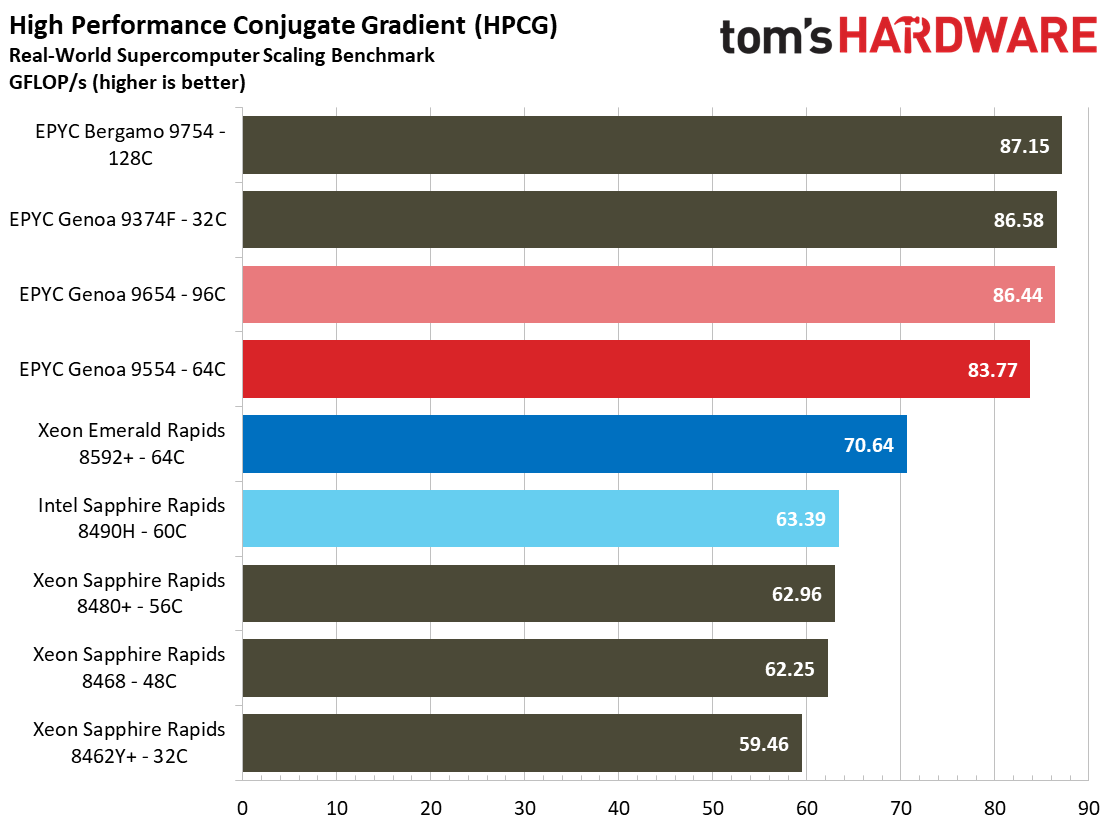

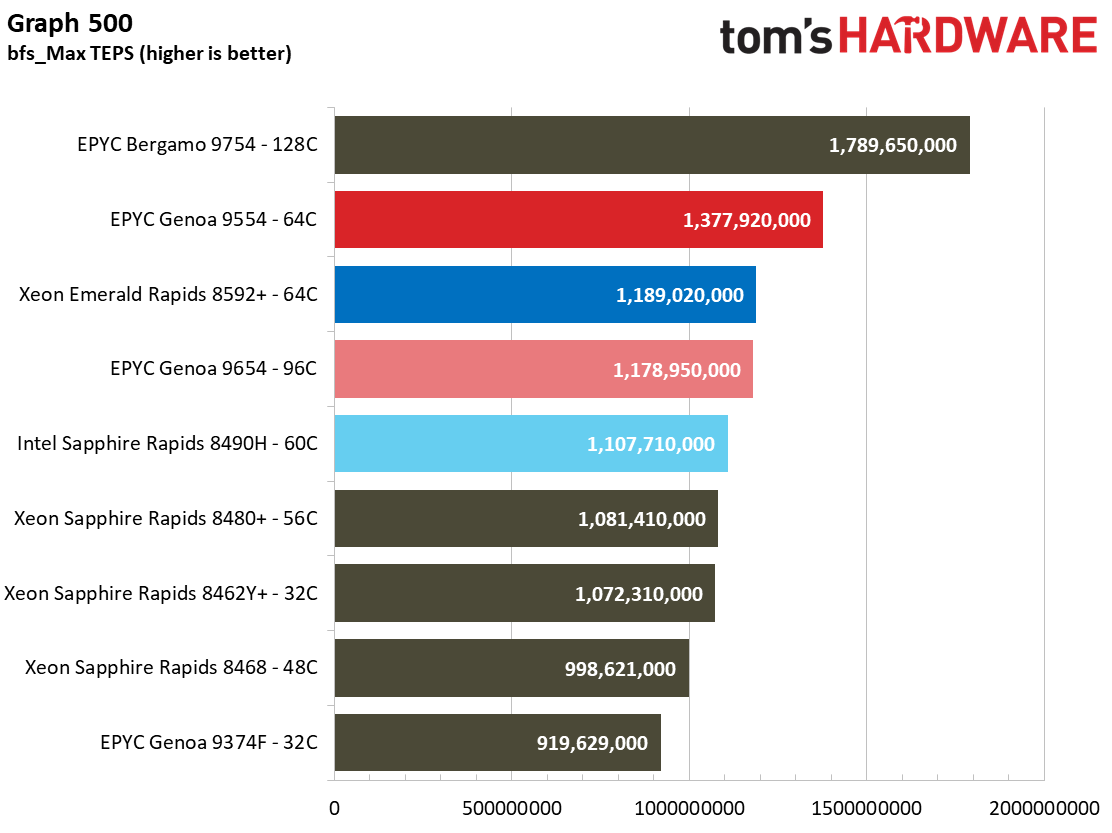

The EPYC 9654 again leads the standard Genoa and Xeon chips in our supercomputing test suites, though the core-dense Bergamo 9754 tops all of the charts. The 8592+ shows some improvement over the previous-generation 8490H, particularly in the Graph 500 test, but on a core-for-core basis, AMD still leads Intel.

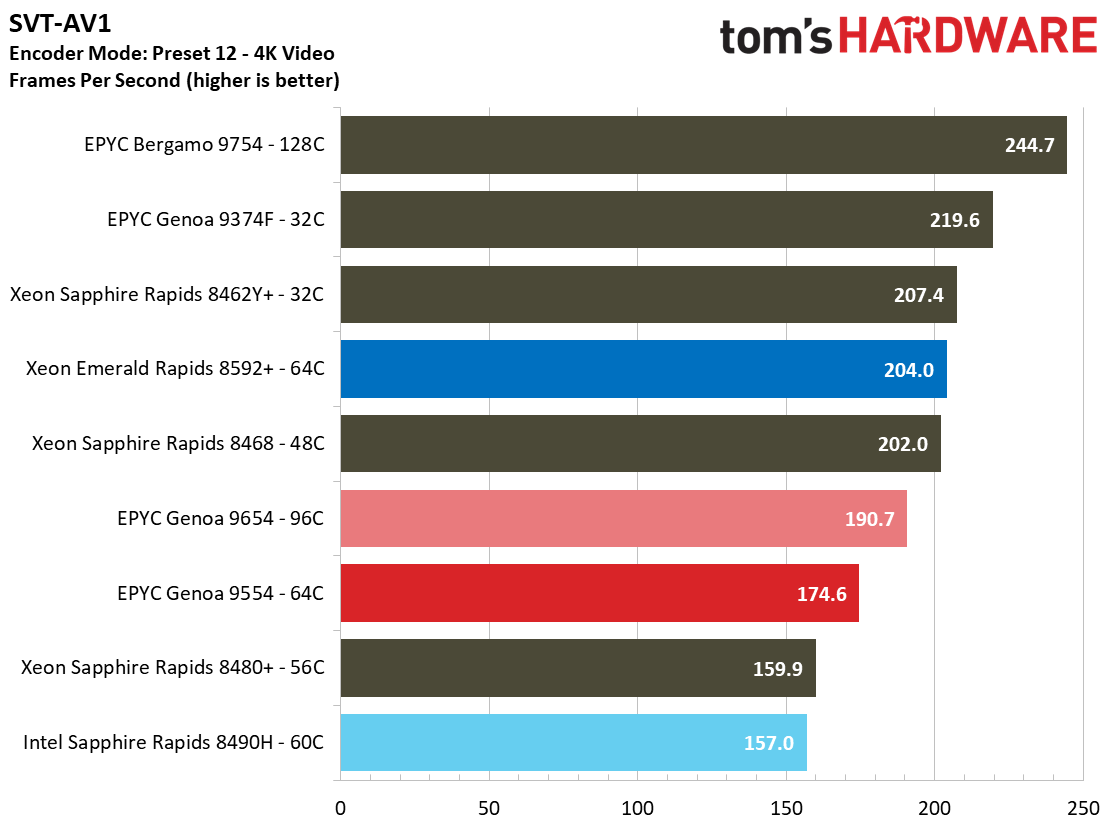

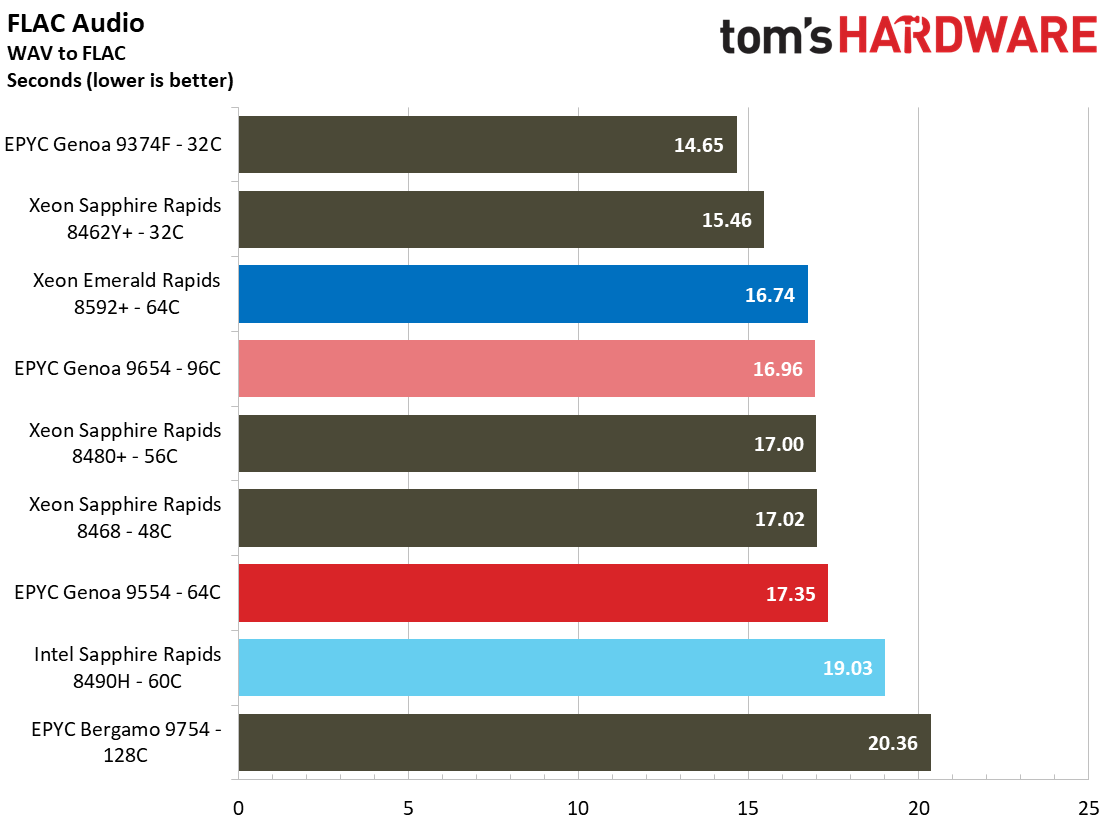

Encoding Benchmarks

Encoders present a different type of challenge. As we can see with the FLAC benchmark, they often don't scale well with increased core counts. Instead, they tend to benefit from per-core performance and other factors, like cache capacity.


Paul Alcorn is the Editor-in-Chief for Tom's Hardware US. He also writes news and reviews on CPUs, storage, and enterprise hardware.


Tech0000 Thank you for the write-up!

1. correction: 2nd table first page Intel Xeon 8462Y+ (SPR) price should be the same as 8562Y+ (EMR) price = $5,945. Right now (11.22pst) you have Intel Xeon 8462Y+ (SPR) priced at $3,583 - which is wrong.

2. I would have liked to see the comparison between EMR and SPR for the same model, e.g. 8462Y+ vs 8562Y+ to better understand and isolate the generational core for core and model improvement (mostly everything else being equal). It's hard to derive conclusions, when you are comparing different models and core configs with test results numbers all over the place - one winning over the other depending on the test performed.

I suspect that a 8462Y+ vs 8562Y+ comparison would result net very modest gain (due marginally higher all core turbo) and that the real performance gains are in top tier SKUs with triple L3 cache, accepting faster DDR5 etc.

3. As a workstation chip the single socket 8558U seems to be pretty good "value" (relative to the other intel SKUs) actually. $3720 for a 48 core chip with 250MB L3 cache is not too bad for a corporate WS. Not as capable (in terms of accelerators) as the other loaded high end pricy SKUs, but for 48 cores at $77/core it is pretty good. Maybe this chip can be packaged and used as a candidate Xeon W9-3585X or similar chip...

thestryker I'm assuming a chunk of the losses are due to Zen 4 being much more efficient but it would be nice to see some clock graphs (not for every test, but maybe one per category) if possible. If that isn't possible maybe running this same suite on a 13900K/14900K and 7950X to give some context since these are extremely close in threaded performance despite Intel using more power.

Appreciate the immediate look and hope to see some more, or maybe some Xeon W review action when those EMR refreshes come out!

tamalero

What's with these "you can trust our review made by pros"?

Admin said: We put Intel’s fifth-gen Emerald Rapids Xeon Platinum 8592+ through the benchmark paces against AMD's EPYC Genoa to see which server chips come out the winner.

Intel 'Emerald Rapids' 5th-Gen Xeon Platinum 8592+ Review: 64 Cores, Tripled L3 Cache and Faster Memory Deliver Impressive AI Performance

The first one I've ever seen do that thing was Gamer Nexus.

Now seems everyone wants to add those kind of "claims" on their own reviews.

bit_user Thanks for the review, as always!

Some more potential cons:

Still significantly lagging Genoa on energy-efficiency.

PCIe deficit in 1P configurations (80 Emerald Rapids vs. 128 lanes for Genoa). In 2P configurations, Genoa can run at either 128 or match Emerald Rapids' 160 lanes, if you reduce the inter-processor links to just 3.

Fewer memory channels (8 vs. 12 for Genoa), though the number of channels per-core is the same.

The 96-core EPYC Genoa 9654 surprisingly falls to the bottom of the chart in all three of the TensorFlow workloads, implying that its incredible array of chiplets might not offer the best latency and scalability for this type of model.

I did see a few such inversions in Phoronix' review, but fewer and way less severe. This should be investigated. I recommend asking AMD about it, @PaulAlcorn . It almost looks to me like you might've had a CPU heatsink poorly mounted, forgot to replace the fan shroud, or something like that. It's way worse than anything you saw in your original Genoa review, where we basically only saw inversions in stuff that didn't parallelize too well.

https://www.tomshardware.com/reviews/amd-4th-gen-epyc-genoa-9654-9554-and-9374f-review-96-cores-zen-4-and-5nm-disrupt-the-data-center/5

In this review, it almost seems like the EPYC 9554 is outperforming the 9654 more often than not!

bit_user BTW, I find it a little weird that they still don't have a monolithic version that's just 1 of XCC tiles, even as just a stepping stone, before you get down to the range of the regular MCC version.


bit_user

I'd imagine the issue is that they can only test the review samples they're sent by Intel.

Tech0000 said: 2. I would have liked to see the comparison between EMR and SPR for the same model, e.g. 8462Y+ vs 8562Y+ to better understand and isolate the generational core for core and model improvement (mostly everything else being equal). It's hard to derive conclusions, when you are comparing different models and core configs with test results numbers all over the place - one winning over the other depending on the test performed.

I suspect that a 8462Y+ vs 8562Y+ comparison would result net very modest gain (due marginally higher all core turbo) and that the real performance gains are in top tier SKUs with triple L3 cache, accepting faster DDR5 etc.

Phoronix tested a limited number of benchmarks with different DDR5 speeds. Seems like the faster DDR5 wasn't a huge win, but sadly none of the AI benchmarks were included. Those should've skewed the geomean a bit higher.

https://www.phoronix.com/review/intel-xeon-ddr5-5600

To make the results more applicable, I'd suggest the E-cores should be disabled.

thestryker said: If that isn't possible maybe running this same suite on a 13900K/14900K and 7950X to give some context since these are extremely close in threaded performance despite Intel using more power.

thestryker

What does the XCC offer in Xeon Scalable that MCC doesn't? I was trying to think of something but the specs of all the SKUs seem so random for EMR I couldn't figure out what you'd be referring to.

bit_user said: BTW, I find it a little weird that they still don't have a monolithic version that's just 1 of XCC tiles, even as just a stepping stone, before you get down to the range of the regular MCC version.

That would remove the entire point I was getting at of using the desktop parts as a comparison. The 13900K/14900K consistently go back and forth with the 7950X in MT performance at stock settings in standard CPU benchmarks despite the extra power consumption on the Intel side. Though with the IPC between RPL/Zen 4 so close maybe disabled E-cores + 1 CCD disabled would make for a good comparison as then it would be just 8 P-cores vs 8 Zen 4 cores. I haven't seen any such comparison though so this is just a wild guess.

bit_user said: To make the results more applicable, I'd suggest the E-cores should be disabled.

bit_user

I just meant that perhaps they could get more mileage out of their chiplet usage. Like, maybe there are some XCC tiles with a defect in the EMIB section, so just put those on a substrate by themselves and sell it as 32C or less.

thestryker said: What does the XCC offer in Xeon Scalable that MCC doesn't?

Okay, well if you don't exclude the E-cores, then I don't see how those tests would be relevant to these server CPUs.

thestryker said: That would remove the entire point I was getting at of using the desktop parts as a comparison.

Heh, you might just get your chance! The new Xeon E-series 2400 have their E-cores disabled (sounds ironic, eh?). So, if anyone benchmarks a Xeon E-2488 against a Ryzen 7700X, then it'd be exactly what you're talking about.

thestryker said: with the IPC between RPL/Zen 4 so close maybe disabled E-cores + 1 CCD disabled would make for a good comparison as then it would be just 8 P-cores vs 8 Zen 4 cores. I haven't seen any such comparison though so this is just a wild guess.

Annoyingly (for me), the new Xeon E 2400 also have their GPUs disabled. Otherwise, I might've been interested. I guess they could still announce G-versions, later.

thestryker

Ah yeah I get what you mean, but they'd be limited to 4 memory channels and half the PCIe lanes as well. I would love to know what happens in that circumstance though... like do they have to toss the whole thing?

bit_user said: I just meant that perhaps they could get more mileage out of their chiplet usage. Like, maybe there are some XCC tiles with a defect in the EMIB section, so just put those on a substrate by themselves and sell it as 32C or less.

Well like I said originally it's more to give a known quantity comparison than it is to get a direct reflection. What I mean by this being if there was a test that Genoa beat EMR, but the desktop CPUs were closer/equal you could extrapolate that the server CPU differences were more likely due to efficiency than architecture. It would definitely be much better if you had a P-core only setup which matched a Zen 4 setup in performance though for this comparison.

bit_user said: Okay, well if you don't exclude the E-cores, then I don't see how those tests would be relevant to these server CPUs.

Yeah that would be the ideal comparison. I'd love to see a die shot to see if they're using ones without E-cores.

bit_user said: Heh, you might just get your chance! The new Xeon E-series 2400 have their E-cores disabled (sounds ironic, eh?). So, if anyone benchmarks a Xeon E-2488 against a Ryzen 7700X, then it'd be exactly what you're talking about.

Yeah I was surprised there were so many SKUs listed but none with an IGP. In the past they've always launched at least a few with graphics. Another reason why I'd love to see a die shot.

bit_user said: Annoyingly (for me), the new Xeon E 2400 also have their GPUs disabled. Otherwise, I might've been interested. I guess they could still announce G-versions, later.