Tachyum, long on wild performance claims and short on actual silicon, delays the Prodigy Universal processor yet again to the second half of 2024 — meme chip reeks of vaporware

Vaporware much?

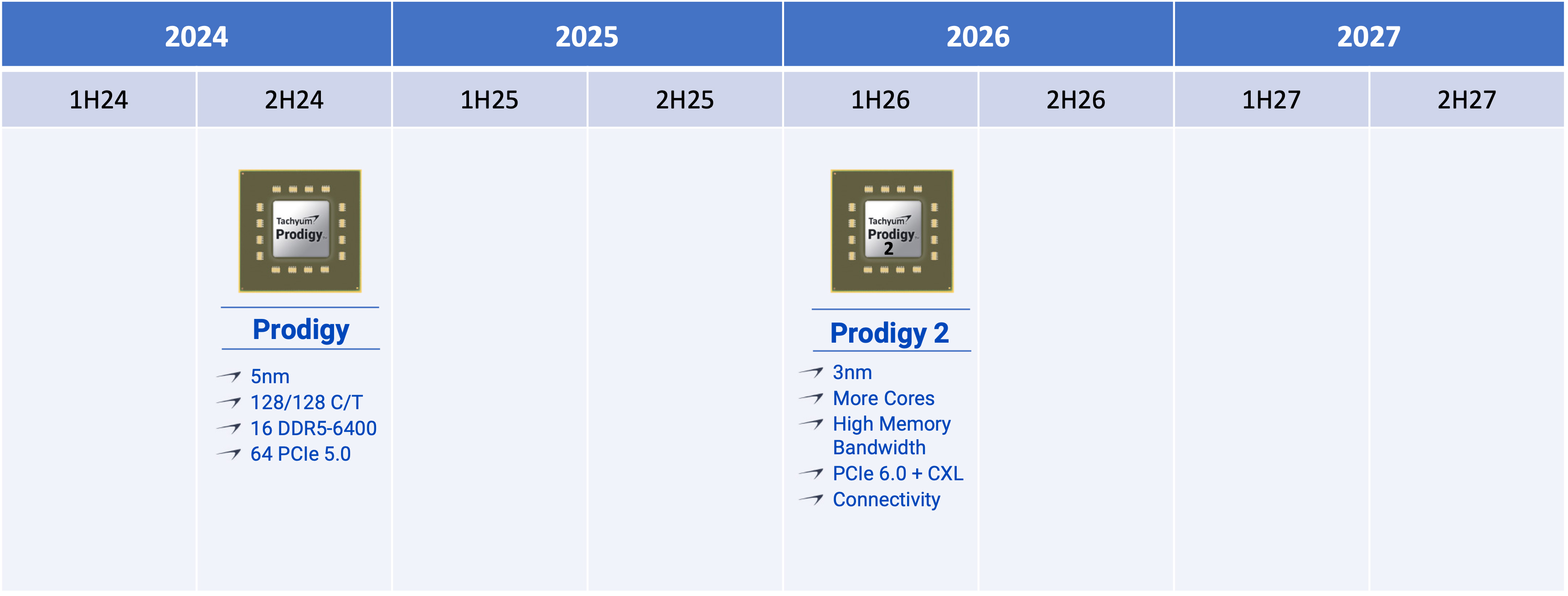

For over five years, Tachyum has been working on its universal Prodigy processor, which it claims is equally good for artificial intelligence, high-performance computing, and other data center workloads. The processor was originally meant to tape out in 2019 and hit the market in 2020. However, it has slipped to 2021, then 2022, then 2023, all while the company has made increasingly wild and unrealistic performance claims even though it has never demoed working silicon. Perhaps unsurprisingly, the company's most recent roadmap now earmarks the Prodigy launch for the second half of 2024. Tachyum will supposedly follow up with the Prodigy 2 processor with more cores and a higher-performance memory subsystem in the first half of 2026.

The company made its mark by suing Cadence when its original design didn't meet performance targets, all the while making unrealistic claims that its 'Universal Processor' "does everything" and will power a 20-ExaFLOPS supercomputer.

The latest roadmap published by Tachyum says that its first Prodigy processor, made using a 5nm-class process technology, is set to sample in Q2 2024 and then ship in the second half of 2024, which probably means very late in the year. That is about a year later than the company said just a few months ago and four years later than its original launch date. In addition, the company now says it will follow up with a Prodigy 2 processor featuring PCIe 6.0 connectivity with the CXL protocol on top, which would significantly enhance the platform's capabilities when it comes to market, if it ever does.

Tachyum describes its first-gen Prodigy as a universal monolithic processor integrating up to 192 proprietary 64-bit VLIW-style cores, each featuring two 1024-bit vector units and two 1024-bit matrix units, all running at a massive 5.70 GHz peak frequency. The CPU features 96 PCIe 5.0 lanes and sixteen DDR5-7200 memory channels that are said to support massive memory capacities for large language models.
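Taking the quoted specs at face value, a quick back-of-the-envelope calculation shows what ceilings they imply. This is a sketch under assumptions that are not Tachyum's: each 1024-bit vector unit is assumed to complete one fused multiply-add per lane per cycle at the 5.70 GHz peak, and each DDR5 "channel" is assumed to be 64 bits wide. The constant and function names are illustrative only.

```python
# Back-of-the-envelope peak figures for first-gen Prodigy, derived only from
# the specs quoted in the article. ASSUMPTIONS (not Tachyum figures):
#   - every 1024-bit vector unit retires one FMA (2 FLOPs) per lane per cycle
#   - one "channel" = 64 data bits (two 32-bit DDR5 subchannels)

CORES = 192
VECTOR_UNITS_PER_CORE = 2
VECTOR_BITS = 1024
PEAK_GHZ = 5.70
DDR5_CHANNELS = 16
DDR5_MT_PER_S = 7200       # DDR5-7200: mega-transfers per second
BYTES_PER_TRANSFER = 8     # 64-bit channel


def peak_vector_tflops(element_bits: int) -> float:
    """Theoretical vector FLOPS ceiling for a given element width."""
    lanes = VECTOR_BITS // element_bits
    flops_per_cycle_per_core = VECTOR_UNITS_PER_CORE * lanes * 2  # FMA = 2 FLOPs
    return CORES * flops_per_cycle_per_core * PEAK_GHZ * 1e9 / 1e12


def peak_memory_gbps() -> float:
    """Theoretical DRAM bandwidth ceiling across all channels."""
    return DDR5_CHANNELS * DDR5_MT_PER_S * 1e6 * BYTES_PER_TRANSFER / 1e9


if __name__ == "__main__":
    print(f"FP64 vector peak: {peak_vector_tflops(64):.1f} TFLOPS")
    print(f"FP32 vector peak: {peak_vector_tflops(32):.1f} TFLOPS")
    print(f"DDR5 peak bandwidth: {peak_memory_gbps():.1f} GB/s")
```

Under those assumptions the FP64 vector ceiling works out to roughly 70 TFLOPS and the DRAM ceiling to 921.6 GB/s. The matrix units are left out because their per-cycle throughput isn't stated here.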

Interestingly, the company seems to have further altered the architecture of its Prodigy processor since 2022. Instead of one 4096-bit matrix unit per core, Prodigy now has two 1024-bit matrix units per core. An architectural change like this requires a compiler change and recompilation of any software meant to run on Prodigy natively. However, it is hard to say how many programs have actually been ported to Prodigy so far.

There is yet another significant inconsistency in the latest whitepaper published by Tachyum: although the original Prodigy supposedly features up to 192 cores, the company's roadmap slide lists 128 cores for 2H 2024.

Anton Shilov is a contributing writer at Tom’s Hardware. Over the past couple of decades, he has covered everything from CPUs and GPUs to supercomputers and from modern process technologies and latest fab tools to high-tech industry trends.

-

bit_user I never did believe their hype. This chip/company fits a mold of many before it, which make lofty promises but either fail to deliver or are so late that the industry has already moved beyond their solution by the time they finally do.

Not that I don't feel some sympathy, but the amount of hubris and overpromising in their messaging doesn't exactly make it easy.

Also, the whole episode of using Cadence as a scapegoat didn't exactly improve my feelings towards them. While I can believe some of their complaints, I highly doubt their chip wouldn't have been held up for other reasons, even if Cadence had executed perfectly.

bit_user

"Tachyum describes its first-gen Prodigy as a universal monolithic processor integrating up to 192 proprietary 64-bit VLIW-style cores"

No, they revised their ISA some time ago. They ditched the original plan to use VLIW, which I found mildly disappointing.

"Tachyum still calls their architecture ‘Prodigy’. But they’ve overhauled it in response to customer feedback. VLIW bundles are gone in favor of a more conventional ISA. Hardware scheduling is much more capable, improving performance per clock. And the cache hierarchy has seen major modifications. 2022 Prodigy’s changes are sweeping enough that much of the analysis done on 2018’s Prodigy no longer applies."

Source: https://chipsandcheese.com/2022/08/26/tachyums-revised-prodigy-architecture/

"Prodigy now has two 1024-bit matrix units per core."

Like I said above, about how hard it is to leap-frog the industry, even ARM now has matrix-multiply extensions!

https://community.arm.com/arm-community-blogs/b/architectures-and-processors-blog/posts/scalable-matrix-extension-armv9-a-architecture

I'm not aware of any ARM CPUs actually implementing it, but probably by the time Prodigy comes to market...

atomicWAR Yeah, these delays have been disappointing. I was eager to see their promises reached, though, as bit_user mentions, it's hard to leapfrog the industry. I think the days when leapfrogging the industry was 'common' have left the building; as far as I can tell, the frequency of this happening has significantly diminished over my lifetime.

bit_user

atomicWAR said: it's hard to leapfrog the industry. I think the days when leapfrogging the industry was 'common' have left the building; as far as I can tell, the frequency of this happening has significantly diminished over my lifetime.

The last examples I'm aware of were all Japanese.

https://www.preferred.jp/en/projects/supercomputers/

https://www.pezy.co.jp/en/products/pezy-sc3/

https://www.fujitsu.com/global/products/computing/servers/supercomputer/a64fx/

However, PEZY - the most interesting, with an exotic RF-coupled alternative to HBM - got hit with some massive corruption case that seems to have derailed their business, at least for quite a while.

As for Preferred Networks, I'm not sure if their processors are for sale outside Japan.

Lastly, Fujitsu's A64FX did finally reach global markets, but I think probably not at a compelling price point, by the time it did. Probably of limited interest to anyone not doing work on auto-vectorization or ARM SVE.

The way things are going, the next instance will likely be Chinese.

sygreenblum

bit_user said: No, they revised their ISA some time ago. They ditched the original plan to use VLIW, which I found mildly disappointing.

"Tachyum still calls their architecture ‘Prodigy’. But they’ve overhauled it in response to customer feedback. VLIW bundles are gone in favor of a more conventional ISA. Hardware scheduling is much more capable, improving performance per clock. And the cache hierarchy has seen major modifications. 2022 Prodigy’s changes are sweeping enough that much of the analysis done on 2018’s Prodigy no longer applies."

Source: https://chipsandcheese.com/2022/08/26/tachyums-revised-prodigy-architecture/

Like I said above, about how hard it is to leap-frog the industry, even ARM now has matrix-multiply extensions!

https://community.arm.com/arm-community-blogs/b/architectures-and-processors-blog/posts/scalable-matrix-extension-armv9-a-architecture

I'm not aware of any ARM CPUs actually implementing it, but probably by the time Prodigy comes to market...

So basically an entirely different chip than the one announced in 2018. Not holding my breath on this one.

Findecanor Tachyum has posted a bunch of command-line demos of supposedly running operating systems and programs on Prodigy on FPGA. So they do have something, but it takes more than some Verilog to make a chip.

bit_user said: Like I said above, about how hard it is to leap-frog the industry, even ARM now has matrix-multiply extensions!

So does Intel, and there have been discussions about RISC-V getting some too.

Matrix multiplication on small data types is used for AI/"deep learning", which doesn't require that much precision.

ARM and RISC-V have extensions for fp16 and bf16 (32-bit IEEE floating point with the low 16 fraction bits chopped off). There are also ops using integers. Tachyum Prodigy is even supposed to support 4-bit integers, for stupidly great throughput at stupidly low precision.
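The bf16 description above can be checked directly: bf16 keeps the sign bit, the full 8-bit exponent, and the top 7 fraction bits of an IEEE fp32 value. This sketch assumes plain truncation, matching the "chopped off" description; real hardware converters commonly round to nearest even instead. The function names are illustrative.

```python
import struct


def fp32_bits(x: float) -> int:
    """Return the raw IEEE 754 single-precision bit pattern of x."""
    return struct.unpack("<I", struct.pack("<f", x))[0]


def to_bf16_truncate(x: float) -> int:
    """bf16 by truncation: keep the top 16 bits of the fp32 pattern
    (sign, 8-bit exponent, 7 fraction bits), drop the low 16 fraction bits."""
    return fp32_bits(x) >> 16


def bf16_to_float(b: int) -> float:
    """Widen a bf16 bit pattern back to fp32 by zero-filling the low 16 bits."""
    return struct.unpack("<f", struct.pack("<I", (b & 0xFFFF) << 16))[0]


if __name__ == "__main__":
    for value in (1.0, -2.0, 3.14159):
        b = to_bf16_truncate(value)
        print(f"{value!r:>8} -> bf16 0x{b:04X} -> {bf16_to_float(b)!r}")
```

For example, 3.14159 comes back as 3.140625 after the round trip, while 1.0 and -2.0 survive exactly. Because the exponent field is untouched, bf16 keeps fp32's dynamic range and gives up only precision.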

BTW, a matrix multiplication consists of many dot products, so if you see a low-precision dot product instruction in an ISA, you can bet that it was also put there for AI.
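Low precision pays off because more values fit in every byte and register. Here is a hypothetical sketch of a signed 4-bit dot product: two int4 values are packed per byte (low nibble first is an assumption; real ISAs define their own layouts), and products are summed into a wider accumulator, as integer dot-product instructions do with accumulators such as int32. Python ints are unbounded, so overflow of a fixed-width accumulator is not modeled.

```python
from typing import List


def pack_int4(values: List[int]) -> bytes:
    """Pack signed 4-bit integers (-8..7) two per byte, low nibble first."""
    vals = list(values)
    if len(vals) % 2:
        vals.append(0)  # pad odd-length input with a zero nibble
    out = bytearray()
    for lo, hi in zip(vals[0::2], vals[1::2]):
        for v in (lo, hi):
            if not -8 <= v <= 7:
                raise ValueError(f"{v} does not fit in a signed 4-bit integer")
        out.append((lo & 0xF) | ((hi & 0xF) << 4))
    return bytes(out)


def unpack_int4(packed: bytes, count: int) -> List[int]:
    """Inverse of pack_int4: sign-extend each nibble and drop any padding."""
    vals = []
    for byte in packed:
        for nibble in (byte & 0xF, byte >> 4):
            vals.append(nibble - 16 if nibble >= 8 else nibble)
    return vals[:count]


def dot_int4(a_packed: bytes, b_packed: bytes, count: int) -> int:
    """Dot product of two packed int4 vectors, accumulated in a wide integer."""
    a = unpack_int4(a_packed, count)
    b = unpack_int4(b_packed, count)
    return sum(x * y for x, y in zip(a, b))


if __name__ == "__main__":
    a, b = [1, -2, 3, 7], [-8, 5, 2, 1]
    print(dot_int4(pack_int4(a), pack_int4(b), len(a)))
```

Four int4 values occupy just two bytes here, a quarter of what the same vector takes in fp16 or bf16.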

bit_user

Findecanor said: So does Intel, and there have been discussions about RISC-V getting some too.

Intel's AMX is not quite as general as ARM's SME.

Findecanor said: BTW, a matrix multiplication consists of many dot products, so if you see a low-precision dot product instruction in an ISA, you can bet that it was also put there for AI.

Dot products on individual rows and columns were the old way of doing it. The downsides are that you have to perform redundant fetches of the same data to compute a matrix product, and your parallelism is limited to how many independent vector dot products the CPU can do at once. The point of a matrix or tensor product engine is to enable greater parallelism while reducing redundant data movement.
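The point about redundant fetches can be made concrete by counting element loads. This is an idealized model, not a description of any specific engine: every element read from memory or a register file counts as one load, and caches are ignored. The dot-product form re-reads a full row and column for each output element; the outer-product (rank-1 update) form keeps the whole C tile resident in accumulators and reads each column of A and row of B once. ARM SME, for instance, is built around outer-product-and-accumulate instructions that target its ZA tile storage.

```python
from typing import List, Tuple

Matrix = List[List[float]]


def matmul_dot(A: Matrix, B: Matrix) -> Tuple[Matrix, int]:
    """C = A @ B as independent row-by-column dot products.
    Each dot product re-fetches its whole row of A and column of B."""
    m, n, p = len(A), len(B), len(B[0])
    C = [[0.0] * p for _ in range(m)]
    loads = 0
    for i in range(m):
        for j in range(p):
            acc = 0.0
            for k in range(n):
                acc += A[i][k] * B[k][j]
                loads += 2  # one element of A and one element of B
            C[i][j] = acc
    return C, loads


def matmul_outer(A: Matrix, B: Matrix) -> Tuple[Matrix, int]:
    """C = A @ B as a sum of n outer products (rank-1 updates).
    C stays resident in an accumulator tile; for each k, one column of A
    and one row of B are fetched once and update all m*p accumulators."""
    m, n, p = len(A), len(B), len(B[0])
    C = [[0.0] * p for _ in range(m)]
    loads = 0
    for k in range(n):
        a_col = [A[i][k] for i in range(m)]
        b_row = B[k]
        loads += m + p
        for i in range(m):
            for j in range(p):
                C[i][j] += a_col[i] * b_row[j]
    return C, loads


if __name__ == "__main__":
    A = [[float(i + j) for j in range(16)] for i in range(16)]
    C1, dot_loads = matmul_dot(A, A)
    C2, outer_loads = matmul_outer(A, A)
    print(C1 == C2, dot_loads, outer_loads)
```

For 16x16 matrices the dot-product version counts 2*m*n*p = 8,192 loads and the outer-product version n*(m + p) = 512, a 16x reduction for the same result. The outer-product loop also exposes m*p independent multiply-adds per step, which is the extra parallelism a matrix engine exploits.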

Atom Symbol

bit_user said: No, they revised their ISA some time ago. They ditched the original plan to use VLIW, which I found mildly disappointing.

Do you mean that (1) the original VLIW was mildly disappointing or (2) the fact that they ditched VLIW was disappointing?

In my opinion, changing the original Tachyum design (which already had sufficiently high performance-per-watt to be respected on the market) without having any actual Tachyum V1 VLIW chips manufactured in sufficient quantities was a mistake. They should have concentrated on first delivering an actual product to the market in sufficient quantities, and only then abandoned the V1 VLIW architecture.

bit_user

Atom Symbol said: Do you mean that (1) the original VLIW was mildly disappointing or (2) the fact that they ditched VLIW was disappointing?

I was hoping for some kind of fresh take on VLIW, or an effective use of JIT + runtime, profile-driven optimization.

Atom Symbol said: In my opinion, changing the original Tachyum design (which already had sufficiently high performance-per-watt to be respected on the market) without having any actual Tachyum V1 VLIW chips manufactured in sufficient quantities was a mistake. They should have concentrated on first delivering an actual product to the market in sufficient quantities, and only then abandoned the V1 VLIW architecture.

Yes, there's something to be said for actually delivering "good enough". I think Ventana got this part right. They supposedly completed their first generation, even though I think it's not quite a product anyone is willing to deploy. However, completing it seems to have given them enough credibility to line up customers for their v2 product.

Atom Symbol

bit_user said: I was hoping for some kind of fresh take on VLIW, or an effective use of JIT + runtime, profile-driven optimization.

In my opinion, JIT plus runtime profile-driven optimization can be less effective on a VLIW CPU than out-of-order (OoO) execution, because the decisions of a JIT (which is mostly implemented in software, with some hardware assistance) are too coarse-grained: a JIT engine takes a very long time to react to a real-time change, at least N*100 CPU cycles to examine and rewrite code, while OoO execution, implemented mostly in hardware, can respond to a real-time change in just a few CPU cycles. That is a 100-fold (or greater) difference in response time. Secondly, a JIT by definition cannot access much of the telemetry available to an OoO engine: a large part of the real-time telemetry that matters for optimization is valid for only a few CPU cycles.

I am not against JIT, and not against VLIW in-order CPUs without OoO. I am just saying that there is a fundamental dichotomy between where JIT compilation is useful and where OoO execution is useful: JIT compilation cannot fully replace OoO execution. JIT and OoO can coexist (obvious example: a Java VM running on an i386 CPU) and their individual speedups are additive, but there is a very large performance gap between a VLIW in-order CPU with an optional JIT (e.g., the Tachyum V1 CPU from the Hot Chips 2018 PDF running a Java application) and an OoO CPU with an optional JIT (e.g., the current Tachyum "V1+" CPU architecture from 2022 running a Java application).
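The argument about schedules fixed ahead of time versus decisions made cycle by cycle can be illustrated with a toy model. This is not a model of Prodigy or any real core: it is a single-issue machine with an unbounded out-of-order window, register renaming assumed (so only true dependencies matter), and invented latencies of 3 cycles for a cache hit and 20 for a miss. The "hoisted" program stands in for a compiler's VLIW-style static schedule that assumes the load hits.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass(frozen=True)
class Instr:
    name: str
    dest: str
    srcs: Tuple[str, ...]
    latency: int  # cycles from issue until the result can be consumed


def run(prog: List[Instr], out_of_order: bool) -> int:
    """Toy single-issue core; returns the cycle at which the last result is ready.
    In-order: only the oldest unissued instruction may issue, else the core stalls.
    Out-of-order: each cycle, issue the oldest instruction whose inputs are ready."""
    # For each source operand, find the most recent earlier writer (None = live-in).
    producers: List[List[Optional[int]]] = []
    last_writer = {}
    for i, ins in enumerate(prog):
        producers.append([last_writer.get(r) for r in ins.srcs])
        last_writer[ins.dest] = i

    done_at: List[Optional[int]] = [None] * len(prog)
    pending = list(range(len(prog)))
    cycle = 0
    while pending:
        window = pending if out_of_order else pending[:1]
        for i in window:
            if all(p is None or (done_at[p] is not None and done_at[p] <= cycle)
                   for p in producers[i]):
                done_at[i] = cycle + prog[i].latency
                pending.remove(i)
                break  # single issue: at most one instruction per cycle
        cycle += 1
    return max(d for d in done_at if d is not None)


def make_program(load_latency: int, hoisted: bool) -> List[Instr]:
    ld = Instr("ld", "r1", (), load_latency)  # real latency depends on hit/miss at run time
    add = Instr("add", "r2", ("r1",), 1)      # consumes the load result
    muls = [Instr(f"mul{k}", f"r{3 + k}", ("r9",), 3) for k in range(4)]  # independent work
    if hoisted:
        # Static schedule that assumes a 3-cycle hit: two independent
        # multiplies are placed between the load and its consumer.
        return [ld, muls[0], muls[1], add, muls[2], muls[3]]
    return [ld, add] + muls


if __name__ == "__main__":
    for lat in (3, 20):
        print(lat,
              run(make_program(lat, hoisted=False), out_of_order=False),
              run(make_program(lat, hoisted=True), out_of_order=False),
              run(make_program(lat, hoisted=False), out_of_order=True))
```

In this model, when the load hits (3 cycles) the static schedule matches out-of-order execution at 8 cycles versus 10 for naive in-order code. When the same load misses (20 cycles), the static schedule needs 25 cycles while the out-of-order core finishes in 21, because it fills the stall with the remaining independent work as soon as the miss is observed.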