If you think PCIe 5.0 runs hot, wait till you see PCIe 6.0's new thermal throttling technique

Intel is working on a PCIe Bandwidth Controller Linux driver to keep thermals in check.

The next generations of PCIe are becoming so demanding that Intel is now designing techniques to reduce the bus speed, or even the width of the PCIe link, to prevent devices from overheating. Since last year, Intel has been developing a Linux PCIe bandwidth controller driver designed to keep thermals in check, reports Phoronix. That work includes plumbing in new mechanisms for PCIe 6.0.

The source of rising temperatures in PCIe devices is simple: devices run faster to saturate a faster bus, and so generate more heat. As the bus gets faster, it also demands better signal integrity and tolerates less signal loss. This is usually countered with better encoding or with higher clocks and power, and the latter two create extra heat.

In the near term, the driver will mitigate thermal issues on PCIe 5.0 by reducing the link speed: the bus downshifts from its top rate of 32 GT/s per lane to slower speeds to keep heat in the safe zone. The goal is to let devices hold safe temperatures even under heavy load. While the current focus is on controlling link speed, Intel plans to extend the driver to manage link width (the number of active PCIe lanes), which the PCIe 6.0 specification enables. For instance, a PCIe x16 device could shift down to an x8 or x4 connection to control thermals.
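
To put rough numbers on what a downshift costs, here is a back-of-the-envelope sketch that computes approximate one-direction bandwidth from the per-lane transfer rate, the lane count, and the line encoding (8b/10b for PCIe 1.0 and 2.0, 128b/130b for 3.0 through 5.0). It ignores protocol overhead, leaves out PCIe 6.0's PAM4/FLIT encoding, and the function name `link_bandwidth_gbps` is purely illustrative. Between PCIe 3.0 and 5.0, each speed step halves the ceiling, and so does halving the lane count; the x8 and x4 rows use Gen5 rates only to show the arithmetic, since runtime width throttling needs PCIe 6.0's L0p.

```python
# Per-generation per-lane transfer rate (GT/s) and line-code efficiency.
# Gen 1-2 use 8b/10b encoding; Gen 3-5 use 128b/130b.
# Protocol overhead (TLP headers, DLLPs, flow control) is ignored,
# so real-world throughput is somewhat lower than these figures.
PCIE_GENS = {
    1: (2.5, 8 / 10),
    2: (5.0, 8 / 10),
    3: (8.0, 128 / 130),
    4: (16.0, 128 / 130),
    5: (32.0, 128 / 130),
}

VALID_WIDTHS = (1, 2, 4, 8, 16)


def link_bandwidth_gbps(gen: int, width: int) -> float:
    """Approximate one-direction bandwidth in GB/s after line encoding."""
    if gen not in PCIE_GENS:
        raise ValueError(f"unsupported generation: {gen}")
    if width not in VALID_WIDTHS:
        raise ValueError(f"unsupported link width: x{width}")
    rate_gts, efficiency = PCIE_GENS[gen]
    # GT/s per lane * lanes * encoding efficiency / 8 bits per byte
    return rate_gts * width * efficiency / 8


if __name__ == "__main__":
    full = link_bandwidth_gbps(5, 16)
    for label, gen, width in [
        ("Gen5 x16 (full speed)", 5, 16),
        ("Gen4 x16 (one speed step)", 4, 16),
        ("Gen3 x16 (two speed steps)", 3, 16),
        ("Gen5 x8 (half width)", 5, 8),
        ("Gen5 x4 (quarter width)", 5, 4),
    ]:
        bw = link_bandwidth_gbps(gen, width)
        print(f"{label:28s} {bw:6.2f} GB/s ({bw / full:.0%} of full)")
```

By this estimate, a Gen5 x16 link tops out at about 63 GB/s per direction, and a single speed step down to Gen4 rates leaves about 31.5 GB/s, which gives a sense of the trade-off the driver makes.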

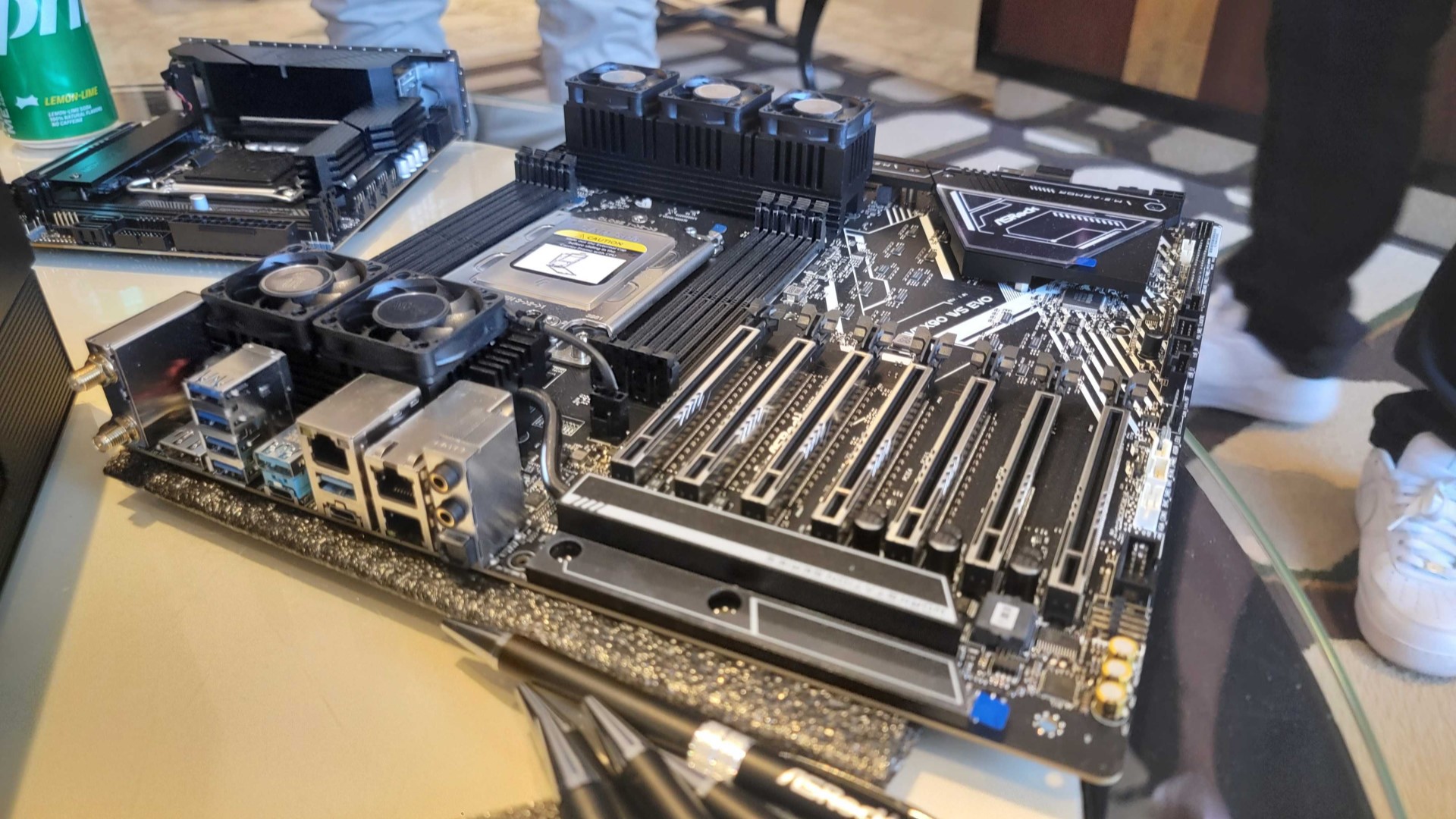

The introduction of PCIe 6.0 could present a serious thermal challenge, particularly for GPU servers that run hundreds of PCIe links simultaneously. "This series adds PCIe bandwidth controller (bwctrl) and associated PCIe cooling driver to the thermal core side for limiting PCIe Link Speed due to thermal reasons," Intel's description of the driver reads. "PCIe bandwidth controller is a PCI express bus port service driver. A cooling device is created for each port the service driver finds if they support changing speeds. This series only adds support for controlling PCIe Link Speed. Controlling PCIe Link Width might also be useful but AFAIK, there is no mechanism for that until PCIe 6.0 (L0p) so Link Width throttling is not added by this series."
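
The cooling-device arrangement in Intel's description can be pictured with a toy model. The Python below is a hypothetical sketch, not the bwctrl code: it borrows the get_max_state/get_cur_state/set_cur_state shape of Linux thermal cooling devices, assumes cooling state 0 means full speed and each higher state means one speed step slower, and skips ports that can run at only one speed. The class name, speed table, and port names are illustrative assumptions.

```python
from dataclasses import dataclass, field

# Per-lane speeds in GT/s, fastest first (PCIe 5.0 down to 1.0).
SPEEDS_GTS = (32.0, 16.0, 8.0, 5.0, 2.5)


@dataclass
class PcieSpeedCoolingDevice:
    """Hypothetical per-port cooling device that throttles link speed.

    Mirrors the get_max_state/get_cur_state/set_cur_state shape of
    Linux thermal cooling devices; it is not the actual bwctrl driver.
    State 0 means no throttling; each higher state drops one speed step.
    """

    port: str
    max_supported_gts: float
    cur_state: int = 0
    _speeds: tuple = field(init=False, default=())

    def __post_init__(self) -> None:
        # Only speeds the port can actually run at are valid targets.
        self._speeds = tuple(s for s in SPEEDS_GTS if s <= self.max_supported_gts)
        if not self._speeds:
            raise ValueError(f"{self.port}: no supported speeds")

    def get_max_state(self) -> int:
        return len(self._speeds) - 1

    def get_cur_state(self) -> int:
        return self.cur_state

    def set_cur_state(self, state: int) -> None:
        if not 0 <= state <= self.get_max_state():
            raise ValueError(f"{self.port}: state {state} out of range")
        self.cur_state = state
        # A real driver would retrain the link at the new target speed here.

    def target_speed_gts(self) -> float:
        return self._speeds[self.cur_state]


def create_cooling_devices(ports: dict[str, float]) -> list[PcieSpeedCoolingDevice]:
    """Create one cooling device per port that can change speed.

    Ports limited to a single speed have nothing to throttle and are
    skipped, echoing the "if they support changing speeds" condition.
    """
    devices = []
    for name, max_gts in ports.items():
        dev = PcieSpeedCoolingDevice(name, max_gts)
        if dev.get_max_state() > 0:
            devices.append(dev)
    return devices
```

In this model, a thermal governor would raise a port's state as it heats up and lower it again as it cools, and the target speed follows the state.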

While Intel's commitment to improving server thermal controls is understandable, how the throttling will be triggered in practice remains to be seen. One option is to use data from thermal sensors in PCIe hosts, endpoints, and retimers, exposed through standardized interfaces.
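
How sensor data might drive a per-port cooling device is also an open question. As one illustration, here is a hypothetical step-wise policy, loosely in the spirit of the Linux thermal framework's step_wise governor but much simplified: it deepens throttling by one state while a sensor reads at or above a trip point, and backs off one state once the reading falls below the trip point minus a hysteresis margin. The 85 °C trip point, the 5 °C hysteresis, the function names, and the sample readings are all made-up values for illustration.

```python
def next_cooling_state(
    temp_c: float,
    cur_state: int,
    max_state: int,
    trip_c: float = 85.0,
    hysteresis_c: float = 5.0,
) -> int:
    """Pick the next throttling state from one temperature sample.

    Hypothetical policy: step one state deeper while at or above the trip
    point, step one state back once below trip_c - hysteresis_c.
    """
    if temp_c >= trip_c:
        return min(cur_state + 1, max_state)
    if temp_c < trip_c - hysteresis_c:
        return max(cur_state - 1, 0)
    return cur_state  # inside the hysteresis band: hold steady


def simulate(readings: list[float], max_state: int = 4) -> list[int]:
    """Run the policy over a series of sensor readings, starting unthrottled."""
    state = 0
    history = []
    for temp in readings:
        state = next_cooling_state(temp, state, max_state)
        history.append(state)
    return history


if __name__ == "__main__":
    # Made-up readings from a hypothetical endpoint or retimer sensor.
    temps = [70, 84, 86, 88, 87, 83, 81, 79, 78, 75]
    print(simulate(temps))
```

The hysteresis band keeps the link from bouncing between speeds when the temperature hovers near the trip point, which matters because changing link speed requires retraining the link.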

Recently, the fifth set of patches for this driver was released, bringing refinements such as refactoring and clean-ups. This ongoing development reflects Intel's intention to enhance the Linux kernel's ability to handle thermal management with newer and faster PCI Express versions, including 6.0 and 7.0.

Although not yet complete, the latest updates of the driver show promising progress toward integration into the mainline kernel, according to Phoronix. How this will affect performance for AI training and HPC servers remains to be seen, but the capability gives Intel, server makers, and data center admins another way to manage server power consumption and heat dissipation.


Anton Shilov is a contributing writer at Tom’s Hardware. Over the past couple of decades, he has covered everything from CPUs and GPUs to supercomputers and from modern process technologies and latest fab tools to high-tech industry trends.


Dementoss: If it carries on like this, without major improvements in efficiency, PCs are going to need an external refrigeration system to chill the intake air... 🥶

hotaru251:

Quoting the article: "mitigate thermal issues by reducing PCIe link speeds to keep temperatures in check"

The question is how much this slows the data rates, and whether you're better off just using an older-gen drive at that point that doesn't have thermal issues.

dmitche31958: What is old is new again. Computers are going to require air conditioning to use them. LOL.

If the new standards can't run as rated, then why bother upgrading to them if you can use the older standard and spend less money?

Conor Stewart: I can't see how reducing the link speed or number of lanes is the best way to deal with thermals. Surely it would be better to limit it in other ways, like power and temperature limits in the cards themselves (isn't that already a thing?), or communication between the CPU and PCIe device so that, for instance, the GPU can tell the CPU it can't keep up, is overheating, and needs to slow down (I would be surprised if this isn't already a thing).

Adding a connection bottleneck rather than just slowing the device down seems like a very odd and nonsensical way of doing things. It would only make sense if there were no way of slowing the device down, in which case it just needs to be designed better.

If you are going to use a PCIe 6.0 x16 connection just for it to drop to PCIe 6.0 x8 or x4 under load, then you might as well just use PCIe 4.0 or 5.0. The same goes if it reduces the clock speed of the PCIe bus. Sure, you would lose out on burst performance, but the sustained performance would be the same.

Also, can't you just design the products better? If you know your product can only handle a PCIe 6.0 x4 connection under load, then why give it a 16-lane connection? The performance gained for the tiny amount of time it can use all 16 lanes (before it heats up) will be minimal and of no use to many applications, especially the likes of datacentres, where you want to use the devices constantly and as much as possible.

Also, what benefit do you get from reducing the lanes of a device in use? It's not like you can then use those lanes elsewhere; all the lanes are still taken up by that one device.

bit_user:

The article said: "As the PCIe bus gets faster, it becomes more demanding of signal integrity and less tolerant of signal loss, which is often combated by improving encoding or increasing clocks and power, with the latter two creating extra heat."

Even improvements in the encoding can burn more power. I'm sure PCIe's PAM4 does, not to mention its FEC computation.

bit_user:

dmitche31958 said: "What is old is new again. Computers are going to require air conditioning to use them."

They already do. PCIe 6.0 is used in servers, which run in air-conditioned machine rooms and datacenters.

dmitche31958 said: "If the new standards can't run as rated then why bother upgrading to them if you can use the older standard and spend less money?"

PCIe 6.0 was created and is being adopted to address market needs. The market wants faster speeds more than energy efficiency.

Conor Stewart said: "Also can't you just design the products better?"

Haven't you ever heard of a fan failure, or maybe a heatsink that's not properly mounted? Just as CPUs avoid burning themselves out in these situations, PCIe controllers also need to avoid frying chips (on some very expensive boards, I might add) in the event of a cooling failure.

Nicholas Steel:

dmitche31958 said: "What is old is new again. Computers are going to require air conditioning to use them. LOL. If the new standards can't run as rated then why bother upgrading to them if you can use the older standard and spend less money?"

Think of it like a CPU with Boost capability, or in other words, a PCI-E 5.0 device that can temporarily boost up to double the speed.

Conor Stewart:

bit_user said: "They already do. PCIe 6.0 is used in servers, which run in air conditioned machine rooms and datacenters. PCIe 6.0 was created and is being adopted to address market needs. The market wants faster speeds more than energy efficiency. Haven't you ever heard of a fan failure, or maybe a heatsink that's not properly mounted? Just like how CPUs will avoid burning themselves out in these situations, PCIe controllers also need to avoid frying chips (on some very expensive boards, I might add), in the event of a cooling failure."

How is the solution to cooling failures to reduce the number of PCIe lanes? The chips need to avoid frying themselves by slowing down or switching off if they get too hot. Why should controlling a device's thermals be the job of the PCIe controller?

This also seems to be talking about normal use, not failures, so that is a totally different argument anyway.