Which GPU Is Best for Mining Ethereum? AMD and Nvidia Cards, Tested

Ethereum: A Bitcoin Killer?

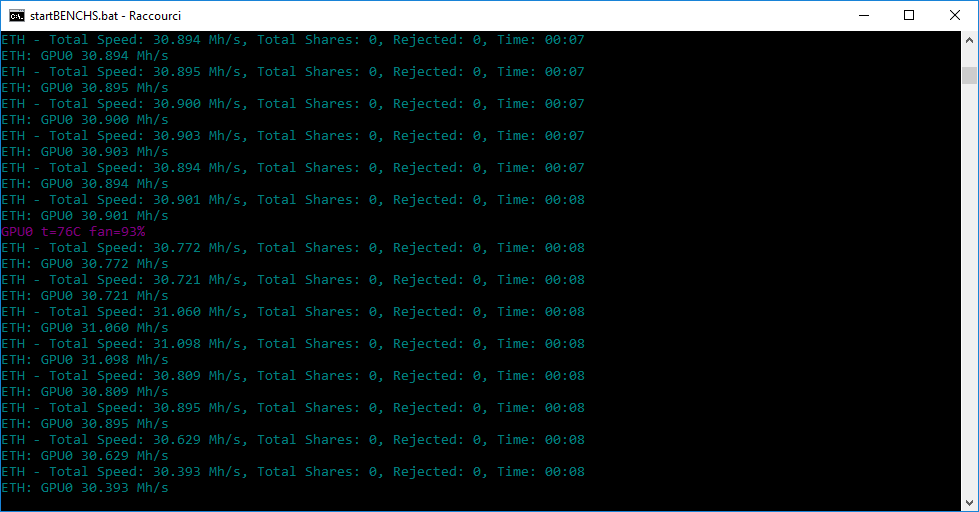

For this first update to our exploration of Ethereum mining performance, we're adding six graphics cards in the entry-level and mid-range categories. We're also preparing a second update that'll include high-end GPUs. Beyond our original line-up, the list of contenders now also includes:

- MSI GTX 1050 Ti Gaming X 4G
- MSI RX 580 Gaming 8G
- MSI RX 560 Aero ITX OC 4G
- Sapphire RX 560 Pulse OC 4G
- Sapphire RX 550 Pulse 4G
- Sapphire RX 470 Mining 4G

How far will the cryptocurrency madness go? While the number of different coins keeps increasing, only a handful have attained enough market capitalization to be truly viable. Ethereum, a blockchain-based distributed computing platform with an associated token called ether, is one of the most popular.

At the time of this writing, Ethereum (ETH) ranks second only to Bitcoin (BTC) in terms of market capitalization, with a total cap of almost $83 (£64) billion. In comparison, Bitcoin's market cap exceeds $140 (£108) billion (after hitting a peak of more than $325/£251 billion several weeks ago), and Litecoin (LTC), silver to Bitcoin's gold, crests at only $6.8 (£5.24) billion.

An Algorithm Perfectly Suited to GPUs

One of the advantages of Ethereum over Bitcoin or Litecoin has to do with the algorithm chosen to validate the proof-of-work (PoW). While Bitcoin relies on SHA-256 and Litecoin on Scrypt for their hash functions, Ethereum calls on an algorithm called Ethash, created especially for this purpose. It was designed from the start to prevent the development of dedicated ASICs to mine it.

Indeed, SHA-256 and Scrypt are extremely compute-hungry, which makes ASICs far more efficient at them than graphics cards (and even more so than CPUs). Ethash, by contrast, depends mostly on memory performance (frequency, timing, and bandwidth). With their fast GDDR5, GDDR5X, and HBM, graphics cards are well suited to mining Ethereum.

Performance Varies Greatly By Card

However, not all boards are created equal. Certain GPU architectures are quicker and more effective than others, and not all cards are loaded with the same type of graphics memory. Naturally, then, we set out to determine for ourselves which models are the most profitable to use for mining, narrowing our focus to some of the most in-demand mainstream solutions from AMD and Nvidia.

Our comparison includes graphics cards armed with modern GPUs: AMD is represented by Ellesmere, Baffin, Lexa, and Hawaii (that's the Radeon R9 390, Radeon RX 470/480, and Radeon RX 550/560/570/580), while Nvidia-based GP106/GP107 cards include the GeForce GTX 1050 Ti, GTX 1060 3GB, and GTX 1060 6GB.


We had to exclude certain boards because they were too slow (previous-gen GeForce GTX 9-series, for example) or came up short on memory capacity (GeForce GTX 1050 2GB and Radeon RX 460 2GB). As you'll soon see, those are important considerations, as two cards armed with the same GPU aren't necessarily equal when it comes to mining Ethereum.


abryant Archived comments are found here: http://www.tomshardware.com/forum/id-3520934/ethereum-mining-performance-geforce-radeon.html

-

Integr8d I like how Tom's laments the prices of GPUs, due to the mining craze, and then publishes articles like this...

-

derekullo

20677340 said: I like how Tom's laments the prices of GPUs, due to the mining craze, and then publishes articles like this...

If you know the enemy and know yourself, you need not fear the result of a hundred battles.

If you know yourself but not the enemy, for every victory gained you will also suffer a defeat.

If you know neither the enemy nor yourself, you will succumb in every battle.

-

WyomingKnott Add some workstation cards to the review. If they turn out to be hashmasters, it would relieve the strain on the graphics card market.

Fat chance.

-

derekullo

20677421 said: Add some workstation cards to the review. If they turn out to be hashmasters, it would relieve the strain on the graphics card market.

fat chance.

A Titan V would have handily crushed any of the cards here with its 70+ MH/s ETH rate.

https://hothardware.com/reviews/nvidia-titan-v-volta-gv100-gpu-review?page=5

But when it comes to making your money back you use:

Cost of graphics card / Megahashes per second (Easy to understand formula, real formula at the bottom)

For the GeForce 1060 that comes out to roughly

$400 / 25 MH/s, or 16 dollars per megahash (I was able to buy four 1060s for about $300 each a few months ago. Score!!!)

For the Titan V that comes out to roughly

$5000 / 82 MH/s, or 61 dollars per megahash.

As you can see it would take at least 3.8 times as long for a Titan V to pay itself off, not including the power cost.

This isn't the most accurate comparison because it doesn't take power into account, but it still gives an easily understandable way of seeing why a $5000 GPU isn't worth mining with.

You could have simply bought three GeForce 1060s and had a similar hash rate for less.
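As a rough sketch of the dollars-per-megahash comparison above, the arithmetic can be written in a few lines of Python. The function name `cost_per_mhs` is made up for illustration, and the prices and hash rates are simply the figures quoted in this post, not measured values.

```python
def cost_per_mhs(card_price_usd: float, hashrate_mhs: float) -> float:
    """Purchase price divided by hash rate, in dollars per MH/s.

    Hypothetical helper for illustration; it ignores power cost,
    exactly like the simplified formula in the post.
    """
    if hashrate_mhs <= 0:
        raise ValueError("hash rate must be positive")
    return card_price_usd / hashrate_mhs


# Figures quoted in the post (assumed, not measured here).
gtx_1060 = cost_per_mhs(400, 25)    # 16.0 dollars per MH/s
titan_v = cost_per_mhs(5000, 82)    # about 61 dollars per MH/s

# How many times more the Titan V costs per unit of hash rate.
ratio = titan_v / gtx_1060

print(f"GTX 1060: ${gtx_1060:.2f} per MH/s")
print(f"Titan V:  ${titan_v:.2f} per MH/s")
print(f"Titan V costs {ratio:.1f}x more per MH/s")
```

A lower number means the card pays for itself faster, all else being equal.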

The goal here being to make back the price of the graphics cards, then everything else is gravy.

Ideally I would have used the formula of

Cost of graphics card / Spreadsheet calculated monthly income taking power and other fluctuations into account.

to calculate on average how long a graphics card would take to pay itself off.

You can also use the calculator on https://whattomine.com/ to quickly calculate the "Spreadsheet calculated monthly income".

-

aquielisunari Sun Tzu

If you know the enemy and know yourself, you need not fear the result of a hundred battles.

If you know yourself but not the enemy, for every victory gained you will also suffer a defeat.

If you know neither the enemy nor yourself, you will succumb in every battle.

-

aquielisunari Bad job Tom's. Just add fuel to the GFX card debacle. Irresponsible and just wrong. First the user must go out and OVERSPEND on a GFX card and then follow this article and start mining and increase the shortages even further. Hm, good job Tom, good job.

-

derekullo

20677582 said: Bad job Tom's. Just add fuel to the GFX card debacle. Irresponsible and just wrong. First the user must go out and OVERSPEND on a GFX card and then follow this article and start mining and increase the shortages even further. Hm, good job Tom, good job.

Tom's gave you all the information needed for you to decide whether to mine or not.

Let me paint it a bit simpler.

Let's assume you believe the price of Ethereum is going to stay at what it is today, currently $709, forever.

Plugging in all that information into whattomine.com gives us:

https://whattomine.com/coins/151-eth-ethash?utf8=%E2%9C%93&hr=22.5&p=90.0&fee=1.0&cost=0.06&hcost=400&commit=Calculate

($0.06/kWh is the power cost in my area)

That works out to a $38.78 monthly profit, or a 309-day repayment period.

If you are confident that Ethereum's price will stay the same or maybe even rise, then you could expect to recoup your $400 purchase in at most 309 days.
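The repayment estimate above can be reproduced with a small Python sketch. The function names are hypothetical, and the 30-day month is an assumption (it is the value that matches the 309-day figure). The $400 price, $38.78 monthly profit, 90 W draw, and $0.06/kWh rate come from the post and its calculator link.

```python
def monthly_power_cost_usd(watts: float, usd_per_kwh: float,
                           days_per_month: float = 30.0) -> float:
    """Electricity cost of running a card around the clock for a month.

    days_per_month=30 is an assumption made for this sketch.
    """
    kwh = watts / 1000.0 * 24.0 * days_per_month
    return kwh * usd_per_kwh


def payback_days(card_price_usd: float, monthly_profit_usd: float,
                 days_per_month: float = 30.0) -> float:
    """Days needed for the monthly profit to cover the purchase price.

    Returns infinity when the card never turns a profit.
    """
    if monthly_profit_usd <= 0:
        return float("inf")
    return card_price_usd / monthly_profit_usd * days_per_month


# Figures from the post: a $400 card earning $38.78 per month after power.
days = payback_days(400, 38.78)
print(f"Repayment period: about {int(days)} days")

# Power cost for a 90 W card at $0.06/kWh, as used in the calculator link.
print(f"Monthly power cost: ${monthly_power_cost_usd(90, 0.06):.2f}")
```

The result only holds while the coin price and mining difficulty stay where they are, which is the same caveat the post makes.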

If 309 days is too long to wait or you are unsure about cryptocurrencies then stay out of the water.

-

WyomingKnott @aquielisunari

I don't think that Tom's is going to advance mining much; our longtime enthusiast members mostly won't get into it and it's widely enough known already. A little extra knowledge for those of us on the sidelines and, who knows, maybe miners will concentrate on the most efficient cards and the price of the rest will go down. Not an issue for me, I'm running a fanless GT430, so what do I care about current prices?