Gigabit Ethernet: Dude, Where's My Bandwidth?

Network Tests: Setting Our Expectations

Before we do anything else, we should test our hard drives without using the network to see what kind of bandwidth we can expect from them in an ideal scenario.

There are two PCs taking part in our real-world gigabit network. The first, which we’ll call the server, has two drives. Its primary drive is a 320 GB Seagate Barracuda ST3320620AS that’s a couple of years old. The server acts as a network-attached storage (NAS) device for a RAID array consisting of two 1 TB Hitachi Deskstar 0A-38016 drives, mirrored for redundancy.

The second PC on the network, which we’ll call the client, has only one drive: a 500 GB Western Digital Caviar 00AAJS-00YFA that is about six months old.

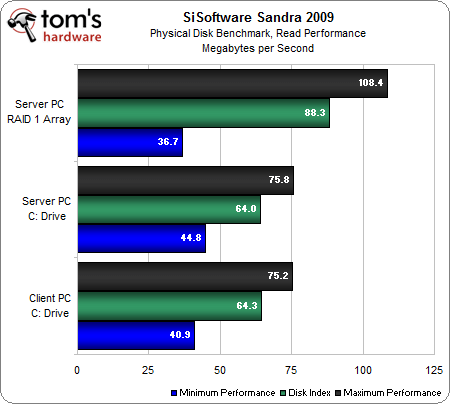

We first test the speed of both the server's and client computer's C: drives and see what kind of read performance we can expect to get from them. We’ll use SiSoftware Sandra 2009’s hard disk benchmark:

Right out of the gate, our hopes for achieving gigabit speed file transfers have disappeared. Both of our single hard disks have a maximum read speed of about 75 MB/s in ideal situations. Since this is a real-world test and the drives are about 60% full, we can expect read speeds closer to the 65 MB/s index that both of these drives share.

But have a look at the RAID 1 array's performance. The great thing about this RAID 1 array is that the hardware RAID controller can increase read performance by pulling data from both drives simultaneously, much as a striped RAID 0 array does. Note that this effect will probably only occur with hardware RAID controllers, not software RAID solutions. In our tests, the RAID array gives us much faster read performance than a single drive can, so our best chance for a quick file transfer over the network looks like it’s with our RAID 1 array. While the RAID array is delivering an impressive peak of 108 MB/s, we should be seeing real-world performance close to its 88 MB/s index because this array is about 55% full.

So, we should be able to demonstrate about 88 MB/s over our gigabit network, right? It’s not that close to a gigabit network’s 125 MB/s ceiling, but it’s a lot faster than 100 megabit networks that have a 12.5 MB/s ceiling, so 88 MB/s would be great in practice.
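The ceilings quoted above come from dividing the link's bit rate by eight. As a rough sketch of that arithmetic (the function name is ours, and the figures ignore any protocol overhead):

```python
def link_ceiling_mb_per_s(link_mbit_per_s: float) -> float:
    """Raw line rate in megabytes per second (8 bits per byte, decimal units)."""
    return link_mbit_per_s / 8


gigabit = link_ceiling_mb_per_s(1000)       # 125.0 MB/s
fast_ethernet = link_ceiling_mb_per_s(100)  # 12.5 MB/s

# The RAID 1 array's 88 MB/s Sandra index, as a share of the gigabit ceiling.
raid_index = 88.0
share_of_gigabit = raid_index / gigabit

print(f"Gigabit ceiling:       {gigabit} MB/s")
print(f"100 megabit ceiling:   {fast_ethernet} MB/s")
print(f"RAID index vs gigabit: {share_of_gigabit:.0%}")
```

By this measure, 88 MB/s would use roughly 70% of the gigabit link's raw capacity, and about seven times what a 100 megabit link could carry.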


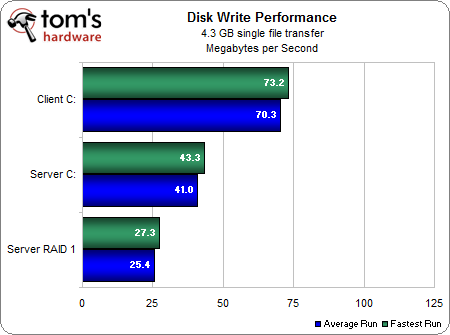

Not so fast. Just because our drives can read this quickly doesn’t mean they can write that fast in a real-world situation. Let’s try a few disk write tests before even using the network to see what happens. We’ll start with our server and copy a 4.3 GB image file from the speedy RAID array to the 320 GB system drive, and then back again. Then we'll try copying a file from the client PC's D: drive to its C: drive.
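For readers who want to repeat this kind of local copy test, here is a minimal sketch of how a single copy could be timed. It is not the method used for the numbers in this article; the function name is hypothetical, and operating system caching can inflate the result for files that fit in memory, so a large file such as our 4.3 GB image gives a more honest figure.

```python
import os
import shutil
import time


def timed_copy_mb_per_s(src: str, dst: str) -> float:
    """Copy src to dst and return the average rate in MB/s (decimal megabytes)."""
    size_bytes = os.path.getsize(src)
    start = time.perf_counter()
    shutil.copyfile(src, dst)
    # Guard against a zero interval on very small files.
    elapsed = max(time.perf_counter() - start, 1e-9)
    return size_bytes / 1_000_000 / elapsed
```

Calling `timed_copy_mb_per_s` with a source path on one drive and a destination path on another, several times over, and averaging the results would give a figure comparable to the averages quoted here.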

Yuck! Copying from the quick RAID array to the C: drive results in a mere 41 MB/s average transfer speed. And when copying from the C: drive to our RAID 1 array, the transfer speed drops even more to about 25 MB/s. What is going on here?

Well, this is what happens in the real world: The C: drive is only a little over a year old, but it’s about 60% full, probably fragmented a bit, and it’s no speed demon when writing. There are other factors as well, such as how fast the system and memory are in general. As for the RAID 1 array, it’s made up of relatively new hardware, but because it’s a redundant array, it has to write data to two drives simultaneously and is going to take a huge write performance hit. While RAID 1 can offer fast read performance, write performance is sacrificed. Alternatively, we could use a RAID 0 array with striped drives that delivers fast write and read performance, but if one of the drives dies, then all of the data is compromised. Realistically, RAID 1 is a better bet for folks who value their data enough to set up a NAS.

However, all is not lost yet, as we have a little light at the end of the tunnel. The newer 500 GB Western Digital Caviar is capable of writing this file at an average of 70.3 MB/s over five runs, and even recorded a top run of 73.2 MB/s.

With this in mind, we’re going to expect that our real-world gigabit LAN might demonstrate a maximum of about 73 MB/s on transfers from the NAS RAID 1 array to the client’s C: drive. We’ll also test file transfers from the client’s C: drive to the server’s C: drive to see if we can realistically expect about 40 MB/s in that direction.
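The reasoning behind these expectations is that a transfer can go no faster than its slowest stage. A simple sketch of that estimate follows, using the figures measured above. The function name is ours, and the model is a simplifying assumption that ignores protocol overhead and treats 1 GB as 1,000 MB.

```python
def expected_transfer_mb_per_s(source_read: float,
                               link_ceiling: float,
                               dest_write: float) -> float:
    """Estimate throughput as the slowest of the three stages (all in MB/s)."""
    return min(source_read, link_ceiling, dest_write)


GIGABIT_CEILING = 125.0  # MB/s, raw line rate

# NAS RAID 1 array (88 MB/s read index) to the client's C: drive (73.2 MB/s best write).
nas_to_client = expected_transfer_mb_per_s(88.0, GIGABIT_CEILING, 73.2)

# Client's C: drive (65 MB/s read index) to the server's C: drive (41 MB/s measured write).
client_to_server = expected_transfer_mb_per_s(65.0, GIGABIT_CEILING, 41.0)

# Time to move the 4.3 GB test image at the NAS-to-client rate.
seconds_for_image = 4.3 * 1000 / nas_to_client

print(f"NAS to client:    {nas_to_client} MB/s")
print(f"Client to server: {client_to_server} MB/s")
print(f"4.3 GB image:     about {seconds_for_image:.0f} seconds")
```

In both directions the destination drive's write speed, not the network, is the limiting stage in this estimate.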


Don Woligroski was a former senior hardware editor for Tom's Hardware. He has covered a wide range of PC hardware topics, including CPUs, GPUs, system building, and emerging technologies.

-

drtebi Interesting article, thank you. I wonder how a hardware-based RAID 5 would perform on a gigabit network compared to a RAID 1?

-

Hello

Thanks for the article. But I would like to ask how the transfer speed is measured. If it is just (size of the file)/(time needed for the transfer), you are probably consuming all the bandwidth, because you have to count in all the control parts of the data packet (Ethernet header, IP header, TCP header...)

Blake

-

jankee The article does not make any sense and was created by a rookie. Remember, you will not see a big difference when transferring a small amount of data, due to transfer negotiation across the network. Try transferring an 8 GB file or folder, and then you will see the difference. It is the same concept as trying to race a Honda Civic against a Ferrari over a distance of just 20 feet.

Hope this clears it up.

-

spectrewind Don Woligroski has some incorrect information, which invalidates this whole article. He should be writing about hard drives and mainboard bus information transfers. This article is entirely misleading.

For example: "Cat 5e cables are only certified for 100 ft. lengths"

This is incorrect. 100 meters (or 328 feet) maximum official segment length.

Did I miss the section on MTU and data frame sizes? Segment? Jumbo frames? 1500 vs. 9000 for consumer devices? Fragmentation? TIA/EIA? These words and terms should have occurred in this article, but were omitted.

Worthless writing. THG *used* to be better than this.

-

IronRyan21 "There is a common misconception out there that gigabit networks require Category 5e class cable, but actually, even the older Cat 5 cable is gigabit-capable."

Really? I thought Cat 5 wasn't gigabit capable? In fact, Cat 6 was the only way to go gigabit.

-

cg0def Why didn't you test SSD performance? It's quite a hot topic and I'm sure a lot of people would like to know if it will in fact improve network performance. I can venture a guess but it'll be entirely theoretical.

-

flinxsl Do you have any engineers on your staff that understand how this stuff works? When you transfer some bits of data over a network, you don't just shoot the bits directly; they are sent in something called packets. Each packet contains control bits as overhead, which count toward the 125 MB/s limit, but don't count as data bits.

11% loss due to negotiation and overhead on a network link is about ballpark for a home test.

-

jankee After carefully reading the article, I believe this is not a tech review, just a concern from a newbie, because he does not understand much about all the external factors in data transfer. His simple thought is that 1000 is ten times 100 Mb/s, so he expects it to be 10 times faster.

Anyway, many different factors will affect the transfer speed. The most accurate test needs to use a RAM drive and powerful machines to eliminate the machine bottleneck factor.