AMD Ryzen 9 3900X and Ryzen 7 3700X Review: Zen 2 and 7nm Unleashed

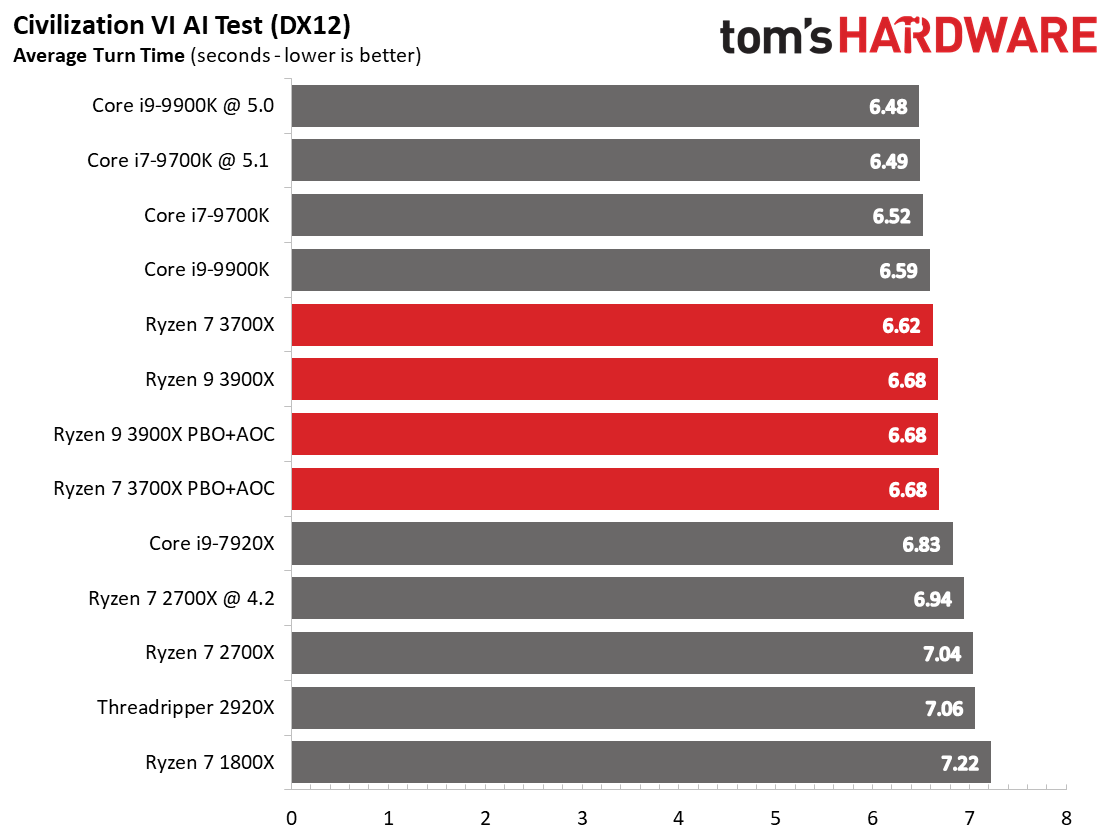

Civilization VI AI Test

This Civilization VI test measures AI performance in a turn-based scenario and tends to prize per-core performance. The 3900X doesn't benefit from the auto-overclocking features, and the 3700X again suffers a slight decline in performance, indicating there are still a few kinks to work out. In either case, both processors at stock settings easily beat their previous-gen predecessors, even when those predecessors are overclocked.

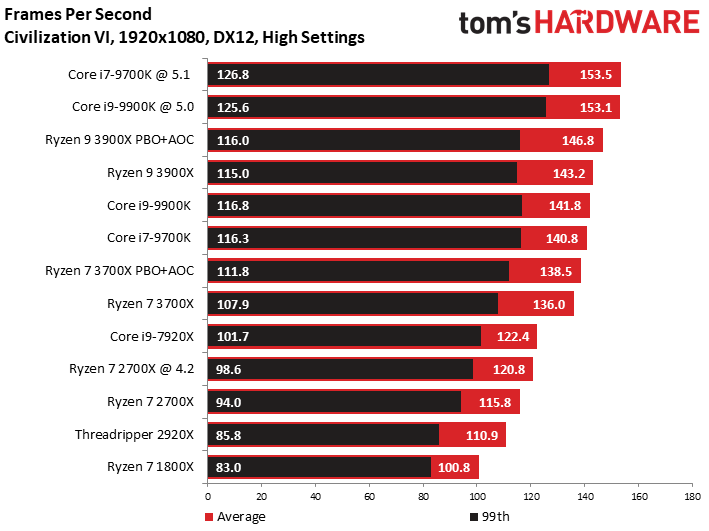

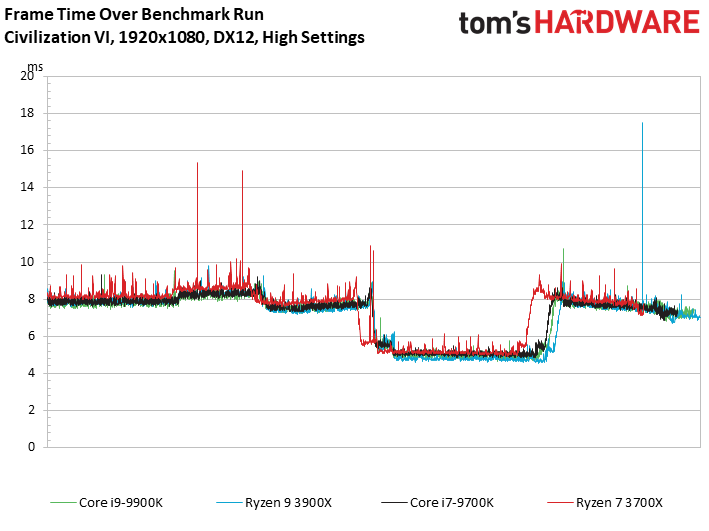

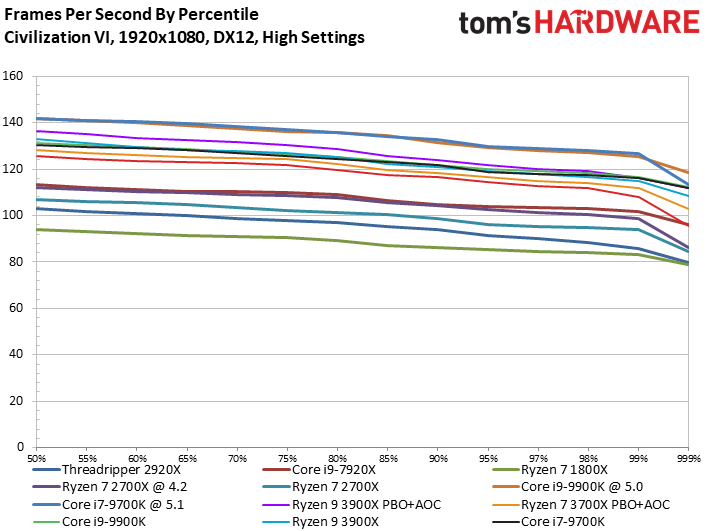

Civilization VI Graphics Test

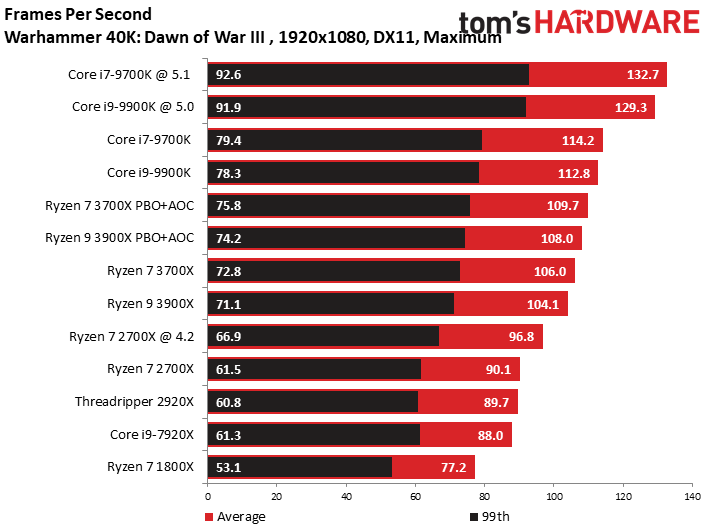

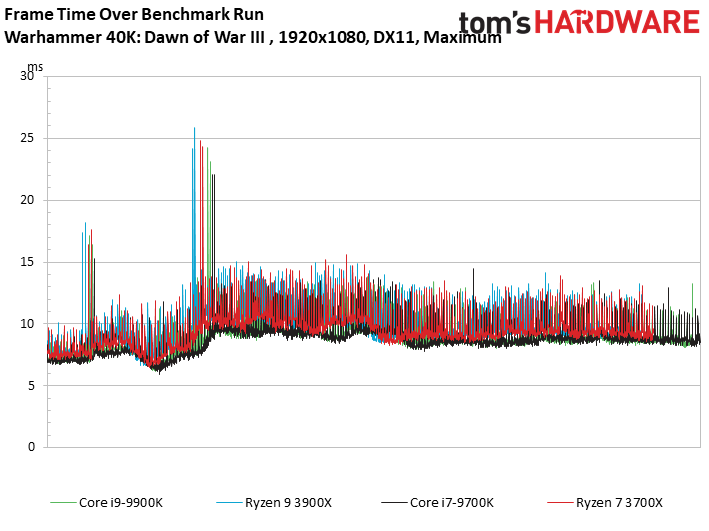

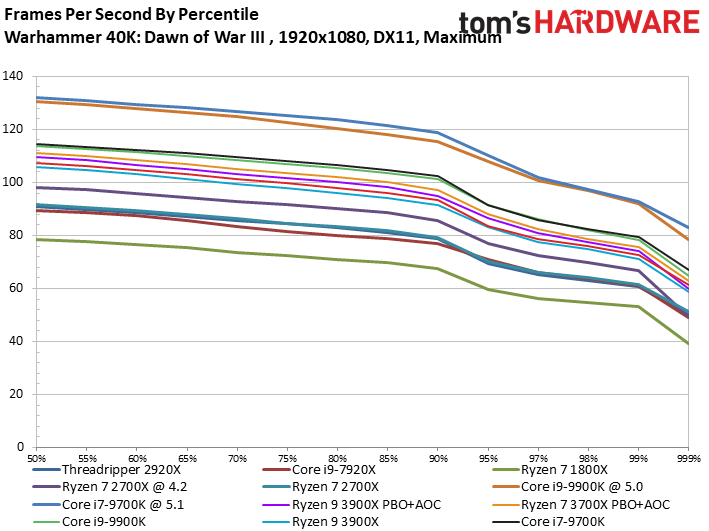

Warhammer 40,000: Dawn of War III

The Warhammer 40,000 benchmark responds well to threading, but it's clear that Intel's per-core performance advantage has a big impact.

Paul Alcorn is the Editor-in-Chief for Tom's Hardware US. He also writes news and reviews on CPUs, storage, and enterprise hardware.

Isokolon

Too bad there wasn't a 3800X included. It would be interesting to see if the price premium for the 3800X over the 3700X is indeed worth it.

feelinfroggy777

Nice for workstation tasks, but disappointing that it basically ties Intel in gaming, if not just a tad behind.

salgado18

feelinfroggy777 said: Nice for workstation tasks, but disappointing that it basically ties Intel in gaming, if not just a tad behind.

It tied Intel for less money, with a great bundled cooler and a cheaper platform (edit: and less power too). Also, unless you use a 144Hz monitor, the difference is purely synthetic. Did you expect it to be way faster than a 5 GHz Intel magically?

Phaaze88

Abel Rivera1 said: Thank you so much and to be honest the only reason why I bought the 750tx Corsair psu is because it was 30$, can I ask you if the gtx770 2gb will run battlefield 1 on high?

Isokolon said: too bad there wasn't a 3800X included, would be interesting to see if the price tag for the 3800X over the 3700X is indeed worth it

I can't imagine it would be.

If you look at the 3800X as a binned 3700X (that's basically what it would be), grab one if it goes on sale closer to the 3700X's price.

These chips don't overclock any better than their predecessors, which weren't good overclockers to begin with, so whatever extra clocks you get with a 3800X will hardly be noticeable and won't be worth a $50+ price increase over the 3700X.

velocityg4

The overclocking results were disappointing: 4.1GHz max on all cores. Given that the 3950X does a 4.7GHz single-core turbo boost and the 3900X does a 4.6GHz single-core turbo boost, I'd have assumed any of the Ryzen 3000 chips would OC to 4.6/4.7GHz on all cores with decent air/water cooling.

Power consumption: AIDA64 seems to punish AMD a lot more than Intel, whereas Prime95 punished Intel a lot more. The switch from Prime95 to AIDA64 seems to give Intel an unfair advantage in the stress-test power consumption numbers, while Prime95 gave AMD an unfair advantage. I'd suggest using both in reviews, or finding another torture test that will fully punish both AMD and Intel for a max-load test. With such wild variation, I can't see how either is an accurate measure of a CPU under full load.

Example Review: https://www.tomshardware.com/reviews/intel-core-i9-9900k-9th-gen-cpu,5847-11.html

The Intel i9-9900K hit 204.6W in your old review's stress test. This time it is only 113W.

The AMD Ryzen 2700X hit 104.7W in your old review. Now it is 133W.
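
To put the swing described above in relative terms, here is a minimal Python sketch. It uses only the four wattage figures quoted in this comment; the helper name `percent_change` and the dictionary keys are illustrative assumptions, and the script takes the commenter's attribution of the numbers to the old and new reviews at face value.

```python
# Stress-test power figures as quoted in the comment above (watts).
# "old_review" and "this_review" are illustrative labels, not official test names.
readings = {
    "Core i9-9900K": {"old_review": 204.6, "this_review": 113.0},
    "Ryzen 7 2700X": {"old_review": 104.7, "this_review": 133.0},
}


def percent_change(old: float, new: float) -> float:
    """Relative change from old to new, in percent."""
    return (new - old) / old * 100.0


for chip, watts in readings.items():
    delta = percent_change(watts["old_review"], watts["this_review"])
    print(f"{chip}: {watts['old_review']} W -> {watts['this_review']} W ({delta:+.1f}%)")
```

On these figures the Intel reading falls by roughly 45 percent while the AMD reading rises by roughly 27 percent, which is the opposite-direction shift the comment is pointing at.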

feelinfroggy777

salgado18 said: It tied Intel for less money, with a great bundled cooler and a cheaper platform (edit: and less power too). Also, unless you use a 144Hz monitor, the difference is purely synthetic. Did you expect it to be way faster than a 5 GHz Intel magically?

It did tie Intel in gaming. It tied basically the same Intel CPUs that have been on the market since 2015 with Skylake. We are in the back half of 2019 and we see the same gaming performance that we had in 2015 mainstream CPUs.

We know AMD is cheaper and comparing clockspeeds against different architectures between Intel and AMD is silly. But it would be nice to see some tangible improvement regarding fps with CPUs. The GPU still remains king when it comes to a quality gaming build.

delaro

I've seen reviews from five different sites and the conclusions bounce all over the place, which makes me think there is much to do on the software optimization side. I wasn't expecting gaming FPS to change all that much, with many of the titles being tested having partnered with or been optimized around Intel.

jimmysmitty

feelinfroggy777 said: Nice for workstation tasks, but disappointing that it basically ties Intel in gaming, if not just a tad behind.

Minus the ability to overclock, yes, tied. Most people who buy the 9900K will not be buying it to leave it stock.

velocityg4 said: The overclocking results were disappointing: 4.1GHz max on all cores. Given that the 3950X does a 4.7GHz single-core turbo boost and the 3900X does a 4.6GHz single-core turbo boost, I'd have assumed any of the Ryzen 3000 chips would OC to 4.6/4.7GHz on all cores with decent air/water cooling.

Power consumption: AIDA64 seems to punish AMD a lot more than Intel, whereas Prime95 punished Intel a lot more. The switch from Prime95 to AIDA64 seems to give Intel an unfair advantage in the stress-test power consumption numbers, while Prime95 gave AMD an unfair advantage. I'd suggest using both in reviews, or finding another torture test that will fully punish both AMD and Intel for a max-load test. With such wild variation, I can't see how either is an accurate measure of a CPU under full load.

Example Review: https://www.tomshardware.com/reviews/intel-core-i9-9900k-9th-gen-cpu,5847-11.html

The Intel i9-9900K hit 204.6W in your old review's stress test. This time it is only 113W.

The AMD Ryzen 2700X hit 104.7W in your old review. Now it is 133W.

It's what I wanted to know. Ryzen has always been pushed to the limit in terms of clock speed, and Zen 2 is no different, it seems: little to no headroom. AnandTech was able to get it to 4.3GHz on all cores, but manual overclocking seems to disable boost clocking, which in turn cuts 300MHz from single-core clocks.

As for the power consumption, the differences probably come down to what each test prioritizes. I know Prime95 heavily uses AVX, which is a power hog. I'm not as sure about AIDA64 since I've never used it. I always use Prime95 and IBT for stability.

feelinfroggy777 said: It did tie Intel in gaming. It tied basically the same Intel CPUs that have been on the market since 2015 with Skylake. We are in the back half of 2019 and we see the same gaming performance that we had in 2015 mainstream CPUs.

We know AMD is cheaper and comparing clockspeeds against different architectures between Intel and AMD is silly. But it would be nice to see some tangible improvement regarding fps with CPUs. The GPU still remains king when it comes to a quality gaming build.

It's not silly to compare clock speeds, as those can be advantages. Intel still clearly has a clock speed advantage, and that advantage will keep its chips priced higher. We might see some price drops, but I doubt we will see enough to make it feel like the Athlon 64 days again.

As much crap as people give Intel for getting stuck at 14nm, I have to give them props for having a five-year-old process tech beat modern process tech, especially one that's supposed to be "half" the size. I know it's not quite that simple, as most 7nm processes out there are still less dense than Intel's initial 10nm plans, but it still goes to show that the nm figure has become pointless, a marketing gimmick more than anything.

The only way a CPU matters gaming-wise is how long it will last before it bottlenecks the GPU. While it's still early, the clock speed and overclocking advantage Intel has might make its CPUs last longer in gaming than Zen 2. Only time will tell, but maybe AMD will get a better process tech in a few years and finally compete like in the old days.

martinch

jimmysmitty said: It's not silly to compare clock speeds, as those can be advantages.

Unless you're trying to give an indication of "performance-per-MHz" of varying architectures, yes, comparing clock speeds between differing architectures is a fundamentally invalid comparison (it's also not exactly an accurate predictor of per-core performance).

feelinfroggy777

jimmysmitty said: It's not silly to compare clock speeds, as those can be advantages. Intel still clearly has a clock speed advantage, and that advantage will keep its chips priced higher. We might see some price drops, but I doubt we will see enough to make it feel like the Athlon 64 days again.

Clock speeds between AMD and Intel are not apples to apples. Bulldozer hit 5GHz and it was a terrible CPU. Just because it could hit 5GHz did not make it a good chip.
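
The point about clock speed not being comparable across architectures can be sketched with a first-order model in which per-core throughput is roughly instructions per cycle (IPC) times clock frequency. The Python snippet below is only an illustration: the function name is made up for this example, the two chips are hypothetical, and the IPC values are invented numbers rather than measurements of any real Intel or AMD processor. The model also ignores memory latency, cache behavior, and boost behavior.

```python
def relative_per_core_throughput(ipc: float, clock_ghz: float) -> float:
    """First-order estimate: instructions per cycle times clock speed in GHz."""
    return ipc * clock_ghz


# Hypothetical chips with invented IPC figures (not real measurements).
high_clock_chip = relative_per_core_throughput(ipc=1.0, clock_ghz=5.0)
high_ipc_chip = relative_per_core_throughput(ipc=1.3, clock_ghz=4.2)

print(f"5.0 GHz chip, IPC 1.0: {high_clock_chip:.2f}")
print(f"4.2 GHz chip, IPC 1.3: {high_ipc_chip:.2f}")

if high_ipc_chip > high_clock_chip:
    print("The lower-clocked chip comes out ahead in this toy model.")
```

Under these assumed numbers the 4.2GHz part wins despite an 800MHz clock deficit, which is why a raw GHz figure says little without knowing how much work each architecture does per cycle.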