China provides 'computing vouchers' for AI startups to train large language models — designed to combat rising GPU costs due to US sanctions

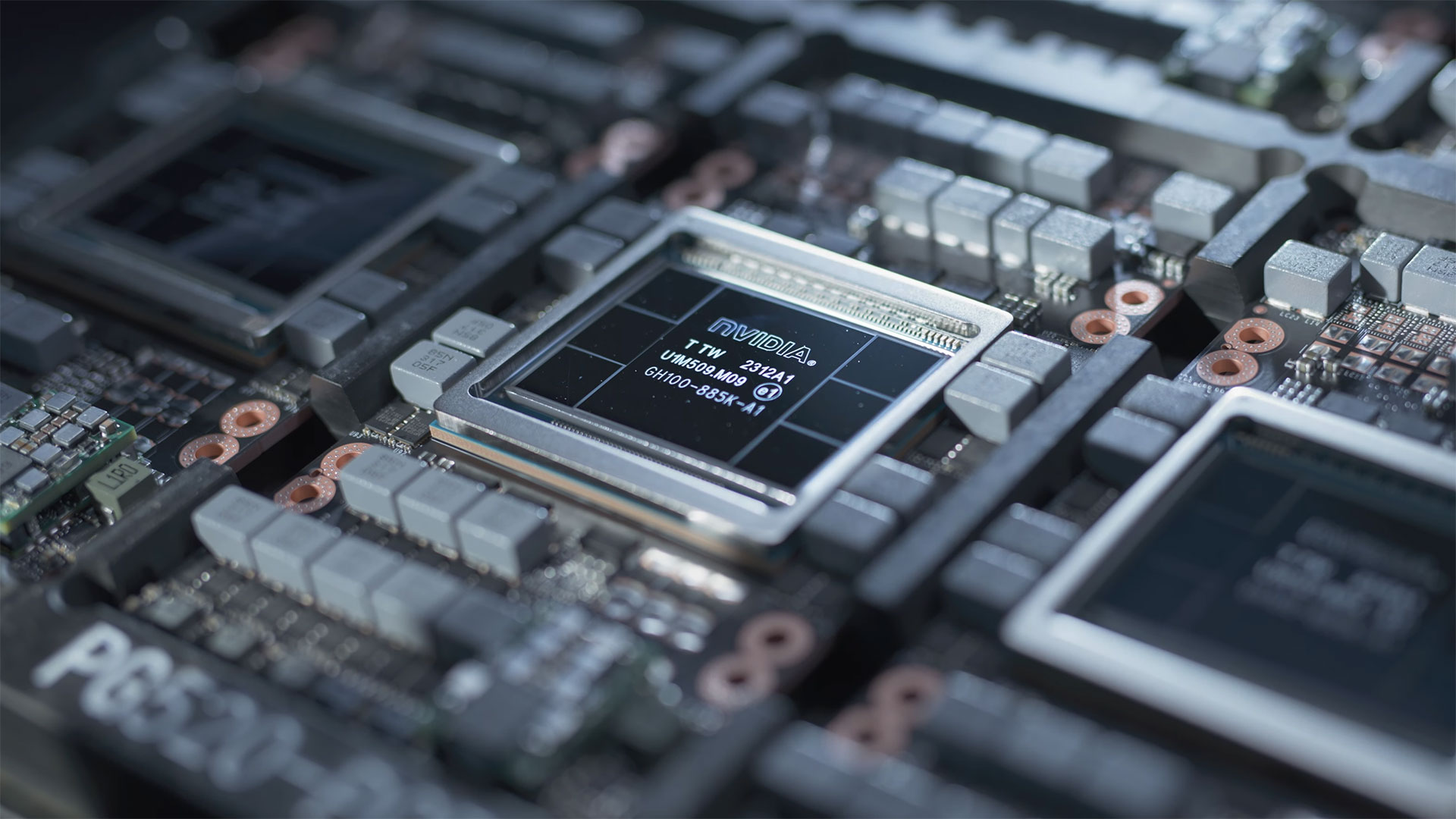

Chinese AI startups have trouble accessing computing resources due to U.S. export rules.

China is implementing measures to support its AI startups, which struggle to access high-performance Nvidia GPUs for large language model (LLM) training because of U.S. export rules. Several city governments, including Shanghai, are offering 'computing vouchers' to AI startups to subsidize the cost of training their LLMs, the Financial Times reports.

The computing vouchers are worth between $140,000 and $280,000 and are designed to subsidize data center costs. The initiative targets two problems startups face: rising data center costs and the scarcity of crucial Nvidia processors in China. That scarcity is a result of the latest U.S. export rules, which prohibit Nvidia and other companies from selling advanced AI processors to customers in China and other countries.

The situation has been worsened by Chinese internet giants such as Alibaba, Tencent, and ByteDance, which have limited the rental of Nvidia GPUs and prioritized their use for internal purposes and key clients. This prioritization came in response to tighter U.S. export rules, which also led to the stockpiling of Nvidia GPUs and the use of alternative methods to source crucial AI chips.

Despite these efforts, industry analysts believe the vouchers will only partially address the problem, as they do not solve the core issue of resource availability.

"The voucher is helpful to address the cost barrier, but it will not help with the scarcity of the resources," Charlie Chai, an analyst at research group 86Research, told the Financial Times.

China is working to increase its self-reliance by subsidizing AI groups that use domestic chips and by developing state-run data centers and cloud services as alternatives to Big Tech's offerings. This includes plans to create data center clusters under the 'East Data West Computing' project to allocate resources more efficiently and reduce power consumption for AI workloads.

The government's support includes reducing computing costs by 40% to 50% for AI companies using government-run data centers, albeit with stringent eligibility criteria. This initiative is part of broader efforts to accelerate AI adoption and maintain strict oversight of its application, including the approval of LLMs for public use and centralizing the distribution of computing power through state-run platforms.


Anton Shilov is a contributing writer at Tom’s Hardware. Over the past couple of decades, he has covered everything from CPUs and GPUs to supercomputers and from modern process technologies and latest fab tools to high-tech industry trends.


dmitche3 Since every country sues another country in the World Court of fools for subsidizing their industries, will the rest of the world remember that China subsidized their AI companies when it comes in the future to a head-to-head competition? Nah.

digitalgriffin Whenever any government subsidizes the cost of anything to make it "more affordable," it only drives costs up.

Guaranteed student loans? College tuition shoots through the roof.

Cheap money with a low prime rate? Inflation and housing costs shoot through the roof.

Subsidize ethanol? Other countries buy it up because it's cheaper from the USA, and corn prices shoot through the roof.

Cheap money is never cheap.

nookoool

> dmitche3 said: Since every country sues another country in the World Court of fools for subsidizing their industries, will the rest of the world remember that China subsidized their AI companies when it comes in the future to a head-to-head competition? Nah.

Um, China was unfairly sanctioned and crippled from buying "free market" GPUs/CPUs. It is fair game for the Chinese government to dump billions into its sector now.