UALink has Nvidia's NVLink in the crosshairs — final specs support up to 1,024 GPUs with 200 GT/s bandwidth

The final UALink specifications are here.

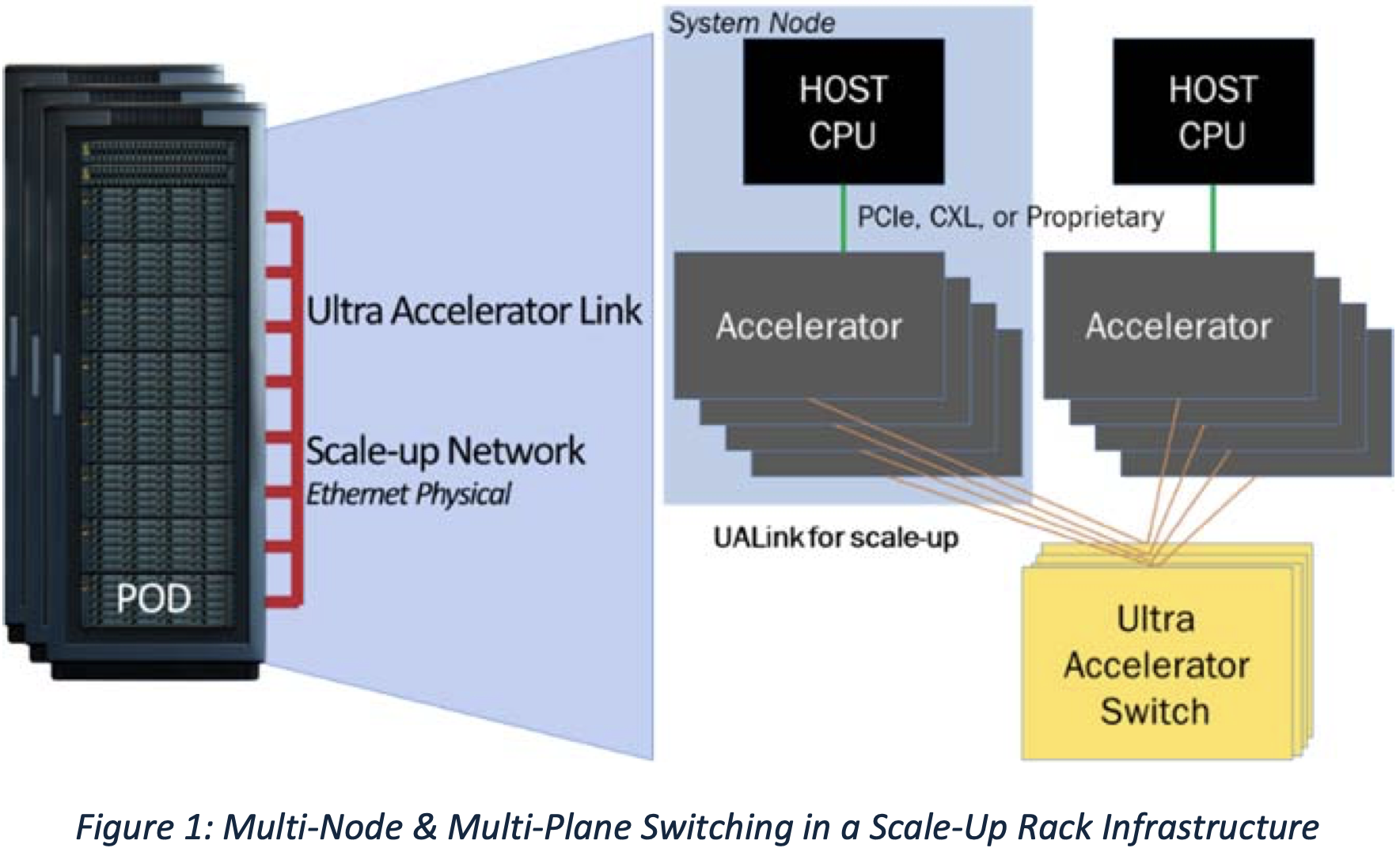

One of UALink's key aims is to enable a competitive connectivity ecosystem for AI accelerators that rivals Nvidia's established NVLink technology, which lets Nvidia build rack-scale, AI-optimized systems such as Blackwell NVL72. With UALink 1.0, companies like AMD, Broadcom, Google, and Intel will be able to build similar systems using an industry-standard technology rather than Nvidia's proprietary one, which is expected to lower costs.

The Ultra Accelerator Link Consortium on Tuesday officially published the final UALink 1.0 specification, which means that members of the group can now proceed with tape-outs of actual chips supporting the new technology. The new interconnect targets AI and HPC accelerators and is supported by a broad set of industry players, including AMD, Apple, Broadcom, and Intel. It promises to become the de facto standard for connecting such hardware.

The UALink 1.0 specification defines a high-speed, low-latency interconnect for accelerators, supporting a maximum bidirectional data rate of 200 GT/s per lane with signaling at 212.5 GT/s to accommodate forward error correction and encoding overhead. UALinks can be configured as x1, x2, or x4, with a four-lane link achieving up to 800 GT/s in both transmit and receive directions.
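The lane arithmetic above can be checked with a few lines of Python. This is a minimal sketch that uses only the figures quoted in the article; the constant and function names are illustrative and do not come from the specification.

```python
# Figures as quoted in the article; all names here are illustrative.
DATA_RATE_GT_PER_LANE = 200.0   # effective data rate per lane, per direction
SIGNALING_GT_PER_LANE = 212.5   # raw signaling rate per lane
LANE_WIDTHS = (1, 2, 4)         # x1, x2, x4 link configurations


def link_data_rate(lanes: int) -> float:
    """Return the effective data rate (GT/s, per direction) of an xN link."""
    if lanes not in LANE_WIDTHS:
        raise ValueError(f"unsupported link width: x{lanes}")
    return lanes * DATA_RATE_GT_PER_LANE


def signaling_overhead() -> float:
    """Fraction of the raw signaling rate not available as data rate."""
    return 1.0 - DATA_RATE_GT_PER_LANE / SIGNALING_GT_PER_LANE


if __name__ == "__main__":
    for width in LANE_WIDTHS:
        print(f"x{width}: {link_data_rate(width):.0f} GT/s per direction")
    print(f"overhead: {signaling_overhead():.1%}")
```

By this arithmetic, an x4 link carries 800 GT/s in each direction, and 1/17 of the 212.5 GT/s signaling rate (about 5.9%) goes to forward error correction and encoding overhead.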

One UALink system supports up to 1,024 accelerators (GPUs or other) connected through UALink Switches that assign one port per accelerator and a 10-bit unique identifier for precise routing. UALink cable lengths are optimized for <4 meters, enabling <1 µs round-trip latency with 64B/640B payloads. The links support deterministic performance across one to four racks.
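The 1,024-accelerator limit follows directly from the 10-bit identifier, since 2^10 = 1,024. The sketch below illustrates that relationship with a toy switch table. The class and method names are hypothetical, and the real switch behavior is defined by the specification, not by this code.

```python
ID_BITS = 10                       # identifier width quoted in the article
MAX_ACCELERATORS = 2 ** ID_BITS    # 1,024 distinct identifiers


class SwitchSketch:
    """Toy routing table: one port per accelerator ID (hypothetical model)."""

    def __init__(self) -> None:
        self._port_by_id: dict[int, int] = {}

    def attach(self, accel_id: int, port: int) -> None:
        """Bind an accelerator ID to a port, rejecting out-of-range IDs."""
        if not 0 <= accel_id < MAX_ACCELERATORS:
            raise ValueError(f"ID {accel_id} does not fit in {ID_BITS} bits")
        if port in self._port_by_id.values():
            raise ValueError(f"port {port} is already assigned")
        self._port_by_id[accel_id] = port

    def route(self, dest_id: int) -> int:
        """Return the port that leads to the destination accelerator."""
        return self._port_by_id[dest_id]
```

For example, attaching ID 1023 succeeds, while ID 1024 is rejected because it needs an eleventh bit.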

The UALink protocol stack includes four hardware-optimized layers: physical, data link, transaction, and protocol. The Physical Layer uses standard Ethernet components (e.g., 200GBASE-KR1/CR1) and includes modifications for reduced latency with FEC. The Data Link Layer packages 64-byte flits from the transaction layer into 640-byte units, applying CRC and optional retry logic. This layer also handles inter-device messaging and supports UART-style firmware communication.
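To make the Data Link Layer description more concrete, here is a rough Python sketch of grouping 64-byte flits into 640-byte units and checking them with a CRC. It rests on several assumptions that the article does not confirm: that ten flits fill a unit with no in-unit header, that short units are zero-padded, that the CRC is CRC-32, and that the CRC travels alongside the unit instead of inside it. The actual on-wire layout is defined by the specification.

```python
import zlib

FLIT_BYTES = 64     # transaction-layer flit size quoted in the article
UNIT_BYTES = 640    # data-link unit size quoted in the article
FLITS_PER_UNIT = UNIT_BYTES // FLIT_BYTES  # 10, ASSUMING no in-unit header


def pack_units(flits: list[bytes]) -> list[tuple[bytes, int]]:
    """Group 64-byte flits into 640-byte units, each paired with a CRC-32."""
    for flit in flits:
        if len(flit) != FLIT_BYTES:
            raise ValueError("every flit must be exactly 64 bytes")
    units = []
    for start in range(0, len(flits), FLITS_PER_UNIT):
        body = b"".join(flits[start:start + FLITS_PER_UNIT])
        body = body.ljust(UNIT_BYTES, b"\x00")   # ASSUMED zero padding
        units.append((body, zlib.crc32(body)))   # ASSUMED CRC-32, kept alongside
    return units


def needs_retry(body: bytes, crc: int) -> bool:
    """Receiver-side check: a CRC mismatch would trigger the optional retry."""
    return zlib.crc32(body) != crc
```

A receiver in this model recomputes the CRC over the 640 bytes it got and asks for a retransmission only when the values differ.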

The Transaction Layer implements compressed addressing, streamlining data transfer with up to 95% protocol efficiency under real workloads. It also enables direct memory operations such as read, write, and atomic transactions between accelerators, preserving ordering across local and remote memory spaces.

As it's aimed at modern data centers, the UALink protocol supports integrated security and management capabilities. For example, UALinkSec provides hardware-level encryption and authentication of all traffic, protecting against physical tampering and supporting Confidential Computing through tenant-controlled Trusted Execution Environments (such as AMD SEV, Arm CCA, and Intel TDX). The specification allows Virtual Pod partitioning, where groups of accelerators are isolated within a single Pod by switch-level configuration to enable concurrent multi-tenant workloads on a shared infrastructure.
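Virtual Pod partitioning can be pictured as a switch-side table that decides which accelerators may talk to each other. The following is a simplified sketch under that reading of the article; `PodPartition` and its methods are hypothetical names, not part of any UALink API.

```python
class PodPartition:
    """Toy model of switch-level Virtual Pod isolation (hypothetical)."""

    def __init__(self) -> None:
        # Maps accelerator ID -> Virtual Pod name.
        self._vpod_of: dict[int, str] = {}

    def assign(self, accel_id: int, vpod: str) -> None:
        """Place an accelerator in a Virtual Pod (replaces any prior assignment)."""
        self._vpod_of[accel_id] = vpod

    def may_communicate(self, src_id: int, dst_id: int) -> bool:
        """Allow traffic only between accelerators in the same Virtual Pod."""
        src_pod = self._vpod_of.get(src_id)
        dst_pod = self._vpod_of.get(dst_id)
        return src_pod is not None and src_pod == dst_pod


if __name__ == "__main__":
    pods = PodPartition()
    pods.assign(0, "tenant-a")
    pods.assign(1, "tenant-a")
    pods.assign(2, "tenant-b")
    print(pods.may_communicate(0, 1))  # same Virtual Pod
    print(pods.may_communicate(0, 2))  # different Virtual Pods
```

In this model, two tenants share one physical Pod, but traffic between their accelerators is refused at the switch, and unassigned accelerators cannot reach anyone.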

UALink Pods will be managed via dedicated control software and firmware agents using standard interfaces like PCIe and Ethernet. Full manageability is supported through REST APIs, telemetry, workload control, and fault isolation.

"With the release of the UALink 200G 1.0 specification, the UALink Consortium's member companies are actively building an open ecosystem for scale-up accelerator connectivity," said Peter Onufryk, UALink Consortium President. "We are excited to witness the variety of solutions that will soon be entering the market and enabling future AI applications."

Nvidia currently dominates the AI accelerator market, thanks to its robust ecosystem and scale-up solutions. It is shipping Blackwell NVL72 racks that use NVLink to connect up to 72 GPUs in a single rack, with inter-rack pods allowing for up to 576 Blackwell B200 GPUs in a single pod. With its upcoming Vera Rubin platform next year, Nvidia intends to scale up to 144 GPUs in a single rack, while Rubin Ultra in 2027 will scale up to 576 GPUs in a single rack.


Anton Shilov is a contributing writer at Tom’s Hardware. Over the past couple of decades, he has covered everything from CPUs and GPUs to supercomputers and from modern process technologies and latest fab tools to high-tech industry trends.


bit_user: It'll be interesting to see just how long Nvidia keeps its lead in AI. I think others don't need to beat them on performance, but just come close, offer similar scalability, and beat them on perf/$. With UALink, that's one less point on the board in Nvidia's favor.

atomicWAR: It'd be nice to see someone, anyone, humble Nvidia a little bit, as their greed knows no bounds. Hopefully, with more competition, Nvidia will need to re-evaluate its pricing in both AI and gaming workloads. The AI bubble won't last forever, and Nvidia has to know that.

bit_user said: It'll be interesting to see just how long Nvidia keeps its lead in AI. I think others don't need to beat them on performance, but just come close, offer similar scalability, and beat them on perf/$. With UALink, that's one less point on the board in Nvidia's favor.

This has been on my mind a lot as of late. Nvidia has had so much success over the years that I feel like they got overly confident. Their prices sure seem to indicate as much. I suspect someone will soon challenge them in AI in a way that costs them market share. But I could be wrong. Intel led in the CPU server/consumer space for decades before their foundry issues tripped them up at the same time AMD really began to challenge them again. After AMD's x64 coup d'etat moment that helped sink Itanium with the introduction of Opteron CPUs (Athlon 64 CPUs for consumers), Bulldozer -> Excavator did a lot of damage to their marketshare/mindshare. Point being, there's no telling how long Nvidia might hold their king-of-the-hill position. It could be a couple years or a couple decades. Either way, they need to be humbled in a big way soon for the good of consumers. Their pricing is getting a *little* <cough cough> out of control.

atomicWAR said: Either way they need to be humbled in a big way soon for the good of consumers. Their pricing is getting a *little* <cough cough> out of control.

Yeah, I wouldn’t mind Nvidia having another DeepSeek moment. Consumers would definitely benefit from that.

bit_user

atomicWAR said: Point being no telling how long Nvidia might hold their king of the hill position. It could be a couple years or a couple decades. Either way they need to be humbled in a big way soon for the good of consumers. Their pricing is getting a *little* <cough cough> out of control.

Nvidia needs to move beyond the general-purpose compute architectures they've been using for AI. How quickly and successfully they do that will help determine their ability to stay on top. They have their NVDLA NPUs for doing inference workloads in their embedded SoCs. So, they do "get it".

They're also becoming heavily power/cooling-limited. That's adding to the cost of their solutions and could add more delays (there's some suggestion it was part of Blackwell's holdups). So, we might be nearing a point where they stumble in the face of a more efficient dataflow architecture, like most of the other NPUs out there.

One thing I can say with a fair degree of certainty: it doesn't seem like AMD will be the one to usurp Nvidia's dominance in AI. AMD is making its usual mistake of trying to beat Nvidia at its own game and they're fumbling the ball quite badly.

https://semianalysis.com/2024/12/22/mi300x-vs-h100-vs-h200-benchmark-part-1-training/

Maybe UDNA will be a game changer. I wouldn't bet on it, but we'll see.