20-Core Apple M1 Ultra Supercharges Mac, 64-Core GPU Rivals RTX 3090

Apple goes for the jugular with M1 Ultra

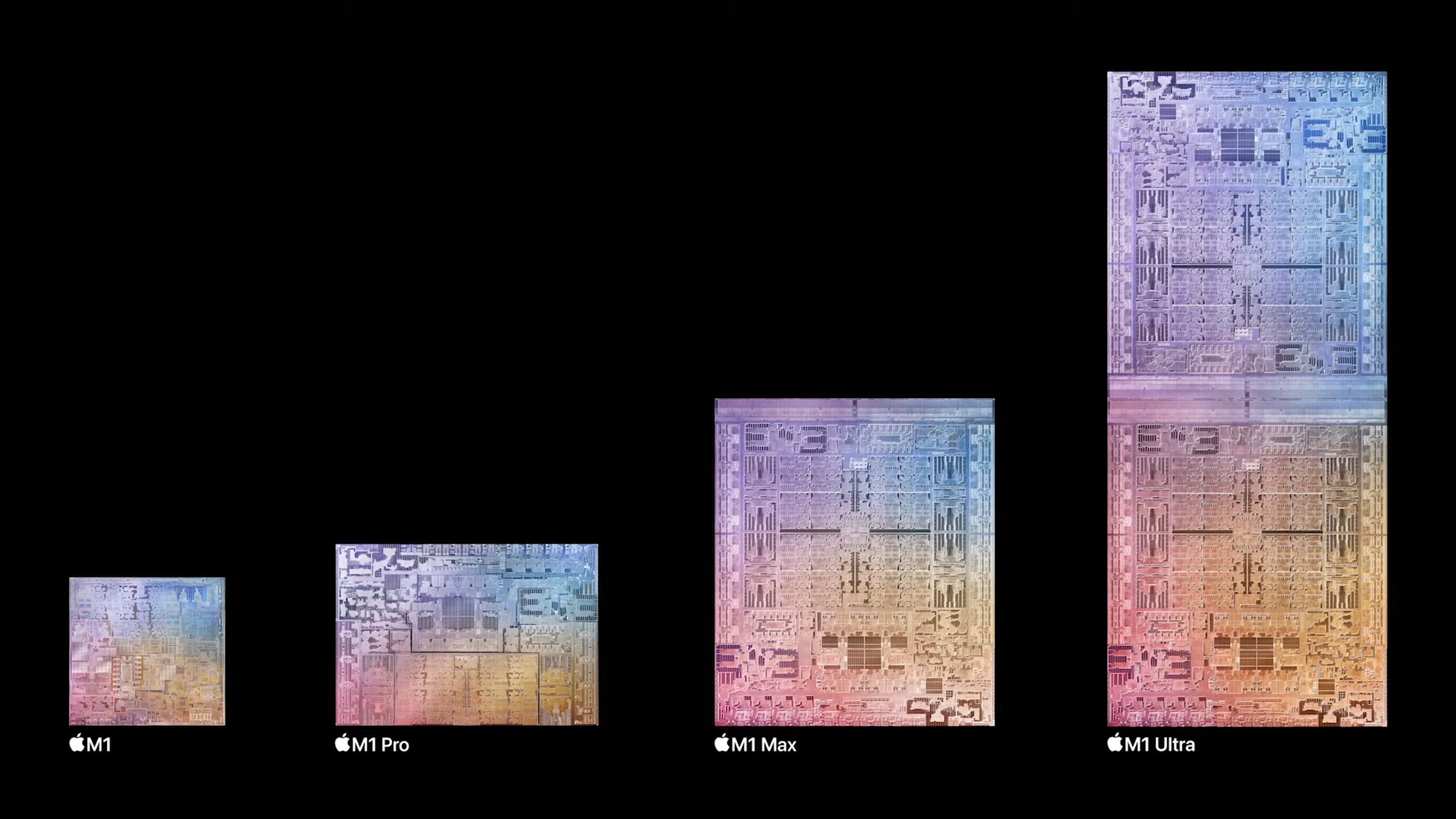

Apple is ready to take Mac performance to the next level with Apple Silicon, and the end result was hinted at late last year. While the general consensus was that Apple would introduce an all-new M2 chip to leap ahead of the M1, M1 Pro and M1 Max, what we got was something that is not only more powerful but also quite familiar: M1 Ultra.

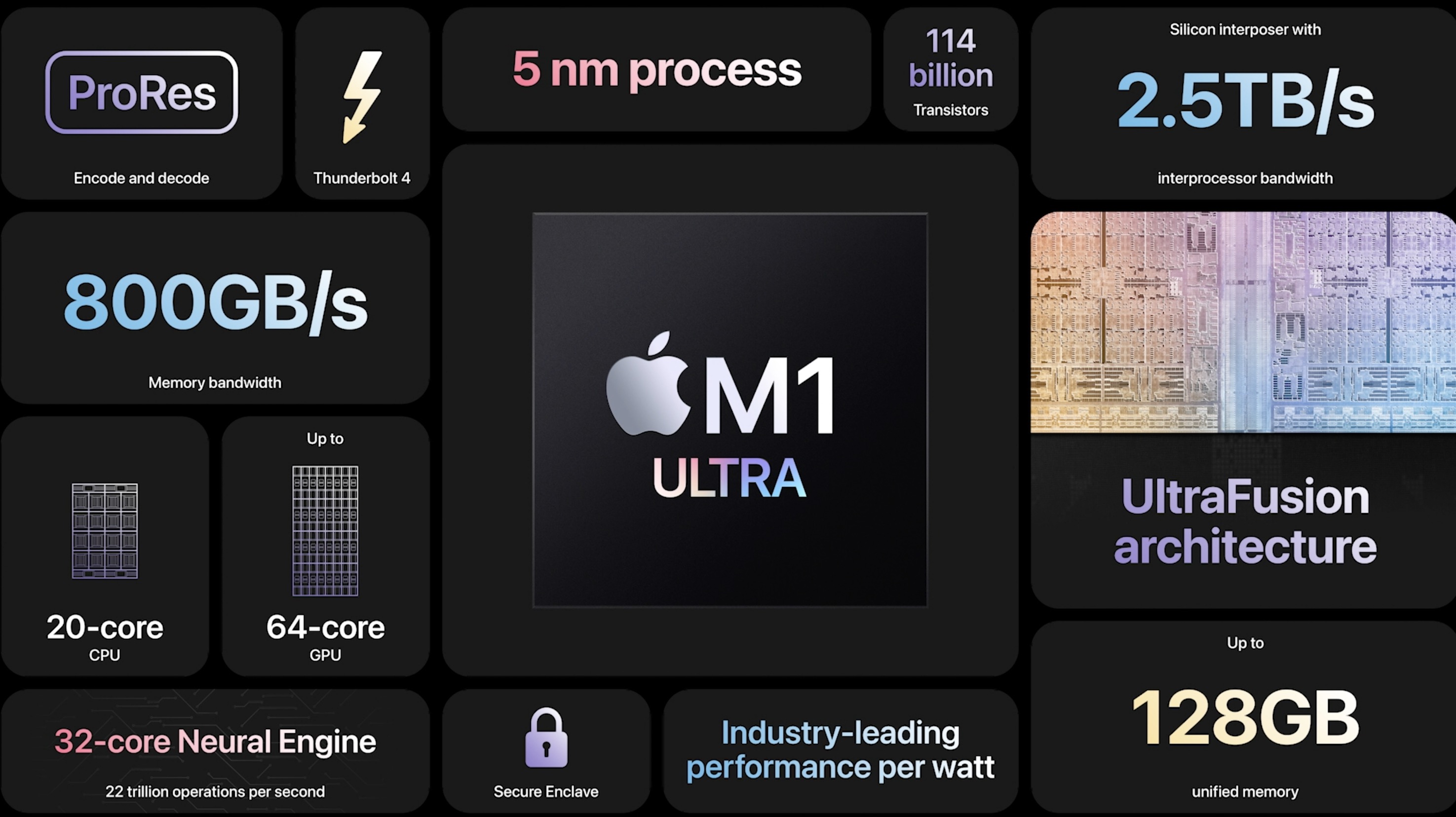

The M1 Ultra is Apple's latest flagship SoC that will make its way into the all-new Mac Studio desktop. When Apple introduced the M1 Max last year, it didn't tell us that it had a hidden feature: a silicon interposer that allows two M1 Max SoCs to be joined together, delivering interprocessor bandwidth of 2.5 TB/sec.

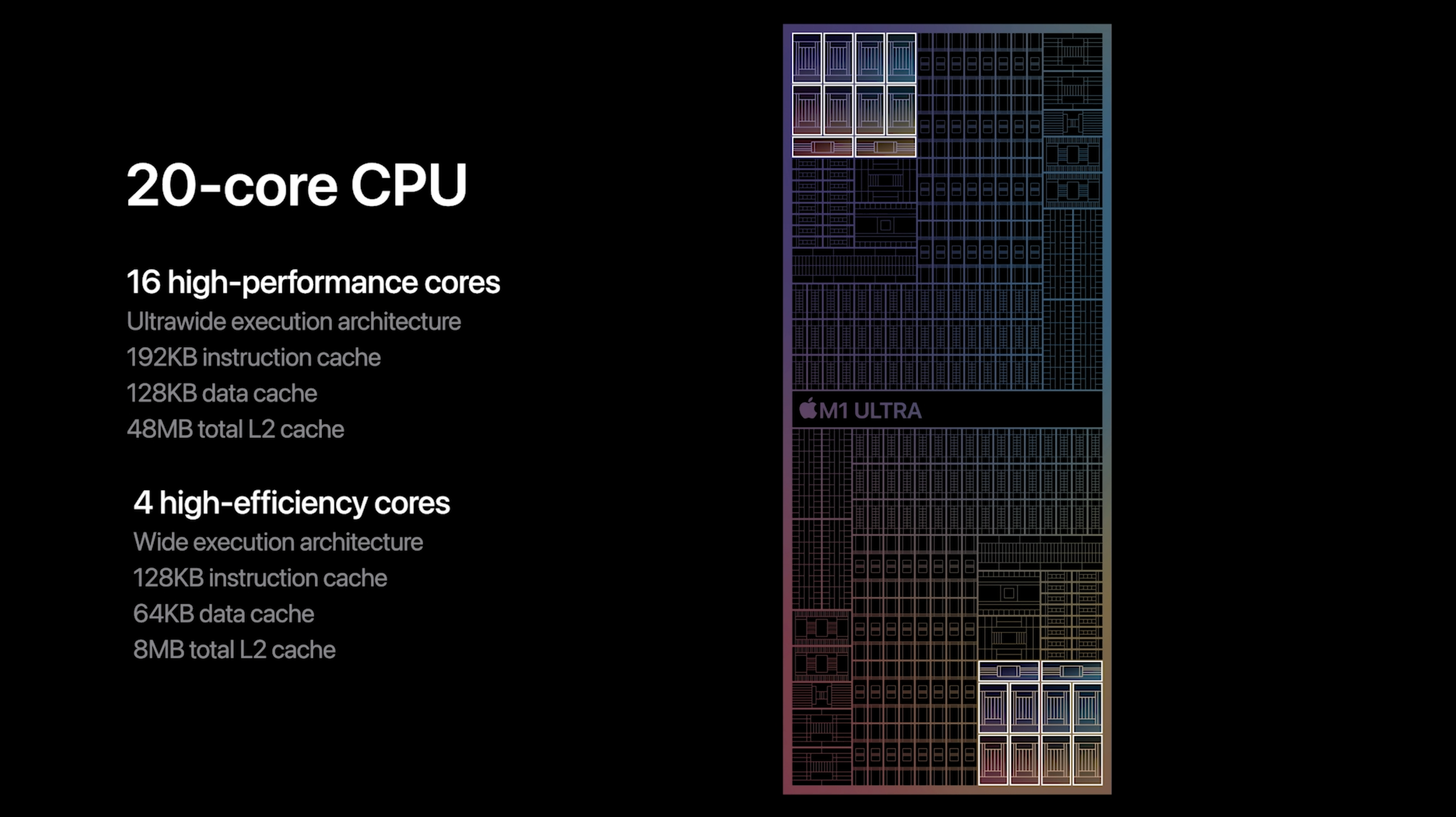

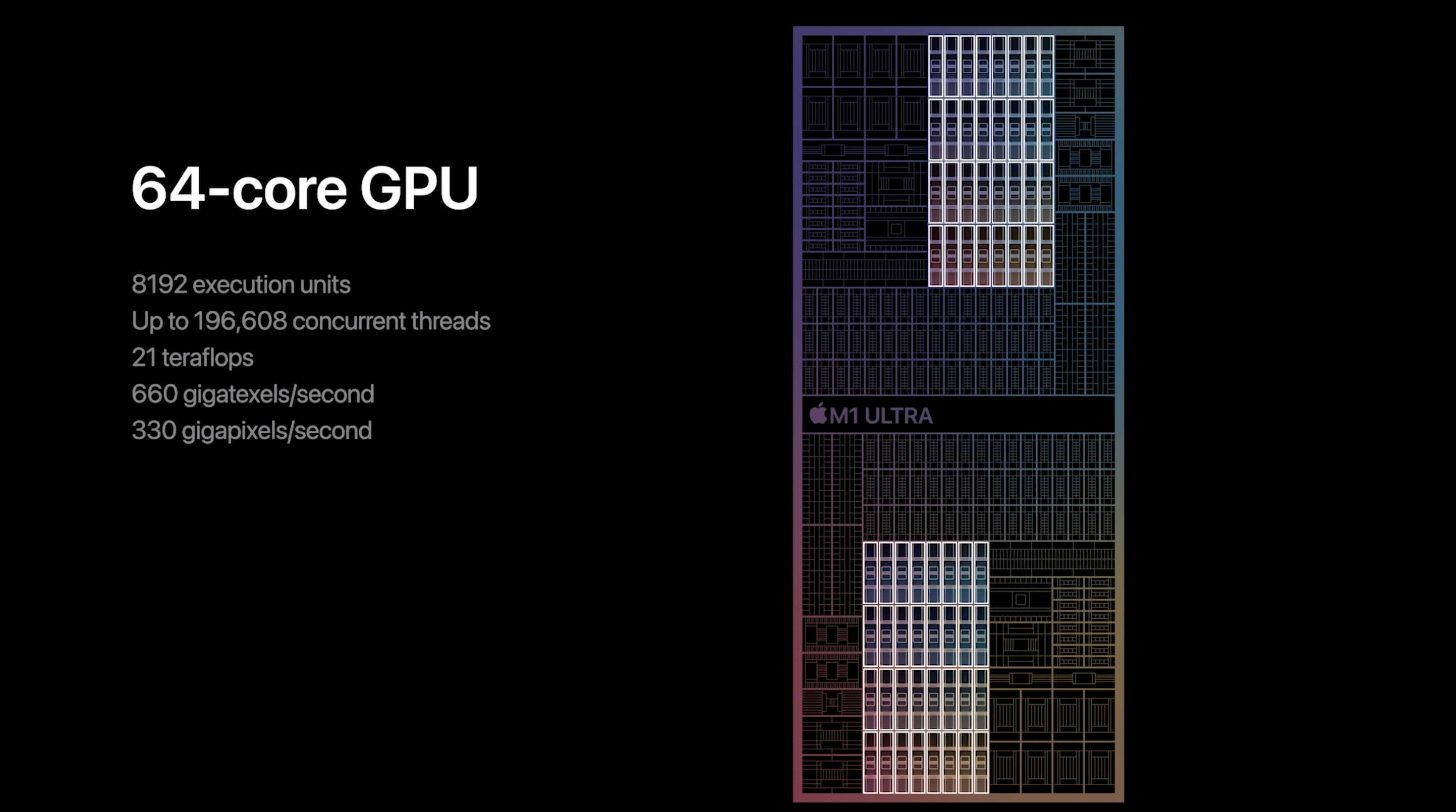

Apple calls this UltraFusion architecture, and it promises performance that was never possible on Intel-based Macs, at incredibly low power draw. The result is an effective doubling of the maximum specs of the already powerful M1 Max. That means that you'll get up to 20 CPU cores (16 high-performance cores and four efficiency cores), up to 64 GPU cores and up to 128GB of unified memory. Total memory bandwidth comes in at a staggering 800 GB/sec. Oh, and the total transistor count jumps to 114 billion, which Apple says is the most ever in a personal computer chip.
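The doubling is easy to check with a few lines of arithmetic. The sketch below assumes the M1 Max maximums from Apple's published specifications (10 CPU cores split 8+2, 32 GPU cores, 64GB of memory, 400 GB/sec, 57 billion transistors), which are not stated in this article. The `M1_MAX` dictionary and the `fuse_two_dies` helper are hypothetical names used only for illustration.

```python
# M1 Max maximum configuration.
# Assumption: figures taken from Apple's published M1 Max specs, not from this article.
M1_MAX = {
    "cpu_cores": 10,
    "performance_cores": 8,
    "efficiency_cores": 2,
    "gpu_cores": 32,
    "unified_memory_gb": 64,
    "memory_bandwidth_gb_s": 400,
    "transistors_billion": 57,
}


def fuse_two_dies(die):
    """Double every per-die figure, as if two identical dies were joined."""
    return {name: 2 * value for name, value in die.items()}


m1_ultra = fuse_two_dies(M1_MAX)
for name, value in m1_ultra.items():
    print(f"{name}: {value}")
```

Every doubled value lines up with the M1 Ultra figures quoted above: 20 CPU cores, 64 GPU cores, 128GB, 800 GB/sec and 114 billion transistors.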

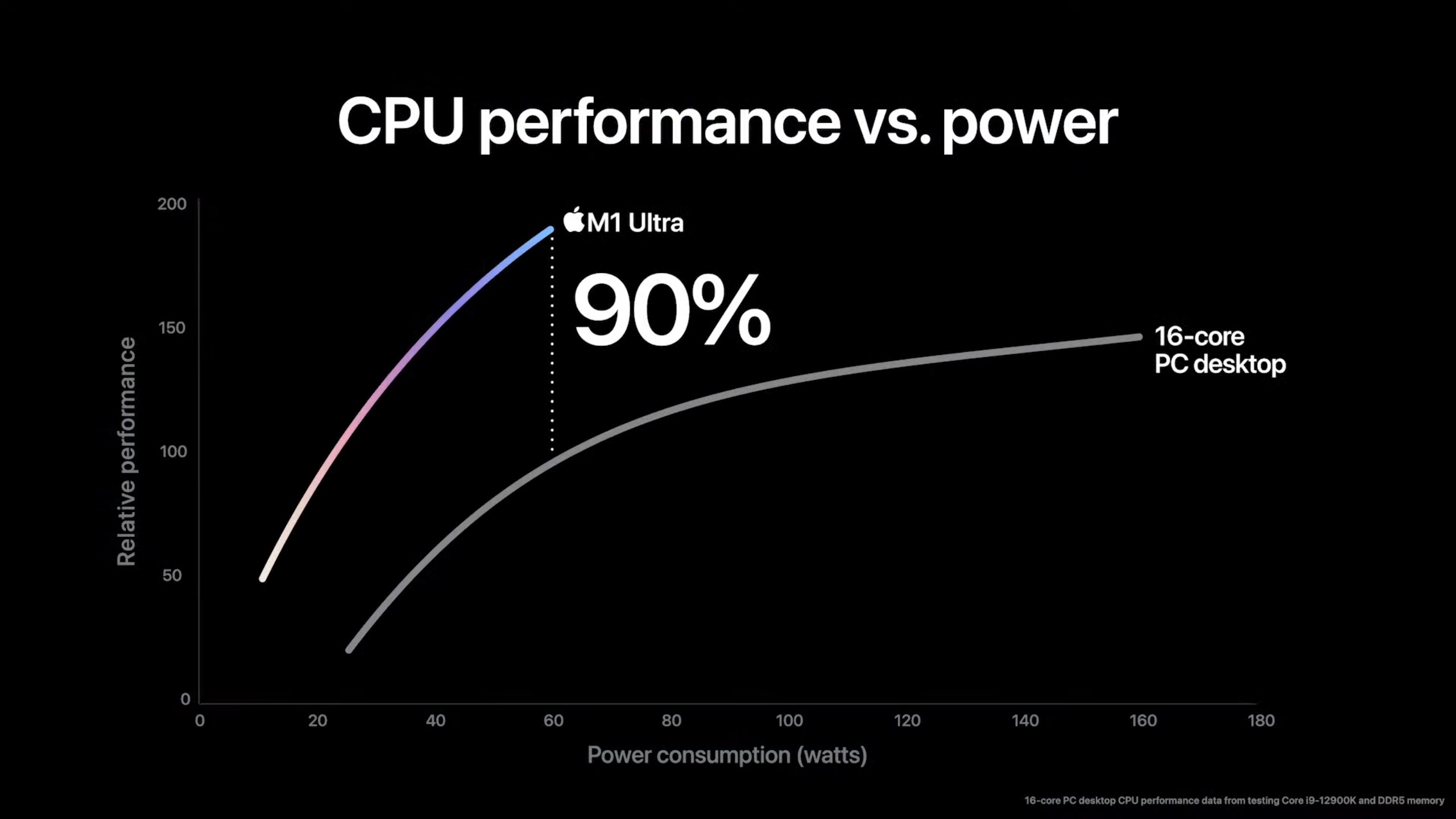

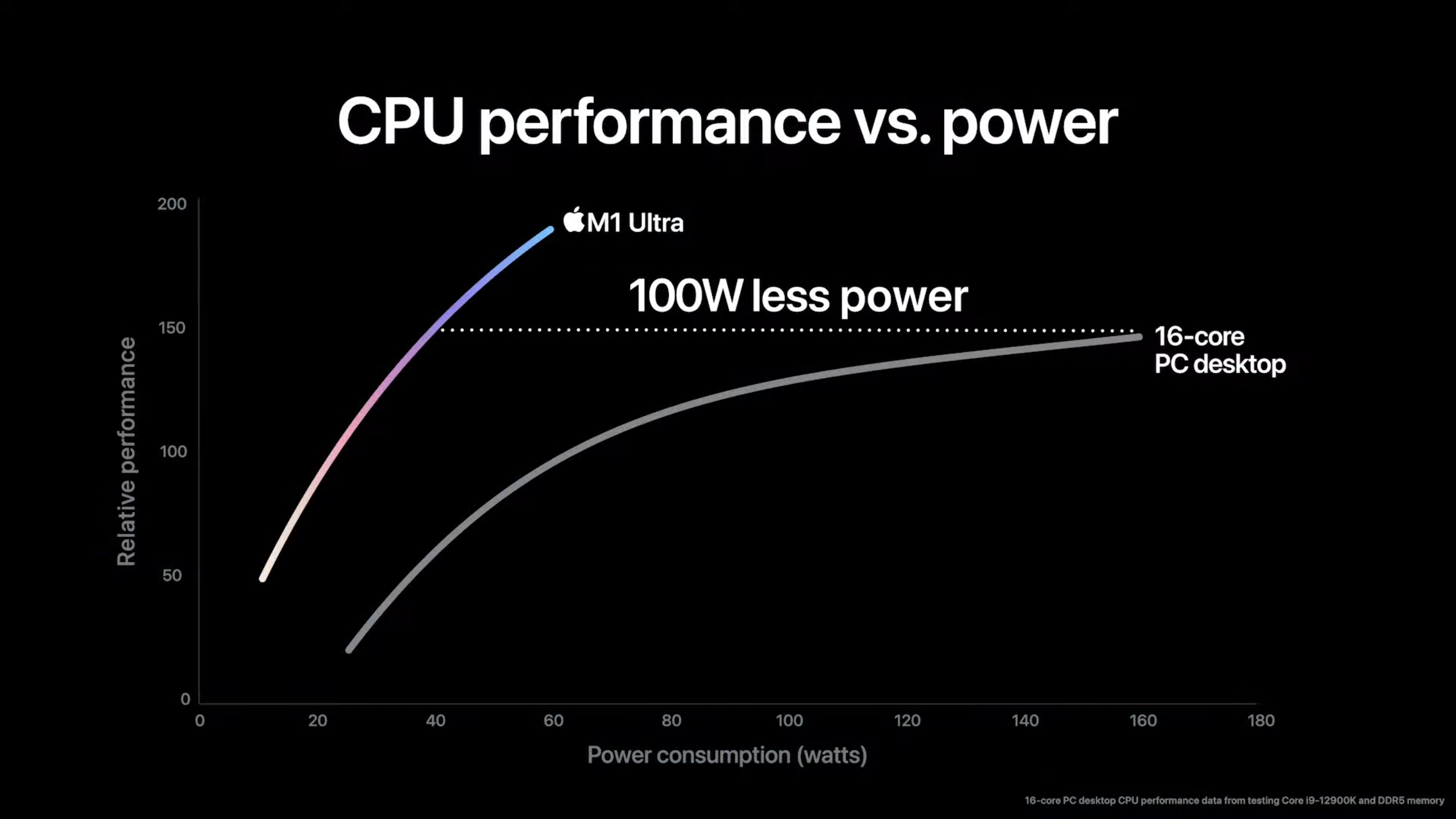

All of this would be for naught if we didn't have an idea of how M1 Ultra will perform against modern processors from AMD and Intel. Regarding relative performance, Apple says that the M1 Ultra can operate at the same performance level as the Alder Lake-based Core i5-12600K (paired with DDR5 memory) while using 65 percent less power. When running full-bore, the M1 Ultra allegedly delivers 90 percent higher multi-core performance compared to the flagship Core i9-12900K while consuming just a third of the power.
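Taken at face value, those two claims translate into performance-per-watt ratios. The sketch below treats the Intel chip as 1.0 on both axes and divides relative performance by relative power; the helper name is hypothetical, and the inputs are Apple's claimed figures as reported here, not measurements.

```python
def perf_per_watt_advantage(relative_performance, relative_power):
    """Ratio of performance per watt versus a baseline chip set to 1.0 / 1.0."""
    return relative_performance / relative_power


# Claim 1: same performance as the Core i5-12600K at 65 percent less power.
vs_12600k = perf_per_watt_advantage(1.0, 1.0 - 0.65)

# Claim 2: 90 percent higher multi-core performance than the Core i9-12900K
# at a third of the power.
vs_12900k = perf_per_watt_advantage(1.9, 1.0 / 3.0)

print(f"vs. Core i5-12600K: {vs_12600k:.2f}x performance per watt")
print(f"vs. Core i9-12900K: {vs_12900k:.2f}x performance per watt")
```

If the claims hold, that works out to roughly 2.9x and 5.7x the performance per watt, respectively.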

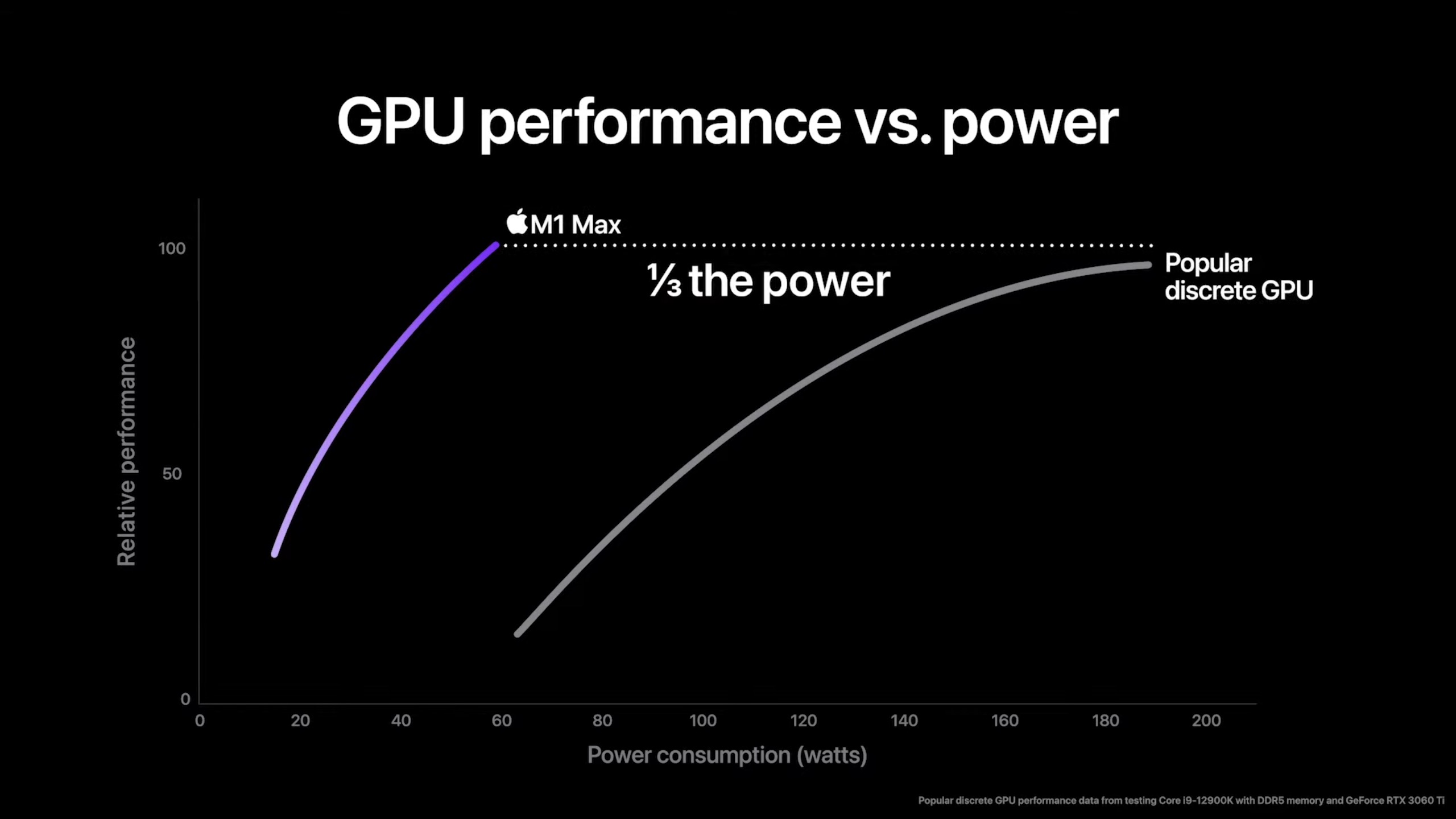

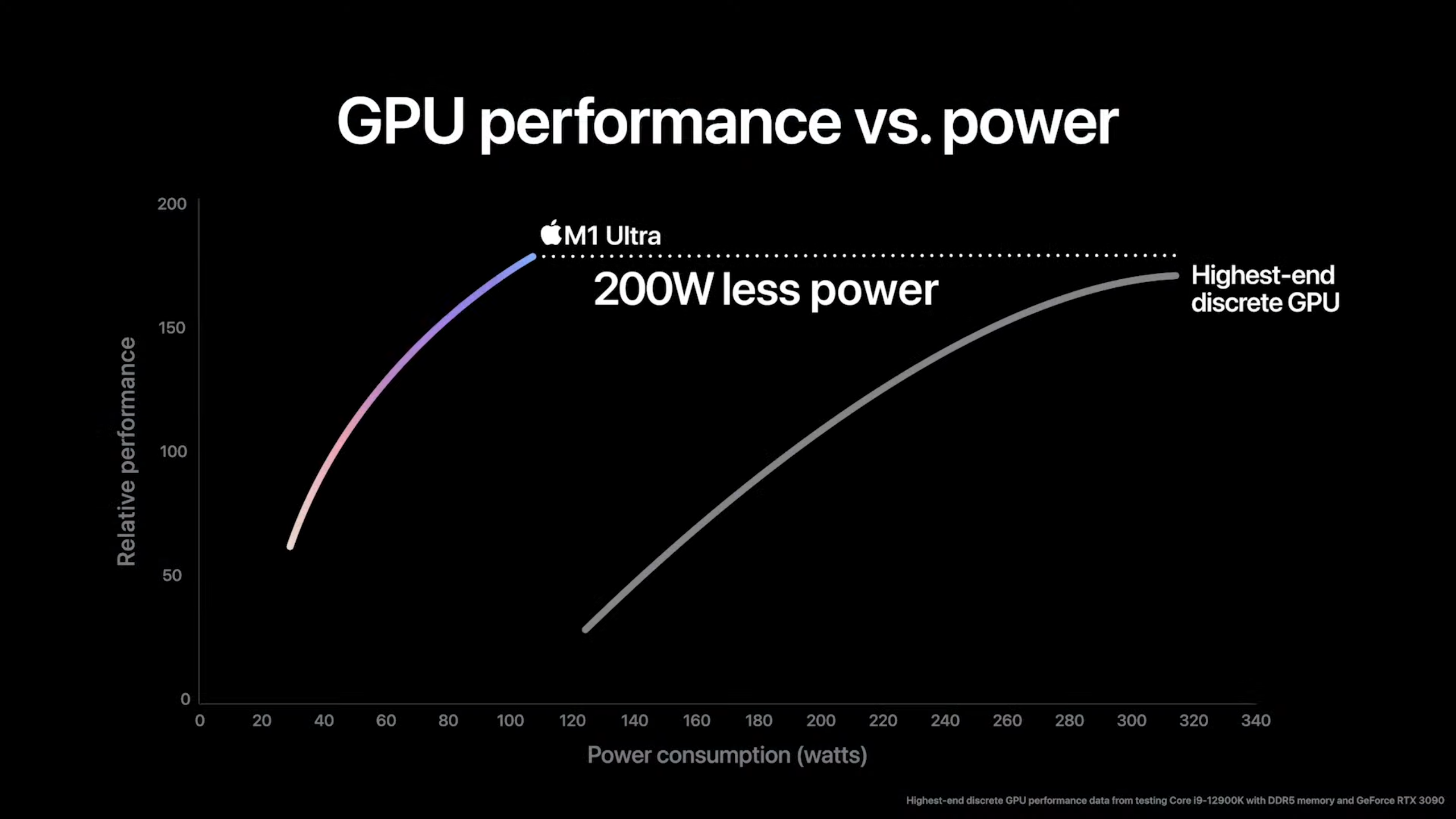

When it comes to the 64-core GPU on the M1 Ultra (8,192 execution units, 21 TFLOPS), Apple says that it offers performance on par with the Nvidia GeForce RTX 3090 while consuming 200 fewer watts. These performance figures for the CPU and GPU are quite spectacular, but we wish that we had more information on which benchmarks Apple is using to compare against more PC-centric hardware. That would allow us to determine how much of this is marketing fluff and how much is raw computing power.
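The quoted GPU figures can be sanity-checked with simple arithmetic. The sketch below assumes each execution unit completes one fused multiply-add per cycle, counted as two floating-point operations; Apple does not state this in the material covered here, so the implied clock speed is only a rough estimate.

```python
EXECUTION_UNITS = 8192   # from the article
GPU_CORES = 64           # from the article
PEAK_TFLOPS = 21.0       # from the article

# Assumption: one fused multiply-add per unit per cycle, counted as 2 FLOPs.
FLOPS_PER_UNIT_PER_CYCLE = 2

units_per_core = EXECUTION_UNITS // GPU_CORES
implied_clock_ghz = (
    PEAK_TFLOPS * 1e12 / (EXECUTION_UNITS * FLOPS_PER_UNIT_PER_CYCLE) / 1e9
)

print(f"Execution units per GPU core: {units_per_core}")
print(f"Implied GPU clock: {implied_clock_ghz:.2f} GHz")
```

Under that assumption the numbers imply 128 execution units per core running at about 1.28 GHz. This is a theoretical peak only; it says nothing about sustained performance in real workloads, which is why the missing benchmark details matter.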

Rounding things out is a 32-core Neural Engine that can pump out 22 trillion operations per second, while media engine capabilities also double compared to the previous flagship M1 Max. The company says that the M1 Ultra-powered Mac Studio can play back up to 18 streams of 8K ProRes 422 video, which is unprecedented.

And so you don't think that all of this power will go to waste, Apple says that the APIs built into macOS Monterey allow app developers to take full advantage of the CPU, GPU, Neural Engine and available memory bandwidth.

The first products to ship with M1 Ultra, including the Mac Studio, are available to preorder today and ship on March 18th.

"M1 Ultra is another game-changer for Apple silicon that once again will shock the PC industry. By connecting two M1 Max die with our UltraFusion packaging architecture, we’re able to scale Apple silicon to unprecedented new heights," said Johny Srouji, Apple’s senior vice president of Hardware Technologies. "With its powerful CPU, massive GPU, incredible Neural Engine, ProRes hardware acceleration, and huge amount of unified memory, M1 Ultra completes the M1 family as the world’s most powerful and capable chip for a personal computer."

Brandon Hill is a senior editor at Tom's Hardware. He has written about PC and Mac tech since the late 1990s with bylines at AnandTech, DailyTech, and Hot Hardware. When he is not consuming copious amounts of tech news, he can be found enjoying the NC mountains or the beach with his wife and two sons.

-

Akindabigdeal Articles like this are why apple has a lawsuit against them for misrepresenting the speed of the m1 processors. They never live up to the hype. Apple products are never worth the cost. -

mdd1963 Intel and/or AMD need to hire these CPU designers away from whomever, if any of this hype is true...! -

hotaru.hino I'm inclined to believe Apple that the M1 Ultra's GPU can achieve RTX 3090 performance if we had more data to compare it with. Apple claims they used "Industry standard benchmarks", but failed to mention which ones specifically.

Looking at Notebookcheck's entry on the M1 Max, it certainly beats out the competition... in GFXBench. No 3DMark, no SPECviewperf, or anything else that may be considered "Industry standard." Plus I looked back on this a while ago and GFXBench is Metal for Apple in all of its tests, but only some of the tests have DirectX 12 or Vulkan, rendering the comparison even more moot.

Plus the whole "perf/watt" chart makes no sense to me if taken at face value. Why should I care about anything other than the performance at the part's maximum performance rating? If I wanted to consume less power, I'd look for a part specifically designed to consume less power. -

jacob249358 Idc how good it is as long as it comes with a ridiculous premium, and mac os. Make these good power efficient cpus, pair them with NVIDIA laptop gpus and make a great gaming laptop with windows 10. -

JamesJones44 jacob249358 said: Idc how good it is as long as it comes with a ridiculous premium, and mac os. Make these good power efficient cpus, pair them with NVIDIA laptop gpus and make a great gaming laptop with windows 10.

Are you that scared of macOS and other Unix/Linux based operating systems? These days the OS's are all basically the same with just a different GUI slapped on top from a feature point of view. Other than games, Windows really doesn't offer anything over any of the other operating systems. I haven't seen a productivity/developer tool made for Windows only in about a decade. -

gargoylenest either Apple is greatly inflating its numbers and prowess, or the other specialized company (intel, nvidia, amd) with lot of experience and years of upgrading and investing in their chips are underperforming a lot or especially incompetent...I tend to believe that Apple sell clouds to their followers. from afar, they look huge... -

thisisaname Kind of hard to say how good it is when they do not giving any information on how much power it uses. -

hotaru.hino

thisisaname said: Kind of hard to say how good it is when they do not giving any information on how much power it uses.

It's in the x-axis of this chart:

JamesJones44 said: Are you that scared of macOS and other Unix/Linux based operating systems? These days the OS's are all basically the same with just a different GUI slapped on top from a feature point of view. Other than games, Windows really doesn't offer anything over any of the other operating systems. I haven't seen a productivity/developer tool made for Windows only in about a decade.

I would argue on the Linux side, it depends. For one thing, I really don't like most Linux based OS's default app management and distribution system. I tried installing a specific version of Python and it took me like 6-7 steps to get it to a point where I could finally type in "python" in the command line and get going. On Windows? Download installer, install, done.

But if you strip it down to the kernel level stuff, then yeah, I'd agree that for the most part, Windows and UNIX have enough similarities that arguing anything is purely academic. -

cryoburner Even Intel would be ashamed by the amount of nonsense in those slides.

"Our integrated graphics are faster than a 3090 while drawing 100 watts!"

"Oh wow! What are they faster at?"

"You know... things. <_< "

No doubt they could be faster at certain workloads that utilize specific baked-in hardware features, like if their chip has hardware support for encoding a particular video format, while another does not have the same level of support for it, and must utilize general-purpose hardware to perform the task. But I really doubt we're going to see overall graphics performance anywhere remotely close to 3090 in that chip.