Intel CEO on Nvidia Grace CPUs: They Are Responding to Us

Pat Gelsinger not afraid of Nvidia's Grace.

Earlier this week, Nvidia announced Grace, its first Arm-based processor for AI and HPC servers. While the move was expected by many market observers, the formal debut negatively affected AMD and Intel stock prices. Nvidia is without a doubt a formidable competitor, but Intel believes that it does not pose an imminent threat to its datacenter business.

"We announced our Ice Lake [processors for servers] last week with an extraordinarily positive response," said Pat Gelsinger, the new CEO of Intel, in an interview with Fortune. "And in Ice Lake, we have extraordinary expansions in the A.I. capabilities. [Nvidia is] responding to us. It is not us responding to them. Clearly this idea of CPUs that are AI-enhanced is the domain where Intel is a dramatic leader."

Nvidia's Grace processor for AI and HPC machines promises to be more than 10 times faster in AI and select HPC workloads than today's x86 processors, but it will only come out in early 2023. Performance projections like these always look formidable, but one has to keep in mind that the competition (AMD and Intel) is not standing still.

Intel's latest Xeon processors integrate AI-accelerating technologies under the umbrella name DL Boost, which currently includes instruction set extensions such as AVX512_VNNI (Cascade Lake and Ice Lake) and AVX512_BF16 (Cooper Lake only). Both have proven quite competitive in general (at least according to tests conducted by Intel), and with optimized algorithms they can make Intel's CPUs outperform Nvidia's GPUs by up to 15 times.

Furthermore, because Intel's Xeon CPUs are so widespread, they are widely used for inference workloads, and ISVs optimize their engines for these processors. In brief, Intel's current Xeon Scalable products already have AI enhancements, and their successors will naturally expand those capabilities.

In general, Arm, AMD, Intel, Nvidia, and a host of other companies are working hard to improve the performance of their processors (CPUs, GPUs, IPUs, VPUs, etc.) in AI and/or HPC workloads as demand for artificial intelligence and supercomputing is increasing rapidly. As a result, there will be more progress in AI and HPC in the next few years than there was in the previous 30. Exciting times are coming.

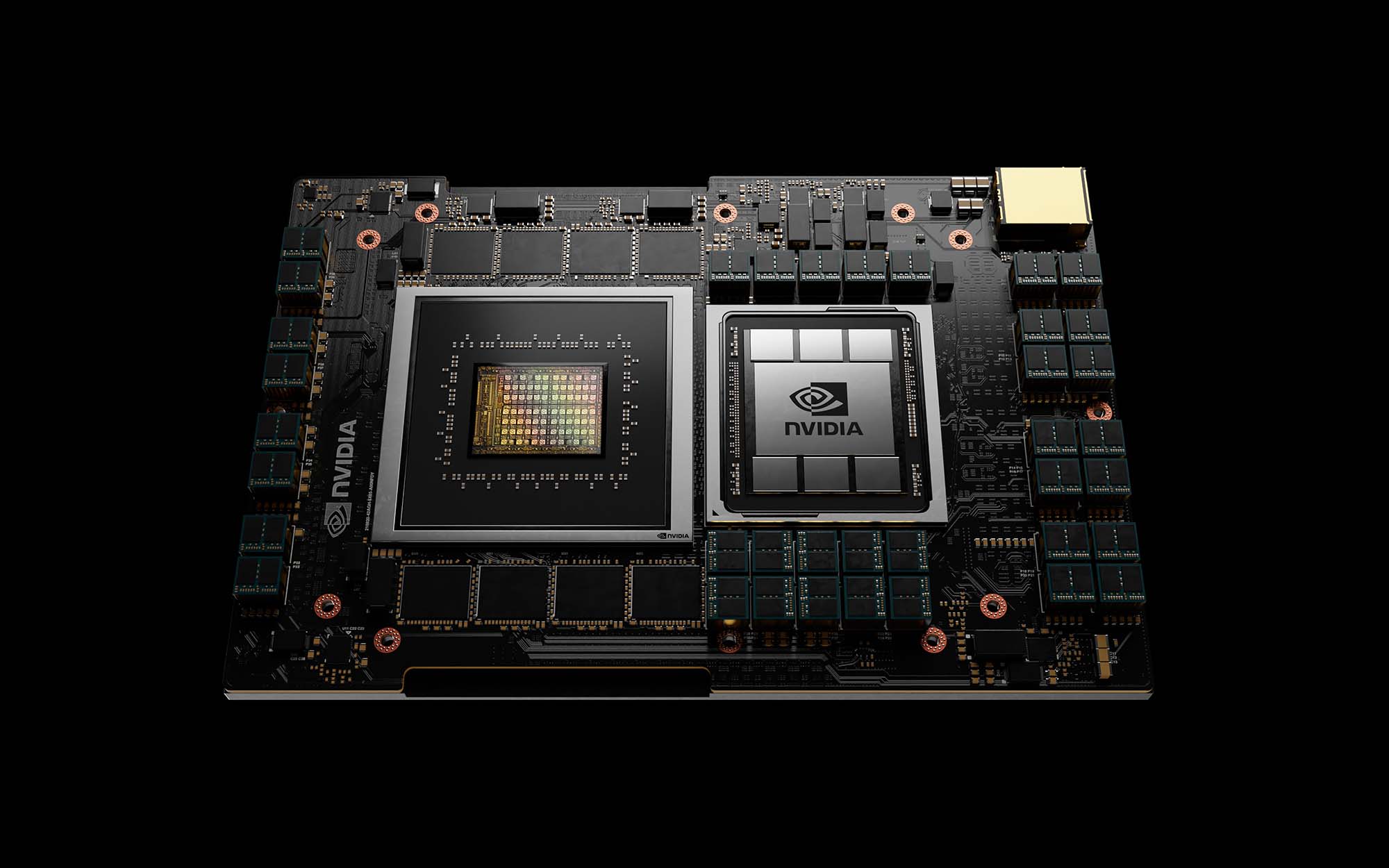

It is worth noting that Nvidia currently shows its Grace CPU sitting next to its GPU, not separately. So, while Grace processors themselves may feature numerous AI boosters, Nvidia will sell them together with its compute-optimized datacenter GPUs, offering a platform with impressive capabilities for AI and HPC workloads.

Meanwhile, Intel will have a platform like this as early as 2022. Intel's 4th Generation Xeon Scalable 'Sapphire Rapids' processor, with up to 56 cores and numerous enhancements for various workloads, will be accompanied by the company's 100-billion-transistor Ponte Vecchio compute GPU in the Aurora supercomputer next year. The GPU seems to deliver about 1 PFLOPS (1,000 TFLOPS) of FP16 performance, which is roughly 3.2 times the Nvidia A100's 312 FP16 TFLOPS.

Anton Shilov is a contributing writer at Tom’s Hardware. Over the past couple of decades, he has covered everything from CPUs and GPUs to supercomputers and from modern process technologies and latest fab tools to high-tech industry trends.