Almost 20 TB (Or $50,000) Of SSD DC S3700 Drives, Benchmarked

We've already reviewed Intel's SSD DC S3700 and determined it to be a fast, consistent performer. But what happens when we take two dozen of them (about $50,000 worth) and create a massive RAID 0 array? Come along as we play around in storage heaven.

Results: 128 KB Sequential Performance Scaling In RAID 0

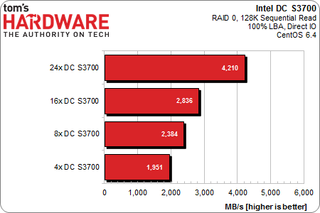

128 KB Sequential Read Performance Scaling in RAID 0

Testing with 128 KB sequential reads, we get almost 2 GB/s from the four-drive array and more than 4.2 GB/s using 24 of the SSD DC S3700s. If we were getting an even 500 MB/s per drive, as Intel specifies, the 24x array would yield around 12 GB/s. Each of our Intel controller cards uses eight third-gen PCI Express lanes, so each one should be able to push more than 4,000 MB/s.
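To put the gap between ideal and measured scaling into numbers, here is a minimal Python sketch. It uses only the figures quoted above (500 MB/s per drive, 4.2 GB/s from 24 drives, almost 2 GB/s from four). The function names are ours, and the four-drive value is rounded up to 2,000 MB/s, so treat the output as approximate.

```python
def ideal_throughput_mb_s(drives, per_drive_mb_s=500):
    """Ideal RAID 0 sequential throughput if every drive hit its rated speed."""
    return drives * per_drive_mb_s


def scaling_efficiency(measured_mb_s, drives, per_drive_mb_s=500):
    """Fraction of the ideal throughput the array actually delivered."""
    return measured_mb_s / ideal_throughput_mb_s(drives, per_drive_mb_s)


# Figures from the text; 2000 MB/s is our rounding of "almost 2 GB/s".
measured = {4: 2000, 24: 4200}

for drives, mb_s in measured.items():
    ideal = ideal_throughput_mb_s(drives)
    eff = scaling_efficiency(mb_s, drives)
    print(f"{drives:>2} drives: ideal {ideal} MB/s, measured {mb_s} MB/s, "
          f"{eff:.0%} of ideal, {mb_s / drives:.0f} MB/s per drive")
```

Under those assumptions, the 24-drive array delivers about 35 percent of the ideal figure, or roughly 175 MB/s per drive.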

Still, we're seeing a massive amount of throughput. It's almost like reading an entire single-layer DVD every second. And we can do that speed from the first LBA to the last because we're not relying on any caching. This is all-flash performance.

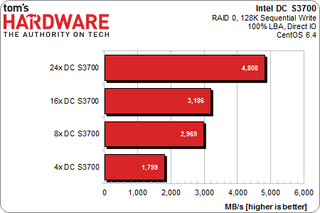

128 KB Sequential Write Performance Scaling in RAID 0

We get even better performance with writes. The 24x array encroaches on the 5 GB/s mark, falling just short at 4.8 GB/s. The 16x and 8x configurations group together around 3 GB/s, while the four-drive array backpedals just a few percent compared to its read numbers.

When Keepin' it Real Goes Wrong

Make no mistake; these are breathtakingly awesome numbers. But it's hard to shake the feeling that something is robbing us of achieving epic, face-melting benchmark results.

So, what gives, then?

Could it be the strip size we chose for these RAID 0 arrays? No. After extensive testing, we settled on 64 KB chunks. Each 128 KB transfer is serviced by two drives, since 128 KB divided by two equals 64 KB, our chunk size. With enough parallel requests, everything should be good to go.
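The chunk arithmetic can be sketched in a few lines of Python. This assumes plain round-robin striping, where chunk i lives on drive i modulo the drive count; the controller's actual layout is not documented here, and the helper name is hypothetical.

```python
CHUNK_KB = 64  # strip size used for the arrays in this article


def drives_for_request(offset_kb, length_kb, num_drives, chunk_kb=CHUNK_KB):
    """Return the ordered drive indices a request touches in RAID 0.

    Assumption: simple round-robin striping, chunk i -> drive i % num_drives.
    """
    if length_kb <= 0:
        return []
    first_chunk = offset_kb // chunk_kb
    last_chunk = (offset_kb + length_kb - 1) // chunk_kb
    return [chunk % num_drives for chunk in range(first_chunk, last_chunk + 1)]


# An aligned 128 KB transfer spans two 64 KB chunks, so two drives service it.
print(drives_for_request(0, 128, num_drives=24))    # [0, 1]
# The next sequential 128 KB transfer lands on the following pair of drives.
print(drives_for_request(128, 128, num_drives=24))  # [2, 3]
```

With enough requests in flight, consecutive transfers walk across every pair of drives in turn, which is why the chunk size alone should not cap throughput.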

As we saw on the last page, we still get great scaling with 4 KB random transfers. So, it's probable that we're encountering a throughput issue. Since each drive is capable of large sequential transfers in excess of 400 MB/s, the issue is most pronounced on this page. The SSD DC S3700s can't put out more than 300 MB/s in our 4 KB random testing, and we expect to lose much of that anyway. So it makes sense that we'd run into bandwidth-sapping limitations here, and not there.

After investigating the RMS25KB/JB IR modules, we discovered that they were running in full PCI Express 3.0 mode and fully capable of pushing data back and forth from our SSD DC S3700s with minimal performance impact. As it happens, the culprit is the one thing we really need: our 24-bay SAS/SATA backplane.

Sad but true. Twenty-four total bays are enabled by a trio of eight-drive bays grafted to our server's exterior. Each possesses two SFF-8087 SAS ports (one for every four drives), and they're just not able to get data through unmolested. Whether any backplane would work in this situation is uncertain, and bypassing ours simply wasn't an option for today's experiment.

sodaant: Those graphs should be labeled IOPS, there's no way you are getting a terabyte per second of throughput.

cryan, replying to mayankleoboy1 ("IIRC, Intel has enabled TRIM for RAID 0 setups. Doesnt that work here too?"):

Intel has implemented TRIM in RAID, but you need to be using TRIM-enabled SSDs attached to their 7 series motherboards. Then, you have to be using Intel's latest 11.x RST drivers. If you're feeling frisky, you can update most recent motherboards with UEFI ROMs injected with the proper OROMs for some black market TRIM. Works like a charm.

In this case, we used host bus adapters, not Intel onboard PHYs, so Intel's TRIM in RAID doesn't really apply here.

Regards,

Christopher Ryan

cryan, replying to DarkSable ("Idbuaha. I want."):

And I want it back! Intel needed the drives back, so off they went. I can't say I blame them since 24 800GB S3700s is basically the entire GDP of Canada.

Replying to techcurious ("I like the 3D graphs.."):

Thanks! I think they complement the line charts and bar charts well. That, and they look pretty bitchin'.

Regards,

Christopher Ryan

utroz: That sucks about your backplanes holding you back, and yes, trying to do it with regular breakout cables and power cables would have been a total nightmare, possible only if you made special holding racks for the drives and had multiple power supply units to have enough SATA power connectors (unless you used the dreaded Y-connectors that are known to be iffy and are not commercial grade). I still would have been interested in someone doing that, if someone is crazy enough to do it just for testing purposes, to see how much the backplanes are holding performance back... But thanks for all the hard work, this type of benching is by no means easy. I remember doing my first RAID with an Iwill 2-port ATA-66 RAID controller with 4 30GB 7200RPM drives, and it hit the limits of PCI at 133MB/sec. I tried RAID 0, 1, and 0+1. You had to have all the same exact drives or it would be slower than single drives. The thing took forever to build the arrays, and if you shut off the computer wrong it would cause huge issues in RAID 0... Fun times...
