VisionTek CryoVenom R9 295X2: Two GPUs In One Slot

VisionTek recently introduced its CryoVenom R9 295X2, a dual-GPU graphics card that squeezes into a single PCIe expansion slot thanks to a thin and effective water block with impressive thermal performance. But is the board worth its price premium?

Temperature, Noise And Overclocking Benchmarks

Load Temperature

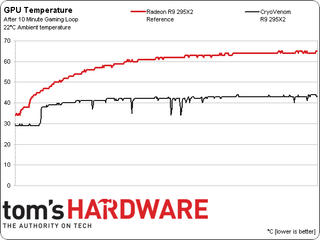

Now that the CryoVenom is installed, let's see what it can do. The reference Radeon R9 295X2 hit 65 degrees Celsius in our 10-minute gaming torture test. Does VisionTek's card fare any better?

The CryoVenom R9 295X2 bests the reference card by an astounding 20 degrees, and never crests 45 degrees Celsius during our tests. This isn't a comparison between liquid and air cooling, mind you. These are both liquid-based solutions.

As if that wasn't impressive enough, do you remember when we mentioned that the VRMs can climb to over 105 degrees on the reference card? That's because they don't come in contact with the water block. The CryoVenom's block, however, extends to the power circuitry and memory, so it's reasonable to assume those components are operating at a much lower peak temperature, too. While the CryoVenom uses AMD's reference PCB without a beefed-up power delivery system, the purportedly reduced VRM temperature is very welcome and has the potential to enhance stability.

Overclocking

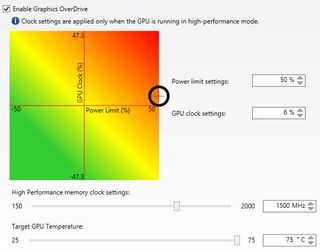

That brings us to overclocking. Of course we're at the mercy of the GPUs, since luck of the draw plays heavily into our hopes for higher clock rates. But blessed with lower temperatures, we should be able to extract the most headroom that these GPUs have to offer.

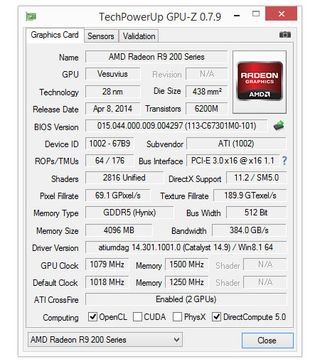

While we managed to run some tests with the graphics processors at 1160 MHz, we were only able to push the cores to a stable 1080 MHz.

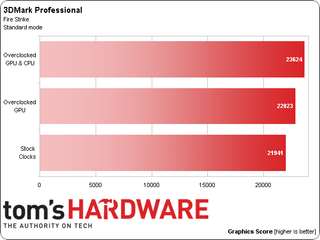

As for the 8 GB of on-board GDDR5 memory, we increased it from 1250 MHz stock (5 GT/s effective) to 1500 MHz (6 GT/s effective). These overclocks result in a noticeable performance increase, as demonstrated by the 3DMark scores:

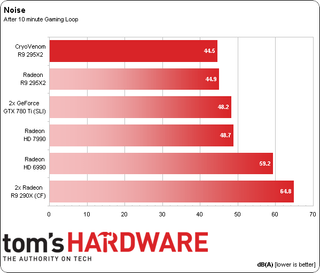
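The memory figures quoted above follow from GDDR5 performing four transfers per pin per memory-clock cycle. The short Python sketch below works through that arithmetic for the stock and overclocked settings. The 512-bit per-GPU bus width is an assumption brought in for the bandwidth estimate (the review does not state it), and the function names are purely illustrative.

```python
# GDDR5 performs four data transfers per pin per memory-clock cycle.
GDDR5_TRANSFERS_PER_CLOCK = 4


def effective_rate_gtps(clock_mhz: float) -> float:
    """Effective per-pin data rate in GT/s for a given GDDR5 memory clock."""
    return clock_mhz * GDDR5_TRANSFERS_PER_CLOCK / 1000.0


def peak_bandwidth_gbs(clock_mhz: float, bus_width_bits: int) -> float:
    """Theoretical peak bandwidth in GB/s for one GPU's memory interface."""
    return effective_rate_gtps(clock_mhz) * bus_width_bits / 8


STOCK_MHZ = 1250       # stock memory clock from the review
OC_MHZ = 1500          # overclocked memory clock from the review
BUS_WIDTH_BITS = 512   # ASSUMPTION: per-GPU bus width, not stated in the review

stock_rate = effective_rate_gtps(STOCK_MHZ)
oc_rate = effective_rate_gtps(OC_MHZ)
gain_pct = (OC_MHZ - STOCK_MHZ) * 100 / STOCK_MHZ

print(f"Stock:       {stock_rate:.1f} GT/s")
print(f"Overclocked: {oc_rate:.1f} GT/s")
print(f"Memory clock increase: {gain_pct:.0f}%")
print(f"Peak bandwidth per GPU (assumed {BUS_WIDTH_BITS}-bit bus): "
      f"{peak_bandwidth_gbs(STOCK_MHZ, BUS_WIDTH_BITS):.0f} -> "
      f"{peak_bandwidth_gbs(OC_MHZ, BUS_WIDTH_BITS):.0f} GB/s")
```

These are theoretical peaks only. The actual gain in games and benchmarks depends on how memory-bound the workload is, which is why the measured 3DMark scores remain the better guide.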

Noise

Lower temperatures and increased performance shouldn't come at the cost of unwanted noise in a no-compromise solution like VisionTek's CryoVenom. Let's see how it fares compared to other products after a 30-minute gaming loop:

VisionTek's CryoVenom nudges out the reference Radeon R9 295X2 for the best result. No matter how you slice it, this is a very low noise footprint, and is especially impressive when you consider the excellent temperatures that this product enables.

youcanDUit YOU GOT ZOMBIE BLOOD ON YOUR LEG. YOU'RE INFECTED!

http://www.dailymotion.com/video/x87h9y_28-days-later-28-days-later-blood-i_shortfilms

Nuckles_56 I'm impressed with how cool that card runs, and damn is it sexy as well, apart from the price...

AndrewJacksonZA I would *NOT* mind two of these in my computer!!! However, how much are 4x 980s? :-(

Although the inner geek in me STILL wants two of these cards! :-)

Nuckles_56 14367051 said: I would *NOT* mind two of these in my computer!!! However, how much are 4x 980s? :-(

Although the inner geek in me STILL wants two of these cards! :-)

4 GTX 980s will be less than two of these

ta152h With the 390 coming out in a few months, and being moved to 20nm, this is heading towards severe obsolescence in the near future. The magnitude of the change is pretty large, since it's the first shrink in years, and is purported to be an extensive redesign as well.

It's hard to justify tossing $1000 away with this in mind. $2000 is even more difficult.

Yes, new technology is always around the corner, but these days it's often relatively minor, compared to what the 390 will be. We don't get shrinks that often anymore. I'd wait for it, unless you have enough money you can get both with no difficulty. Then, why not?

B4vB5 This card is pretty irrelevant for normal people unless they want it out of spite.

For computation farms and HPC, it's kinda interesting, as you can now stuff 6-7 of these cards on the same motherboard and use 14 GPUs for OpenCL programming before you have to pay the overhead of another node in the farm (motherboard, CPU, RAM, PSU, cooling components).

The question, though, is whether that cost saving pays off the high price tag and the high power consumption in the long run, or whether 7x 290X is the better choice.

Nvidia is irrelevant for HPC/compute farms, or at least used to be, as its cards are much slower than AMD's.

If physical space matters and cost not so much, this could be a grand choice for now, simply for being able to stuff 14 GPUs on one motherboard. SLI/Xfire don't matter and PCIe 3.0 x1 is usually enough, although WS motherboards from Asus with PLX onboard can handle PCIe 3.0 x8/x16 (well, 84 total lanes) for high interaction/communication capability, though this is usually not the case in my experience with HPC via gfx.

Of course you could also pick 7x normal 295X2s with extenders to the PCIe ports, but then space and adequate cooling become a major issue (compute farms are supposed to run the cards at 80-100% most of the time to justify their existence).

I can see a slim market for this card though. And maybe for 5K gaming for extremist rich people in 3-4x Xfire, if that even works and doesn't just falter in actual gaming performance like Linus's latest video on the subject showed with 3-4x SLI.

WilliamChan4 What happened to Eyefinity 6? Some time ago, having 6 mini-DisplayPorts in a single slot was supposed to be "the way of the future". Surely, if people still have legacy hardware, a simple converter cable would be a much better option than less display bandwidth.

Haravikk $2000 for a graphics card that will probably still be lagging behind in a few years? No thanks! Hell, I'm not sure I'd even spend $2000 in total to make a strong gaming rig. This just seems like one of those things that is only suited to people who feel a crushing burden from having too much money in their bank account, as anyone with any sense can build an extremely good system with $600-700 worth of GPU(s), and even that's still a bit overkill.
