New FurMark Version 2.0 Arrives After 16 Years

FurMark 2.0 is set to exit beta testing shortly.

FurMark, a popular tool among PC enthusiasts, is officially described as a lightweight but intensive stress test for GPUs. To earn the 'Mark' in its name, it also provides benchmark scores for comparing performance between GPUs. Unbeknownst to many, FurMark is now very close to its first major upgrade since 2007: not just a point release, but a move from version 1.X to version 2.X.

We often use FurMark in our GPU reviews purely for its stress testing. Thus, you will usually find references to the fur-rendering app in the Power, Temps & Noise section of our reviews.

You may be wondering what's new in FurMark 2.X to warrant the version number upgrade. At the time of writing, we don't have any official release notes to compare versions; the beta only seems to offer change logs from prior beta releases. Our own investigations dug up a few notable differences, though.

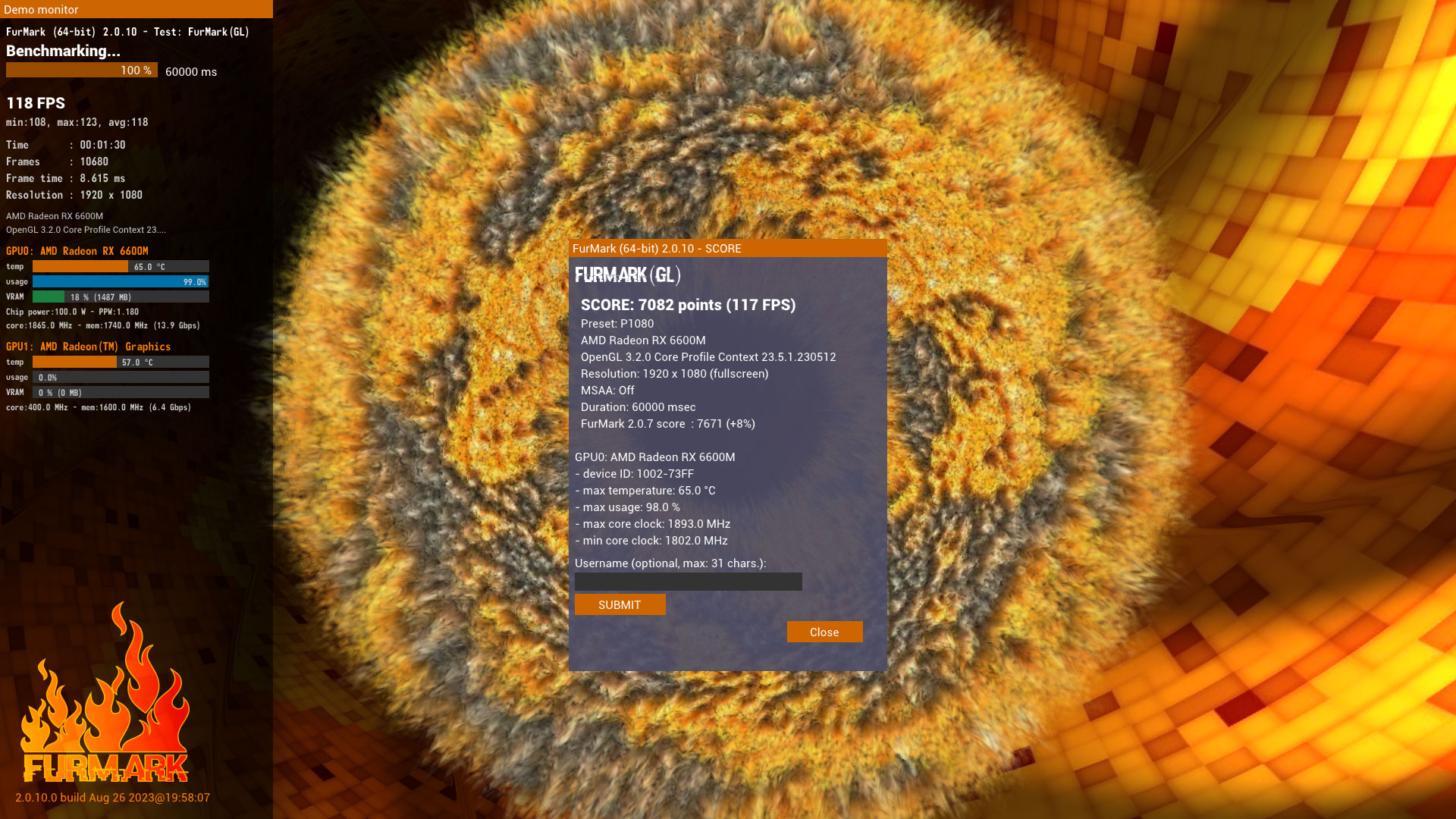

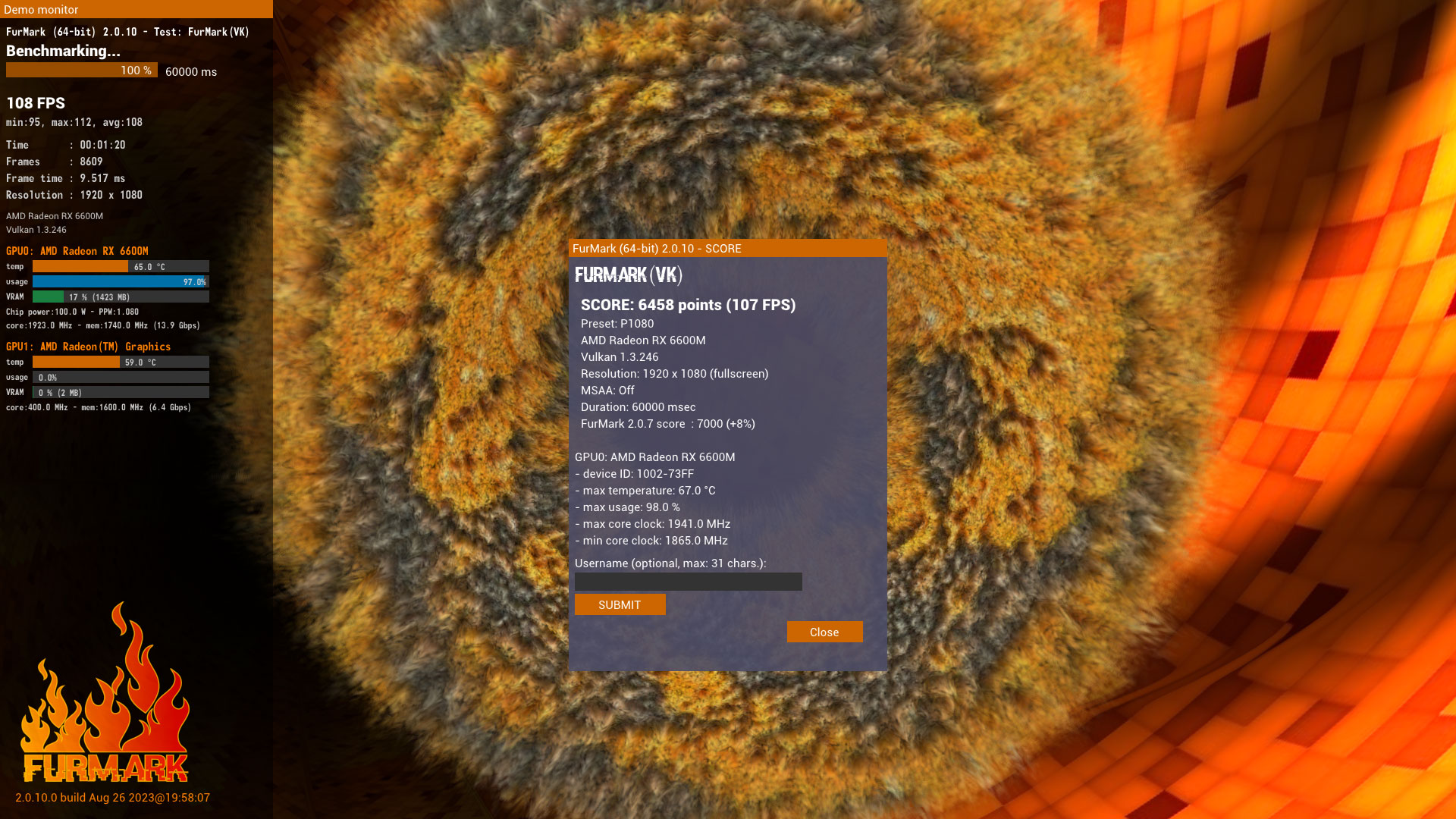

As the screenshot of the UI above shows, the new app looks very different from its predecessor. One new element of the revamped UI, with its glitched-text theme, is the live GPU temperature/usage chart. Another major change we spotted is the graphics test toggle, which lets you switch between FurMark(GL) and FurMark(VK), which use the OpenGL and Vulkan APIs, respectively. By contrast, the latest 1.X release, FurMark 1.36.0, offers rendering, stress testing, and benchmarking via OpenGL only.

Access to complementary tools like GPU Shark, GPU-Z, and CPU Burner remains just a button click away in the new version of the software. Other similarities include the resolutions on offer and the quick links to online result comparisons. We noticed that quite a lot of tests have already been logged in the FurMark 2 results database, which is kept separate from results for the previous version of the app.

You can grab the latest FurMark 2.X beta from Geeks3D's Discord server now. Those wary of beta software shouldn't have to wait long for a general release of FurMark 2.X, as the beta released last month is likely the final one.


Mark Tyson is a news editor at Tom's Hardware. He enjoys covering the full breadth of PC tech; from business and semiconductor design to products approaching the edge of reason.

-

SyCoREAPER

Can't wait for my new GPU to catch fire! lol

The original FurMark was punishing as hell, can't even imagine 2.0

bit_user

brandonjclark said: I didn't even realize the 1.x was still relevant.

The thing about FurMark is that it seems both highly parallel and 100% compute-bound. So, it's basically guaranteed to find a GPU's peak temperature, since there's no bottlenecking on memory, occupancy, or anything else to throttle it.

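| Make | Model | GFLOPS (FP32) | Bandwidth (GB/s) | FLO/B (FP32 ops per byte) |
|---|---|---|---|---|
| AMD | RX 6950 XT | 38707 | 576 | 67.2 |
| AMD | RX 7900 XTX | 46690 | 960 | 48.6 |
| Intel | UHD 770 | 825 | 90 | 9.2 |
| Intel | A770 | 17203 | 560 | 30.7 |
| Nvidia | RTX 3090 Ti | 33500 | 1008 | 33.2 |
| Nvidia | RTX 4090 | 73100 | 1008 | 72.5 |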
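The ratio column is simply peak FP32 throughput divided by memory bandwidth: the "giga" prefixes cancel, leaving FP32 operations per byte of DRAM traffic. A minimal Python sketch that reproduces the column from the figures above (the dictionary and function names are illustrative, not taken from any tool):

```python
# Peak FP32 throughput (GFLOPS) and DRAM bandwidth (GB/s) at base clocks,
# as listed in the table above.
GPUS = {
    "AMD RX 6950 XT": (38707, 576),
    "AMD RX 7900 XTX": (46690, 960),
    "Intel UHD 770": (825, 90),
    "Intel A770": (17203, 560),
    "Nvidia RTX 3090 Ti": (33500, 1008),
    "Nvidia RTX 4090": (73100, 1008),
}


def flops_per_byte(gflops: float, gb_per_s: float) -> float:
    """FP32 ops the GPU can issue per byte it can move to or from DRAM."""
    # GFLOPS / (GB/s): the 1e9 factors cancel, leaving FLOP per byte.
    return gflops / gb_per_s


if __name__ == "__main__":
    for name, (gflops, bandwidth) in GPUS.items():
        print(f"{name:<20} {flops_per_byte(gflops, bandwidth):5.1f} FLOP/B")
```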

I used base clocks, since we're talking about an all-core workload. Some GPUs could well throttle even below base. The one exception was the UHD 770, which I believe will run at its peak clock if there's not also a heavy CPU load.

Note how bandwidth-limited GPUs are! I computed fp32 ops per byte, but a single fp32 is actually 4 bytes! This is probably why increasing L2/L3 cache has helped AMD and Nvidia so much over the past couple of generations. You can probably tell that if you alleviate any bandwidth limitations and have enough threads, these GPUs could easily burn a lot more power than in typical games & apps.
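Multiplying by the 4 bytes in an FP32 value turns the ratio into operations per float fetched: roughly how much arithmetic a kernel must perform on each value it reads from DRAM before compute, rather than bandwidth, becomes the limit. This is a first-order sketch that assumes every operand comes straight from DRAM and ignores caches; the function name is illustrative.

```python
BYTES_PER_FP32 = 4  # one IEEE 754 single-precision value


def flops_per_fp32_fetched(gflops: float, gb_per_s: float) -> float:
    """FP32 ops needed per float read from DRAM to keep the ALUs busy.

    Assumes every operand comes from DRAM (no cache reuse).
    """
    return gflops / gb_per_s * BYTES_PER_FP32


if __name__ == "__main__":
    # Figures from the table: RTX 4090 and UHD 770 at base clocks.
    print(f"RTX 4090: {flops_per_fp32_fetched(73100, 1008):.0f} ops per float")
    print(f"UHD 770:  {flops_per_fp32_fetched(825, 90):.0f} ops per float")
```

On these figures, even the integrated UHD 770 needs about 37 operations per loaded float, and the RTX 4090 about 290, for arithmetic rather than memory to be the bottleneck.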

It also should help explain why datacenter GPUs have like 3x the memory bandwidth of high-end desktop models, but not a lot more raw compute, as in HPC-type workloads you're more often doing things like reading 2 values and outputting a 3rd.
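The "read two values, output a third" case can be quantified with the simple roofline model (a standard first-order model, named here for illustration rather than taken from the comment): attainable throughput is the lower of peak compute and bandwidth times arithmetic intensity. The sketch assumes a hypothetical FP32 vector add, c[i] = a[i] + b[i], which reads 8 bytes, writes 4, and does one add per element; the 3x-bandwidth case is a what-if, not a specific product.

```python
def attainable_gflops(peak_gflops: float, bandwidth_gb_s: float,
                      flops_per_elem: float, bytes_per_elem: float) -> float:
    """Roofline bound: the lower of the compute roof and the memory roof."""
    memory_roof = bandwidth_gb_s * flops_per_elem / bytes_per_elem
    return min(peak_gflops, memory_roof)


# Hypothetical FP32 vector add c[i] = a[i] + b[i]:
# 1 FLOP per element; 2 reads + 1 write of 4 bytes each = 12 bytes per element.
VEC_ADD_FLOPS, VEC_ADD_BYTES = 1, 12

if __name__ == "__main__":
    peak, bw = 73100, 1008  # RTX 4090 figures from the table
    print("vector add:", attainable_gflops(peak, bw, VEC_ADD_FLOPS, VEC_ADD_BYTES), "GFLOPS")
    print("with 3x bandwidth:",
          attainable_gflops(peak, 3 * bw, VEC_ADD_FLOPS, VEC_ADD_BYTES), "GFLOPS")
```

Under these assumptions the vector add tops out at 84 GFLOPS, roughly 0.1% of the card's 73100 GFLOPS peak. Tripling bandwidth triples that bound to 252 GFLOPS, while adding compute would change nothing.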

bit_user

sycoreaper said: The original FurMark was punishing as hell, can't even imagine 2.0

I'll bet the main difference is Vulkan support. Depending on how well they implemented the OpenGL version, there might not be much performance difference between it and the Vulkan path. If there is, then you're right that Vulkan could pose a more serious fire risk!

Amdlova

brandonjclark said: I didn't even realize the 1.x was still relevant.

People used to fry graphics cards with it! For my first two days with the 6700 XT, I used FurMark to burn in the card and lose some coil whine in the process, because you can set the resolution to change the fps behavior, but the power is always at max. My card draws a little more than 175 W when gaming; in FurMark, it goes way beyond the limit!

https://www.gpumagick.com/scores/show.php?id=3785

OC score:

https://www.gpumagick.com/scores/show.php?id=3789

artk2219

brandonjclark said: I didn't even realize the 1.x was still relevant.

Most definitely. It still heavily punishes a graphics card; more than once I've found a defective one in a build with it. The card would work fine in Unigine Superposition pretty much indefinitely, but as soon as it ran FurMark it would crap its pants, letting me know that it's not 100% stable.

fireaza

...How is it that a GPU stress-test from 2007 can still be relevant anyway? If it was designed to stress GPUs from 2007, then surely it's a cakewalk for 2023 GPUs? Or if it's still stressful for 2023 GPUs, then how on Earth did 2007 GPUs not instantly catch fire while rendering at 1 frame per hour?

bit_user

fireaza said: ...How is it that a GPU stress-test from 2007 can still be relevant anyway?

It just does a lot of shader arithmetic. No matter how fast a GPU is, a benchmark like that can saturate it, although you might get absurd framerates like 900 fps.

fireaza said: If it was designed to stress GPUs from 2007, then surely it's a cakewalk for 2023 GPUs?

You could also say that about cryptomining. Newer GPUs do it faster, but a GPU can never do it too fast.

fireaza said: if it's still stressful for 2023 GPUs, then how on Earth did 2007 GPUs not instantly catch fire while rendering at 1 frame per hour?

Because GPUs typically ramp up their fans and then throttle their clock speeds as their temperature increases or if their power limit is exceeded.
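The fans-first, clocks-second behavior described above can be sketched as a toy control loop. Every threshold and step size below is a made-up illustration value, and the names are hypothetical; real GPU boost and throttling logic is vendor-specific firmware and considerably more elaborate.

```python
from dataclasses import dataclass

# Hypothetical limits and step sizes, for illustration only.
TEMP_TARGET_C = 83.0
POWER_LIMIT_W = 300.0
MIN_CLOCK_MHZ = 300.0
CLOCK_STEP_MHZ = 15.0
FAN_STEP_PCT = 10.0


@dataclass(frozen=True)
class GpuState:
    clock_mhz: float
    temp_c: float
    fan_pct: float


def control_tick(state: GpuState, power_w: float) -> GpuState:
    """One tick of a toy governor: more cooling first, then lower clocks."""
    over_temp = state.temp_c > TEMP_TARGET_C
    over_power = power_w > POWER_LIMIT_W
    fans_maxed = state.fan_pct >= 100.0
    clock, fan = state.clock_mhz, state.fan_pct
    if over_temp and not fans_maxed:
        # Too hot but the fans have headroom: spin them up.
        fan = min(100.0, fan + FAN_STEP_PCT)
    if over_power or (over_temp and fans_maxed):
        # Power limit exceeded, or still hot at full fan: step the clock down.
        clock = max(MIN_CLOCK_MHZ, clock - CLOCK_STEP_MHZ)
    return GpuState(clock, state.temp_c, fan)


if __name__ == "__main__":
    # Temperature is held constant: this toy has no thermal model.
    state = GpuState(clock_mhz=2000.0, temp_c=90.0, fan_pct=70.0)
    for _ in range(5):
        state = control_tick(state, power_w=250.0)
        print(state)
```

Running the loop shows the fans climbing to 100% over three ticks before the clock starts stepping down.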