Intel shows Gaudi3 AI accelerator, promising quadruple BF16 performance in 2024

Big, fat, AI accelerator.

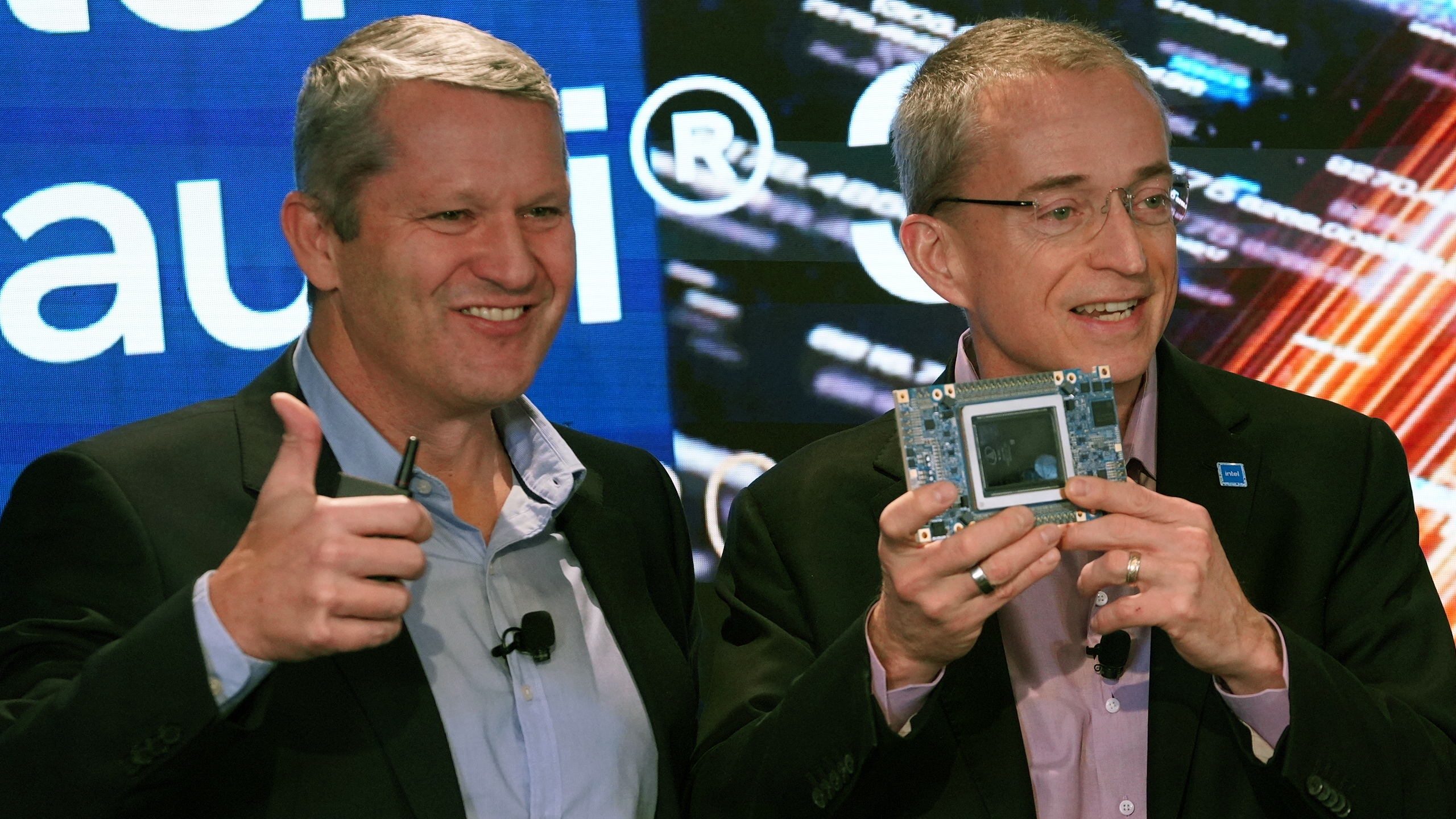

Intel showcased its Gaudi3 Processor for artificial intelligence (AI) workloads alongside the formal introduction of its 14th-Gen Core Ultra "Meteor Lake" processors and 5th-Gen Xeon Scalable CPUs for datacenters. The accelerator is set to arrive in 2024 and will offer a significant performance bump compared to its predecessor, the Gaudi2.

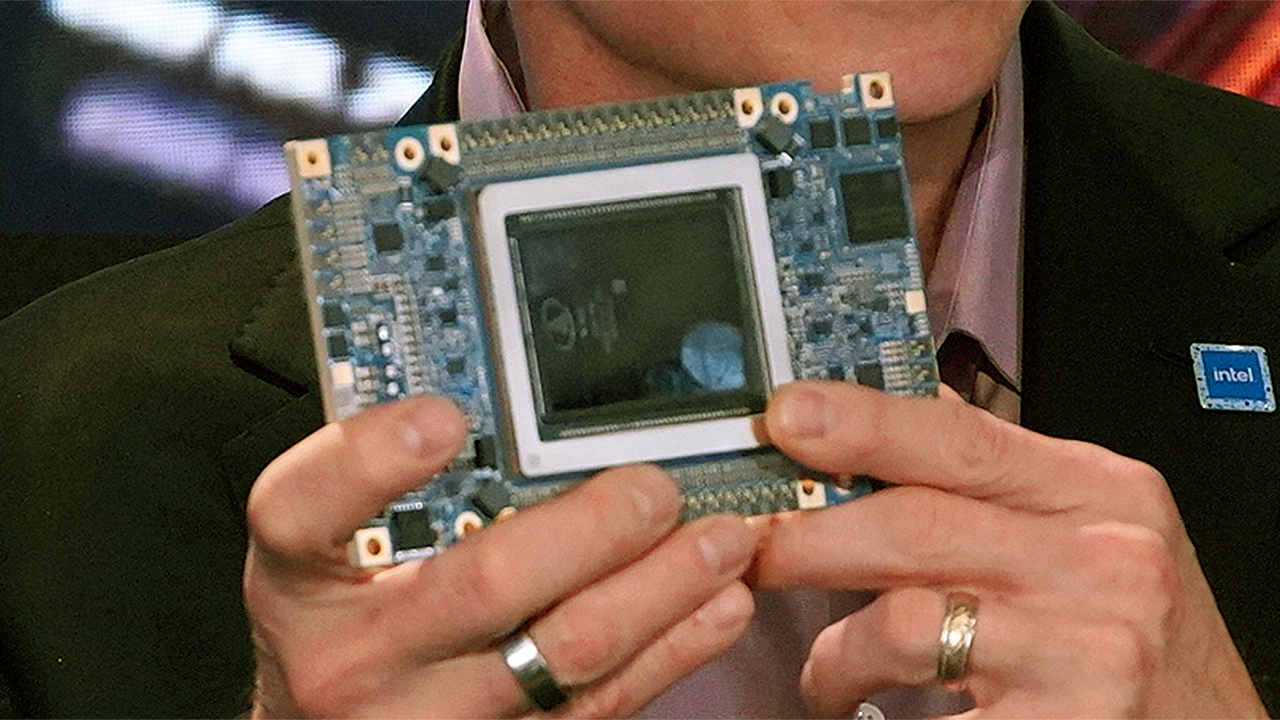

Intel CEO Pat Gelsinger discussed the upcoming release of Intel's Gaudi3, which is scheduled for next year, and showed off the new AI accelerator aimed at deep learning and large-scale generative AI models for the first time. The new unit looks like a huge module (OAM, we presume) with a massive ASIC and multiple HBM3 (or HBM3E) memory stacks on it. The ASIC package looks significantly larger than that of the Gaudi2, so we presume that it carries eight HBM3E stacks (rather than the six on Gaudi2). Based on a slide Intel presented at SC23, Gaudi3 is not a monolithic processor, but rather a dual-chiplet design that fuses together two processors.

In addition, Intel announced that the Gaudi3 will offer four times higher BF16 performance, two times faster networking performance, and 1.5X higher bandwidth compared to Gaudi2.
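As a rough way to read those multipliers together, the sketch below computes how the ratio of BF16 compute to bandwidth would shift relative to Gaudi2. It assumes the 1.5X figure refers to memory bandwidth, which the statement as quoted here does not specify, and it works only with relative factors because no absolute Gaudi3 numbers are given. The names `GAUDI3_VS_GAUDI2` and `relative_compute_per_byte` are illustrative, not Intel's.

```python
# Relative Gaudi3-vs-Gaudi2 factors as stated in the article (Gaudi2 = 1.0).
# Assumption: "bandwidth" means memory bandwidth; the article does not say.
GAUDI3_VS_GAUDI2 = {
    "bf16_compute": 4.0,
    "networking": 2.0,
    "bandwidth": 1.5,
}


def relative_compute_per_byte(factors: dict[str, float]) -> float:
    """Return how much the compute-to-bandwidth ratio grows over the baseline."""
    return factors["bf16_compute"] / factors["bandwidth"]


if __name__ == "__main__":
    ratio = relative_compute_per_byte(GAUDI3_VS_GAUDI2)
    print(f"Compute per byte of bandwidth vs Gaudi2: {ratio:.2f}x")
```

If that assumption holds, a workload would need roughly 2.7 times more arithmetic per byte moved to keep the new compute units as busy as on Gaudi2, while bandwidth-bound workloads would likely see gains closer to the 1.5X figure.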

"Our Gaudi roadmap remains on track with Gaudi3 out of the fab, now in packaging and expected to launch next year," said Pat Gelsinger, CEO of Intel, at the company's latest conference call (via SeekingAlpha). In 2025, Falcon Shores brings our GPU and Gaudi capabilities into a single product."

Intel's Habana Gaudi2 is already quite a promising product, with 24 fully programmable Tensor Processor Cores (TPCs) and 96GB of HBM2E memory, capable of challenging Nvidia's H100 GPU for AI and HPC. Intel's Habana Gaudi3, which is expected to hit the market in 2024, will offer significantly improved performance over its predecessor, Gelsinger said earlier this year.

Intel said the Gaudi line-up has experienced significant growth — attributed to its proven performance and competitive TCO, as well as its reasonable pricing. The company is confident that the rising demand for generative AI hardware will position Intel to secure a more substantial share of the accelerator market in 2024, primarily through its range of AI accelerators, which will be spearheaded by Gaudi.

"We are pleased with the customer momentum we are seeing from our accelerator portfolio and Gaudi in particular, and we have nearly doubled our pipeline over the last 90 days," said Gelsinger on the call.


Anton Shilov is a contributing writer at Tom’s Hardware. Over the past couple of decades, he has covered everything from CPUs and GPUs to supercomputers and from modern process technologies and latest fab tools to high-tech industry trends.


Findecanor

I wonder what Gaudi would have said if he had seen his name appropriated for a product made to steal art.

PEnns

I wonder how Intel would feel if Gaudi's descendants opened an "Intel Butcher Shop", or "Intel Bordello Accessories Outlet".

abufrejoval

Pretty sure Intel can't wait until La Sagrada Familia is finished before their chips become a commercial success.

And then Gaudi in Bavarian means fun, the very opposite of serious.

BX4096

> Findecanor said: I wonder what Gaudi would have said if he had seen his name appropriated for a product made to steal art.

You may wonder that when such a product gets released. Anyone who still believes that generative "AI" models are "stealing" is entirely out of their depth posting on a technical website like this.

Although, the idea of what a 19th-century inhabitant might think of this is rather apropos, since this whole controversy reminds me of people who used to refuse to have their picture taken back then because they believed that the photograph would steal their soul. Two centuries later, and here we go again.

dalek1234

> abufrejoval said: Pretty sure Intel can't wait until La Sagrada Familia is finished before their chips become a commercial success.
>
> And then Gaudi in Bavarian means fun, the very opposite of serious.

I think Intel is Sagrada Familia

abufrejoval

> dalek1234 said: I think Intel is Sagrada Familia

Surely they think they are, and they seem to have convinced some others of that, too.

But not me; I see them as they deserve...

leoneo.x64

> BX4096 said: You may wonder that when such a product gets released. Anyone who still believes that generative "AI" models are "stealing" is entirely out of their depth posting on a technical website like this.
>
> Although, the idea of what a 19th-century inhabitant might think of this is rather apropos, since this whole controversy reminds me of people who used to refuse to have their picture taken back then because they believed that the photograph would steal their soul. Two centuries later, and here we go again.

Oh damn...we totally forgot about the global royalty distribution system that regularly pays everybody whose content was used by AI to train itself!

If you don't like getting called a thief, don't steal. Simple. Make up your own test data and then train your models. AI is not human (am surprised I need to spell it out) and can't enjoy human privileges like access to public info. Gen AI represents a flawed foundation built from the ground up to obscure source data lineage.

If we have the power to create such a powerful thing, we also have the responsibility to teach it some manners, else what's the point raising a cute baby T Rex only to be eaten alive by it later.

leoneo.x64

> Findecanor said: I wonder what Gaudi would have said if he had seen his name appropriated for a product made to steal art.

Might have been "Me da in telele"

BX4096

> leoneo.x64 said: Oh damn...we totally forgot about the global royalty distribution system that regularly pays everybody whose content was used by AI to train itself!
>
> If you don't like getting called a thief, don't steal. Simple. Make up your own test data and then train your models. AI is not human (am surprised I need to spell it out) and can't enjoy human privileges like access to public info. Gen AI represents a flawed foundation built from the ground up to obscure source data lineage.
>
> If we have the power to create such a powerful thing, we also have the responsibility to teach it some manners, else what's the point raising a cute baby T Rex only to be eaten alive by it later.

Again, all it says is that you're woefully ignorant of how these models are trained and work. The idea of a "global royalty distribution system" for every single source that contributed to the training of a model of ChatGPT's scale is ludicrous beyond belief, especially since the actual output only has a very faint resemblance to these sources and will only become hazier and hazier as their number grows. What's even more laughable is that even if we went with something like this, the royalties for every author out there would realistically amount to perhaps a cent or two per year, if not less.

While AI and model training in particular are nuanced and multi-faceted issues with plenty of ethical considerations, comparing the models themselves to "stealing" is just as inane as claiming that taking a photograph of a public place is theft. Actually, not even that is accurate, as it's more akin to claiming that painting a generic picture very vaguely reminiscent of something (or rather, several unrelated somethings at once) is stealing, or asking a random number generator to come up with well-sourced attribution for every random number it generates.

If we're talking actual legality of it, it's even more clear-cut. Copyright law explicitly sees infringement as reproducing or redistributing copyrighted work without permission, which is not at all what is happening with AI models. Even the derivative work clauses don't apply, since a derivative work "must incorporate some or all of a preexisting work" in order to be protected, which is not what happens when something like Stable Diffusion generates stuff. With "derivative work", we're talking largely recognizable characters, copyrighted logos, nearly verbatim reproduction, and so on. Being inspired by a work or resembling it very loosely are not protected at all – by design, since that is how virtually all human-created art happens.

So, again, while the subject itself is very complicated, claims like yours are nothing but uneducated nonsense with no basis in reality or copyright law.

COLGeek

Folks, please maintain your civility. Attack ideas, with sources when possible, but not each other.

We can disagree without becoming disagreeable.