Intel Reveals Optane SSD P1600X: Entry-Level Boot & Caching SSDs

Intel's Optane SSD P1600X drives are designed for boot, caching, and logging workloads.

Intel has released a new datacenter Optane SSD designed specifically for boot, caching, and logging workloads. The Optane SSD P1600X product line does not boast huge capacities, yet its performance and extreme endurance promise the high reliability and cost efficiency that these workloads require.

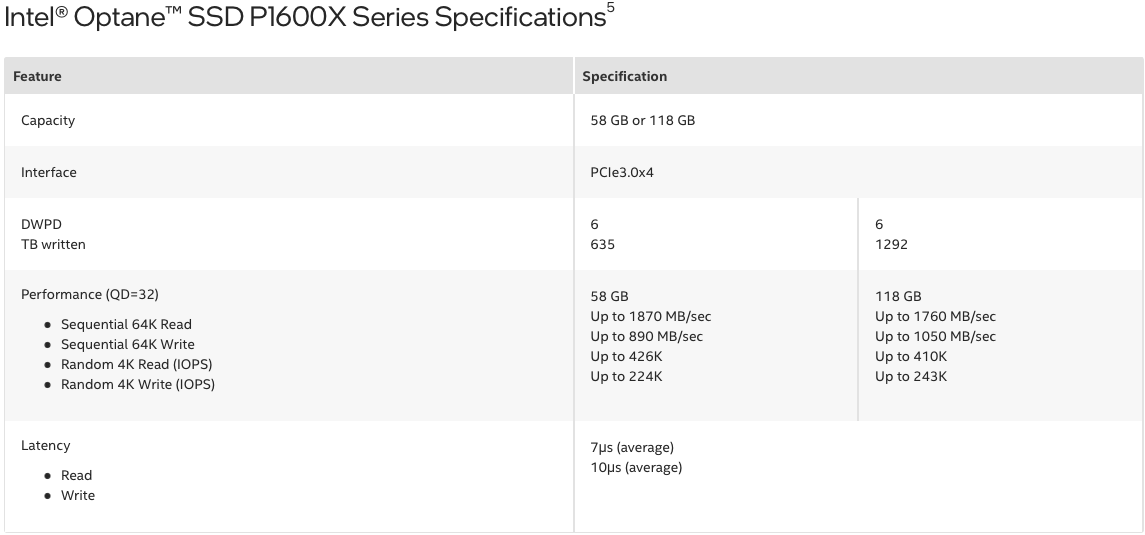

Intel's Optane SSD P1600X family includes drives with 58GB and 118GB capacities, enough for an operating system and tasks like caching, but not meant to store large amounts of data. These drives are supposed to sit in front of higher-capacity SSDs or HDDs, boot an OS, and then cache frequently used data to either improve user experience or meet the QoS requirements of a server or datacenter. The drives come in an M.2-2280 form factor and use a PCIe 3.0 x4 interface to maintain compatibility both with 'legacy' machines (as Intel puts it) and with the latest servers supporting PCIe Gen 4.

Nowhere near the performance of the best SSDs, the Optane SSD P1600X drives are rated for sequential read speeds of up to 1870 MB/s, sequential write speeds of up to 1050 MB/s, and up to 426K/243K random read/write IOPS. By today's standards, these drives are not particularly fast, unless several of them are used in RAID 0 mode for maximum performance.

But this is where the unique advantages of 3D XPoint memory come into play. The drives can endure up to six drive writes per day (6 DWPD) over five years (something you cannot expect from 3D NAND-based SSDs of similar capacities) and offer a mean time between failures of up to 2 million hours. Intel also says that the drives feature a rather low 7μs average read latency and a 10μs average write latency, which is important for caching drives.
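The endurance rating can be turned into an approximate lifetime write volume with simple arithmetic. The following is a minimal Python sketch, assuming decimal units (1 TB = 1000 GB) and a 365-day year. The function name `total_writes_tb` is hypothetical, and the results are estimates derived from the quoted 6 DWPD figure rather than official TBW specifications from Intel.

```python
def total_writes_tb(capacity_gb: float, dwpd: float, years: float) -> float:
    """Estimate lifetime writes in TB from a drive-writes-per-day rating.

    Assumes decimal units (1 TB = 1000 GB) and 365 days per year.
    """
    days = years * 365
    return capacity_gb * dwpd * days / 1000


# The two capacities mentioned in the article, at 6 DWPD over five years.
for capacity_gb in (58, 118):
    tb = total_writes_tb(capacity_gb, dwpd=6, years=5)
    print(f"{capacity_gb}GB drive: roughly {tb:.0f} TB written over five years")
```

Under these assumptions the 118GB model works out to roughly 1.3 PB of writes over the rated period, and the 58GB model to about half that.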

It is noteworthy that Intel's Optane SSD P1600X drives are not the first boot/caching drives in the company's lineup. The manufacturer released its Optane SSD 800p-series 58GB and 118GB drives, aimed at exactly the same market segment, back in 2018. Those SSDs, however, featured a PCIe 3.0 x2 interface and were noticeably slower in terms of both sequential and random read/write speeds. They also had considerably higher write latency.

The surprising part of the announcement is, of course, that Intel decided to introduce a successor to its rather niche family of products amid uncertainties about 3D XPoint memory supply, now that Texas Instruments has acquired from Micron the fab that produced this memory. Intel has wanted to make 3D XPoint memory at its 3D NAND fab in China (which is about to be transferred to SK Hynix, but which will be operated by Intel and SK Hynix together for some time), so Intel may have enough memory for all of its product lineups. As for the launch of the Optane SSD P1600X series, perhaps Intel needs to serve very particular customers with its caching drives, which is why it had to introduce this new lineup.


Anton Shilov is a contributing writer at Tom’s Hardware. Over the past couple of decades, he has covered everything from CPUs and GPUs to supercomputers and from modern process technologies and latest fab tools to high-tech industry trends.


escksu

Hmm, I am not sure if it's really worth it. Although 6 DWPD seems incredible, the drive is just 118GB. 6 drive writes = 708GB a day. 5yrs = 1.3TB

Higher-capacity enterprise drives (e.g. Kingston DC1500) are rated for 1 DWPD. A 960GB one is rated for 960GB a day, 1.75TB for 5yrs.

A 960GB drive can cache way more data than a 118GB one. The drive costs ~USD485. Even if the 118GB XPoint costs USD85, I don't think 400 makes a big difference to a server.

JWNoctis

So they are rated for some 635/1290TBW. Similar numbers to a typical consumer SSD of 1TB/2TB, or enterprise ones half that size or less, but impressive for their capacity.

Latency is...still two orders of magnitude greater than that of DRAM. Though most of that is probably the interface.

Still an imperfect solution for a problem that you'd have to squint to find right now. They'd better not get too expensive.

PCWarrior

escksu said: Hmm, I am not sure if it's really worth it. Although 6 DWPD seems incredible, the drive is just 118GB. 6 drive writes = 708GB a day. 5yrs = 1.3TB

You got your units mixed up, buddy. True, for the 118GB drive, 6 drive writes per day is 708GB per day. But 5 years is 1825 days, so 1825 x 708GB = 1,292,100GB ≈ 1300TB ≈ 1.3PB. That is 1.3 petabytes, not terabytes. For reference, the 1TB 980 Pro (which is TLC despite the name Pro) has an endurance of 600TB and the 2TB 980 Pro has 1200TB.

escksu said: A 960GB drive can cache way more data than a 118GB one. The drive costs ~USD485. Even if the 118GB XPoint costs USD85, I don't think 400 makes a big difference to a server.

What you are suggesting is not really caching. You are essentially suggesting using the HDD (or the slower SSD) plus the fast SSD all for storage. For caching there is not much point in using a drive larger than 64GB, and that was the reason Intel's RST topped out for years at 64GB as the optimum capacity for caching. They have probably managed to extend that to 128GB, but in any case, if you use a larger-capacity drive as a cache, you will essentially be using that drive for storage, not for caching the bigger drive behind it. Also, if cost is not an issue, you can just buy more fast SSDs. You have to think big, though. If we are talking about a data centre with tens of thousands of drives, a 400-dollar difference per drive becomes a difference of several million dollars.

InvalidError

PCWarrior said: For caching there is not much point using a larger drive than 64GB

Depends on what you are caching and why. If you occasionally run simulations or other workloads that have a huge memory footprint and aren't particularly performance-critical because you just need to be able to complete them, I could imagine spending $90 on a caching SSD, for a system that can handle everything else you need to do with 32GB of DDR4, being a far more attractive option than getting 128+GB of DDR4 and, if necessary, a quad-channel platform that supports it.

I can also imagine those drives being an attractive option for people with drive endurance anxiety. With one of those, most normal people will never need to worry about boot+swap+hibernation+browser state saves+etc. wearing it down by any meaningful amount through the system's useful life. My 500GB 860 EVO got the bulk of its 3% wear in less than a year from browser state saves.

bambinone

Hello, new ZFS SLOG devices. I'll take two! When and where can I buy them and how much will they cost?!

Kamen Rider Blade

So how much do they cost, and where do I get it?

I want that ultra-low latency at QD1 and QD2, along with consistent latency and random IOPS performance no matter how full the drive is.

My OS drive doesn't need to be a linear performance monster, I can get another SSD for that purpose.

DPantigoso

Hey guys, sorry for the necro.

New user here, but I'm looking for a new boot drive.

Does the ultra-low latency mean that my Windows experience will be snappier? I'm a power user with multiple browser windows open while coding, then playing a game when I hit a wall, and back.

I use 3 144Hz monitors (1440p, 1440p 21:9, 1080p).

PC Specs are as follows:

CPU: Ryzen 7 7700X

RAM: XPG 6000Mbps @ CL40

Motherboard: ASUS ROG X670E-E Gaming WiFi

GPU: MSI Gaming X Trio RTX 4070 Ti

Boot SSD: 960 Pro

Would this drive be better for boot over a 1TB 990 Pro?

Thanks in advance for your help :)

cyrusfox

DPantigoso said: Would this drive be better for boot over a 1TB 990 Pro?

I run a 960GB 905P and have a P1600X 118GB. At low queue depths nothing beats it. The biggest issue with the 118GB is that you are quite limited in what you can install on it.

It may feel a bit faster, will not always be perceptible, will largely be comparable to the 990 Pro, and will lose at sequential read/write. The bigger point/issue is the limited space which means managing where Windows puts downloads and other items so you don't prematurely fill the drive.

It's great for endurance and speed, but I find 118GB is a bit too small. Also they are not power efficient... expect it to idle at 50°C with a passive heat sink on it.

I was a giant Optane proponent and have owned nearly all of them except the P5800X. Unless the price is unbelievable, I think the money is better saved or put somewhere else. It's continually on fire sale: get it now, or pick it up used for less in the future.

DPantigoso

Thanks for the info! Given that I'll only use it for boot and will use my other drives for other stuff, I think I'll take the leap :)

You mentioned sequential read/write, but does that impact the OS experience? I really only plan on installing the OS on the C: drive

cyrusfox

DPantigoso said: You mentioned sequential read/write, but does that impact the OS experience? I really only plan on installing the OS on the C: drive

No, sequential read/write limitations are only felt when copying or moving large files. You will not run into them in day-to-day OS operation.

The P1600X can still manage 1870/1050 MB/s sequential read/write. So if you are moving massive files to or from another PCIe drive, it may bottleneck performance. At worst, the transfer will finish in less than 118 seconds (a full drive fill), so I wouldn't worry about it.
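As a rough check on that estimate, the time to move a full drive's worth of data at the rated sequential speeds can be worked out directly. This is a minimal Python sketch, assuming decimal units (1 GB = 1000 MB) and that the drive sustains its rated speed for the whole transfer, which real copies may not. The function name `seconds_to_transfer` is hypothetical.

```python
def seconds_to_transfer(size_gb: float, speed_mb_s: float) -> float:
    """Time in seconds to move size_gb at a sustained speed_mb_s.

    Assumes decimal units (1 GB = 1000 MB) and a constant transfer rate.
    """
    return size_gb * 1000 / speed_mb_s


# Full 118GB drive at the rated sequential write and read speeds.
fill_seconds = seconds_to_transfer(118, 1050)
read_seconds = seconds_to_transfer(118, 1870)
print(f"Full write: {fill_seconds:.0f} s, full read: {read_seconds:.0f} s")
```

Both figures come in under the 118 seconds mentioned above, which corresponds to a round 1000 MB/s.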