Intel Cancels Omni-Path 200 Fabric and Stops Development

Intel has canceled its plans for the second-generation Omni-Path Architecture fabric for high-performance computing (HPC), known as the OPA200 series, which the company announced last year. Intel will continue to support OPA100, although its availability has reportedly changed to build-to-order, and the future of Omni-Path looks bleak.

Intel confirmed the cancellation to CRN on Wednesday, after two solution providers told CRN that Intel had informed some partners of it. Jennifer Huffstetler, vice president of data center product management and storage, gave CRN the following statement: “We see connectivity as a critical pillar in delivering the performance and scalability for a modern data center. We're continuing our investment there while we will no longer support the Omni-Path 200 series. We are continuing to see uptake in the HPC portfolio for OPA100.”

The partners said that they are not aware of the company working on an alternative product line that could replace the Omni-Path Architecture interconnect. So although Intel continues to support OPA100, it would fall behind the leading edge in HPC interconnects: Mellanox started shipping its 200Gb/s InfiniBand HDR solution this year, with switches, cables and ConnectX-6 adapters. NVIDIA agreed to acquire Mellanox earlier this year, with Intel being one of the other bidders.

When asked about a possible replacement, Huffstetler pointed to Ethernet: “We are evaluating options for extending the capabilities for high-performance Ethernet switches that we can expand the ability to meet the growing needs of HPC and AI. More to come.” This could indicate that Intel wants to leverage its Ethernet portfolio as a replacement interconnect for HPC. Currently, InfiniBand is the most widely used interconnect for HPC workloads (followed by Omni-Path), while Ethernet is more common in the cloud and has little presence in HPC.

Earlier this year, Intel announced its Columbiaville 800 Series 100Gb/s Ethernet adapter, slated for a Q3 launch, in a bid to catch up with the leading edge. (Mellanox has been offering 200GbE for several years now.) In June, Intel entered the Ethernet switch market by announcing its acquisition of Barefoot Networks.

CRN’s sources also said that Intel had changed the availability status of OPA100: it is now reportedly producing the parts on a build-to-order basis, which could mean long lead times and delays. However, Huffstetler would not confirm that directly: “I can speak to the fact that we are continuing to sell, maintain, and support that product line.”

However, the absence of a roadmap for Omni-Path will likely discourage partners from investing further in that product line. Knights Landing and Knights Mill, from Intel’s other former key HPC product line, likely faced similar difficulties after the cancellation of the 10nm Knights Hill.


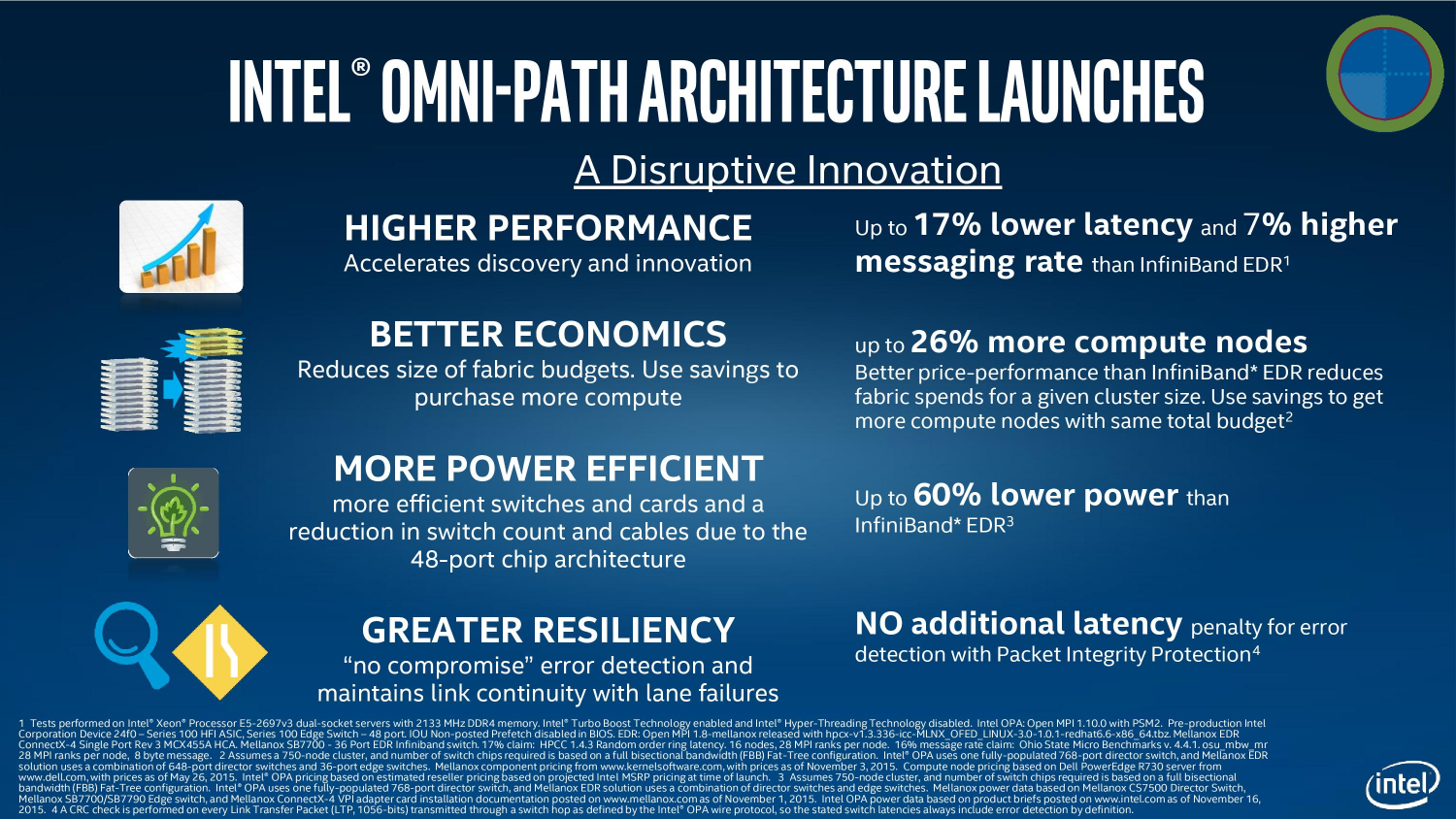

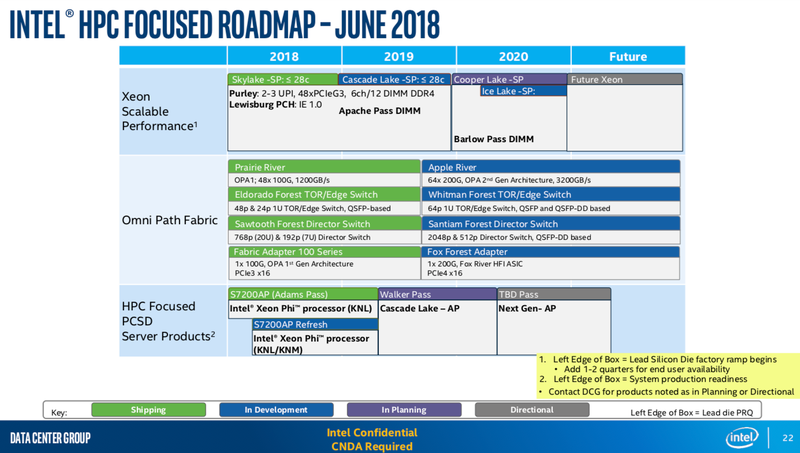

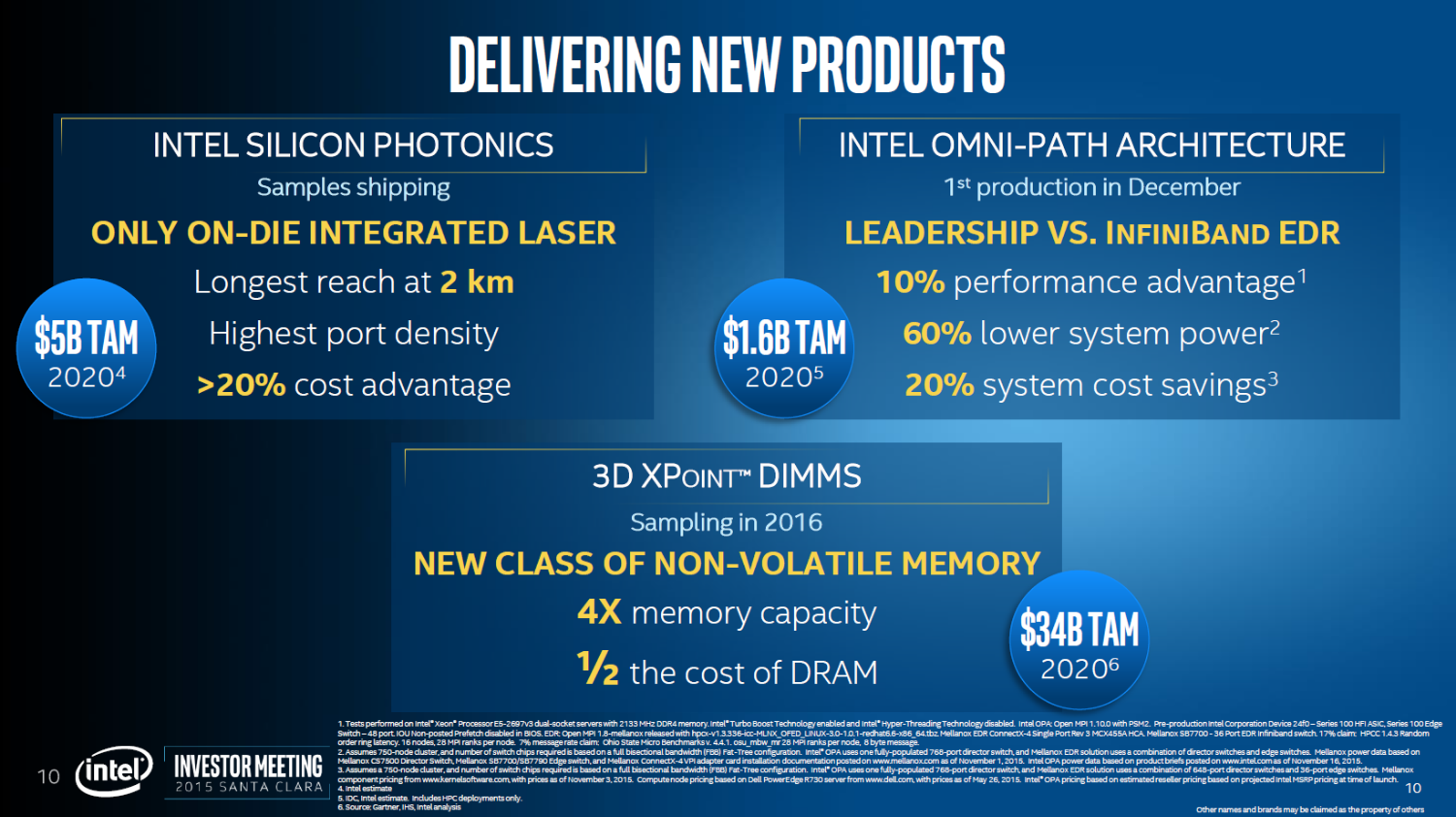

Intel launched the OPA100 fabric for HPC in conjunction with Knights Landing in 2016. Last year at the International Supercomputing Conference, Intel teased the 200Gbps OPA200 as coming in 2019. This was subsequently confirmed by a leaked HPC roadmap that indicated a launch in the second half of the year. Besides twice the bandwidth, the number of ports per switch was also going to increase from 48 to 64. OPA100 was qualified to handle 16,000 nodes, but OPA200 would increase that to “tens of thousands” of nodes. “We believe that we will have an extensible architecture for switches that we can carry forward,” said Yaworski, director of product marketing for high-performance fabrics and networking at Intel.

However, over the course of the year, scattered signs pointed to Intel going quiet on Omni-Path. For one, while there had been dedicated Skylake-SP SKUs with integrated Omni-Path fabric support, those SKUs were absent from Cascade Lake-SP. Moreover, Omni-Path wasn’t even mentioned during Intel’s big April data-centric launch event, nor at subsequent HPC events, leading some to question its future. That question has now been answered.

In general, the Omni-Path Architecture HPC fabric had been one of Intel’s supposed growth drivers in the data center. It was one of the ways in which Intel sought to play a role in that market beyond merely delivering the CPU; Intel categorizes these as its adjacency businesses. However, the growth of those businesses in recent years has been somewhat below its long-term projections.


bit_user I wonder how much the rise of EPYC had to do with the demise of OmniPath. If you have any reason to think you won't be running a 100% Intel stack, then it must call into question the idea of buying into Intel's interconnect technology.

DavidC1 I think OmniPath died because it debuted with Knights Landing processors and when that lineup got cancelled, the roadmap became blurry as that caused the delay in future OmniPath generations.

Also, OmniPath 200 needs PCIe 4 interconnect to allow such bandwidth. There are no such Intel processors.

bit_user

DavidC1 said: Also, OmniPath 200 needs PCIe 4 interconnect to allow such bandwidth. There are no such Intel processors.

Technically, PCIe 3.0 can bond up to 32 lanes. So, that probably explains a lot about why they had some CPUs with it integrated in-package.

But, that's a really good point about connectivity. I hadn't considered that. However, here's a PCIe 3.0 x8 Omni-Path 100 host adapter and a x16 dual-port card:

https://ark.intel.com/content/www/us/en/ark/products/92004/intel-omni-path-host-fabric-interface-adapter-100-series-1-port-pcie-x8.html

https://ark.intel.com/content/www/us/en/ark/products/92008/intel-omni-path-host-fabric-interface-adapter-100-series-2-port-split-pcie-x16.html

The dual-port makes more sense, since you might not have a full load on both ports, in the same direction, at the same time. But their willingness to offer a x8 single-port adapter shows they're not too concerned about being bus-bottlenecked, even in a simple uni-directional sense.

Thinking about it some more, if Infiniband can already offer 200 Gbps per direction, that puts Intel in a really bad spot, with their lack of PCIe 4. POWER has had PCIe 4 for over a year and now AMD is out there.
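For anyone who wants to check the bus arithmetic in this thread, here is a rough sketch. It assumes the published per-lane rates (8 GT/s for PCIe 3.0, 16 GT/s for PCIe 4.0) with 128b/130b encoding, and it ignores packet and protocol overhead, so real-world throughput would be somewhat lower. The function names `pcie_gbps` and `can_feed` are made up for this illustration.

```python
# Back-of-the-envelope PCIe bandwidth per direction.
# Only line-encoding overhead is modeled; TLP/protocol overhead is ignored,
# so these figures are upper bounds, not measured throughput.

# generation -> (transfer rate per lane in GT/s, encoding efficiency)
PCIE_GENERATIONS = {
    "3.0": (8.0, 128 / 130),
    "4.0": (16.0, 128 / 130),
}


def pcie_gbps(generation: str, lanes: int) -> float:
    """Approximate usable bandwidth per direction, in Gb/s."""
    rate_gt_s, efficiency = PCIE_GENERATIONS[generation]
    return rate_gt_s * efficiency * lanes


def can_feed(generation: str, lanes: int, port_gbps: float, ports: int = 1) -> bool:
    """True if the host link can keep `ports` fabric ports busy in one direction."""
    return pcie_gbps(generation, lanes) >= port_gbps * ports


if __name__ == "__main__":
    for generation, lanes in [("3.0", 8), ("3.0", 16), ("3.0", 32), ("4.0", 16)]:
        bandwidth = pcie_gbps(generation, lanes)
        print(
            f"PCIe {generation} x{lanes}: {bandwidth:6.1f} Gb/s | "
            f"feeds 100G port: {can_feed(generation, lanes, 100)} | "
            f"feeds 200G port: {can_feed(generation, lanes, 200)}"
        )
```

Under these assumptions, a PCIe 3.0 x8 link tops out around 63 Gb/s and a x16 link around 126 Gb/s, so neither can saturate a 200 Gb/s port. It takes either 32 bonded PCIe 3.0 lanes or a PCIe 4.0 x16 link to clear 200 Gb/s per direction.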