Nvidia Preps A100 GPU with 80GB of HBM2E Memory

80 Giga...what?

Nvidia recently listed a yet-unannounced version of its A100 compute GPU with 80GB of HBM2E memory in a standard full-length, full-height (FLFH) card form factor, meaning that this beastly GPU drops into a PCIe slot just like a 'regular' GPU. Because Nvidia's compute GPUs like the A100 and V100 are mainly aimed at servers in cloud data centers, Nvidia prioritizes the SXM versions (which mount on a motherboard) over regular PCIe versions. That doesn't mean the company lacks leading-edge GPUs in a regular PCIe card form factor, though.

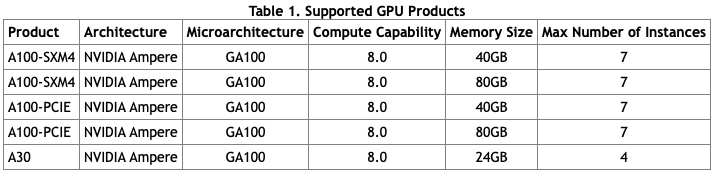

Nvidia's A100-PCIe accelerator based on the GA100 GPU with 6912 CUDA cores and 80GB of HBM2E ECC memory (featuring 2TB/s of bandwidth) will have the same capabilities as the company's A100-SXM4 accelerator with 80GB of memory, at least as far as compute capability (version 8.0) and virtualization/instance capabilities (up to seven instances) are concerned. There will, of course, be differences in power limits.

Nvidia has not officially introduced its A100-PCIe 80GB HBM2E compute card, but since it is listed in an official document found by VideoCardz, we can expect the company to launch it in the coming months. Because the card has not launched yet, its actual pricing is unknown. CDW's partners offer A100 PCIe cards with 40GB of memory for $15,849 to $27,113, depending on the reseller, so it is pretty obvious that an 80GB version will cost more than that.

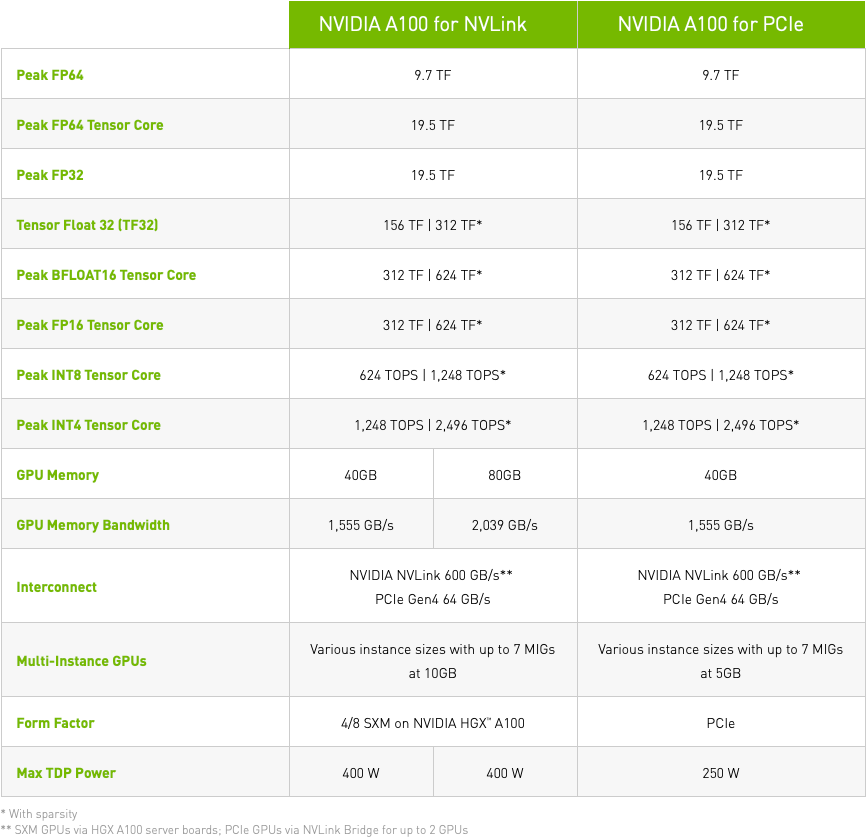

Nvidia's proprietary SXM compute GPU form factor has several advantages over regular PCIe cards. Nvidia's latest A100-SXM4 modules support a maximum thermal design power (TDP) of up to 400W (for both the 40GB and 80GB versions), since it is easier to supply the necessary amount of power to such modules and easier to cool them (for example, using a refrigerant cooling system in the latest DGX Station A100). In contrast, Nvidia's A100 PCIe cards are rated for up to 250W. In return, the PCIe cards can be used inside rack servers as well as in high-end workstations.

Nvidia's cloud data center customers seem to prefer SXM4 modules over cards. As a result, Nvidia first launched its A100-SXM4 40GB HBM2 module (with 1.6TB/s of bandwidth) last year and followed up with a PCIe card version several months later. The company likewise introduced its A100-SXM4 80GB module (with faster HBM2E) last November but only started shipping it fairly recently.
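To put the two bandwidth figures quoted above (roughly 1.6TB/s for the 40GB module and 2TB/s for the 80GB parts) in perspective, here is a rough back-of-the-envelope sketch of the per-pin memory data rate they imply. The 5120-bit memory interface width is an assumption that does not come from this article, the function name is purely illustrative, and the inputs are the rounded figures above, so treat the results as approximate.

```python
# Assumption (not stated in the article): the A100 memory interface is 5120 bits wide.
BUS_WIDTH_BITS = 5120


def per_pin_gbps(bandwidth_gb_per_s: float, bus_width_bits: int = BUS_WIDTH_BITS) -> float:
    """Return the per-pin data rate (Gbit/s) implied by a total bandwidth in GB/s."""
    # Total bits per second divided by the number of data pins.
    return bandwidth_gb_per_s * 8 / bus_width_bits


# Rounded bandwidth figures quoted in the article, taking 1 TB/s as 1000 GB/s.
quoted_bandwidth_gb_per_s = {
    "A100 40GB": 1600.0,
    "A100 80GB": 2000.0,
}

for name, bandwidth in quoted_bandwidth_gb_per_s.items():
    print(f"{name}: ~{per_pin_gbps(bandwidth):.2f} Gbit/s per pin")
```

Under that assumption, the 80GB version's memory would need to run about 25% faster per pin than the 40GB version's, which is consistent with the move to faster memory described above.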


Anton Shilov is a contributing writer at Tom’s Hardware. Over the past couple of decades, he has covered everything from CPUs and GPUs to supercomputers and from modern process technologies and latest fab tools to high-tech industry trends.