Stable Diffusion Optimized for Intel Silicon Boosts Arc A770 Performance by 54%

Create AI-generated images even faster


Automatic1111's Stable Diffusion WebUI now works with Intel GPU hardware, thanks to the integration of Intel's OpenVINO toolkit, which optimizes AI models to run on Intel hardware. We've retested the latest release of Stable Diffusion to see how much faster Intel's GPUs are than in our previous results, and found gains of 40 to 55 percent.

Stable Diffusion is a deep-learning AI model used to generate images from text descriptions. (Our main Stable Diffusion benchmarks article still reflects our previous testing, though we're working on updating the results.) What makes Stable Diffusion special is its ability to run on local consumer hardware. The AI community has built plenty of projects around it, and Stable Diffusion WebUI is the most popular, providing a browser interface that's easy to use and experiment with.

After months of work in the background (we've been hearing rumblings of this for a while now), the latest updates are now available for Intel Arc owners and provide a substantial boost to performance. Note that AMD GPUs have also gained better support and improved performance in the Automatic1111 project; this article focuses specifically on Intel GPU support, but we'll revisit AMD in more detail in the future.

Check out the Stable Diffusion A1111 webui for Intel Silicon. Works with my A770 or can run on your CPU or iGPU. It's powered by OpenVINO, so its optimized. 😃 Example of image on the right, pure prompting. Left is same image with increase detail on the eyes using InPainting.… pic.twitter.com/zpbQOMvJF3 (August 17, 2023)

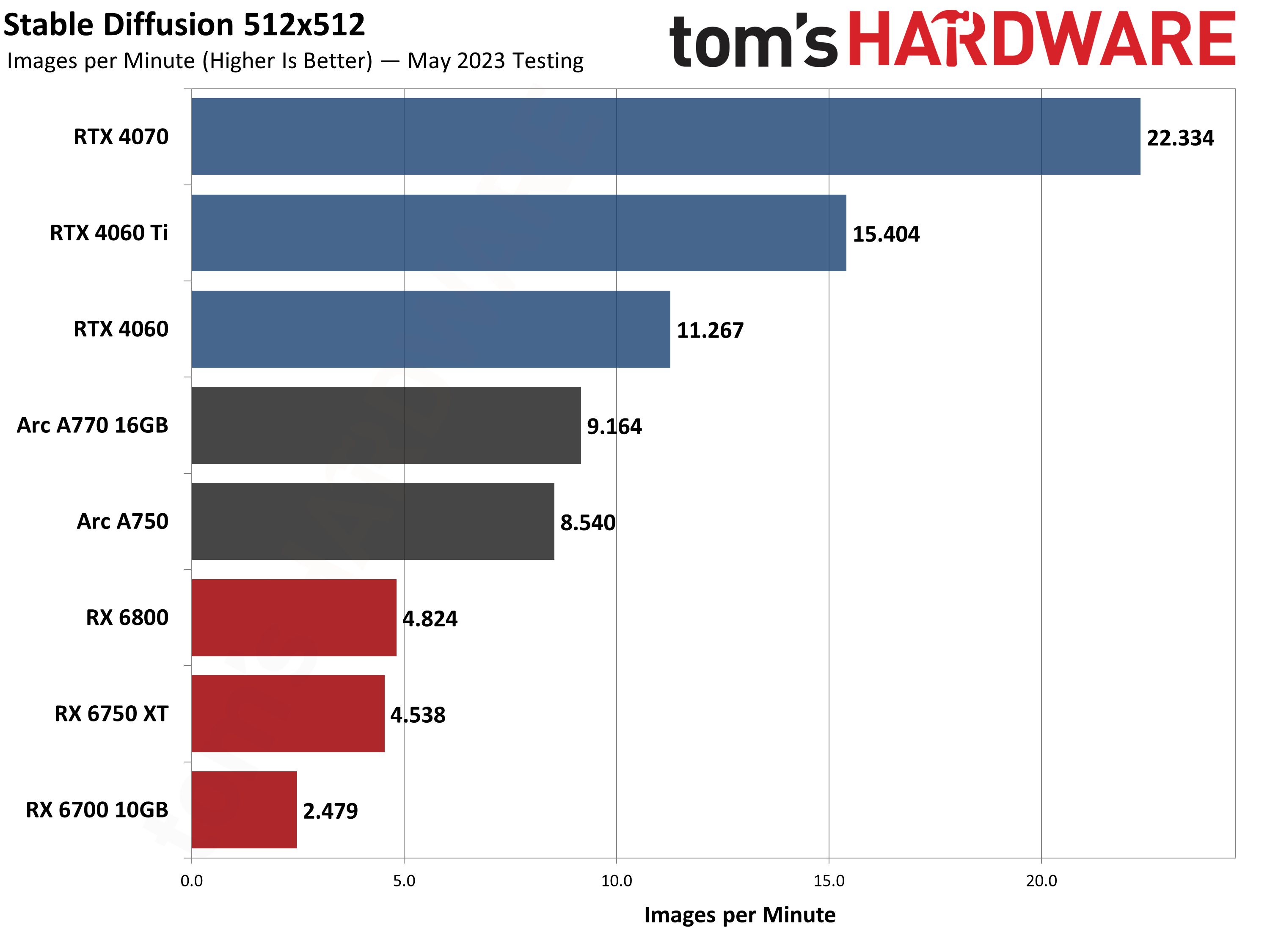

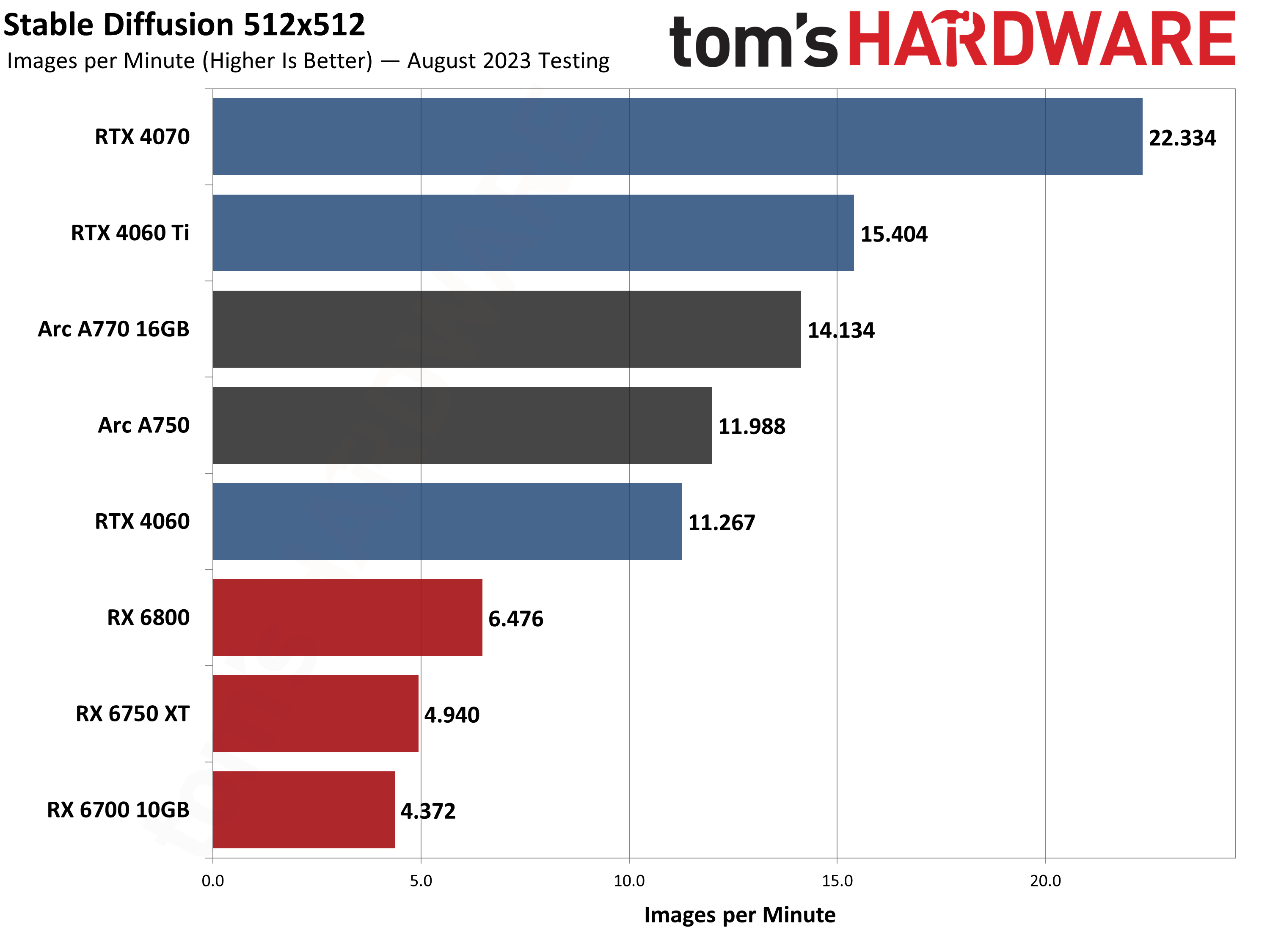

Here are the results of our previous and updated testing of Stable Diffusion. For our previous testing we used a slightly tweaked version of Stable Diffusion OpenVINO; for the new results we used the OpenVINO fork of the Automatic1111 WebUI. We also retested several of AMD's GPUs with a newer build of Nod.ai's Shark-based Stable Diffusion. The Nvidia results haven't been updated, though we'll look at retesting with the latest version in the near future (and update the main Stable Diffusion benchmarks article when we're finished).

We also changed our prompt, which makes the new tests generally more demanding. (The new prompt is "messy room," which tends to produce images with lots of tiny details that require more effort for the AI to generate.) Results vary between runs, and some caveats currently apply specifically to Arc, but here are the before/after results.

The Intel Arc and AMD GPUs all show improved performance, with most delivering significant gains. The Arc A770 16GB improved by 54%, while the A750 improved by 40% in the same scenario. (Note that we tested with the Intel Arc A770 Limited Edition card, which is now discontinued, though Acer, ASRock, Sparkle, and Gunnir still offer A770 cards in both 16GB and 8GB variants.)

Nod.ai hasn't been sitting still either. AMD's RX 6800, RX 6750 XT, and RX 6700 10GB are all faster. The RX 6800 gained 34%, and the RX 6700 10GB saw an even larger 76% improvement. The RX 6750 XT, however, gained only 9%, and we're not sure why, since all three AMD GPUs share the same RDNA2 architecture. (Again, we'll be retesting other GPUs; this is merely a snapshot in time using what was readily available and working at the time of writing.)
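The percentages above are relative throughput gains. A minimal sketch of the arithmetic, assuming throughput is measured in images per minute; the input values below are hypothetical placeholders, not our measured results, and `percent_gain` and `time_saved` are illustrative helper names:

```python
def percent_gain(before: float, after: float) -> float:
    """Relative throughput improvement of `after` over `before`, in percent."""
    if before <= 0:
        raise ValueError("baseline throughput must be positive")
    return (after - before) / before * 100.0


def time_saved(gain_pct: float) -> float:
    """Fraction of per-image generation time saved for a given throughput gain."""
    return 1 - 1 / (1 + gain_pct / 100)


# Hypothetical placeholder values, NOT measured results:
# a card going from 10.0 to 15.4 images per minute.
gain = percent_gain(10.0, 15.4)
print(f"{gain:.0f}% faster, {time_saved(gain) * 100:.0f}% less time per image")
# prints: 54% faster, 35% less time per image
```

In other words, a 54% throughput gain cuts the time per image by about 35%, not 54%.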

We did not retest the three Nvidia RTX 40-series GPUs, which is why the performance statistics remain identical between the two graphs. Even so, with the new OpenVINO optimizations, Intel's Arc A750 and A770 are now able to outperform the RTX 4060, and the A770 16GB is close behind the RTX 4060 Ti.

There's still plenty of ongoing work, including making installation more straightforward and fixing support for other image resolutions and Stable Diffusion models. We had to rely on the default "v1-5-pruned-emaonly.safetensors" model, as the newer "v2-1_512-ema-pruned.safetensors" and "v2-1_768-ema-pruned.safetensors" failed to generate meaningful output. Also, 768x768 generation currently fails on Arc GPUs: we could go up to 720x720, but 744x744 switched to CPU-based generation. We're told a fix for 768x768 support should be coming relatively soon, so Arc users should keep an eye out for that update.

Update, 8/17/2023: The fix is live. To get 768x768 working, go to the directory where you've installed Stable Diffusion OpenVINO, run "venv\Scripts\activate" and then "pip install --pre openvino==2023.1.0.dev20230811", and generation of higher-resolution images should work. We successfully tested 768x768 on an A750, where previously even the A770 16GB failed and seemed to run out of VRAM.
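For readers who prefer to script the OpenVINO update, here is a minimal sketch that builds and runs the same pip command. Rather than activating the venv, it calls the venv's own Python interpreter with `-m pip`, which installs into that venv just as activating it first would. The interpreter paths (`venv\Scripts\python.exe` on Windows, `venv/bin/python` elsewhere) follow the standard Python venv layout and are an assumption about your install; `openvino_upgrade_cmd` and `upgrade_openvino` are hypothetical helper names.

```python
import subprocess
import sys
from pathlib import Path

# Pre-release build named in the 8/17/2023 update.
OPENVINO_SPEC = "openvino==2023.1.0.dev20230811"


def venv_python(install_dir: Path, windows: bool = sys.platform == "win32") -> Path:
    """Path to the interpreter inside the install's `venv` folder (standard venv layout assumed)."""
    if windows:
        # Windows layout, the same folder that holds venv\Scripts\activate
        return install_dir / "venv" / "Scripts" / "python.exe"
    return install_dir / "venv" / "bin" / "python"


def openvino_upgrade_cmd(install_dir: Path, windows: bool = sys.platform == "win32") -> list[str]:
    """Equivalent of activating the venv and running `pip install --pre <spec>`."""
    return [str(venv_python(install_dir, windows)), "-m", "pip", "install", "--pre", OPENVINO_SPEC]


def upgrade_openvino(install_dir: Path) -> None:
    """Run the upgrade; raises CalledProcessError if pip fails."""
    subprocess.run(openvino_upgrade_cmd(install_dir), check=True)
```

For example, call `upgrade_openvino(Path(r"C:\stable-diffusion-webui"))`, replacing the hypothetical path with the folder where you installed Stable Diffusion OpenVINO.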

Update, 8/18/2023: A second fix is now available, which enables v2-1_512-base support alongside the 768x768 support described above. (Support for v2-1_768 is still being worked on.) In short, download the v2-1_512-ema-pruned.safetensors file and put it in the models folder. You should now be able to select the appropriate model and configuration in Stable Diffusion.
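A minimal sketch of the model-install step, assuming the OpenVINO fork keeps upstream Automatic1111's layout, where checkpoints live in `models/Stable-diffusion` inside the WebUI folder (verify this against your own install before relying on it); `install_model` is a hypothetical helper name:

```python
import shutil
from pathlib import Path

MODEL_FILE = "v2-1_512-ema-pruned.safetensors"


def install_model(downloaded: Path, webui_dir: Path) -> Path:
    """Copy a downloaded checkpoint into the WebUI's checkpoint folder.

    ASSUMPTION: checkpoints go in models/Stable-diffusion, as in upstream
    Automatic1111; check your fork's folder layout first.
    """
    if downloaded.suffix != ".safetensors":
        raise ValueError(f"expected a .safetensors file, got {downloaded.name}")
    target_dir = webui_dir / "models" / "Stable-diffusion"
    target_dir.mkdir(parents=True, exist_ok=True)
    target = target_dir / downloaded.name
    shutil.copy2(downloaded, target)  # copy2 also preserves file timestamps
    return target
```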


Aaron Klotz is a contributing writer for Tom’s Hardware, covering news related to computer hardware such as CPUs and graphics cards.

-

Nuullll: https://github.com/vladmandic/automatic (SD.Next) has also added OpenVINO support (with the Diffusers pipeline) for Intel silicon, thanks to @Disty0's great work: https://github.com/vladmandic/automatic/commit/86ae8175e0a8cf9e645c283ad46f51c5d5e3ecdd

Now SD.Next supports both IPEX and OpenVINO backends for Intel GPUs!

-

Disty0: You can get 29 images per minute with an A770 if you use higher batch sizes.

Arc GPUs don't really like low resolutions.

Using SD.Next WebUI on Linux with the SD 1.5 model and the Diffusers backend:

512x512, Batch Size 32, Steps 20:

Time taken: 1m 5.19s | GPU active 6269 MB reserved 7274 MB | System peak 4286 MB total 16288 MB

[Screenshot: Diffusers settings]
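Disty0's "29 images per minute" figure can be checked against the log above. A short sketch, assuming the run produced a single batch of 32 images (the comment gives the batch size but not the batch count) and that "Time taken" covers that whole batch; `parse_time_taken` and `images_per_minute` are hypothetical helper names:

```python
import re


def parse_time_taken(text: str) -> float:
    """Convert a WebUI duration such as '1m 5.19s' or '42s' to seconds."""
    m = re.fullmatch(r"\s*(?:(\d+)m)?\s*(?:(\d+(?:\.\d+)?)s)?\s*", text)
    if m is None or not any(m.groups()):
        raise ValueError(f"unrecognized duration: {text!r}")
    minutes = int(m.group(1) or 0)
    seconds = float(m.group(2) or 0.0)
    return minutes * 60 + seconds


def images_per_minute(images: int, seconds: float) -> float:
    """Throughput for `images` generated in `seconds`."""
    return images / seconds * 60


# Assumed: one batch of 32 images at 512x512, 20 steps, in "1m 5.19s".
rate = images_per_minute(32, parse_time_taken("1m 5.19s"))
print(f"{rate:.1f} images per minute")  # prints: 29.5 images per minute
```

The result, roughly 29.5 images per minute, is consistent with the figure quoted in the comment.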

-

Disty0:

NineMeow said: Can I train Lyco models using Arc (oneAPI)?

Yes: https://github.com/bmaltais/kohya_ss/pull/1499