Intel's Granite Rapids listed with huge L3 cache upgrade to tackle AMD EPYC - software development emulator spills the details

Compared to Emerald Rapids' 320MB of L3 cache, Granite Rapids is expected to offer 480MB, a solid 1.5x increase

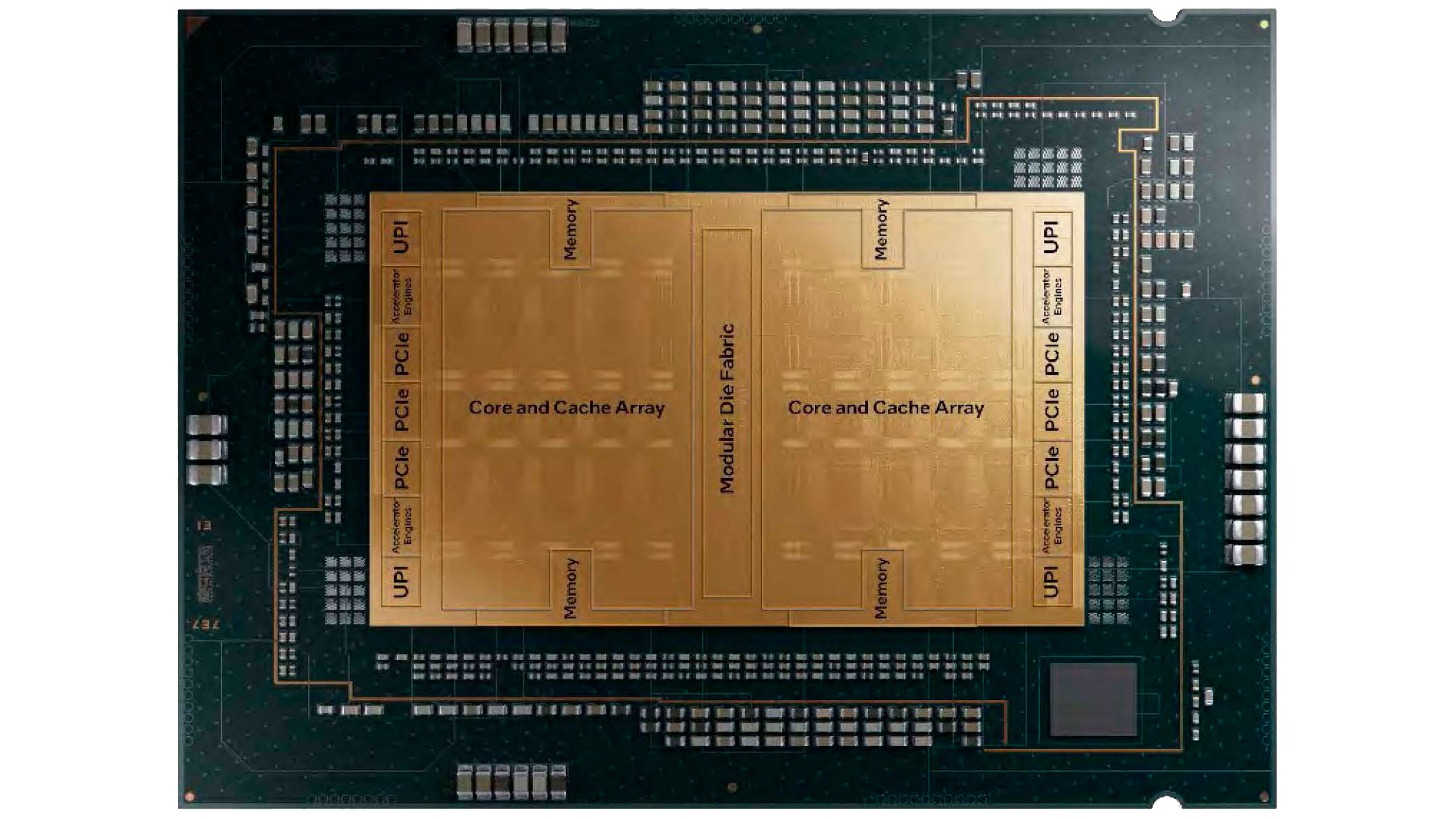

As observed by InstLatX64, the latest update to Intel's Software Development Emulator (SDE) has given us an intriguing look at the L3 cache specification of Intel's upcoming Granite Rapids Xeon CPUs. Specifically, Intel SDE lists Granite Rapids with 480MB of L3 cache, compared to Emerald Rapids' 320MB.

When we reviewed the Emerald Rapids-based Intel Xeon Platinum 8592+ CPU late last year, we determined that its tripled L3 cache compared to past generations contributed significantly to its gains in AI inference, data center, video encoding, and general compute workloads. While AMD EPYC generally remains the player to beat in the enterprise CPU space, Emerald Rapids marks a significant improvement from Intel's side of that battlefield, especially in AI workloads and multi-core performance in general.

So, what does Granite Rapids providing a further boost to the L3 cache mean in practical terms? Until we get to test the CPUs for ourselves, we can't be completely sure. Keep in mind that a shared L3 cache on a CPU corresponds to the level of cache that's shared by and accessible to all CPU cores on the die, whereas lower levels of cache (particularly L1) are usually private to individual cores or shared only by small groups of cores.

It's reasonable to expect that Intel wants to remain competitive in the ever-essential server CPU space (the only market where Intel and AMD can get away with selling a few dozen CPU cores for thousands of dollars), and leveraging L3 cache seemed to improve its competitive performance last time around. It seems they're hoping that trend continues with this boost to Granite Rapids' L3 cache ahead of its projected 2024 release.

As things currently stand, it's hard to tell whether or not Intel will be able to truly push against AMD's lead in multi-core throughput in the server space. You might think AMD is good at throwing a bunch of cores at a problem on desktops, but it has truly mastered this methodology in the data center.

However, Emerald Rapids' L3 cache improvement still managed to push Intel's Xeon CPUs into competition with EPYC Bergamo chips while technically being a refresh cycle, with solid wins in most AI workloads we tested, and at least comparable performance in most other benchmarks. Since Granite Rapids will pair an even larger L3 cache with the new Intel 3 process node, the performance gain for Xeon's next generation could be among Intel's strongest leaps in server CPUs in quite a while.


Christopher Harper has been a successful freelance tech writer specializing in PC hardware and gaming since 2015, and ghostwrote for various B2B clients in High School before that. Outside of work, Christopher is best known to friends and rivals as an active competitive player in various eSports (particularly fighting games and arena shooters) and a purveyor of music ranging from Jimi Hendrix to Killer Mike to the Sonic Adventure 2 soundtrack.


-Fran-

Ugh... So we're doing this dance, uh?

What matters is how much L3/L4/eRAM/etc amounts each core has access to (I don't remember ever seeing L2 shared and even less L1) and the associated latency. Saying it has more of it, while positive, is hardly something to be excited about, sorry.

Still, an improvement is an improvement, so good on Intel for trying to bring competition.

Regards.

bit_user

> -Fran- said: What matters is how much L3/L4/eRAM/etc amounts each core has access to

Pretty much what I was going to say. If you're increasing core counts, then you obviously have to increase L3 commensurately!

In Tom's own reporting, they quoted Granite Rapids at 84-90 cores.

https://www.tomshardware.com/news/intel-displays-granite-rapids-cpus-as-specs-leak-five-chiplets

That's 1.3x to 1.4x as many cores as Emerald Rapids. Hence, we would logically expect 420 to 450 MiB of L3 cache, if they merely wanted to keep the same per-core cache levels.

480 MiB is only 14.3% to 6.7% above those estimates. So, it wouldn't represent a very significant increase in per-core L3, if the core count estimates are correct.
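The per-core arithmetic above can be checked with a short Python sketch. It assumes a 64-core Emerald Rapids baseline (the Xeon Platinum 8592+ figure cited further down the thread) and the leaked 84-90 core range, which is unconfirmed. The constant and function names are hypothetical and only for illustration.

```python
# Figures quoted in the article and thread. The Granite Rapids core counts
# are leaked estimates, not confirmed specifications.
EMR_CORES = 64            # Xeon Platinum 8592+ (Emerald Rapids)
EMR_L3_MIB = 320
GNR_L3_MIB = 480
GNR_CORE_ESTIMATES = (84, 90)


def l3_scaling(gnr_cores):
    """Return (core ratio, L3 needed to keep per-core L3 flat, % surplus of 480 MiB)."""
    core_ratio = gnr_cores / EMR_CORES
    break_even_l3 = EMR_L3_MIB * core_ratio
    surplus_pct = (GNR_L3_MIB / break_even_l3 - 1) * 100
    return core_ratio, break_even_l3, surplus_pct


for cores in GNR_CORE_ESTIMATES:
    ratio, needed, surplus = l3_scaling(cores)
    print(f"{cores} cores: {ratio:.2f}x the cores, "
          f"{needed:.0f} MiB keeps per-core L3 flat, "
          f"480 MiB is {surplus:.1f}% above that")
```

If the final core count lands outside the 84-90 range, only `GNR_CORE_ESTIMATES` needs to change.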

bit_user

> Keep in mind that a shared L3 cache on a CPU corresponds to the level of cache that's shared by and accessible to all CPU cores on the die

It's not such a strict definition, actually. For instance, AMD limits the scope of L3 to a single chiplet. That makes it a little tricky to compare raw L3 quantities between EPYC and Xeon.

> Emerald Rapids' L3 cache improvement still managed to push Intel's Xeon CPUs into competition with EPYC Bergamo chips while technically being a refresh cycle, with solid wins in most AI workloads we tested, and at least comparable performance in most other benchmarks.

Eh, not really. The AI benchmarks were largely due to the presence of AMX, in the Xeons. With that, even Sapphire Rapids could outperform Zen 4 EPYC (Genoa) on such tasks.

As for the other benchmarks you list, some exhibit poor multi-core scaling, which is why Phoronix excluded them from his test suite. If you check the geomeans, on the last page of this article, it's a bloodbath (and the blood is blue):

https://www.phoronix.com/review/intel-xeon-platinum-8592

It's telling that AMD didn't even need raw core-count to win. Look at the EPYC 9554 vs. Xeon 8592+. Both are 64-core CPUs, rated at 360 (EPYC) and 350 (Xeon) Watts. The EPYC beats the Xeon by 3.6% in 2P configuration and loses by just 2.2% in a 1P setup. That speaks volumes to how well AMD executed on this generation. Also, the EPYC averaged just 227.12 and 377.42 W in 1P and 2P configurations, respectively, while the Xeon burned 289.52 and 556.83 W. So, that seeming 10 W TDP advantage for the EPYC isn't decisive, in actual practice.

BTW, since this article is about cache, consider the EPYC 9554 has just 256 MiB of L3 cache, while the Xeon 8592+ has 320 MiB. So, even a decisive cache advantage wasn't enough to put Emerald Rapids solidly ahead of Genoa, on a per-core basis.
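For a rough sense of scale, the power and cache figures quoted in this comment can be turned into ratios with a few lines of Python. This is only a sketch using the numbers as stated above (they are not re-measured), and the dictionary and function names are hypothetical.

```python
# Average package power in watts and L3 sizes in MiB, as quoted in the comment.
AVG_POWER_W = {
    "EPYC 9554": {"1P": 227.12, "2P": 377.42},
    "Xeon 8592+": {"1P": 289.52, "2P": 556.83},
}
L3_MIB = {"EPYC 9554": 256, "Xeon 8592+": 320}
CORES_PER_SOCKET = 64  # both parts are 64-core CPUs


def xeon_extra_power_pct(config):
    """Percent more average power the Xeon drew than the EPYC in a 1P or 2P setup."""
    xeon = AVG_POWER_W["Xeon 8592+"][config]
    epyc = AVG_POWER_W["EPYC 9554"][config]
    return (xeon / epyc - 1) * 100


def l3_per_core(cpu):
    """L3 cache per core in MiB, dividing the total evenly across all cores."""
    return L3_MIB[cpu] / CORES_PER_SOCKET


for config in ("1P", "2P"):
    print(f"{config}: Xeon drew {xeon_extra_power_pct(config):.1f}% more power on average")
for cpu in L3_MIB:
    print(f"{cpu}: {l3_per_core(cpu):.1f} MiB of L3 per core")
```

Note that the even per-core split is a simplification: as mentioned earlier in this comment, AMD limits the scope of L3 to a single chiplet, so the per-core figure does not describe how much L3 a given core can actually reach.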

rluker5

> richardvday said: AMD innovates and Intel copies them. Nothing new same thing different day.

My 4980hq says hi. (134MB L3+L4 cache in 2014) Pretty sure that wasn't the first time cache was relatively increased by a lot, but it is a good enough example.

thestryker

> bit_user said: Also, the EPYC averaged just 227.12 and 377.42 W in 1P and 2P configurations, respectively, while the Xeon burned 289.52 and 556.83 W. So, that seeming 10 W TDP advantage for the EPYC isn't decisive, in actual practice.

Whatever monitoring they're using isn't picking up the IO die you can tell by the minimum power consumption numbers on the AMD side.

bit_user

> thestryker said: Whatever monitoring they're using isn't picking up the IO die you can tell by the minimum power consumption numbers on the AMD side.

The 9554 has a minimum power of 11.1 W and 25.6 W in 1P and 2P configurations, respectively. Why do you find that too far fetched, for CPU package idle power figures?

Yes, the minimum spec EPYC uses just 6.7 W, but that's also the Siena platform, with fewer max chiplets, memory channels, and I/O. Also, I think its socket might only support 1P configurations.

Then again, there are some pretty absurd max figures. So, maybe the power-sampling is a little wonky, when it comes to min/max. Perhaps it's derived from energy metrics, in which case noisy timestamps could result in artificially low/high figures, without invalidating the averages.

George³

> rluker5 said: My 4980hq says hi. (134MB L3+L4 cache in 2014) Pretty sure that wasn't the first time cache was relatively increased by a lot, but it is a good enough example.

Intel say 7.25MB L1+L2+L3 and nothing for e-dram? Maybe you have on mind another CPU?

thestryker

> bit_user said: The 9554 has a minimum power of 11.1 W and 25.6 W in 1P and 2P configurations, respectively. Why do you find that too far fetched, for CPU package idle power figures?

That would make it lower than Ryzen 7000 (desktop), not to mention that the best the IO die managed alone last gen was ~50W, and there's also no world where AMD's idle power consumption is lower than Intel's (this was a focus after SPR, as they had unusually high idle due to the way they did tiles). The cores themselves absolutely can get that low, as everything about the Zen 4 cores is fantastic for power consumption, but the IO die just isn't that efficient.

thestryker

> George³ said: Intel say 7.25MB L1+L2+L3 and nothing for e-dram? Maybe you have on mind another CPU?

Everything with Intel Iris Pro Graphics 5200 has 128MB eDRAM, which every Crystal Well part has, as far as I'm aware.