For Honor Performance Review

CPU & RAM Resources, And Conclusion

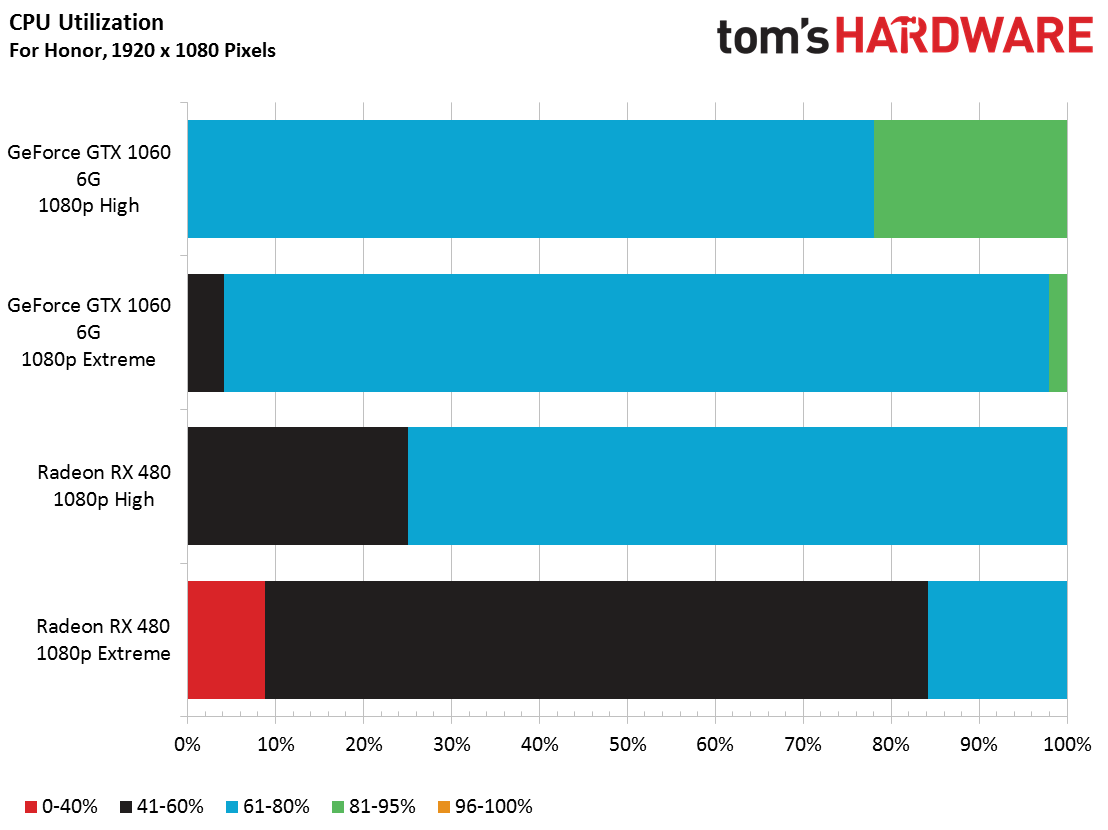

Processor Usage, Intel Core i5-6500

We noticed that For Honor shows higher CPU utilization on the GeForce card than on the Radeons, and the gap grows when shifting down from the Extreme quality preset to High.

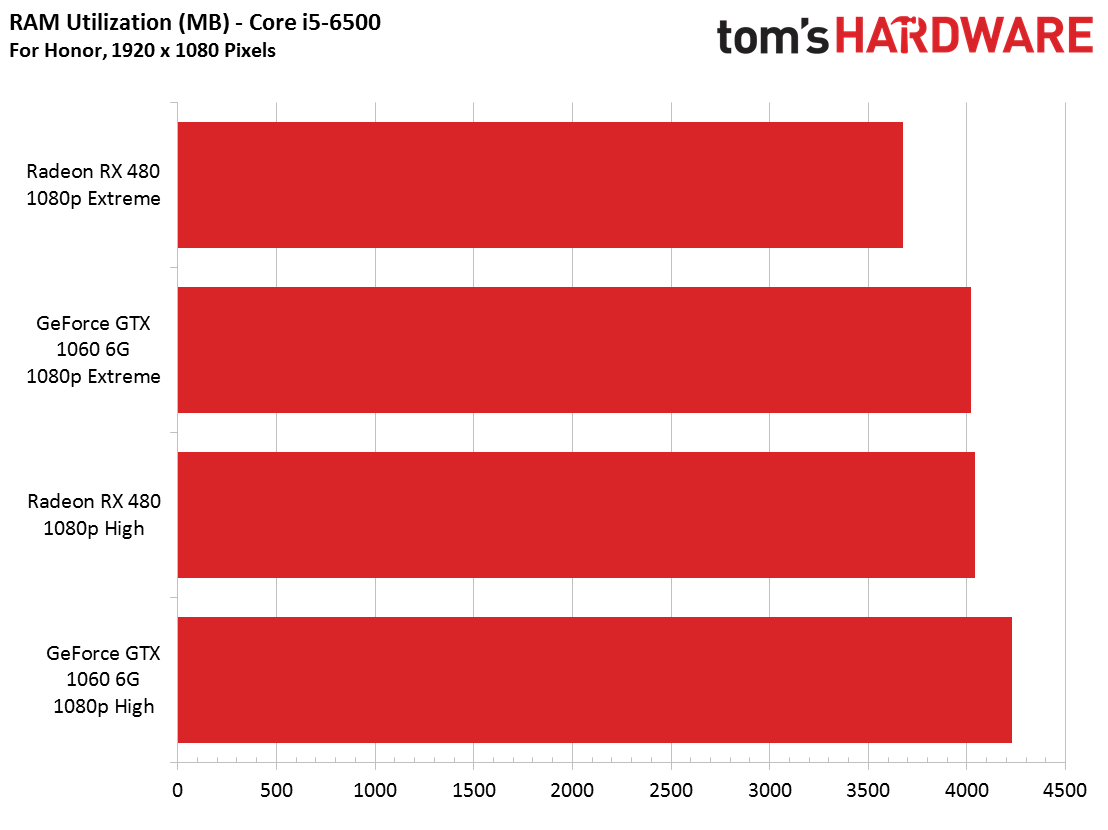

System Memory Usage

The game also seems to tie up more system memory on GeForce cards. Strangely, the High preset is more RAM-intensive than Extreme, and this applies to AMD and Nvidia cards alike.

Note that our tally counts total system memory, so you have to subtract what the OS and other services use as well. When we do that, For Honor appears quite thrifty with its RAM utilization.

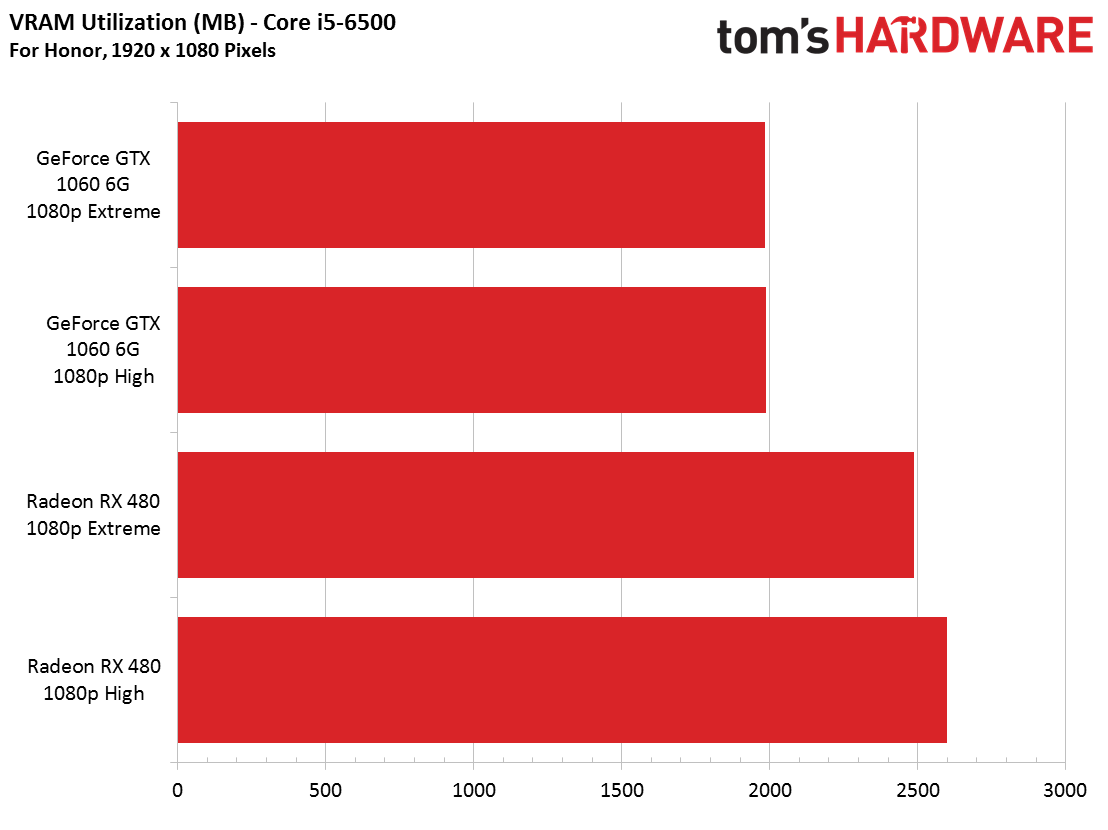

Video Memory Usage

At 1080p, For Honor doesn't come close to using all of the GDDR5 memory on these two cards. In fact, the AnvilNext 2.0 engine occupies only about one-third of it during our benchmark sequence.

When you select graphics options, the game estimates how much video memory it'll need, and those estimates were only slightly higher than what we measured.

Conclusion

Although it's visually pleasing and seems like it'd be pretty demanding, For Honor proves to be playable at 1920x1080 using the Extreme quality preset on mid-range graphics cards. To achieve truly smooth frame rates on lower-end hardware, though, you'll need to make some sacrifices on the detail settings front, particularly if you own a Radeon RX 470 or GeForce GTX 970.

The AnvilNext 2.0 engine does a great job, but we are even more excited about the next version with DirectX 12 support.

Sakkura: While it is nice to see how last-gen cards like the 970 and 390 compare to current-gen cards, I think it would have been nice to see cards outside this performance category tested. How do the RX 460 and GTX 1050 do at 1080p High, for example?

coolitic: I find it incredibly stupid that Tom's doesn't make the obvious point that TAA is the reason why it looks blurry for Nvidia.

alextheblue, quoting coolitic: "I find it incredibly stupid that Tom's doesn't make the obvious point that TAA is the reason why it looks blurry for Nvidia."

They had it enabled on both cards. Are you telling me Nvidia's TAA implementation is inferior? I'd believe you if you told me that, but you have to use your words.

irish_adam, quoting coolitic: "I find it incredibly stupid that Tom's doesn't make the obvious point that TAA is the reason why it looks blurry for Nvidia."

As has been stated, the settings were the same for both cards. Maybe Nvidia is sacrificing detail for the higher FPS score? I also found it interesting that Nvidia used more system resources than AMD. I was confused about why the 3GB version of the 1060 did so poorly, considering the low VRAM usage, but after looking it up, Nvidia gimped the card. It seems a bit misleading for both to be called the 1060; if you didn't look it up, you would assume they are the same graphics chip with just differing amounts of RAM.

anthony8989: ^ Yeah, it's a pretty shifty move, probably marketing related. The 1060 3GB and 6GB have a similar relationship to the GTX 660 and 660 Ti from a few generations back, only back then they were nice enough to differentiate the two with the Ti moniker. Not so much this time.

Martell1977, quoting irish_adam: "I was confused about why the 3GB version of the 1060 did so poorly, considering the low VRAM usage, but after looking it up, Nvidia gimped the card. It seems a bit misleading for both to be called the 1060; if you didn't look it up, you would assume they are the same graphics chip with just differing amounts of RAM."

I've been calling this a dirty trick since release, and it's part of the reason I recommend people get the RX 480 4GB instead. It's a shady move on Nvidia's part, but they are known for such things.

One odd thing, though: the 1060 3GB in laptops doesn't have a cut-down chip. It has all its cores enabled and just runs lower clocks and less VRAM.