Intel tempers expectations for next-gen Falcon Shores AI GPU — Gaudi 3 missed AI wave, Falcon will require fast iterations to be competitive

Jaguar Shores is the real thing?

Intel dumped its Xe-HPC GPU accelerators in favor of Gaudi but is still missing its sales goals with its Gaudi 3 AI accelerator. Thus, Intel is pinning its AI hopes on its next-gen Falcon Shores GPU platform. However, interim co-CEO Michelle Johnston Holthaus said this week that rather than Falcon Shores being a first-gen breakthrough, it would require fast iteration to become a competitive platform.

"We really need to think about how we go from Gaudi to our first-generation Falcon Shores, which is a GPU," Holthaus said at the Barclays 22nd Annual Global Technology Conference. "I will tell you right now, is it going to be wonderful? No, but it is a good first step in getting the platform done, learning from it, understanding how all that software is going to work, how the ecosystem is going to respond, so then we can very quickly iterate after that."

Intel admitted that its Gaudi 3 platform would miss its 2024 sales targets primarily due to imperfect software. At the Barclays Conference, the company shed additional light on the situation, saying that the Gaudi platform is complex to deploy, particularly in large clusters used for training. This is why it is primarily used for inference on the edge.

"Gaudi does not allow me to get to the masses; it is not a GPU that is easily deployed in systems around the globe," said the interim co-CEO. "When you think about those that deploy Gaudi, it is from the largest hyperscalers to smaller customers that are deploying at the edge."

Intel's Gaudi platform has its upsides, too: it has taught the company a great deal about platform and software design. While hardware lessons can carry over to next-generation AI platforms, it remains to be seen how the lessons learned from Gaudi will apply to next-gen platforms built on an entirely different architecture.

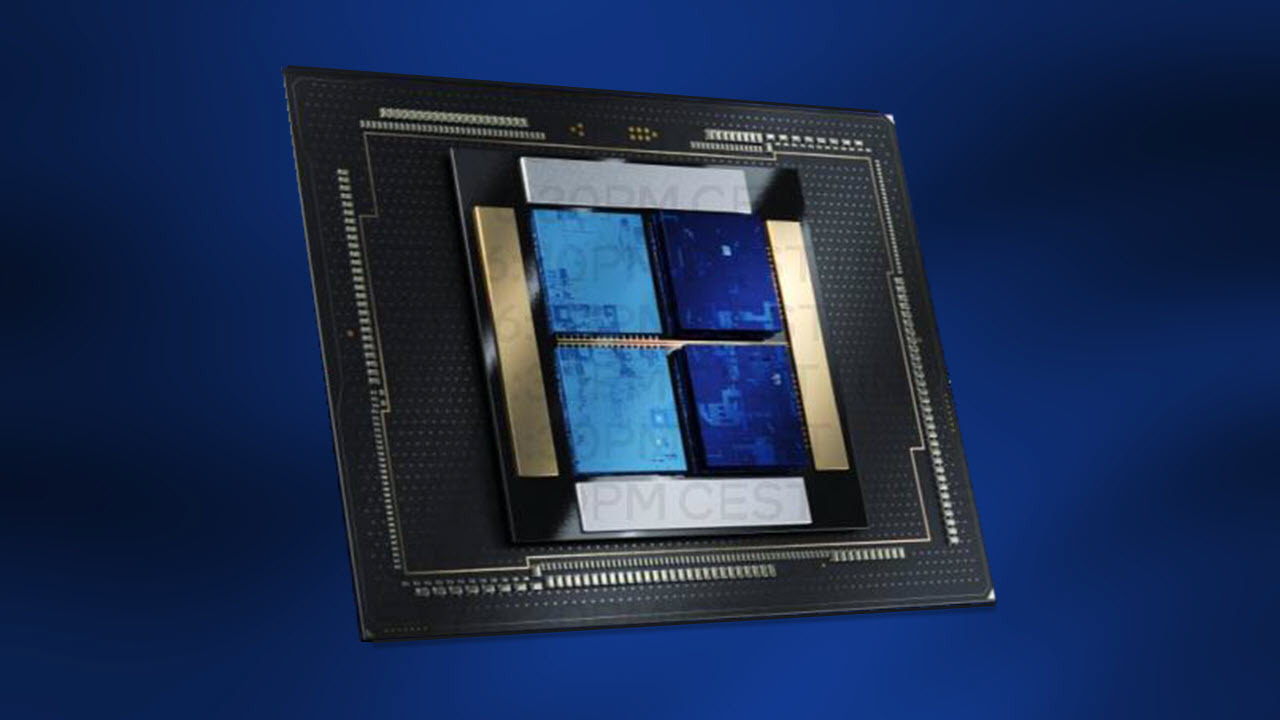

Intel's Falcon Shores is thought to be a multi-chiplet design with Xe-HPC (or at least Xe-HPC-like) and x86 chiplets with unified HBM memory. In 2022, the company said that integrating x86 CPUs and Xe-HPC GPUs into a single module with a unified memory architecture would enable it to achieve over 5x higher compute density, memory capacity, bandwidth, and performance per watt compared to its February 2022 platforms.

Keeping in mind that Falcon Shores will adopt both refined architectures and newer process technologies, it is reasonable to expect the chip to be dramatically faster than the company's 2022 products, which are Xeon Scalable 'Ice Lake' processors and the first-gen Gaudi accelerator.


Logically, Falcon Shores will be a learning vehicle for Intel and its ISV partners. The company's Data Center GPU Max 'Ponte Vecchio' has not gained significant traction in the AI realm, so independent software vendors will have to learn how to use Intel's Xe-HPC (or rather Xe-AI?) architecture on Falcon Shores. Since developing AI software takes quite some time, Intel's next-next-gen Jaguar Shores would likely be the first platform with a shot at mass adoption.

Anton Shilov is a contributing writer at Tom’s Hardware. Over the past couple of decades, he has covered everything from CPUs and GPUs to supercomputers and from modern process technologies and latest fab tools to high-tech industry trends.


bit_user

The article said: "Intel's Falcon Shores is thought to be a multi-chiplet design with Xe-HPC (or at least Xe-HPC-like) and x86 chiplets with unified HBM memory."

No, it won't. At least, not according to this:

"Intel originally planned for its Falcon Shores chips to have both GPU and CPU cores under the hood, creating the company's first 'XPU' for high performance computing. However, its surprise announcement a few months ago that it would pivot to a GPU-only design and delay the chips to 2025 left industry observers shocked"

https://www.tomshardware.com/news/intel-explains-falcon-shores-redefinition-shares-roadmap-and-first-details

If they retracted that statement, please cite where/when. Tagging @PaulAlcorn, since Anton is contradicting the article you wrote.

bit_user

FWIW, I think Intel should keep Falcon Shores as HPC-oriented and Gaudi as their AI platform. Just because Nvidia has been successful using GPUs for AI doesn't mean it's the best architecture for that problem. I predict Nvidia will fork off a line of AI accelerators that look less like GPUs than their current products do - and they might not even support CUDA!

I also think you don't need HBM to do AI right, especially if you're not constrained to a GPU-like architecture. What you need is lots of SRAM, so the thing to do is just stack your compute dies on SRAM. AI is a dataflow problem, whereas graphics isn't. That means GPUs have to be much more flexible with data movement & reuse, and that's why they need such crazy amounts of bandwidth to main memory. By contrast, AI has much better data locality, so you can do quite well with adequate amounts of SRAM and a mesh-like interconnect.

P.Amini

bit_user said: "I predict Nvidia will fork off a line of AI accelerators that look less like GPUs than their current products do - and they might not even support CUDA!"

CUDA is one of NVIDIA's big advantages and selling points right now; I don't think they'll drop it anytime soon (as long as NVIDIA is the main player).

bit_user

P.Amini said: "CUDA is one of the NVIDIA's big advantages and selling points right now, I don't think they drop it anytime soon (as long as NVIDIA is the main player)."

Not long ago, Jim Keller claimed CUDA was more of a swamp than a moat. I think his point is that the requirement for CUDA compatibility bogs down Nvidia's hardware innovation, because it forces them to build hardware that's more general than what you really need for AI. This comes at the expense of both efficiency and perf/$. So far, Nvidia has been able to avoid the consequences, mainly by virtue of having the fastest and best-supported hardware out there. However, as AI consumes ever more power and their biggest customers increasingly roll out infrastructure powered by their own accelerators, I think these costs are going to catch up with Nvidia.

If I'm right, then AMD and Intel will get burned by having tried to follow Nvidia's approach too closely. They also fell into a trap by relying on HBM, which is now one of the main production bottlenecks and also not cheap. Of course, you need HBM if you have a GPU-like architecture. The only way to avoid it is to build a dataflow architecture with enough SRAM, as Cerebras has successfully done.