AMD Wins El Capitan: EPYC Genoa and Radeon Instinct to Power Two-Exaflop DOE Supercomputer

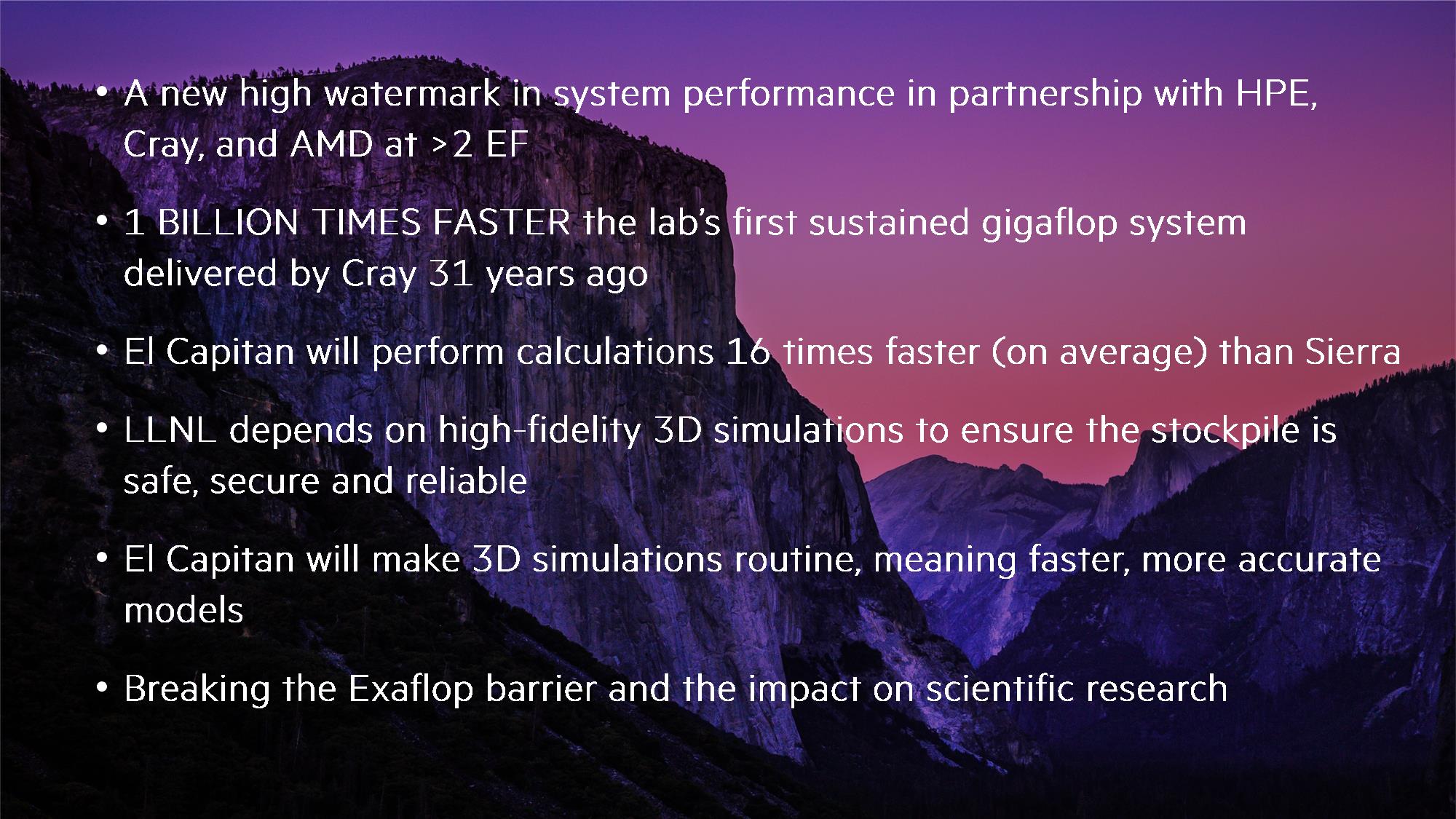

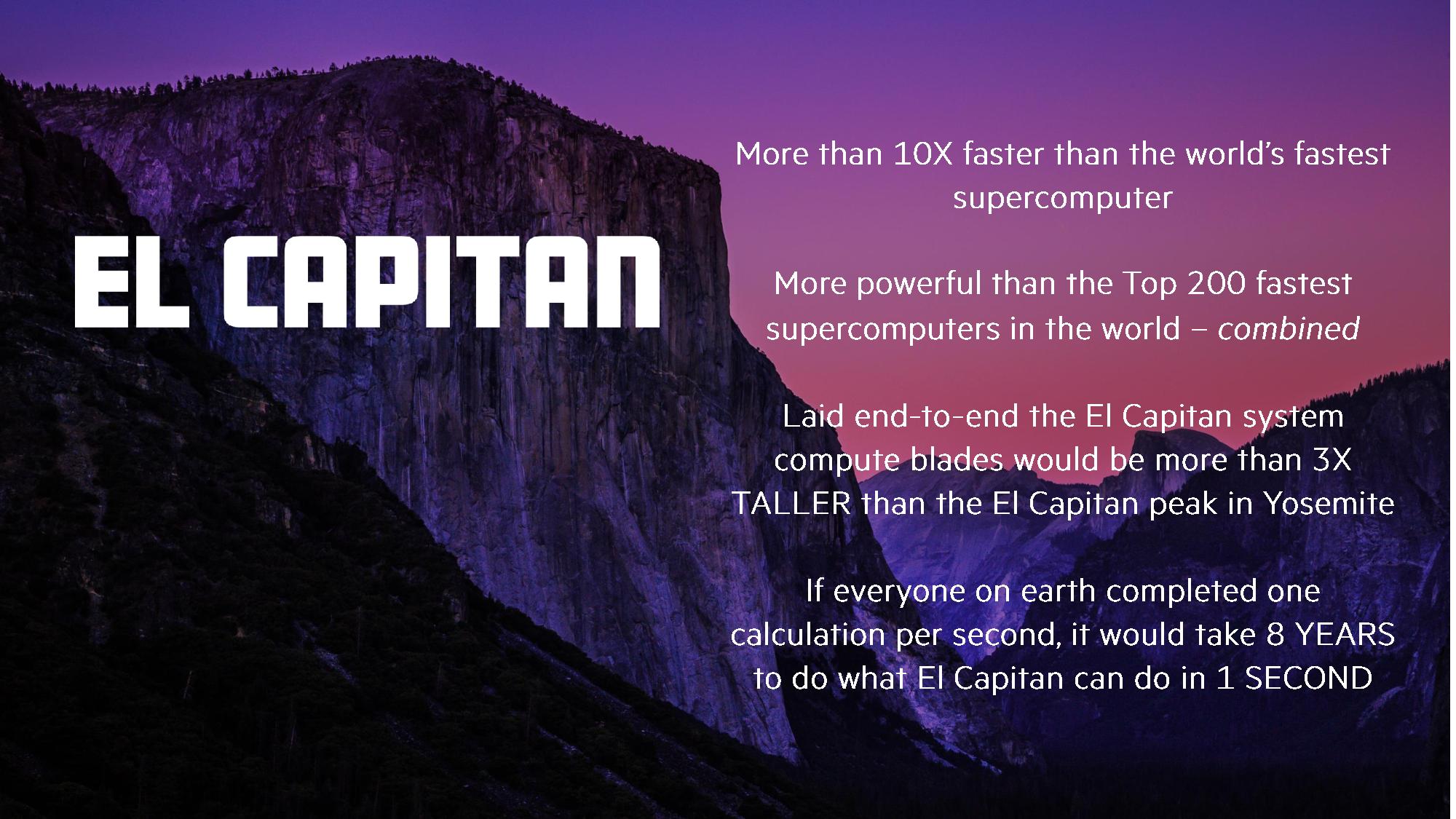

AMD scored another big win today with the announcement that the U.S. Department of Energy (DOE) has selected its next-next-gen EPYC Genoa processors with the Zen 4 architecture and Radeon Instinct GPUs to power the $600 million El Capitan, a two-exaflop system that will be faster than the top 200 supercomputers in service today, combined.

AMD beat out both Intel and Nvidia for the contract, making this AMD's second exascale win with the DOE, after Frontier. Meanwhile, Intel previously won the contract for the DOE's remaining exascale supercomputer, Aurora.

Many analysts had expected the DOE to award the El Capitan contract to Nvidia, so today's announcement marks another loss for Nvidia, which currently isn't participating in any known exascale-class supercomputer project. That's particularly interesting because Nvidia GPUs currently dominate the accelerated systems on the Top500 list and are the leading solution for GPU-accelerated compute in the data center.

The DOE originally announced El Capitan in August 2019, but at the time the agency hadn't come to a final decision on either the CPUs or GPUs that would power what will soon be the world's fastest supercomputer. That's because the agency engaged in a late-binding contract process to suss out which vendor could provide the best solution for a system that would be deployed in late 2022 and operational in 2023, meaning it evaluated future technology from multiple vendors.

As such, it's telling that the DOE selected AMD's next-gen platforms: it suggests the agency judged AMD's future products better suited to the project than Intel's or Nvidia's future offerings. It's also noteworthy that the system has a particular focus on AI and machine learning workloads.

The DOE also revised its performance projections. The agency originally projected 1.5 exaflops, but after evaluating the capabilities of the chosen AMD processors and GPUs, it raised that figure to two exaflops of double-precision performance. That's 16 times faster than the IBM/Nvidia Sierra system it will replace, currently the second-fastest supercomputer in the world, and ten times faster than Summit, the current leader.
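The 16x and 10x multipliers can be sanity-checked by dividing El Capitan's two exaflops by each one. The sketch below does that arithmetic; the Sierra Linpack (Rmax) figure of roughly 94.6 PF comes from the Top500 list, not from the DOE announcement. The implied baselines of 125 PF and 200 PF line up with the theoretical peak (Rpeak) figures for Sierra and Summit rather than their measured Linpack results, which suggests the DOE is comparing peak to peak.

```python
# Back out the baselines implied by the DOE's "16x" and "10x" comparisons.
EL_CAPITAN_PEAK_PF = 2_000.0  # two exaflops (FP64), expressed in petaflops


def implied_baseline_pf(target_pf: float, speedup: float) -> float:
    """Return the baseline performance (in PF) implied by a claimed speedup."""
    return target_pf / speedup


sierra_pf = implied_baseline_pf(EL_CAPITAN_PEAK_PF, 16)
summit_pf = implied_baseline_pf(EL_CAPITAN_PEAK_PF, 10)

# Sierra's measured Linpack result (Top500), used to show how the ratio
# changes if peak is compared against measured performance instead.
SIERRA_RMAX_PF = 94.6
ratio_vs_rmax = EL_CAPITAN_PEAK_PF / SIERRA_RMAX_PF

print(f"Implied Sierra baseline: {sierra_pf:.1f} PF")
print(f"Implied Summit baseline: {summit_pf:.1f} PF")
print(f"2 EF peak vs Sierra's measured Rmax: {ratio_vs_rmax:.1f}x")
```

Comparing El Capitan's projected peak against Sierra's measured Linpack score would instead yield a ratio of about 21x, so the headline multipliers are the more conservative reading.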

Now, on to the technical bits.


AMD Zen 4 EPYC Genoa and Radeon Instinct

AMD's EPYC Genoa processors form the x86 backbone of El Capitan, but the company hasn't shared any details on the new design, including the process node, number of cores, or clock speeds. We do know that Genoa uses the Zen 4 architecture, the successor to the Zen 3 design in the upcoming EPYC Milan processors. Genoa supports next-gen memory, implying DDR5 or some other standard beyond today's DDR4, and also features unspecified next-gen I/O connections.
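To put "next-gen memory" in perspective, peak DRAM bandwidth per channel is simply the transfer rate multiplied by the bytes moved per transfer. The sketch below compares DDR4-3200, the fastest grade today's EPYC Rome chips support, with DDR5-4800, which is purely an assumed speed grade here; AMD hasn't disclosed Genoa's memory type, speed, or channel count.

```python
def peak_bandwidth_gbs(mega_transfers_per_s: int, bus_bytes: int) -> float:
    """Peak bandwidth in GB/s = transfers per second x bytes per transfer."""
    return mega_transfers_per_s * 1e6 * bus_bytes / 1e9


# DDR4-3200 on a 64-bit (8-byte) channel.
ddr4 = peak_bandwidth_gbs(3200, 8)

# DDR5-4800 (assumed grade): a DIMM still moves 64 data bits per transfer,
# split across two independent 32-bit subchannels.
ddr5 = peak_bandwidth_gbs(4800, 8)

print(f"DDR4-3200: {ddr4:.1f} GB/s per channel")
print(f"DDR5-4800: {ddr5:.1f} GB/s per channel ({ddr5 / ddr4:.1f}x)")
```

These are theoretical per-channel peaks; sustained bandwidth and the per-socket total depend on details Genoa's specs haven't revealed yet.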

AMD and the DOE are also being coy with details about the GPU architecture employed, merely characterizing the cards as a "new compute architecture" in the Radeon Instinct lineup. The GPUs support "mixed precision operations" and are obviously optimized for deep learning workloads. We also know that the design will come with "next-gen" high bandwidth memory (HBM) and will support CPU-to-GPU Infinity Fabric connections, which we'll cover shortly.
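AMD hasn't said what "mixed precision operations" means for these GPUs. As a generic illustration of why the technique matters (not a description of AMD's hardware), the sketch below uses Python's `struct` module to round values to IEEE half precision (FP16) and sums 10,000 copies of 0.0001 two ways: keeping the running total in FP16, versus storing inputs in FP16 but accumulating in a wider format. Python's 64-bit float stands in here for the FP32 accumulators common in deep learning hardware.

```python
import struct


def to_fp16(x: float) -> float:
    """Round a Python float to the nearest IEEE 754 half-precision value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def sum_fp16_accumulator(values):
    """Inputs and running sum are both kept in FP16."""
    acc = 0.0
    for v in values:
        acc = to_fp16(acc + to_fp16(v))
    return acc


def sum_mixed_precision(values):
    """Inputs are rounded to FP16, but the sum is kept in a wider format."""
    acc = 0.0
    for v in values:
        acc += to_fp16(v)
    return acc


data = [1e-4] * 10_000  # exact sum is 1.0

print(f"FP16 accumulator : {sum_fp16_accumulator(data):.4f}")  # 0.2500
print(f"Mixed precision  : {sum_mixed_precision(data):.4f}")   # 1.0002
```

The FP16-only sum stalls at 0.25 because, past that point, 0.0001 is less than half the spacing between neighboring FP16 values, so every addition rounds back to the same number. Keeping low-precision storage with a wider accumulator preserves most of the speed and memory savings while avoiding that failure.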

The $600 million El Capitan supercomputer will consume "substantially lower than 40 megawatts (MW)" of power, with DOE representatives stating that it will be closer to the 30MW range than 40MW.
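Dividing the projected two exaflops by the stated power envelope gives a rough sense of the efficiency the DOE is targeting. The sketch below assumes peak FP64 throughput and the full 30MW or 40MW draw; it is back-of-the-envelope arithmetic, not a measured Green500-style result.

```python
EL_CAPITAN_PEAK_FLOPS = 2e18  # two exaflops, FP64 peak


def gflops_per_watt(flops: float, megawatts: float) -> float:
    """Peak efficiency in GFLOPS per watt for a given power draw."""
    return flops / (megawatts * 1e6) / 1e9


for mw in (30, 40):
    print(f"{mw} MW -> {gflops_per_watt(EL_CAPITAN_PEAK_FLOPS, mw):.1f} GFLOPS/W")
```

At roughly 30MW that works out to about 66.7 peak GFLOPS per watt, versus 50 at the 40MW ceiling.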

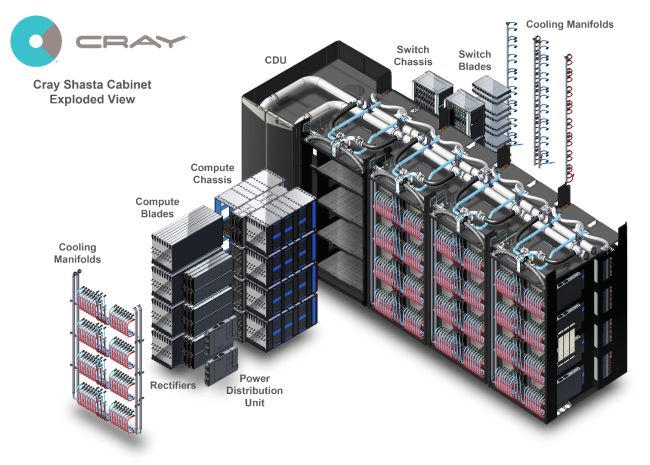

The system relies on the now widely adopted Cray Shasta supercomputing platform, leaning on its watercooling subsystems to dissipate the incredible thermal load of ~30MW of compute power. The DOE hasn't revealed how many cabinets El Capitan will use, or the number of CPUs/GPUs, instead saying that if the blades were stacked end to end, they would reach three times the height of the 3,600-foot El Capitan peak in Yosemite. The Shasta architecture also includes the Slingshot networking solution that El Capitan will use.

Currently, Cray's proprietary Slingshot fabric connects the nodes to integrated top-of-rack switches built around a Cray-designed ASIC that delivers 200 Gb/s per switch port, but El Capitan will likely use a future variant with beefed-up networking capabilities. The Slingshot fabric uses an enhanced low-latency protocol with intelligent routing mechanisms to alleviate congestion. The interconnect supports optical links, but it is primarily designed for low-cost copper wiring.
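Cray hasn't detailed how Slingshot's routing decides where to send traffic, so the sketch below only shows the general idea behind congestion-aware adaptive routing: a switch estimates the delay through each candidate output port (time to drain its queue at line rate, plus a per-hop latency) and picks the cheapest. `Port`, `pick_port`, and the 0.3-microsecond per-hop cost are hypothetical placeholders; only the 200 Gb/s port rate comes from the article.

```python
from dataclasses import dataclass
from typing import List

LINK_GBPS = 200  # per-port rate quoted for current Slingshot switches


@dataclass
class Port:
    name: str
    queued_bytes: int  # bytes already waiting in this port's output queue
    hops_to_dest: int  # path length if the packet leaves through this port


def queue_delay_us(port: Port) -> float:
    """Microseconds to drain the queue ahead of a new packet at line rate."""
    bits_per_us = LINK_GBPS * 1e3  # 200 Gb/s = 200,000 bits per microsecond
    return port.queued_bytes * 8 / bits_per_us


def pick_port(candidates: List[Port], per_hop_us: float = 0.3) -> Port:
    """Pick the port with the lowest estimated delay (queueing + hop latency).

    per_hop_us is a made-up placeholder, not a published Slingshot figure.
    """
    return min(candidates, key=lambda p: queue_delay_us(p) + p.hops_to_dest * per_hop_us)


idle = [Port("minimal", 0, 2), Port("non-minimal", 0, 3)]
busy = [Port("minimal", 500_000, 2), Port("non-minimal", 10_000, 3)]
print(pick_port(idle).name)  # minimal: shortest path wins when queues are empty
print(pick_port(busy).name)  # non-minimal: a longer detour beats a ~20 us queue
```

The point of weighing queue depth against path length is that a slightly longer route through an idle link can deliver a packet sooner than the shortest route through a congested one.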

The system will also use a storage solution based on the ClusterStor E1000, which combines disk and flash: hard drives provide economical capacity, while tiering delivers the performance of flash. The storage system will also communicate over the Slingshot network.
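The article doesn't describe the ClusterStor E1000's actual tiering policy. As a generic sketch of the concept, the hypothetical `TieredStore` below keeps every object on a large disk tier and caches recently read objects on a small flash tier, evicting the least recently used object when flash fills up.

```python
from collections import OrderedDict


class TieredStore:
    """Toy two-tier store: disk holds everything; a small flash tier caches
    the most recently read objects with LRU eviction (hypothetical policy)."""

    def __init__(self, flash_capacity: int):
        self.flash_capacity = flash_capacity
        self.disk = {}              # capacity tier: the system of record
        self.flash = OrderedDict()  # performance tier, ordered oldest -> newest
        self.flash_hits = 0
        self.disk_reads = 0

    def write(self, key: str, data: bytes) -> None:
        self.disk[key] = data
        if key in self.flash:       # keep any cached copy consistent
            self.flash[key] = data
            self.flash.move_to_end(key)

    def read(self, key: str) -> bytes:
        if key in self.flash:
            self.flash_hits += 1
            self.flash.move_to_end(key)      # mark as most recently used
            return self.flash[key]
        self.disk_reads += 1
        data = self.disk[key]
        self.flash[key] = data               # promote hot data to flash
        if len(self.flash) > self.flash_capacity:
            self.flash.popitem(last=False)   # evict least recently used
        return data


store = TieredStore(flash_capacity=2)
for k in "abc":
    store.write(k, k.encode())
for k in ["a", "b", "a", "a", "c", "b"]:
    store.read(k)
print(store.flash_hits, store.disk_reads, list(store.flash))  # 2 4 ['c', 'b']
```

Real tiering systems typically weigh access frequency, file size, and write patterns rather than pure recency, but the economics are the same: most capacity stays on cheap disk while the hot working set is served from flash.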

AMD's Next-Gen CPU-to-GPU Infinity Fabric 3.0 and ROCm

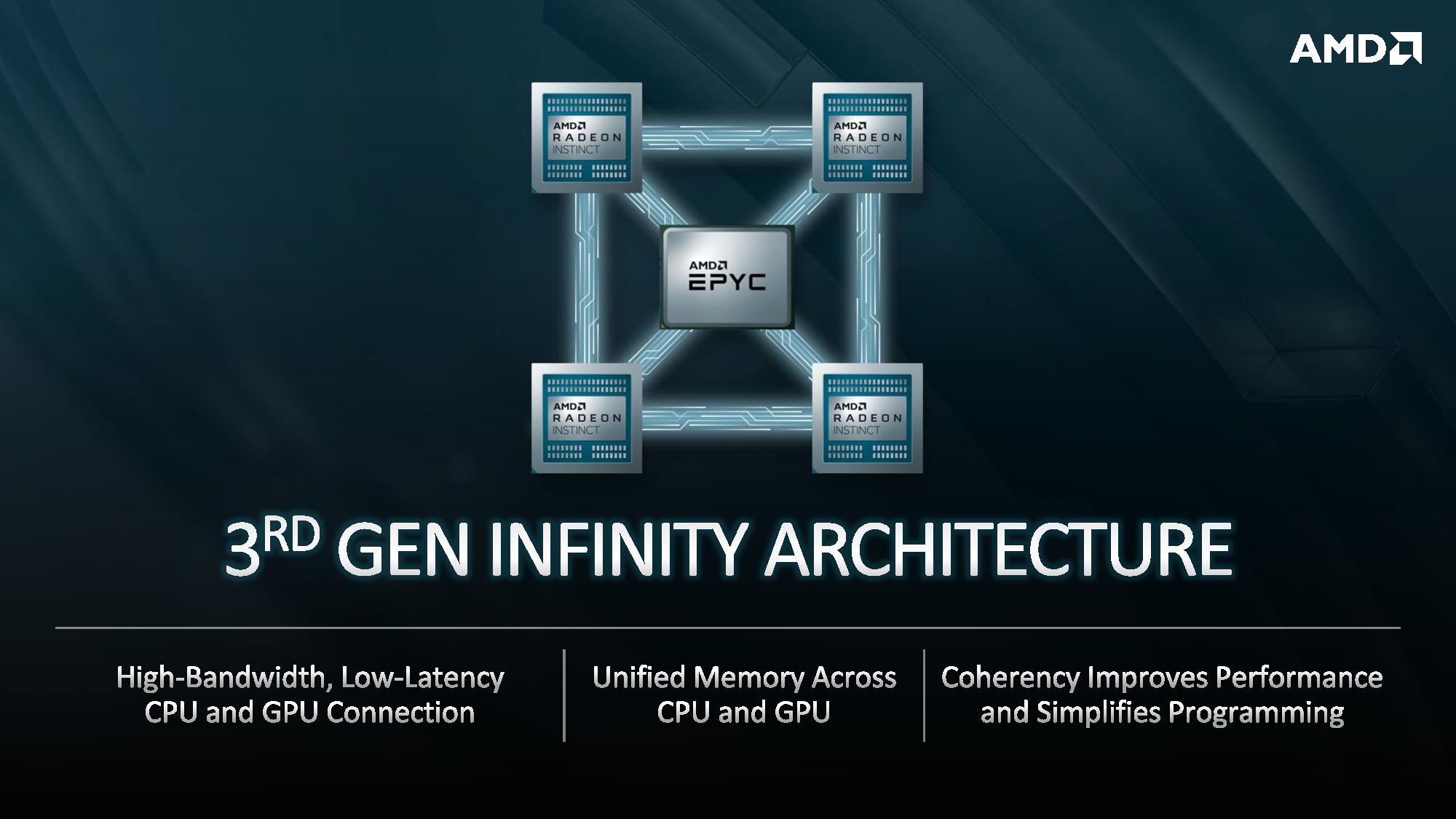

While the Slingshot fabric propels bits between nodes, intra-node data movement between the processor and GPU is critical to making the most of local compute. To that end, AMD announced that El Capitan will employ its third-gen Infinity Fabric, which supports unified memory access across the CPU and GPU. AMD has previously disclosed that its CPU-to-GPU Infinity Fabric supports a 4:1 ratio of GPUs to CPUs.

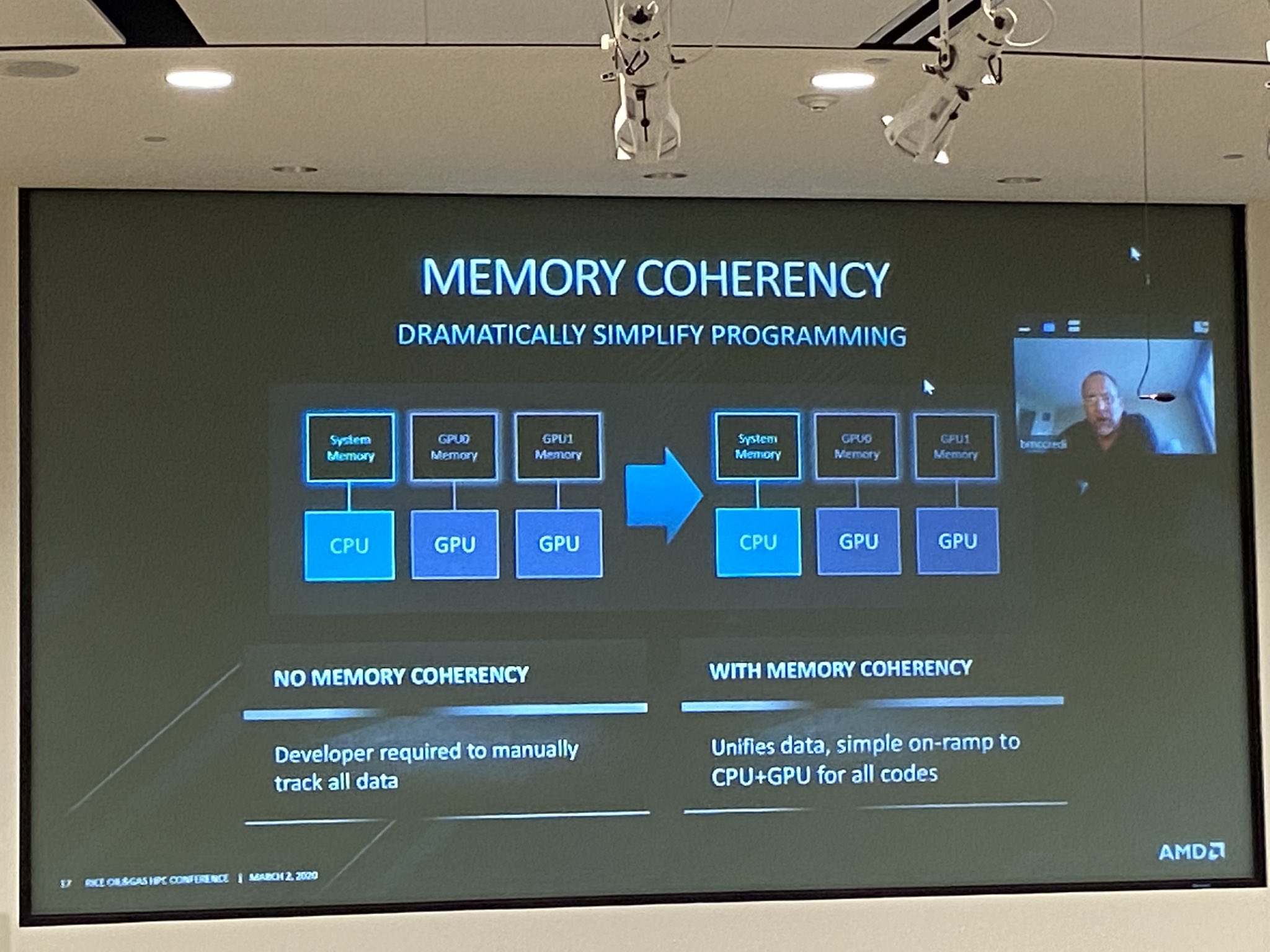

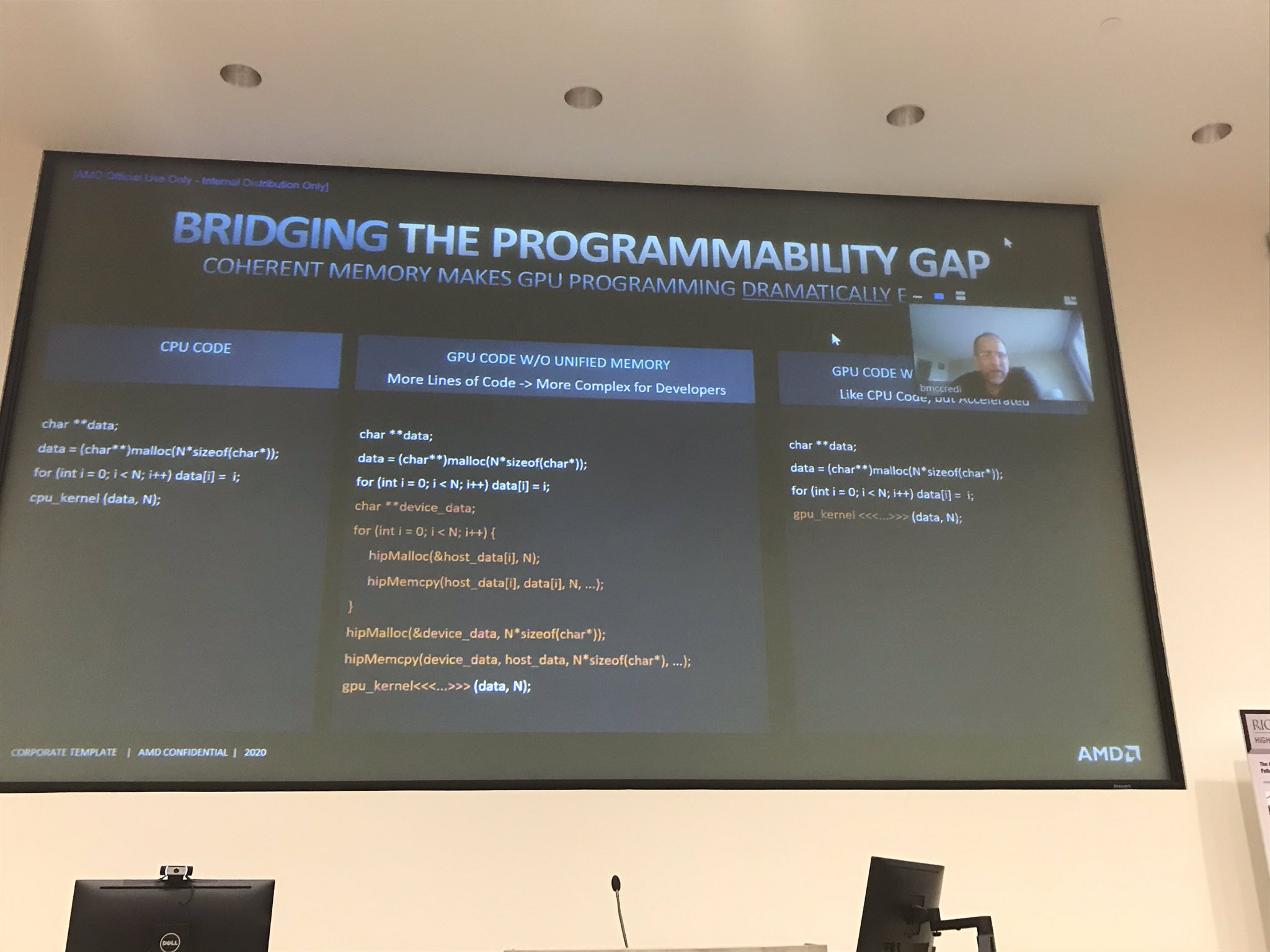

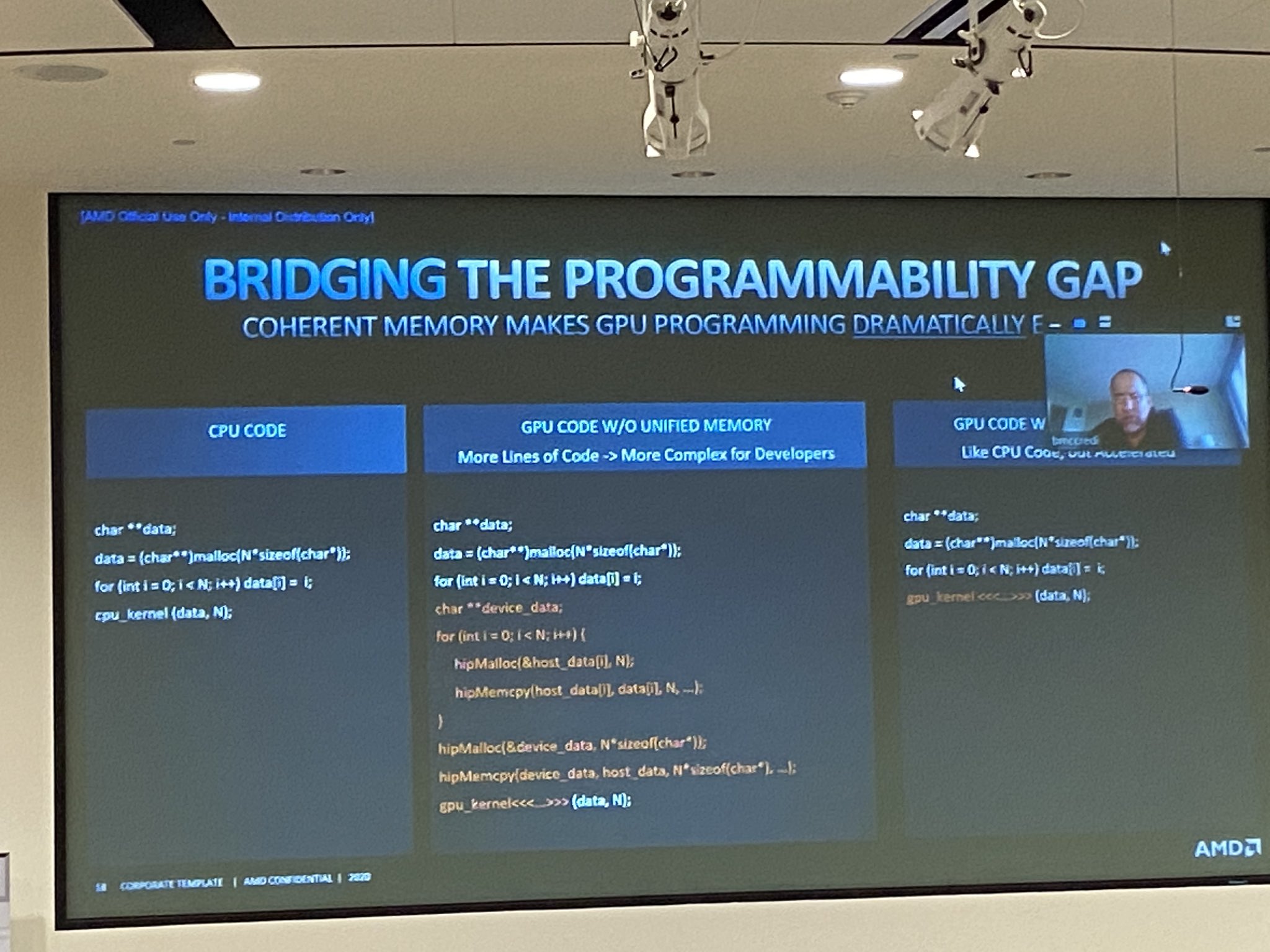

Infinity Fabric 3.0's memory coherence drastically simplifies programming and provides a high-bandwidth, low-latency connection between the two types of compute. We covered AMD's new CPU-to-GPU Infinity Fabric in depth yesterday, but the key takeaway is that cache-coherent virtual memory reduces data movement between the CPU and GPU, which often consumes more power than the computation itself, thus reducing latency and improving performance and power efficiency.
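A toy cost model helps show why coherence can cut data movement. The sketch below contrasts an explicit copy-in/copy-out model, where the whole buffer crosses the CPU-GPU link twice, with on-demand coherent access, where only the cache lines a GPU kernel actually touches cross the link and only dirty lines are written back. The 64-byte granularity, write-allocate behavior, and access pattern are all assumptions; real implementations may migrate whole pages or prefetch, and this is not a model of Infinity Fabric's actual protocol.

```python
from typing import Iterable

CACHE_LINE = 64  # bytes; assumed coherence granularity for this toy model


def explicit_copy_bytes(buffer_bytes: int) -> int:
    """Copy-in/copy-out: the whole buffer goes to the GPU and comes back."""
    return 2 * buffer_bytes


def coherent_bytes(read_offsets: Iterable[int], write_offsets: Iterable[int]) -> int:
    """On-demand coherent access: only touched lines cross the link, and only
    dirty lines are written back to CPU memory."""
    read_lines = {off // CACHE_LINE for off in read_offsets}
    dirty_lines = {off // CACHE_LINE for off in write_offsets}
    fetched = read_lines | dirty_lines  # written lines are fetched first (write-allocate)
    return (len(fetched) + len(dirty_lines)) * CACHE_LINE


GIB = 2**30
MIB = 2**20
reads = [i * MIB for i in range(1000)]  # sparse lookups, one element per MiB
writes = [i * MIB for i in range(10)]   # a handful of updates

print(explicit_copy_bytes(GIB))       # 2147483648
print(coherent_bytes(reads, writes))  # 64640
```

The gap is extreme here because the access pattern is deliberately sparse; for kernels that stream through an entire buffer, both models move comparable amounts of data.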

All of the bleeding-edge tech in the world is useless if it can't be tied together with easy-to-use toolchains for developers. AMD leverages its open-source ROCm heterogeneous programming environment to maximize the performance of the CPUs and GPUs in OpenMP environments. The DOE recently invested $100 million in a Center of Excellence at Lawrence Livermore National Laboratory, a DOE lab, to help develop ROCm. That's a big shot in the arm for ROCm, not to mention AMD, and the investment should help further the ecosystem for all AMD customers. El Capitan also uses the open-source Spack package manager.

Overall, the DOE plans for El Capitan to operate more like a typical cloud environment with support for microservices, Kubernetes, and containers.

What it Means

AMD now has two of the three exascale supercomputer contracts under its belt, which speaks to the potency of its next-gen EPYC platforms for HPC and supercomputing applications. As we've covered, the EPYC platform is enjoying rapid uptake in these markets, and the DOE's buy-in underlines that AMD's next-next-gen processors and graphics cards are well suited for the task, beating out competing entrants from Nvidia and Intel.

AMD's Infinity Fabric 3.0 plays a key role in this win and likely helps explain Nvidia's continuing lack of exascale computing wins. Intel is also working on its Ponte Vecchio architecture, which will power the Aurora supercomputer. Intel's approach leans heavily on its oneAPI programming model and also ties together shared pools of memory between the CPU and GPU (lovingly named Rambo Cache).

Meanwhile, Nvidia might suffer in the supercomputer realm because it doesn't produce both CPUs and GPUs and, therefore, cannot enable similar functionality. Is this type of architecture, and the underlying unified programming models, required to hit exascale-class performance within acceptable power envelopes? That's an open question. Nvidia is part of the CXL consortium, which should offer coherency features, but both AMD and Intel have won exceedingly important contracts for the U.S. DOE's exascale-class supercomputers (and the broader server ecosystem often adopts the winning HPC techniques). Nvidia and its soon-to-be-acquired Mellanox, by contrast, haven't announced any such wins despite their dominant position in GPU-accelerated compute for HPC and the data center.

Paul Alcorn is the Editor-in-Chief for Tom's Hardware US. He also writes news and reviews on CPUs, storage, and enterprise hardware.

-

velocityg4: I can't get past the name. Each time I read it, I just think this will be the most EPYC hackintosh ever created.

BT: Nvidia DOES NOT win DOD/DOE contracts because they make their products in Taiwan fabs and not in the US. IBM, Intel and AMD make their stuff in US fabs.

Deicidium369: Uh yeah, no. AMD makes its processors at the same place Nvidia makes their GPUs - Taiwan - that's the T in TSMC. The older Ryzen chips are made in GloFo's US facility, but the "7nm" parts are made in Taiwan. Taiwan may not consider itself a part of mainland China, but mainland China disagrees.

Paul Alcorn: Intel is also reliant upon test and package facilities in China, among other pursuits. They have opened new facilities in Vietnam and Costa Rica to sidestep some of that, probably due to the trade war. However, most vendors are exposed to Chinese supply chains in some form, AMD included. It doesn't matter where you make the chips if some of the key ingredients are sourced from China.

Note that Nvidia has won plenty of DOE contracts in the past.

mihen: I think Instinct MI60 versus GV100 shows two similarly specced cards, but the AMD product meshes better with the other hardware, it's newer, and it was probably significantly cheaper.

Deicidium369: Most of the activities in China are for foreign markets.

"Nvidia DOES NOT Win DOD/DOE contracts because they make their products in Taiwan Fabs and not in the US. IBM, Intel, and AMD make their stuff in US Fabs. "

is the comment I was responding to - the argument that Nvidia doesn't win because they fab in Taiwan is false - was just pointing out that AMD also makes their CPUs and GPUs at the same company in Taiwan.

Of course, any large multinational like Intel has processes spread across the globe - I do, however, think that most of the work done on products does not come to the US market. Not sure what you mean by key ingredients - not the sand, not the wafers, not the fabrication, and maybe only raw materials to make the packaging are from China - the fabrication is done by machines from the EU and US. But, again, it's hard for any industry to not have some linkage to Chinese suppliers.

Supercomputing would not be where it is today without Nvidia GPUs - prior to that, it was massive amounts of CPUs. Intel's Larrabee-derived products came at exactly the wrong time - just before Nvidia GPUs started taking all of the oxygen out of the market - and that is also the reason for Xe HPC: no need to cede a significant portion of the BoM to another company.

This whole manufactured trade war is total <Mod Edit>, amazing we have a president who thinks that raising tariffs on imports means there is money flowing into the treasury. Giving China MFN status was a MASSIVE mistake - but not understanding how tariffs work is the biggest blunder.

Deicidium369: mihen said: "I think Instinct MI60 versus GV100 shows two similarly specced cards, but the AMD product meshes better with the other hardware, it's newer, and it was probably significantly cheaper."

Meshes better? In what way? Newer is meaningless, cheaper is meaningless - what matters is ecosystem. Nvidia's CUDA ecosystem is robust and comprehensive, and AMD's is not even close. When Xe HP/HPC drops, one of the biggest challenges will be for Intel to build out tools to make migration from CUDA streamlined and compelling. Intel has the market muscle (installed base and $) to challenge Nvidia - at this point they are the only company that stands a chance to take on Nvidia.

mihen: I am talking about the communication between the Epyc processors and the GPUs. For someone like the DOE, an existing ecosystem is not that important, since everything will be made specifically for the DOE.

ta152h: This is just a bad article.

Nowhere is Hewlett Packard Enterprise mentioned, which, by the way, owns Cray. Odd. I guess the author didn't know.

Also, NVIDIA does have a very tightly knit GPU/CPU architecture, and had it long before Intel or AMD. Look up OpenPOWER to learn more. IBM/NVIDIA broke that ground years ago, first with OpenCAPI, then with NVLINK. Why HPE was chosen, I'm not sure, but it was not because NVIDIA lacked an intimate relationship with CPUs.