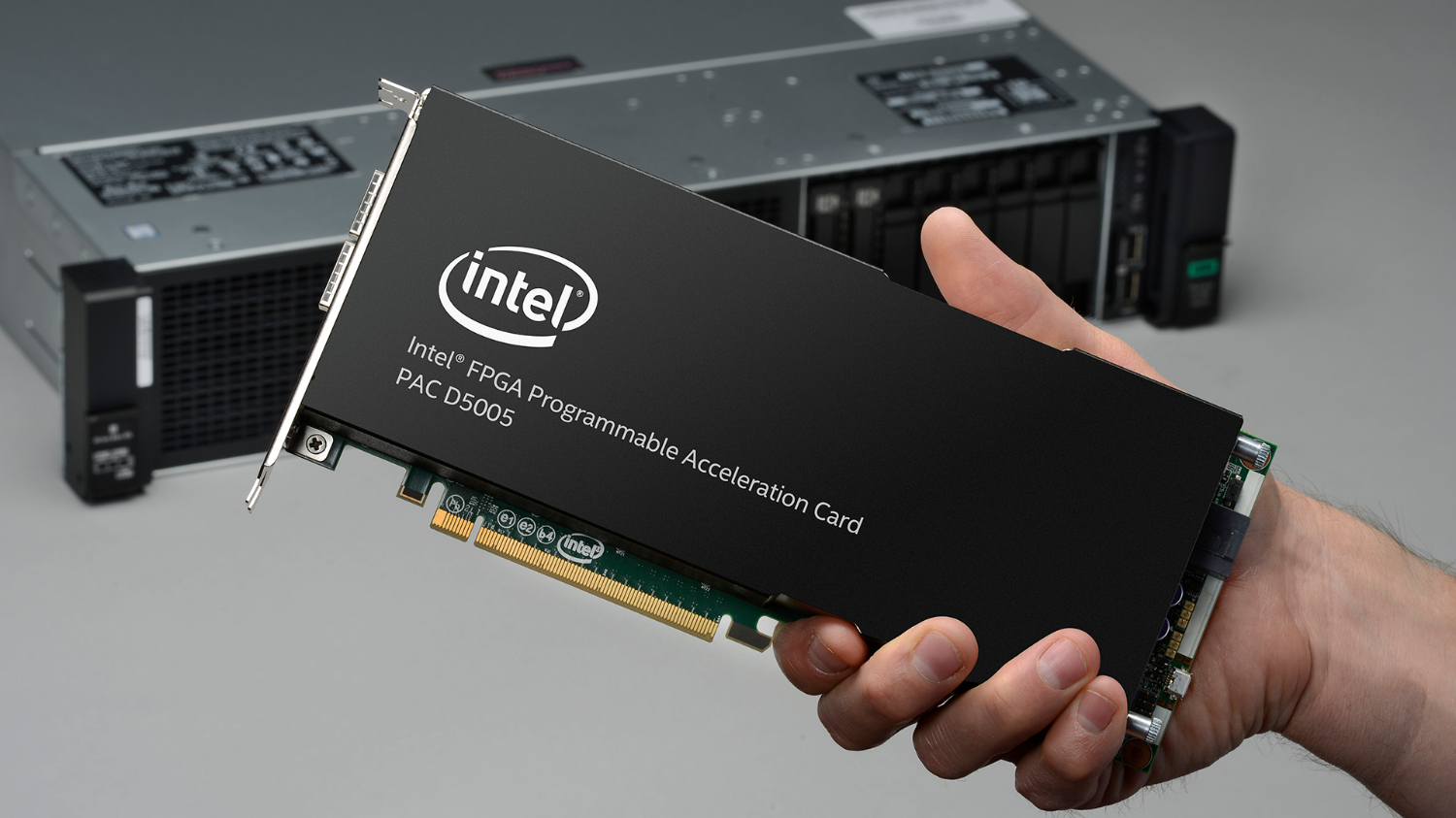

Intel Announces New Programmable Accelerator Card

Intel today announced the D5005 Programmable Accelerator Card based on the Stratix 10 FPGA. It is shipping now in the HPE ProLiant DL380 Gen10 server.

Intel announced the Programmable Acceleration Card (PAC) in 2017 as a comprehensive platform for using FPGAs in the data center; the first card was based on a 20nm Arria 10 GX. In 2018, the company announced that it would ship a PAC with its newer 14nm FPGAs, and today marks that card's launch, with Hewlett Packard Enterprise (HPE) as the launch partner.

The new Intel FPGA PAC D5005 accelerator is the second card in the PAC portfolio and contains a Stratix 10 SX FPGA. The PAC also comes with Intel’s Acceleration Stack, which provides drivers, application programming interfaces (APIs), and an FPGA interface manager. The card carries 32GB of DDR4 with ECC in four banks and two QSFP interfaces of 100Gbps each, and it connects to the host via PCIe 3.0 x16. It has a 215W TDP.

Compared to the Arria 10 PAC, this means three times more programmable logic, four times more DDR4 memory and twice the Ethernet ports with speeds up from 40GbE to 100GbE.

HPE is the first OEM to announce pre-qualification of the D5005 into its servers, specifically the HPE ProLiant DL380 Gen10 server. Targeted workloads include streaming analytics, video transcoding, financial technology, genomics, and artificial intelligence.


bit_user I used to want an FPGA card, but GPUs are good enough for anything I'd currently want to do.

Plus, the specs tell me this thing must be at least $10k.

NightHawkRMX https://www.youtube.com/watch?v=um-1fAVU1OQ

From virtually 10 years ago

bit_user

remixislandmusic said: From virtually 10 years ago

What does that have to do with anything?

If you watch the video, it's their Larrabee graphics card (or maybe the generation after), which they cancelled before it ever saw the light of day. It was rumored to have graphics performance that wasn't competitive with existing GPUs of the day, and I suspect it also might've cost too much.

https://en.wikipedia.org/wiki/Larrabee_(microarchitecture)

After that, they continued the program without any graphics hardware or connectors, and rebranded it as Xeon Phi.

https://en.wikipedia.org/wiki/Xeon_Phi

Last year, they abruptly cancelled Xeon Phi. Xeon Phi was a jack of all trades, but a master of none. After Intel announced their Xe dGPUs, bought Altera and Nervana, and with their mainstream Xeons having up to 28 cores, there was pretty much no niche where Xeon Phi wouldn't be surpassed by other Intel products.

https://en.wikipedia.org/wiki/Xilinx

https://en.wikipedia.org/wiki/Nervana_Systems

Larrabee is dead. Its closest descendant is the Xeon Scalable line (originating with Skylake-SP), as it was the first to implement a mesh communications topology and AVX-512, both of which they inherited.

Of any of their existing/former products, the Xe dGPUs will likely most resemble their iGPUs. They killed Larrabee because it wasn't competitive with Nvidia. They're not going to bring it back in the form of Xe or anything else.

https://en.wikipedia.org/wiki/Skylake_(microarchitecture)#Skylake-SP

As the article says, this new compute card is FPGA-based. FPGAs are not new (as you said), and they're unlike any of the above.

https://en.wikipedia.org/wiki/Hardware_acceleration

bit_user BTW, Linus is a really good salesman. After watching that video, even I almost wanted one. Except, he doesn't realize that GPUs have long been as programmable as he's saying Larrabee was.

For instance, it's not that existing AMD cards can't do ray tracing, just that it would be too slow to be worthwhile. A lot of graphics is like that - once somebody puts some fixed-function logic to enable a particular feature, it's not practical to use software emulation, since any games written to use the feature are expecting the performance of the fixed-function implementation.

NightHawkRMX

bit_user said: it would be too slow to be worthwhile

It's too slow to be worthwhile on RTX cards too lol.

I mean Linus did work for NCIX so...

bit_user

remixislandmusic said: It's too slow to be worthwhile on RTX cards too lol.

But I trust you get my point, which is that if a game is (barely) playable with RTX, then imagine the same game at 1/3rd or 1/10th the speed. Definitely unplayable.

So, it's not enough just to be able to implement new features - they need to run at approximately the expected speed, or you still basically can't use them.

NightHawkRMX I'm aware. I have seen people render raytraced images on a graphing calculator before. It took 2 days to complete 1 frame, but it's possible.

bit_user

remixislandmusic said: I'm aware.

Sorry if I put too fine a point on that. It's really Linus' effusive praise of that Intel GPU's programmability that I'm reacting to.

I realize you were just having a bit of fun with my example.