Nvidia Makes Breakthrough In Reducing AI Training Time

Nvidia, one of the technology companies heavily invested in artificial intelligence, has revealed a breakthrough in reducing the time it takes to train AI.

In a blog post, the company discussed the rise of unsupervised learning and generative modeling thanks to the use of generative adversarial networks (GANs).

Thus far, the majority of deep learning has relied on supervised learning, which gives machines a "human-like object recognition capability." As an example, Nvidia notes that "supervised learning can do a good job telling the difference between a Corgi and a German Shepherd, and labeled images of both breeds are readily available for training."

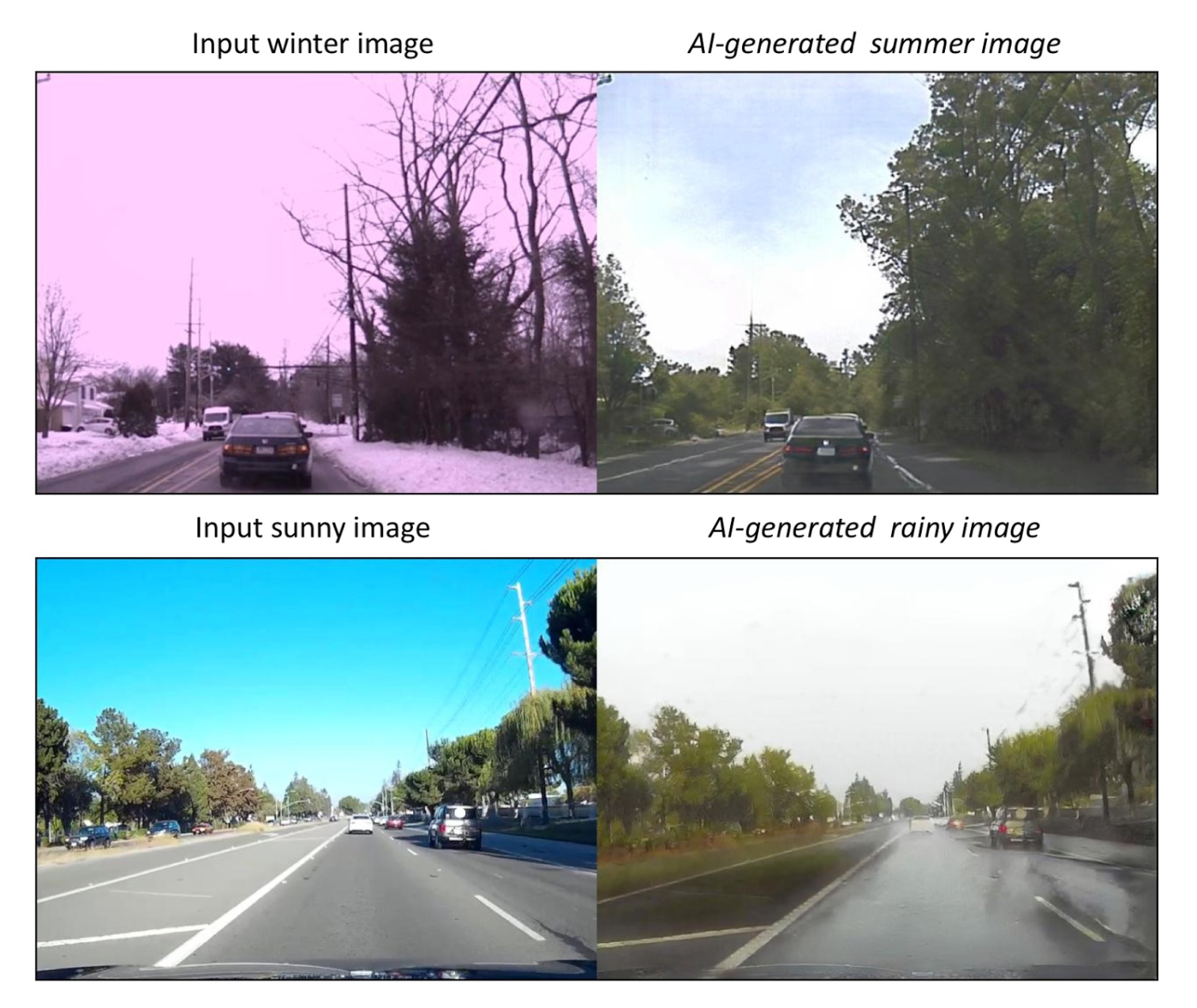

However, to give machines a more “imaginative” capability, such as picturing how a wintry scene would look in summer, a team of research scientists led by Ming-Yu Liu turned to unsupervised learning and generative modeling. Nvidia gave an example in which the winter and sunny scenes on the left are the inputs, and the AI's imagined summer and rainy counterparts are displayed on the right.

The aforementioned work was made possible by using a pair of GANs with a "shared latent space assumption".
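To make the "shared latent space" idea concrete, here is a toy sketch. It is not Nvidia's implementation: the four functions are hypothetical, hand-picked affine maps standing in for the learned encoder and generator networks, and there is no adversarial training at all. It only shows the assumption itself, that a winter scene and its summer counterpart map to the same latent code, so translation amounts to encoding in one domain and generating in the other.

```python
# Toy illustration of a shared latent space between two image "domains".
# Assumption: each domain is a fixed affine view of the same latent code z.
# Real systems learn these maps with neural networks and adversarial losses.

def encode_winter(x):
    """Hypothetical encoder: winter-domain values -> shared latent code."""
    return [(v - 1.0) / 2.0 for v in x]

def generate_winter(z):
    """Hypothetical generator: shared latent code -> winter-domain values."""
    return [2.0 * v + 1.0 for v in z]

def encode_summer(x):
    """Hypothetical encoder: summer-domain values -> shared latent code."""
    return [(v + 3.0) / 4.0 for v in x]

def generate_summer(z):
    """Hypothetical generator: shared latent code -> summer-domain values."""
    return [4.0 * v - 3.0 for v in z]

def winter_to_summer(x):
    # Translate by passing through the shared latent code.
    return generate_summer(encode_winter(x))

def summer_to_winter(x):
    return generate_winter(encode_summer(x))

winter_scene = [1.0, 3.0, 5.0]           # stand-in for pixel values
summer_scene = winter_to_summer(winter_scene)
round_trip = summer_to_winter(summer_scene)

print(summer_scene)   # the "imagined" summer version
print(round_trip)     # translating back recovers the winter input
```

Because both domains decode from the same code, no paired winter/summer examples are needed to define the translation; in the toy above the pairing is implied entirely by the shared code.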

“The use of GANs isn’t novel in unsupervised learning, but the NVIDIA research produced results — with shadows peeking through thick foliage under partly cloudy skies — far ahead of anything seen before,” the company explained.

As well as requiring less labeled data, and less of the time and effort needed to create and process it, the technique can be implemented by deep learning experts across several areas.


“For self-driving cars alone, training data could be captured once and then simulated across a variety of virtual conditions: sunny, cloudy, snowy, rainy, nighttime, etc,” Nvidia said.

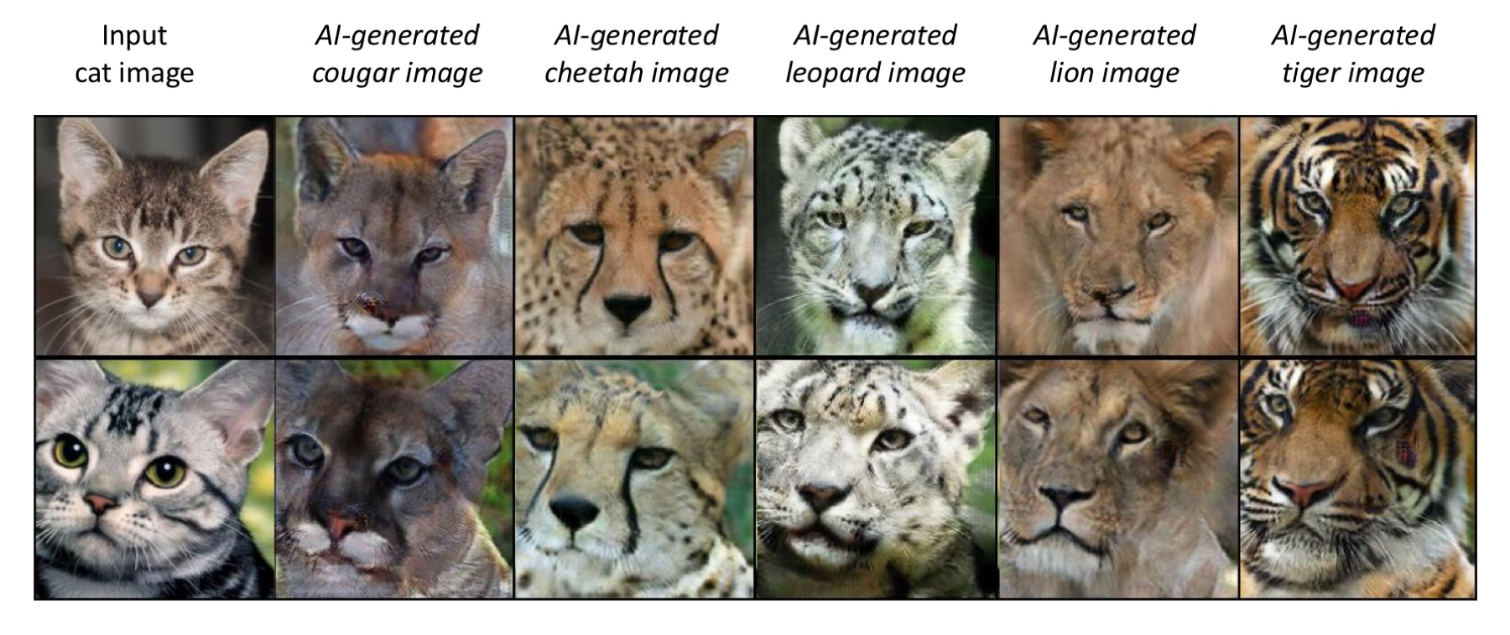

Another illustration of the use of unsupervised image-to-image translation networks was showcased through a picture showing how a cat could be used to produce images of leopards, cheetahs, and lions.

Elsewhere, the company pointed to a paper titled “Learning Affinity via Spatial Propagation Networks,” led by Sifei Liu. The paper includes "theoretical underpinnings of the neural network’s operation along with mathematical proofs of its implementation." Nvidia highlights the speed in particular: the network, which runs on GPUs with the CUDA parallel programming model, is up to 100 times faster than previously possible.

Zak Islam is a freelance writer focusing on security, networking, and general computing. His work also appears at Digital Trends and Tom's Guide.

-

redgarl

ohhhh a blog post... nice... can I do one that I discovered the secret of immortality? Am I going to be on TH headlines for this... breakthrough.

-

alfaalex101

10 years, and this is the coolest thing I've ever read on Tom's Hardware. It's insane how far technology has come.

-

bit_user

redgarl said: ohhhh a blog post... nice... can I do one that I discovered the secret of immortality? Am I going to be on TH headlines for this... breakthrough.

That's how a lot of research organizations announce their findings, these days. The blogs are generally more accessible than the actual research papers, which are aimed squarely at academic researchers and industry practitioners. However, if you want to see the details, they pretty much always have a link to the papers.

If you go back and look, many of the articles on this site that report on findings announced by researchers at Google, Nvidia, Facebook, Amazon, Microsoft, etc. actually link to blog entries as their source.

-

redgarl

Is it really that breakthrough? Nvidia is beating the drum about AI but the only thing they did is using video feed for autonomous car or trying to put an algorithm when it comes to neural network. There is so many players in the field, for example did you know that Blackberry is deeply involved in the process of car network communication.

But whatever I am saying, the mighty Nvidia just discover how to make cats with an algorithm...

-

bit_user

redgarl said: Is it really that breakthrough? Nvidia is beating the drum about AI but the only thing they did is using video feed for autonomous car or trying to put an algorithm when it comes to neural network.

Hmmm... First you criticize sourcing from a blog. Now it's questioning the novelty of the discoveries? What really is your issue, here?

I think it's legit. Look at the images. In one case, they developed a network capable of changing the weather in a photo or changing the species of cat. And the results look absolutely convincing. I can think of a lot of less newsworthy items covered on this site.

They also mentioned a case where a novel type of neural network was developed that can perform image segmentation (among other tasks) with industry-leading accuracy at (they claim) 100x the performance. That sure sounds like it's advancing the state of the art. In fairness, this is a bit esoteric for the typical Tom's reader.

redgarl said: But whatever I am saying, the mighty Nvidia just discover how to make cats with an algorithm...

I'm not saying you need to care about neural networks or AI, but IMO criticizing what you're clearly not even trying to understand only reflects poorly on you.

Yeah, we're sorry your EVGA graphics card blew up. Seriously, I wouldn't wish that on anyone. But... I don't really see it as a good reason to dump on everything Nvidia-related.

Where I would voice a complaint about the article is the way it headlines an improvement in training time. It's not clear to me whether the author is assuming that the GAN technique reduces training time (I'm not sure it does - just reduces the need for labelled data) or what.

-

photonboy

Guys, it's a very COMPLICATED task to do AI like this. In fact, you probably shouldn't even make criticisms based on only a cursory understanding of the facts let alone reading this article alone...

Heck, did you even really READ the article because it said there was up to a "100 times" improvement due to the training that they got by setting up an unsupervised analysis...

In fact, "true AI" (not scripted, limited reactions) requires the system be setup in some way to be unsupervised even if it's just for training purposes, but you guys probably knew that already.

-

derekullo

C'mon, I'm sure you're just a little bit curious what kind of lion a picture of a toaster would make.

If it can turn a baby kitten into a lion then I'm sure it can at least do something funny with a toaster.

-

bit_user

photonboy said: Guys, it's a very COMPLICATED task to do AI like this. In fact, you probably shouldn't even make criticisms based on only a cursory understanding of the facts let alone reading this article alone... Heck, did you even really READ the article because it said there was up to a "100 times" improvement due to the training that they got by setting up an unsupervised analysis...

Thanks, I looked at the papers.

BTW, as impressive as the cherry-picked results are, other results are a fair bit more amusing:

https://photos.google.com/share/AF1QipNCYGAA1lDqXIzEHlE7_s7jfN7LnR3-qMoUXF9coH-0FaDwEAEZjwGPbTedzA5V3w?key=emoyWmw3eWNrdjFZd1lYc2F5QWNtNDUwNDk1dWZR

The 100 times is not a claim I saw in that paper, itself, and seems to refer to inferencing performance - not training.