Early Verdict

Thermal throttling isn't a big issue for most users, but a heat sink makes sense if your system has less-than-optimal airflow and a large video card covering your only M.2 slot. The Team Group T-Force Cardea 480GB pairs MLC NAND performance with passive cooling to create a more robust M.2 SSD than we've seen before. We really like this drive, but expect to spend over $250.

Pros

- MLC NAND flash
- Passive cooling
- Priced well

Cons

- No SSD or migration software
- Limited availability


Specifications & Overview

Modern integrated circuits have a problem. Lithographies have shrunk while transistor technology has increased switching frequencies. The result is faster devices with less surface area to dissipate heat. There are several ways to address the challenge. The first is to set a lower base clock and then boost the speed under load. This lets the IC run cooler more of the time, but there is always some latency involved in making the jump to hyperspace (the turbo clock speed). The second method is to run the chip at full speed until it reaches a critical temperature and then downclock it to reduce heat generation, but that slows the IC down precisely when you need it the most. The third method is kind of old school: you simply put a big heat sink over the IC and run it at full speed all the time. That's the solution we're looking at today.
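The difference between the second and third approaches can be illustrated with a toy first-order thermal model. This is a sketch, not a model of any real SSD: every constant below (ambient temperature, power draw, time constant, thermal resistances, the trip and resume temperatures, and the 50% throttled power) is a hypothetical value chosen only to show the behavior. A lower thermal resistance stands in for a bigger heat sink.

```python
# Toy first-order thermal model. All constants are hypothetical, not measured.
AMBIENT_C = 35.0       # hypothetical air temperature inside the case
POWER_W = 6.0          # hypothetical controller power at full speed
TAU_S = 60.0           # hypothetical thermal time constant (seconds)
THROTTLE_AT_C = 90.0   # hypothetical throttle trip point
RESUME_AT_C = 80.0     # hypothetical point where full speed resumes


def simulate(r_theta_c_per_w, seconds=1800):
    """Return (fraction of time at full speed, peak temperature in C).

    r_theta_c_per_w is the thermal resistance to ambient; a big heat sink
    lowers it, so the same power produces a smaller temperature rise.
    """
    temp = AMBIENT_C
    throttled = False
    full_speed_steps = 0
    peak = temp
    for _ in range(seconds):  # one-second time steps
        # Throttle with hysteresis: cut speed at the trip point and restore
        # it only after the controller cools below the resume point.
        if temp >= THROTTLE_AT_C:
            throttled = True
        elif temp <= RESUME_AT_C:
            throttled = False
        power = POWER_W * (0.5 if throttled else 1.0)
        if not throttled:
            full_speed_steps += 1
        # Temperature relaxes toward the steady state for the current power.
        target = AMBIENT_C + power * r_theta_c_per_w
        temp += (target - temp) / TAU_S
        peak = max(peak, temp)
    return full_speed_steps / seconds, peak


for label, r in (("no heat sink", 12.0), ("large heat sink", 6.0)):
    frac, peak = simulate(r)
    print(f"{label}: full speed {frac:.0%} of the time, peak {peak:.1f} C")
```

With these made-up numbers, the bare controller's steady-state temperature would sit above the trip point, so it cycles in and out of throttling; the lower-resistance case settles below the trip point and never slows down, which is the whole argument for a large heat sink.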

Some early M.2 SSDs had minor issues with thermal throttling. At one time, we even measured a 114C surface temperature on the Samsung XP941 controller. I've never seen thermal throttling as a significant issue for most users. The truth is, we don't run these drives like you do at home. Our testing spans four days, and the drive is active nearly the entire time. Over the course of testing, we read and write years' worth of data compared to a typical user environment. The accelerated testing allows us to measure performance under different workload patterns and to explore corner-case issues. To mimic a real-world environment, we inject idle time between tests to let the drives cool off and recover from the previous workload.

In contrast, most PC users load a large amount of data quickly, roughly 15GB over 10 minutes, during Windows installation. After installing drivers and commonly used apps like Office, most data arrives at the speed of the internet connection. Other common use cases include backing up data (reading most of the data on your drive at queue depth 1) and editing a 2GB video file. In a properly configured desktop with adequate airflow, it's difficult to see the effects of thermal throttling during these simple workloads.
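Turning the Windows-installation figures into an average rate shows how lightly even that burst loads the drive. A quick back-of-the-envelope check, assuming decimal units (1 GB = 1,000 MB) and comparing against the 480GB model's rated 1,450 MB/s sequential write speed:

```python
# Figures from the text: ~15 GB written over ~10 minutes during Windows setup,
# versus the Cardea 480GB's rated 1,450 MB/s sequential write speed.
# Decimal units (1 GB = 1,000 MB) are assumed, as drive makers use them.
INSTALL_GB = 15
INSTALL_MINUTES = 10
RATED_SEQ_WRITE_MBPS = 1450


def average_mbps(gigabytes, minutes):
    """Average transfer rate in MB/s for a given amount of data and time."""
    return gigabytes * 1000 / (minutes * 60)


avg = average_mbps(INSTALL_GB, INSTALL_MINUTES)
duty = avg / RATED_SEQ_WRITE_MBPS
print(f"average {avg:.0f} MB/s, about {duty:.1%} of rated sequential write")
```

An average of 25 MB/s is under 2% of the rated sequential write speed, which is why the controller rarely gets hot enough to throttle in this scenario.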

Heat sinks can look really good from an aesthetic point of view. To each their own, my grandmother always said. They are not quite as audacious as RGB everything, but heat sinks do provide real benefits for SSDs in a system with limited airflow.

Enter the new Team Group T-Force Cardea SSD. This drive places a large, full-length heat sink over a standard Phison PS5007-E7 M.2 2280 SSD. The heat sink is a complete departure from the thin aluminum strip Plextor used on the M8Pe(G) M.2 SSD we tested several months ago. The Cardea's beefy heat sink is more than adequate to cool the tiny E7 controller.

At first glance, the heat sink's height looks like it could be a problem with video cards mounted above the M.2 slot, which motherboard vendors commonly place between two PCIe slots. That isn't the case. We tested five video cards, both AMD- and NVIDIA-based, and they all fit without touching the Cardea's heat sink. You would need a feeler gauge to measure the gap between the two heat sinks, but we never ran into a video card that wouldn't seat completely. That's not to say every video card with a custom cooler will fit without issue. The video card companies ride the line of the PCIe specification, but the cards we tried, like the Asus GeForce GTX 680 DirectCU II TOP, fit fine. The DirectCU II TOP is also the best example of a large GPU (it occupies three PCIe slots) that restricts airflow to an SSD under the PCIe slot.

Specifications

Team Group released the T-Force Cardea in two capacities: 240GB and 480GB. We have the larger and faster model in for testing.

There is a slight performance difference between the two capacities. Most users will not see a difference in four-corner performance, but they will notice one in mixed workloads. The T-Force Cardea 480GB offers up to 2,650/1,450 MB/s sequential read/write speeds. Random performance comes in at 180,000/150,000 read/write IOPS, but you need multiple threads to achieve those numbers. We test with a single thread because that's how most software addresses storage.
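Why a single thread can't reach the rated random IOPS follows from Little's law: the number of requests in flight equals throughput multiplied by latency. A minimal sketch, assuming a hypothetical 80-microsecond per-request latency and 4 KiB transfers (neither figure comes from Team Group), shows how far apart queue depth 1 and the rated 180,000 read IOPS sit:

```python
import math

# Little's law: outstanding requests = throughput (IOPS) x latency (seconds).
# The 80-microsecond latency is a hypothetical value for illustration, not a
# measurement of the Cardea. Real latency rises with queue depth, so treat
# the queue depth computed here as a rough lower bound.
RATED_READ_IOPS = 180_000       # from Team Group's specification
HYPOTHETICAL_LATENCY_S = 80e-6  # assumed per-request service time
BLOCK_BYTES = 4096              # assumed 4 KiB random transfers


def iops_at_queue_depth(queue_depth, latency_s):
    """Throughput sustained with `queue_depth` requests always in flight."""
    return queue_depth / latency_s


def queue_depth_needed(target_iops, latency_s):
    """Smallest whole number of outstanding requests that reaches target_iops."""
    return math.ceil(target_iops * latency_s)


qd1 = iops_at_queue_depth(1, HYPOTHETICAL_LATENCY_S)
needed = queue_depth_needed(RATED_READ_IOPS, HYPOTHETICAL_LATENCY_S)
print(f"QD1: {qd1:,.0f} IOPS (~{qd1 * BLOCK_BYTES / 1e6:.0f} MB/s)")
print(f"rated {RATED_READ_IOPS:,} IOPS needs >= {needed} requests in flight")
```

Under these assumptions, one synchronous thread tops out around 12,500 IOPS, while the rated figure needs at least 15 requests outstanding, which a single blocking thread can't generate on its own.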

The Cardea uses a PCIe 3.0 x4 connection and the NVMe protocol. This is the first Phison E7 we've tested with the new 3.6 firmware. The "M" in the E7M03.6 firmware version leads us to believe this is MP (mass production) firmware, which means it has been tested for compatibility and stability. We haven't heard anything about this update from any of the manufacturers, so it will be interesting to see what we find during testing.

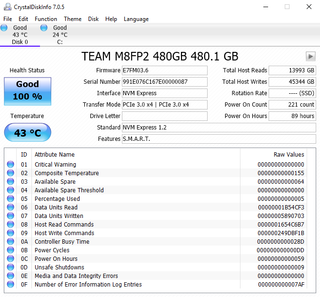

The image above was taken three-quarters of the way through testing, by which point we had already written 45 terabytes to the drive.
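To put the 45 TB in context, it can be compared with the 670 TB write limit Team Group specifies for the 480GB model. The full-run figure below is a simple linear extrapolation and assumes the remaining quarter of testing wrote data at the same average pace:

```python
# Figures from the text: 45 TB written about three-quarters of the way
# through testing, against the 480GB model's 670 TB warranty limit.
# The full-run total assumes the remaining tests wrote at the same pace.
WRITTEN_TB = 45
FRACTION_OF_TESTING = 0.75
WARRANTY_TB = 670

projected_total_tb = WRITTEN_TB / FRACTION_OF_TESTING
print(f"used so far: {WRITTEN_TB / WARRANTY_TB:.1%} of the warranty limit")
print(f"projected full run: {projected_total_tb:.0f} TB "
      f"({projected_total_tb / WARRANTY_TB:.1%} of the limit)")
```

Even four days of near-constant accelerated testing consumes well under a tenth of the drive's warrantied endurance.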

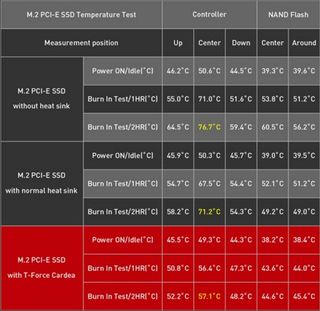

Team Group released temperature data for the T-Force Cardea with the large heat sink. There are three sets of data. The first is without a heat sink. The second is labeled "normal heat sink," but we're not sure what that actually means. The third and final set measures the Cardea with the large heat sink. During a two-hour burn-in test, the large heat sink kept the E7 controller nearly 20 degrees cooler than no heat sink at all. We don't have any data about the testing environment. The Phison PS5007-E7 employs a dynamic throttling algorithm, and the controller can reach temperatures as high as 90C before activating an extreme throttle that severely degrades performance.

Pricing And Accessories

Newegg already carries the Team Group T-Force Cardea in both capacities. The 240GB model currently retails for $149.99. The 480GB model we have in for testing sells for $269.99. The pricing scheme moves the E7 out of the entry-level NVMe category and pits it against products like the OCZ RD400, Plextor M8Pe(G) (M.2 with heat sink model), and Samsung 960 EVO.
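Because prices change often, cost per gigabyte is a handy way to compare the two capacities. A quick calculation from the Newegg prices quoted above:

```python
# Newegg prices quoted in the text, converted to cost per gigabyte.
PRICES_USD = {240: 149.99, 480: 269.99}  # capacity in GB -> price in USD

for capacity_gb, price in PRICES_USD.items():
    print(f"{capacity_gb}GB: ${price / capacity_gb:.3f} per GB")
```

At these prices, the 480GB model costs roughly 6 cents less per gigabyte than the 240GB model.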

We didn't find any accessories inside the package, and Team Group doesn't offer SSD Toolbox software.

Warranty And Endurance

Team Group backs the T-Force Cardea with a 3-year warranty limited by the amount of data you write to the drive. The 240GB model can absorb 335 TB of warrantied data writes, while the 480GB model comes with 670 TB.
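Endurance ratings are often expressed as drive writes per day (DWPD) as well as terabytes written. A small conversion sketch, assuming decimal units and 365-day years over the 3-year warranty:

```python
# Convert a terabytes-written (TBW) rating into drive writes per day (DWPD)
# over the warranty period. Decimal units (1 TB = 1,000 GB) and 365-day
# years are assumed.
WARRANTY_YEARS = 3
TBW_BY_CAPACITY_GB = {240: 335, 480: 670}


def drive_writes_per_day(tbw, capacity_gb, years):
    """Full-capacity writes per day that the TBW rating allows over `years`."""
    full_drive_writes = tbw * 1000 / capacity_gb
    return full_drive_writes / (years * 365)


for capacity_gb, tbw in TBW_BY_CAPACITY_GB.items():
    dwpd = drive_writes_per_day(tbw, capacity_gb, WARRANTY_YEARS)
    print(f"{capacity_gb}GB: {tbw} TBW = {dwpd:.2f} DWPD for {WARRANTY_YEARS} years")
```

Both capacities work out to the same rate, about 1.27 full drive writes per day, because the endurance rating scales linearly with capacity.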

Packaging

The Team Group T-Force Cardea retail package is fairly straightforward. You get the drive in a retail-ready blister pack, but the package carries very little information for retail shoppers. Team Group doesn't have a retail presence that we are aware of, so most of these drives sell online.

A Closer Look

The Team Force graphic is easiest to see when you view the drive at an angle. You can still see it head-on, but you get the full effect at an angle. On the other side of the drive, the heat sink retention bracket doubles as a cooling device, which helps spread the heat from the NAND packages.

I can't say with certainty that the T-Force Cardea will not fit in any notebook, but it doesn't fit in our Lenovo P70 mobile workstation, which is the largest notebook I have with NVMe support.


damric

Just put an AIO liquid cooler on it. You know you want it.

Btw, typo on the last page: "The NAND shortage is at it speak" should be "its peak".

bit_user

> I've never seen thermal throttling as a significant issue for most users.

Have you ever tried using an M.2 drive, in a laptop, for software development?

Builds can be fairly I/O intensive. Especially debug builds, where the CPU is doing little optimization and the images are bloated by debug symbols.

And laptops tend to be cramped and lack good cooling for their M.2 drives. Thus, we have a real world case for thermal throttling.

Video editing on laptops is another real world case I'd expect to trigger thermal throttling.

> We test with a single thread because that's how most software addresses storage.

In normal software (i.e. not disk benchmarks, databases, or server applications), you actually have two threads. The OS kernel transparently does read prefetching and write buffering. So, even if the application is coded with a single thread that's doing blocking I/O, you should expect to see some amount of QD >= 2, in any mixed-workload scenario. About the only time you really get strictly QD=1 is for sequential reads.

That said, I'd agree that desktop users (with the possible exception of people doing lots of software builds) should care mostly about QD=1 performance and not even look at performance above QD=4. In this sense, perhaps tech journalists delving into corner cases and the I/O stress tests designed to do just that have done us all a bit of a disservice.

bit_user

> my grandmother always said they are not quite as audacious as RGB everything, but heat sinks do provide positive benefits ...

How I first read this.

Me: "Whoa, cool grandma."

Yeah, I read fast, mostly in a hurry to reach the benchmarks.

Seriously, you could spice up your articles with a few such devices. Maybe tech journalists would do well to cast some of their articles as short stories, in the same mold as historical fiction. You don't fictionalize the technical details - only the narrative around them.

Consider that, as odd as it might sound, it still wouldn't be quite as far out there as the Night Before Christmas pieces. The trick would be not to make it seem too forced... again, with my thoughts turning towards The Night Before Christmas pieces (as charming as they were). So, no fan fiction or Fresh Prince, please.

bit_user

> When I started building PCs, it was common to see one fan at the bottom of the case to bring in cool air, and another on the back to exhaust warm air. I haven't seen a case with only two fans in a very long time. That doesn't mean they are not out there; it's just rare.

My workstation has a 2-fan configuration with an air-cooled 130 W CPU, 275 W GPU, quad-channel memory, the enterprise version of the Intel SSD 750, and 2 SATA drives. Front fan is 140 mm and blows cool air over the SATA drives, while rear fan is a 120 mm behind the CPU.

860 W PSU is bottom-mounted, and only spins its fan in high-load, which is rare. The graphics card has 2 axial fans. Everything stays pretty quiet, and I had no throttling issues when running multi-day CPU-intensive jobs or during the Folding@home contest.

In addition to that, I have an old i7-2600K that just uses the boxed CPU cooler, integrated graphics, and a single 80 mm Noctua exhaust fan, in a cheap mini-tower case. Never throttles, and I don't even hear it unless something pegs all of the CPU cores (it sits about 4' from my feet, on the other side of my UPS). It does have a top-mounted PSU, which is a Seasonic G-Series semi-modular, that I think is designed to keep its fan running full-time. It started as an experiment, but I never found a reason to increase its airflow. I haven't even dusted it in a couple years.

I'm left to conclude that you only need more than 2 big fans in cases with poor airflow, multi-GPU, or overclocking.

mapesdhs

The Comparison Products list includes the 950 Pro, but the graphs don't have results for it. Likewise, the graphs have results for the SM961, but that model is not in the Comparison Products list. A mixup here? Which model is that data actually referring to?

Ian.