The Latest On VR Locomotion

Among the many challenges presented by the development of consumer VR, locomotion is one of the most important. At a session during Oculus Connect 4, Oculus’ Tom Heath detailed some of the research the company has been conducting into understanding how to make movement work better for VR viewers.

We also saw and learned more about what’s happening in locomotion from some of the new games that were being showcased at the show.

The Locomotion Problem

In flat-screen games, we take for granted that you press a key, click a button, move a joystick, or point a mouse to get from Point A to Point B. Virtual reality, though, introduces two new layers of complexity to movement. One is that it invites you to move around using your body and head rather than simple inputs, and that is extremely difficult to develop for. The other is that moving in VR is physically tricky: when your eyes think you’re moving through the VR experience but your body remains stationary, the resulting vestibular disconnect can make you nauseous.

We’ve discussed the issues with locomotion many times before on these pages, including noting how many half-solutions are out there and discussing ways various developers have figured out creative solutions.

The VR industry is tackling the problem of locomotion from multiple angles, including via hardware (creating better controllers, adding inside-out tracking, adding body tracking), understanding the baseline comfort needs in VR (90 Hz refresh rate, high-enough resolution), and being clever with game development (“comfort modes,” teleportation, snap mechanics, and so on).

Oculus’ Research On Visual Tricks

Oculus has researchers looking at all of it, but at his Oculus Connect 4 session, Heath detailed the ways in which the company is experimenting with solving locomotion issues using what amounts to visual and mental trickery. It’s sort of the VR research equivalent of playing with brain teasers.

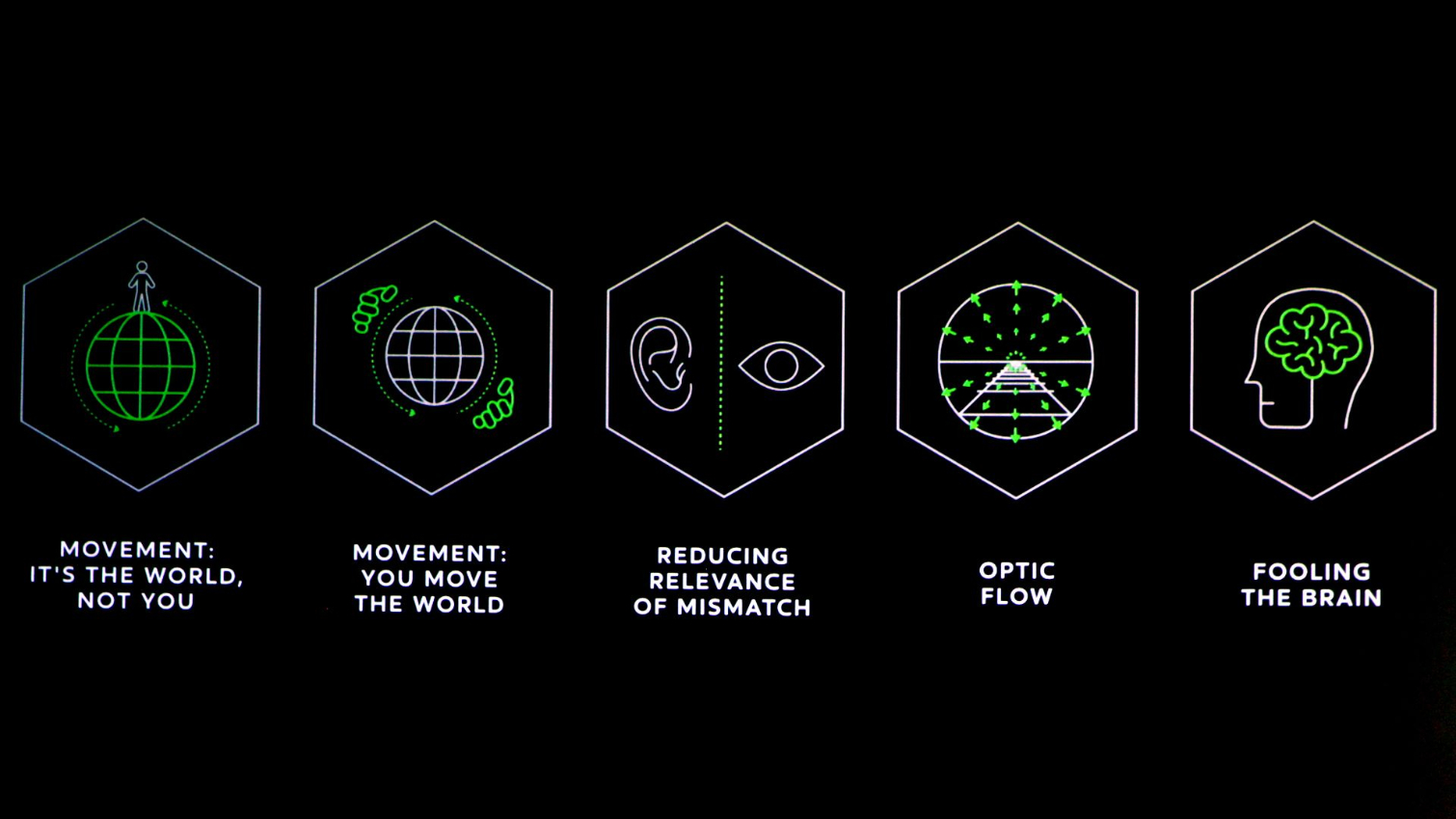

There are five notions Heath discussed:


- The world is moving while you are not
- You are moving the world
- Reduce the relevance of mismatch
- Optic flow
- Fooling the brain

There’s another layer to all of this, though. It’s not just that these are tricks you could employ when building a VR experience; you can use them to varying degrees depending on the comfort level of the viewer, and what’s more, Heath suggested that devs could use them to introduce people to VR and dial the aids down over time as they become more comfortable.
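Heath didn’t describe an implementation, but the idea of dialing aids down over time can be pictured as a simple schedule. The Python below is a minimal sketch under our own assumptions: the function name `comfort_aid_strength`, the linear per-session decay, and the 0.2 floor are all hypothetical, not Oculus values.

```python
def comfort_aid_strength(sessions_completed: int,
                         start: float = 1.0,
                         floor: float = 0.2,
                         decay_per_session: float = 0.1) -> float:
    """Return a 0..1 multiplier for comfort aids (frame size, fake tilt, etc.).

    Hypothetical linear schedule: full strength for a brand-new user,
    reduced by a fixed step per completed session, never below `floor`.
    """
    if sessions_completed < 0:
        raise ValueError("sessions_completed must be non-negative")
    strength = start - decay_per_session * sessions_completed
    # Clamp into [floor, start] so the aids never switch off entirely.
    return max(floor, min(start, strength))


# Usage: print the aid strength a new, intermediate, and veteran user would get.
for n in (0, 3, 10):
    print(n, round(comfort_aid_strength(n), 2))
```

Because sensitivity varies so much between people, a real title would presumably also let the user override or reset the schedule.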

The World Is Moving, Not You

One way to defeat that vestibular disconnect is to make your eyes perceive that the world is moving around you instead of the other way around. The main hook is giving the viewer some kind of reference point. Thus, instead of seeing one thing (a world that is moving), you see two things (a world that is moving inside a world that is not).

Heath noted that tabletop games like Brass Tactics or Landfall are perfect for that kind of experience. You’re inside a sky box (a stationary world), and the moving parts of the game happen within that, on a tabletop. (It’s worth noting that these sorts of tabletop games would be ideal AR titles.) He also noted that this works really well in driving games where you can place a grid in the background, so even as you zip around a track, your brain always has that reference point. Archangel, a title that puts you inside a giant mech on rails, uses this to great effect by putting you inside a cockpit that serves as your static reference point.

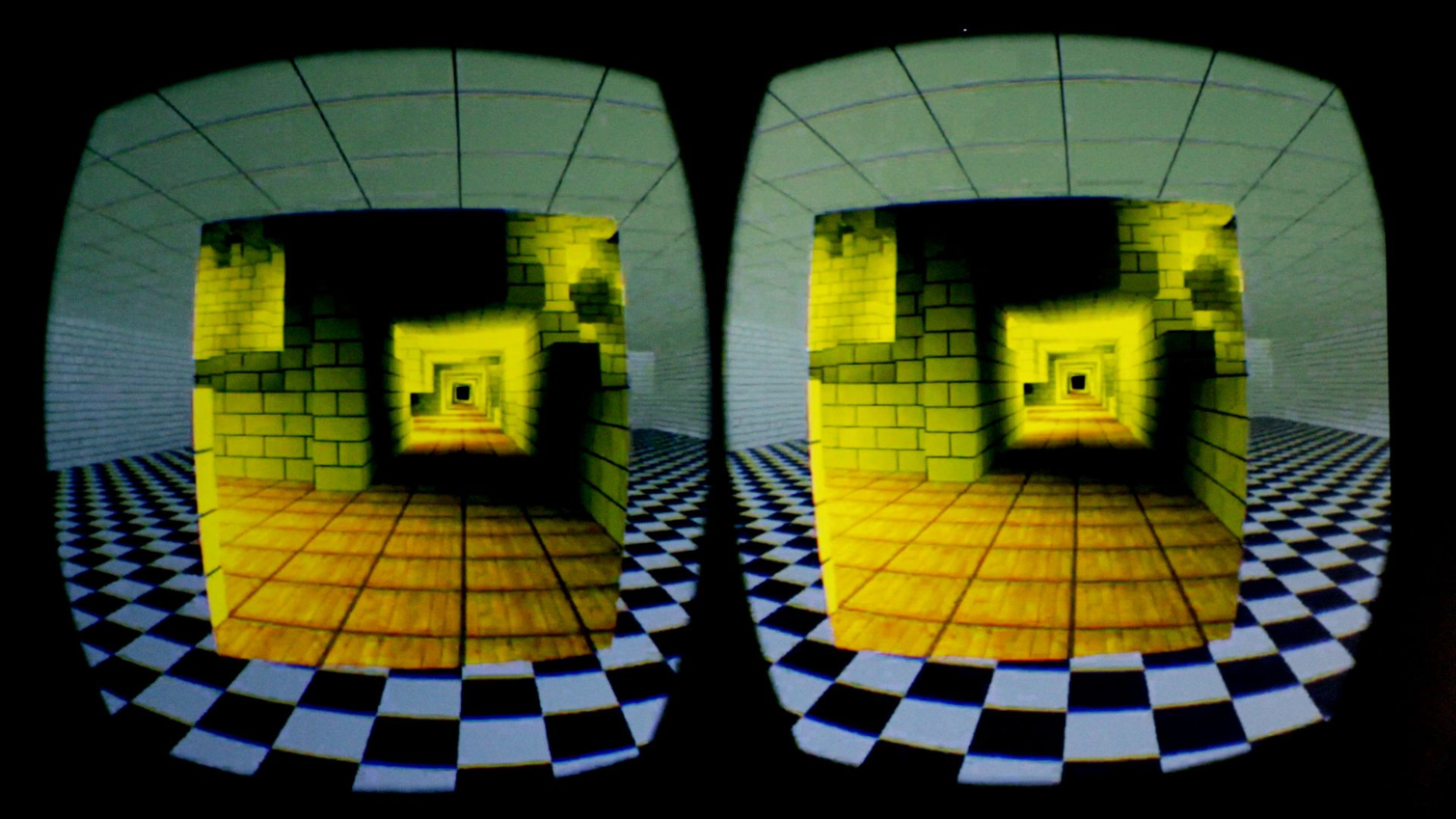

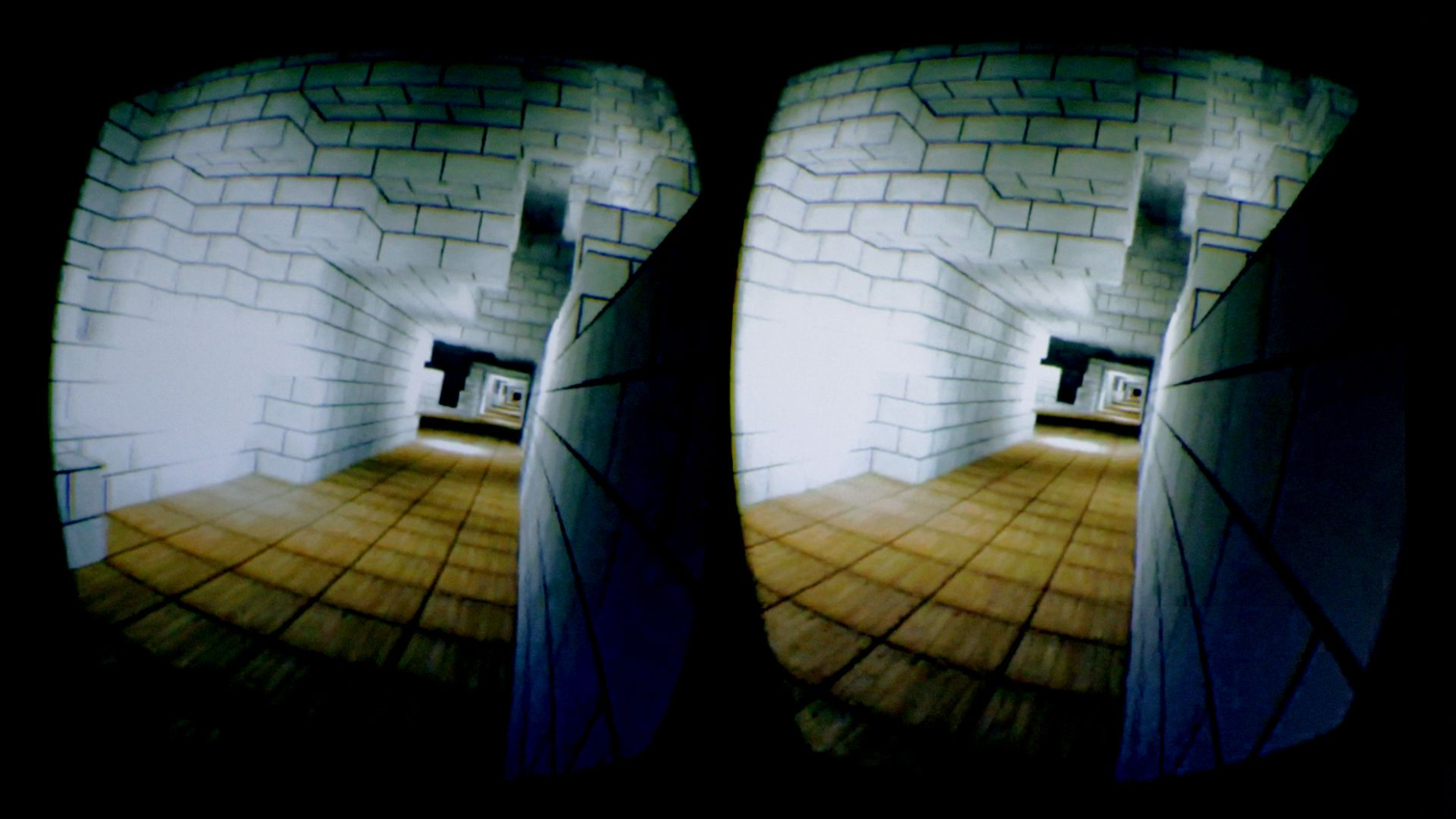

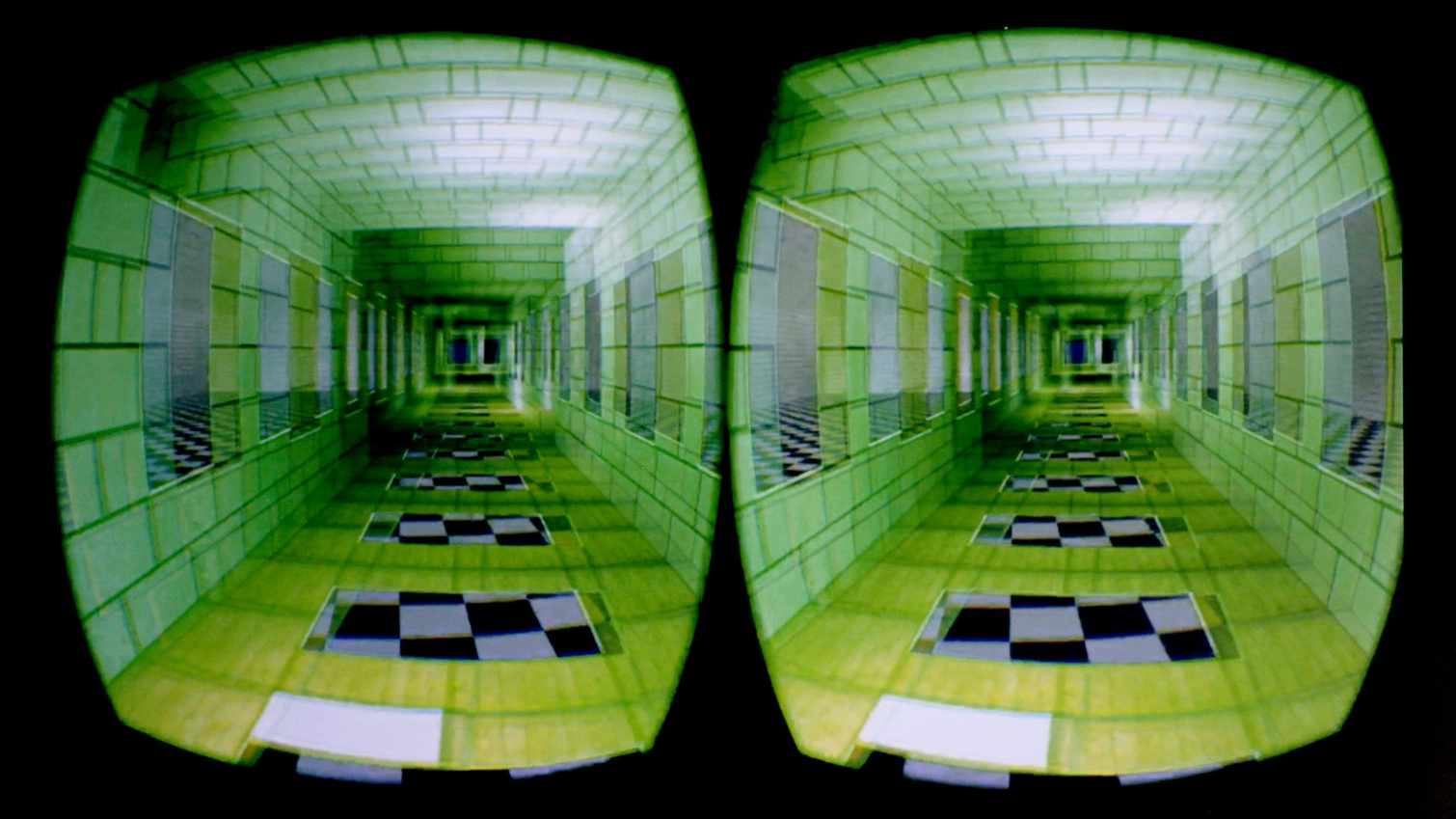

That concept, though, can be expanded to other types of VR games. In one example, Heath showed how adding a static periphery can help you. The yellow world is flying by, but the gray one doesn’t move. It’s not unlike the effect of a big TV screen, and depending on the comfort level of an individual, you can tighten or loosen the size of that frame.
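The adjustable static frame boils down to a per-point test: does this part of the view show the moving world or the static one? This sketch assumes normalized view coordinates in [-1, 1] and a square "TV screen" window; `shows_static_world` and `static_fraction` are hypothetical names, and in a real engine the test would typically live in a shader or stencil mask rather than in Python.

```python
def shows_static_world(x: float, y: float, aperture: float) -> bool:
    """True if view point (x, y) falls in the static (non-moving) frame.

    x, y: normalized view coordinates in [-1, 1].
    aperture: half-width of the moving-world window in the same units;
              1.0 means no frame, smaller values tighten the frame.
    """
    if not 0.0 < aperture <= 1.0:
        raise ValueError("aperture must be in (0, 1]")
    return abs(x) > aperture or abs(y) > aperture


def static_fraction(aperture: float) -> float:
    """Fraction of the view covered by the static frame.

    The full view is a 2x2 square (area 4); the moving window is
    (2 * aperture) squared, so the static share is 1 - aperture**2.
    """
    return 1.0 - aperture * aperture
```

Tightening the frame for a more sensitive viewer is then just a smaller `aperture`.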

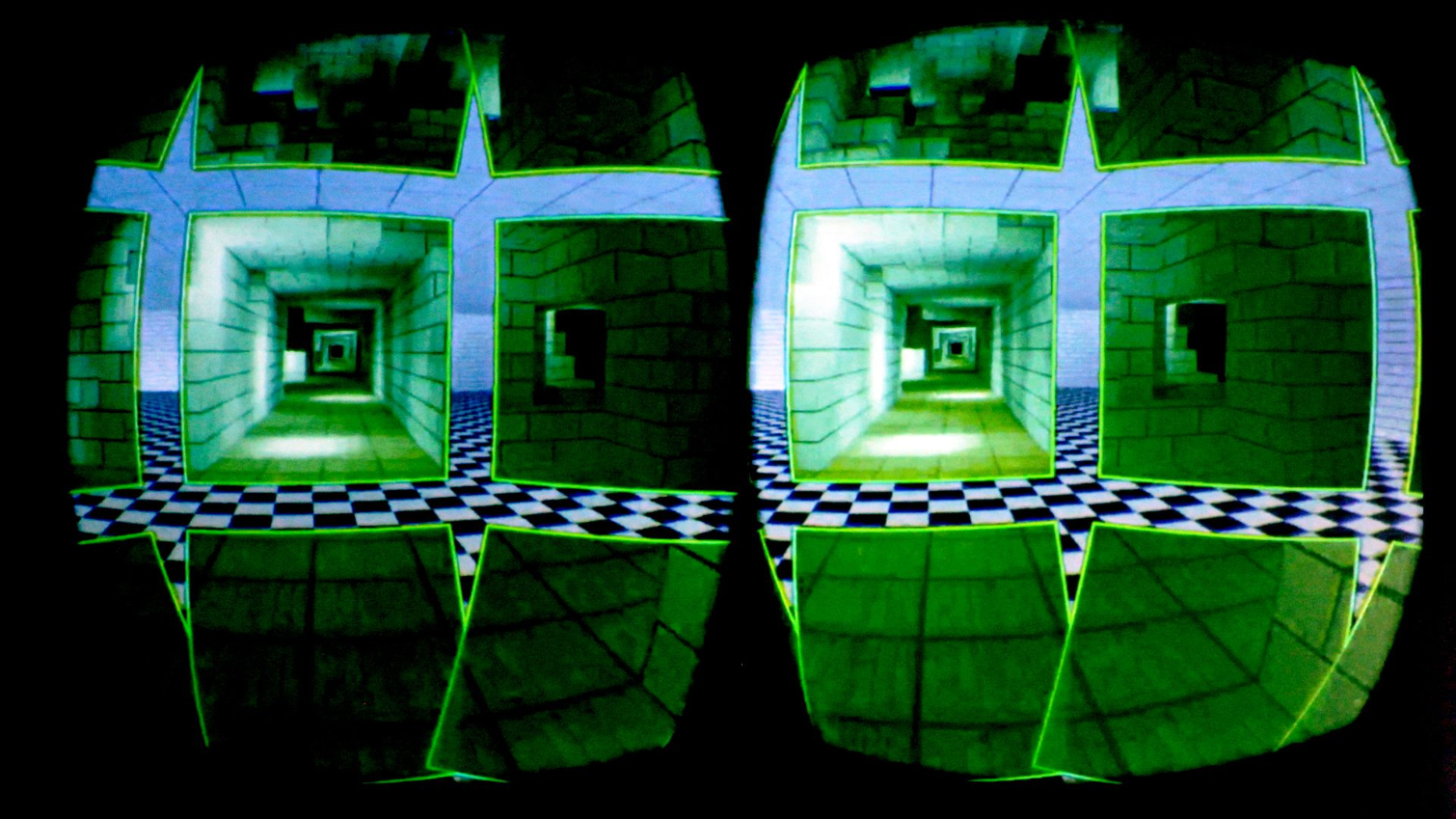

Another trick is to place portals to the static gray world within the green one that’s moving. This is, perhaps, a method that would actually blend quite well into games, because you can create layered environments. For example, you might be sprinting through a hallway, but the windows show the sky or clouds or mountains in the distance, which could ground your brain.

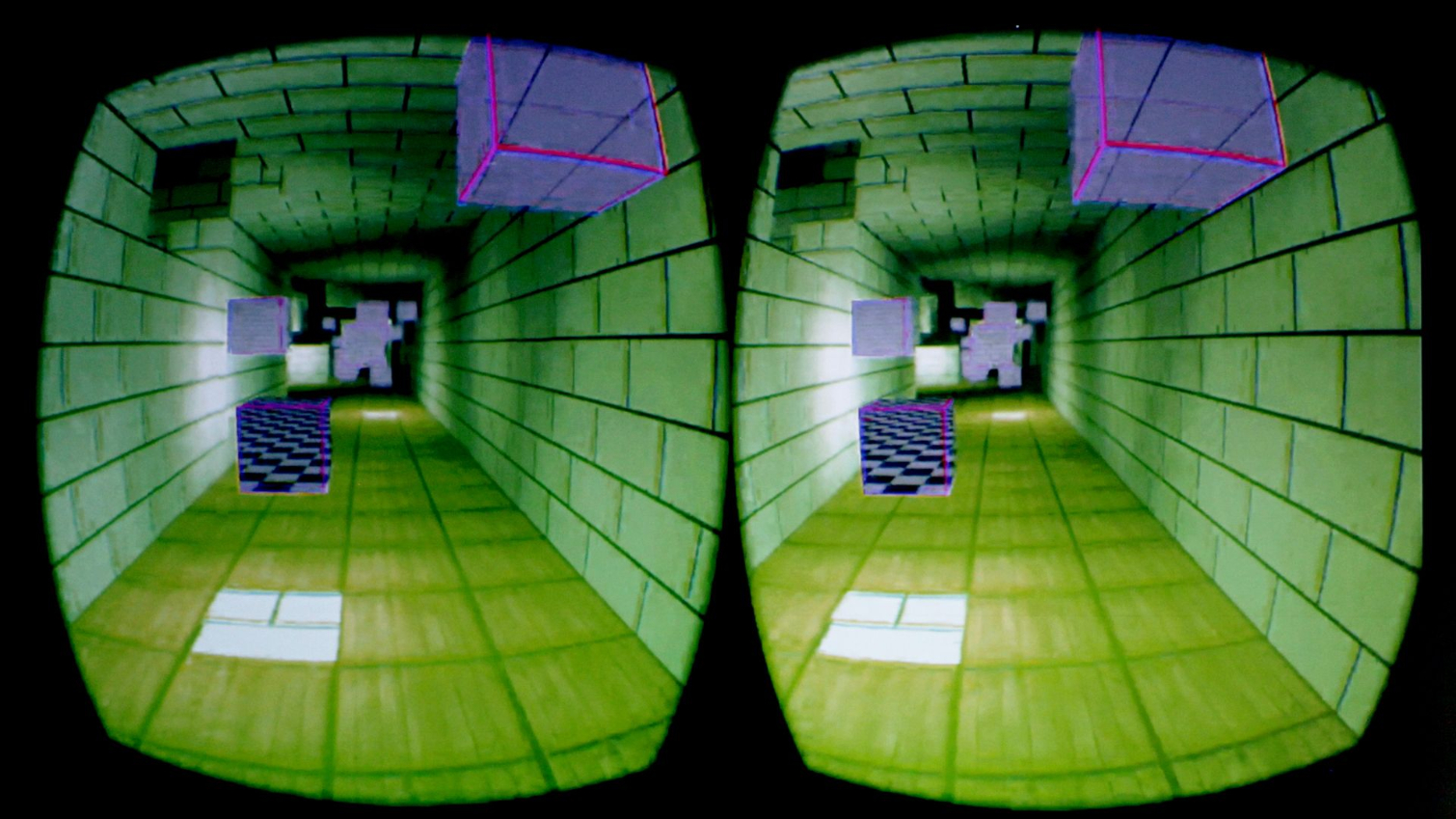

You can accomplish the same “window” notion by, for example, placing these floating blocks within the moving world. It’s perhaps a less graceful way to incorporate static reference points into a real VR experience, but then again, that’s not the purview of Heath’s research team; they're just trying to come up with every solution they can think up that will work.

You Move The World

Grandiose “I can move the world” metaphors aside, when your physical actions cause the virtual world to move, it makes your vestibular system happy. Well, happier.

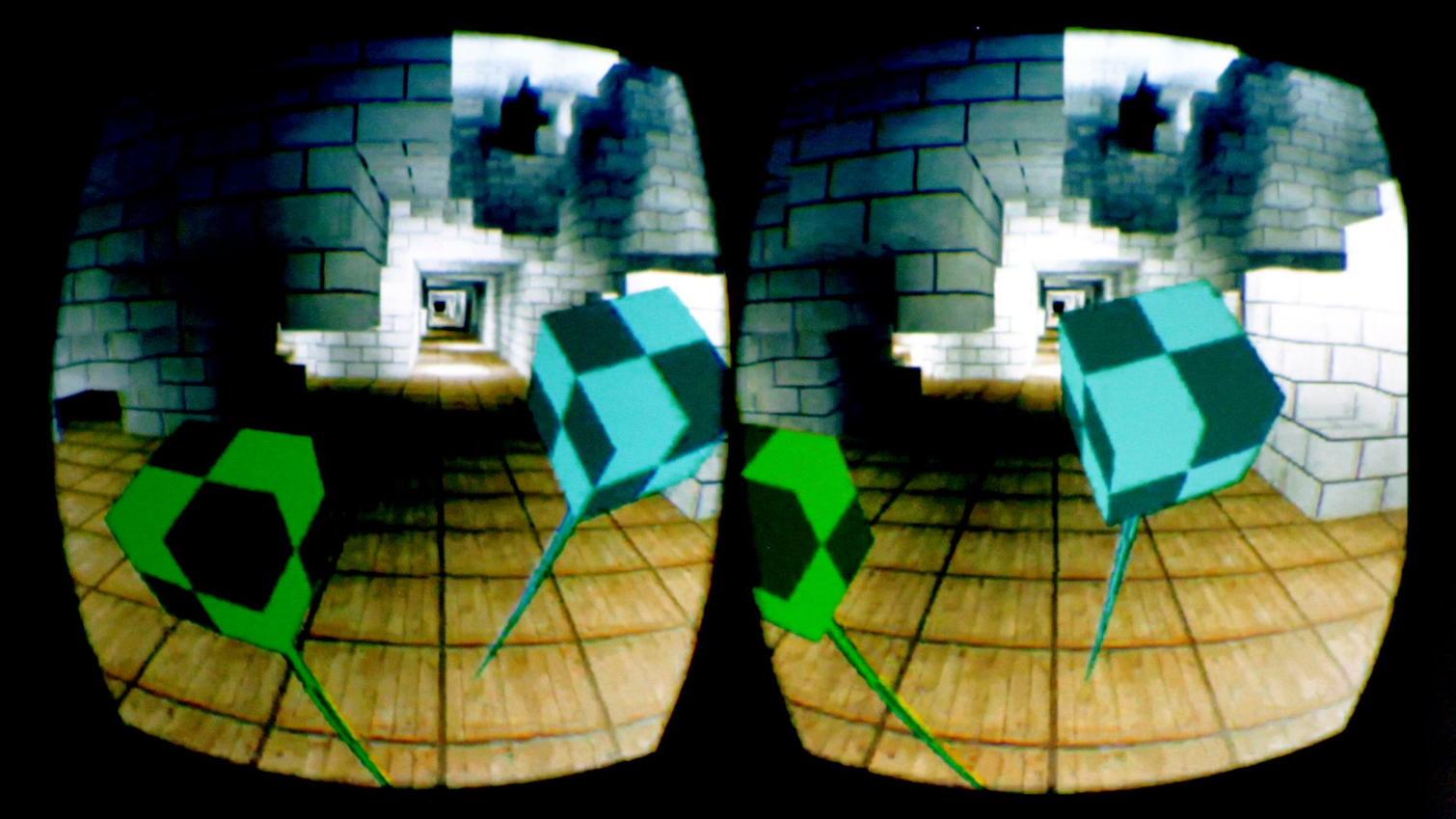

Heath pointed to titles like Lone Echo and The Climb, although we could add a number of non-Rift-exclusive titles to that list, such as Sprint Vector. In fact, Sprint Vector uses the exact “ski pole” mechanic that Heath illustrated.

In the image, the “ski poles” are just blocks on sticks, but the idea is that you can move fast and intuitively, play with momentum, and add elements such as yaw, all of which are key to a fun and engaging VR experience.

Heath also noted that you can plant a ski pole and twist the world, although he acknowledged that not everyone’s brain seems to jibe with that particular type of movement.
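The ski-pole idea can be sketched as two modes: while a pole is planted, the player moves opposite to the hand; once it is released, the player coasts on the last push and slowly loses speed. This is a minimal, translation-only sketch under our own assumptions (the `PoleLocomotion` class, the drag value, and the ground-plane convention are hypothetical); the plant-and-twist yaw Heath mentioned is left out.

```python
from dataclasses import dataclass


@dataclass
class PoleLocomotion:
    """Hypothetical 'ski pole' mover on the ground plane (x, z)."""
    drag: float = 0.5                           # assumed fraction of speed lost per second
    velocity: tuple[float, float] = (0.0, 0.0)  # metres per second

    def update(self, planted: bool, hand_delta: tuple[float, float],
               dt: float) -> tuple[float, float]:
        """Return the player's (dx, dz) displacement for this frame.

        planted: the pole grip is held and the pole tip is "in the ground".
        hand_delta: how far the hand moved this frame relative to the play space.
        """
        if planted:
            # A planted pole is anchored, so pulling the hand back drags the
            # player forward: displacement is opposite to the hand's motion.
            dx, dz = -hand_delta[0], -hand_delta[1]
            self.velocity = (dx / dt, dz / dt)
            return dx, dz
        # Pole released: coast on the momentum of the last push, decaying.
        decay = max(0.0, 1.0 - self.drag * dt)
        self.velocity = (self.velocity[0] * decay, self.velocity[1] * decay)
        return self.velocity[0] * dt, self.velocity[1] * dt
```

Keeping momentum after release is what lets the player “play with momentum” rather than stopping dead between pushes.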

Reduce The Relevance Of Mismatch

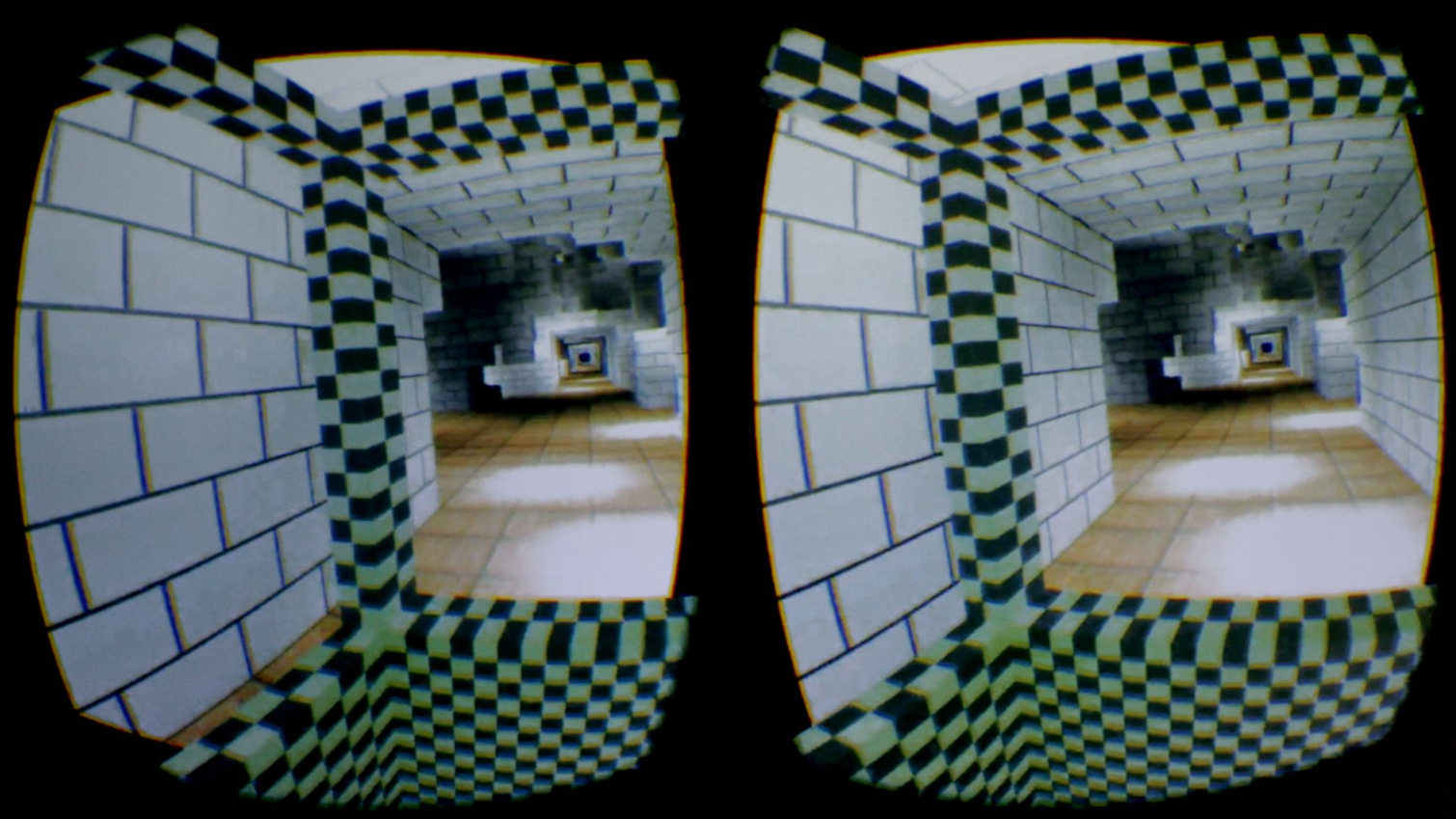

This technique runs completely counter to the holy-grail VR concept of believing that you’re somewhere other than where you physically are, but one of the ideas Heath’s team is playing with is to lean into the vestibular mismatch instead of trying to hide it or make it more comfortable. The theory goes that if your brain doesn’t believe that what your eyes are seeing is real, the brain/body link breaks, and there’s no vestibular disruption.

That is, you acknowledge the vestibular mismatch, but you reduce its relevance.

Heath gave examples such as introducing disruptive graphics, specifically a “cockpit” that moves in a “different, more pronounced way than the rest of the scene.” In this example, the cockpit lurches all around, but Heath noted that the team had trouble making this work: if you don’t distort the world enough, you still have vestibular issues. He did note, though, that they had some success when they fiddled with the framerate just a bit to reduce the world’s realism.

This is, again, completely counter-intuitive, but the idea is that if the world looks too unreal, then your brain will automatically switch to using the real-feeling cockpit for stability. (Of all the tricks Heath discussed, this one seemed to be the least viable, but the germ of the idea is certainly fascinating.)
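One way to read “fiddling with the framerate” is to update the world’s motion at a lower rate than the cockpit. That is our assumption, not a description of Heath’s demo. The sketch holds the world’s locomotion pose for `world_hold_frames` display frames (sample-and-hold) while the cockpit updates every frame; we also assume head tracking itself stays at the full display rate, since lowering that would likely add discomfort rather than remove it. Both function names are hypothetical.

```python
def world_pose_frame(display_frame: int, world_hold_frames: int) -> int:
    """Index of the frame whose world (locomotion) pose is shown on display_frame.

    The world is only re-posed every `world_hold_frames` display frames;
    the cockpit would always use `display_frame` directly.
    """
    if world_hold_frames < 1:
        raise ValueError("world_hold_frames must be >= 1")
    return display_frame - display_frame % world_hold_frames


def world_update_rate(display_hz: float, world_hold_frames: int) -> float:
    """Effective update rate of the world's motion, e.g. 90 Hz / 2 = 45 Hz."""
    return display_hz / world_hold_frames
```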

Optic Flow

The simplest way of describing optic flow is this: When stuff flies past your vision, you feel like you’re moving. Heath’s team is experimenting with providing an overlay that moves a second optic flow in an equal and opposite direction. The idea is that they’ll cancel each other out.

Heath admitted that they’re a bit skeptical of this one so far. And there’s an inherent problem, which is that you may end up confusing the reverse flow with the “real” one, with likely disastrous results. Solving that problem, he noted, should be fairly easy, although because everyone has different sensitivities to VR locomotion, it may not be quite as simple as all that.
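The “equal and opposite” overlay amounts to giving overlay particles the negated velocity of the scene’s apparent motion. This minimal sketch assumes the scene’s optic flow is already available as a single view-space velocity (a simplification, since real flow varies across the view); `counter_flow_velocity`, `advance_overlay`, and the `gain` parameter are hypothetical.

```python
Vec3 = tuple[float, float, float]


def counter_flow_velocity(scene_flow: Vec3, gain: float = 1.0) -> Vec3:
    """Velocity for overlay particles that opposes the scene's optic flow.

    scene_flow: apparent velocity of scene points in view space.
    gain: 1.0 is "equal and opposite"; lower values only partly cancel.
    """
    x, y, z = scene_flow
    return (-gain * x, -gain * y, -gain * z)


def advance_overlay(particles: list[Vec3], scene_flow: Vec3,
                    dt: float, gain: float = 1.0) -> list[Vec3]:
    """Move every overlay particle one time step along the counter-flow."""
    vx, vy, vz = counter_flow_velocity(scene_flow, gain)
    return [(px + vx * dt, py + vy * dt, pz + vz * dt)
            for (px, py, pz) in particles]
```

The confusion risk Heath raised shows up here too: nothing in the math marks which flow is “real,” so any distinction has to come from how the overlay is drawn.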

Heath only touched on the technique of adjusting the optic flow, but there may be much deeper ideas represented here. Anecdotally, we had a conversation with Ubisoft developers who were demoing a new game called Space Junkie, which is a first-person shooter set in outer space. They noted that average people seemed to respond quite well to the locomotion in this title, but they had the opportunity to show the title to an actual astronaut, and they were shocked to find that he became nauseous instantly.

This particular astronaut told the Ubisoft devs that he felt discomfort in the flight sims he trained on, too, but not during his numerous missions to actual outer space.

Why on earth would that be? We have an untested theory that perhaps there isn’t enough optic flow for someone with such keenly tuned visual perception; in other words, not enough visual data is hitting his eyeballs to make the experience feel real. People like astronauts and race car drivers may be able to perceive more than the average person, particularly in their peripheral vision. We wonder if a high-res, 90 Hz experience for someone like that is akin to a low-res, 60 Hz experience for a typical person.

Fooling The Brain

All of the above locomotion experiments are designed to trick the brain, but what Heath was getting at with this section has to do with how you use your head to alter your direction and angle of movement. The question is, can you give the brain just enough of what it wants to make it comfortable?

Instead of requiring you to tilt your head to move in a direction, the developer simply makes it look as though you tilted your head. It’s a lesser-of-two-evils situation: fast animations versus fake head tilt.

In the demo he showed onstage, the view would tilt as it moved, which looks like natural head movement, but Heath said he was just using a keyboard to move forward, back, left, or right. He noted that it’s a lot of work to make this function correctly, and baking in just the right amount of tilt is a tricky balance. He said that devs who use such a technique would be wise to find out how much fake head tilt they can get away with and then dial it down well below that max.
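Heath’s advice (find the maximum tilt people tolerate, then stay well below it) can be sketched as a capped, smoothed roll angle driven by sideways acceleration. The gain `deg_per_mps2`, the 0.5 safety factor, the smoothing time constant, and both function names are our own assumptions; Heath gave no numbers.

```python
import math


def fake_head_roll(lateral_accel: float,
                   max_tolerated_deg: float,
                   safety_factor: float = 0.5,
                   deg_per_mps2: float = 2.0) -> float:
    """Roll angle (degrees) to bake into the camera for a sideways acceleration.

    max_tolerated_deg: the most tilt testers put up with (measured per title).
    safety_factor: fraction of that maximum actually used ("dial it down").
    """
    cap = max_tolerated_deg * safety_factor
    roll = deg_per_mps2 * lateral_accel
    return max(-cap, min(cap, roll))


def smooth(current: float, target: float, dt: float,
           time_constant: float = 0.15) -> float:
    """Frame-rate-independent exponential smoothing toward the target angle."""
    alpha = 1.0 - math.exp(-dt / time_constant)
    return current + (target - current) * alpha
```

Each frame, the renderer would compute the target with `fake_head_roll` and ease the camera toward it with `smooth`, so the tilt never snaps.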

More To Come

Heath said that he and his team have many more software techniques to try, which throws into relief the fact that he didn’t discuss any hardware-aided or physical techniques. As we learned during the subsequent Q&A session, though, they’re working with those as well (they were just beyond the scope of that particular presentation).

He acknowledged, for instance, the curious benefits of pumping your arms as a means of negating motion sickness. In Vindicta, for example, how fast you move depends on how fast you pump your arms. One Tom’s Hardware writer, who is usually sensitive to VR movement, found that the arm-pumping in Vindicta completely negated any nausea. Anecdotally, he’s successfully tried this as a nausea-reducing trick in other games that don’t use arm pumping for movement.
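We don’t know how Vindicta actually maps arm motion to speed, but one plausible sketch is to average the vertical speed of both hands over a short window and scale that into a capped movement speed. The `ArmPumpSpeed` class, the window length, the gain, and the cap are all hypothetical.

```python
from collections import deque


class ArmPumpSpeed:
    """Hypothetical arm-pumping locomotion: faster pumping, faster movement."""

    def __init__(self, window: int = 45, gain: float = 2.0,
                 max_speed: float = 6.0) -> None:
        # Recent per-frame pumping intensities (e.g. 45 frames = 0.5 s at 90 Hz).
        self.samples: deque[float] = deque(maxlen=window)
        self.gain = gain            # metres per second of travel per m/s of hand speed
        self.max_speed = max_speed  # cap so frantic pumping can't go arbitrarily fast

    def update(self, left_vy: float, right_vy: float) -> float:
        """Feed this frame's vertical hand velocities; return movement speed."""
        # Direction doesn't matter when pumping, so use absolute hand speed.
        self.samples.append((abs(left_vy) + abs(right_vy)) / 2.0)
        pump = sum(self.samples) / len(self.samples)
        return min(self.max_speed, self.gain * pump)
```

Averaging over a window keeps speed from stuttering at the top and bottom of each arm swing, where hand velocity briefly drops to zero.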

Heath also said that he’s excited about the prospect of haptics. The Touch controllers already use them to an extent, but controller haptics has room for improvement, and adding haptics to items like body trackers and mocap suits could further heighten the experience.

For example, Heath noted at one point in his talk that he has no nausea problems with rapid acceleration in VR driving simulators, but he realized that he misses that tension you get in your neck when you accelerate in real life. Adding a small haptic component to, say, the back strap of a Rift, he agreed, could simulate that muscle flex and add more realism to an experience.

Suffice it to say that there are multiple approaches not just to solving the problem of locomotion in VR, but to using those solutions creatively to enhance games and experiences. Game developers are finding them on their own, but it’s perhaps reassuring that Oculus has a group devoted to researching these things. Devs can grab those assets from the PC SDK section of the Oculus developer site.

Seth Colaner previously served as News Director at Tom's Hardware. He covered technology news, focusing on keyboards, virtual reality, and wearables.

-

robcolecycling Most effective "trick" I discovered to avoid nausea in games with free locomotion is to simply walk on the spot.

It doesn't need to be in time, or neatly done - just the act of walking provides a blunt stimulus to the vestibular system which is very effective to keep away sim sickness!