Fallen Enthusiast GPU Manufacturers

Introduction

The computer industry is competitive, and over the years numerous companies have been forced out of it. Today we're looking at some of the biggest graphics card vendors that fell along the way.

There are several brands we're deliberately avoiding. We aren't talking about OEMs, for example, nor will we discuss purveyors of 2D-only accelerators. Our focus is 3D, and we're including companies like Matrox that used to be prolific, but are no longer competitive in this space. You'll also find Rendition, S3, and the once-king, 3dfx.


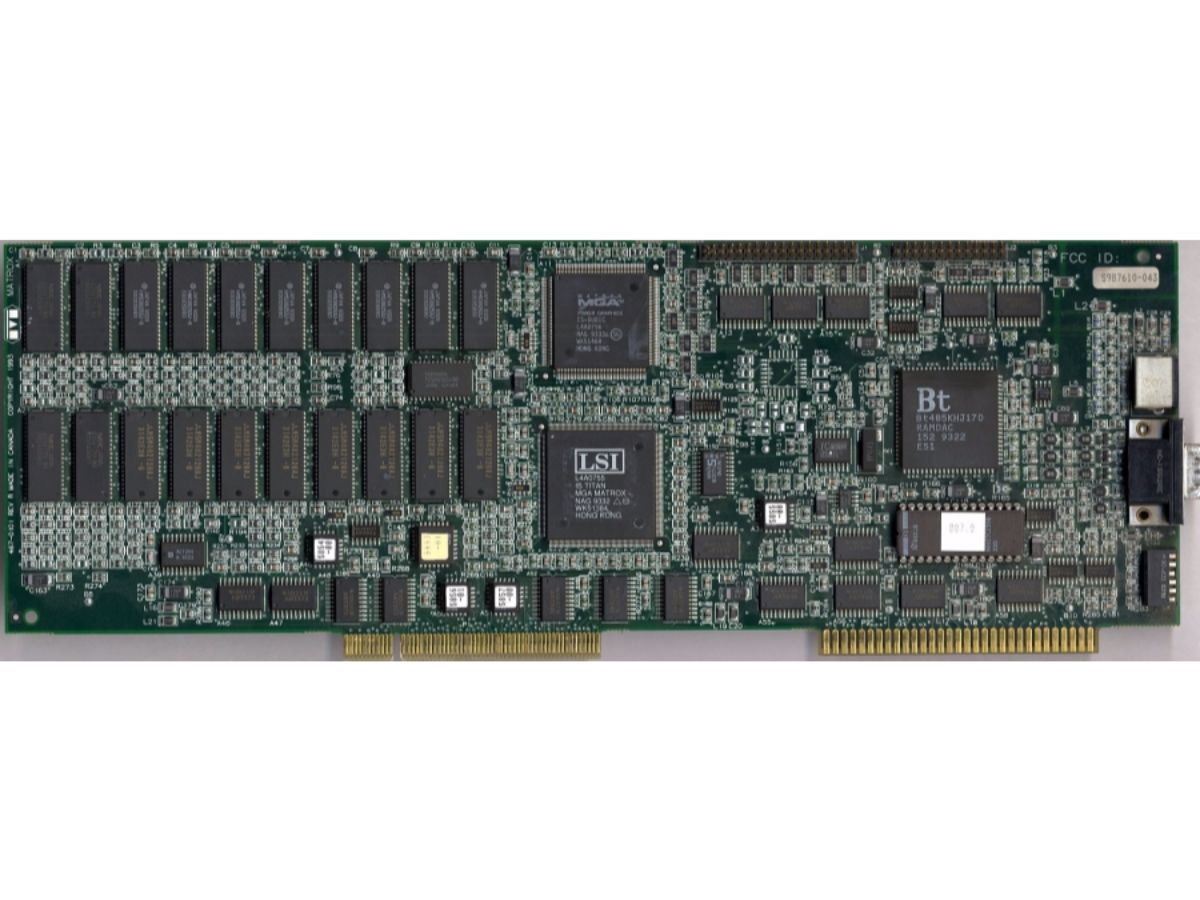

Matrox Impression

Matrox's first graphics cards were introduced in the late 1970s, but they were relatively simple adapters. The company's first product capable of handling 3D images was the Impression, which had to be accompanied by Matrox's Millennium 2D accelerator. Its overall performance was lackluster, as the Impression wasn't really designed for gaming. Rather, it was intended for CAD work. As a result, the Matrox Impression didn't really, well, make an impression on enthusiasts.

Image Credit: VGAMuseum.info

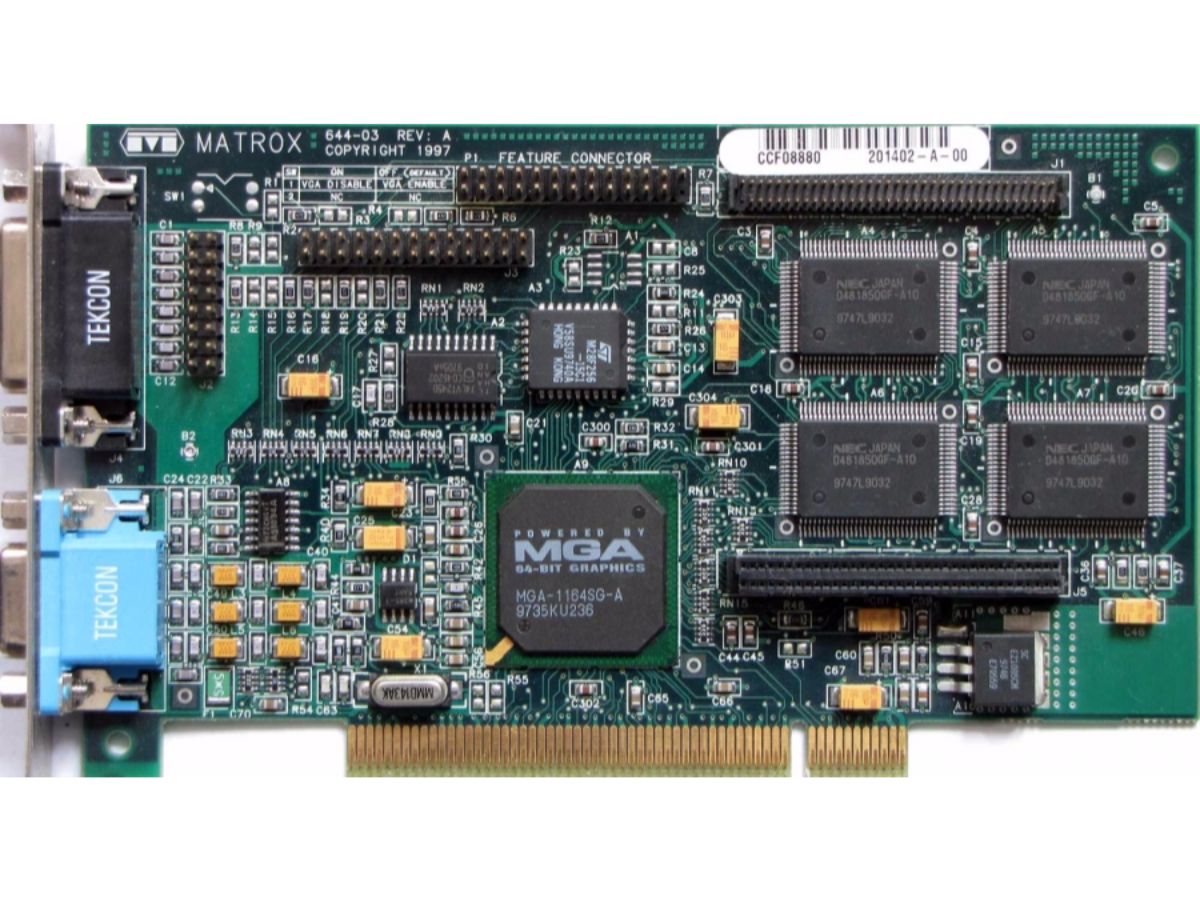

Matrox Mystique

Matrox introduced its Mystique in 1996, combining 2D and 3D acceleration on a single card. This helped pare back costs since you only had to buy one board instead of two. The Mystique was also a more competent gaming product thanks to features like texture mapping. The 64-bit graphics processor came equipped with a single pixel pipeline and one TMU.

It still lacked several key abilities, however, such as mipmapping, bilinear filtering, and transparency support. The Mystique shipped with anywhere from 2 MB to 8 MB of SGRAM. Memory on the 2 MB cards could be upgraded using add-on modules.

Despite improved performance, the Mystique's graphics quality was called into question. A later version, dubbed the Millennium II, included WRAM, which helped make the card faster than its predecessor.

Image Credit: VGAMuseum.info

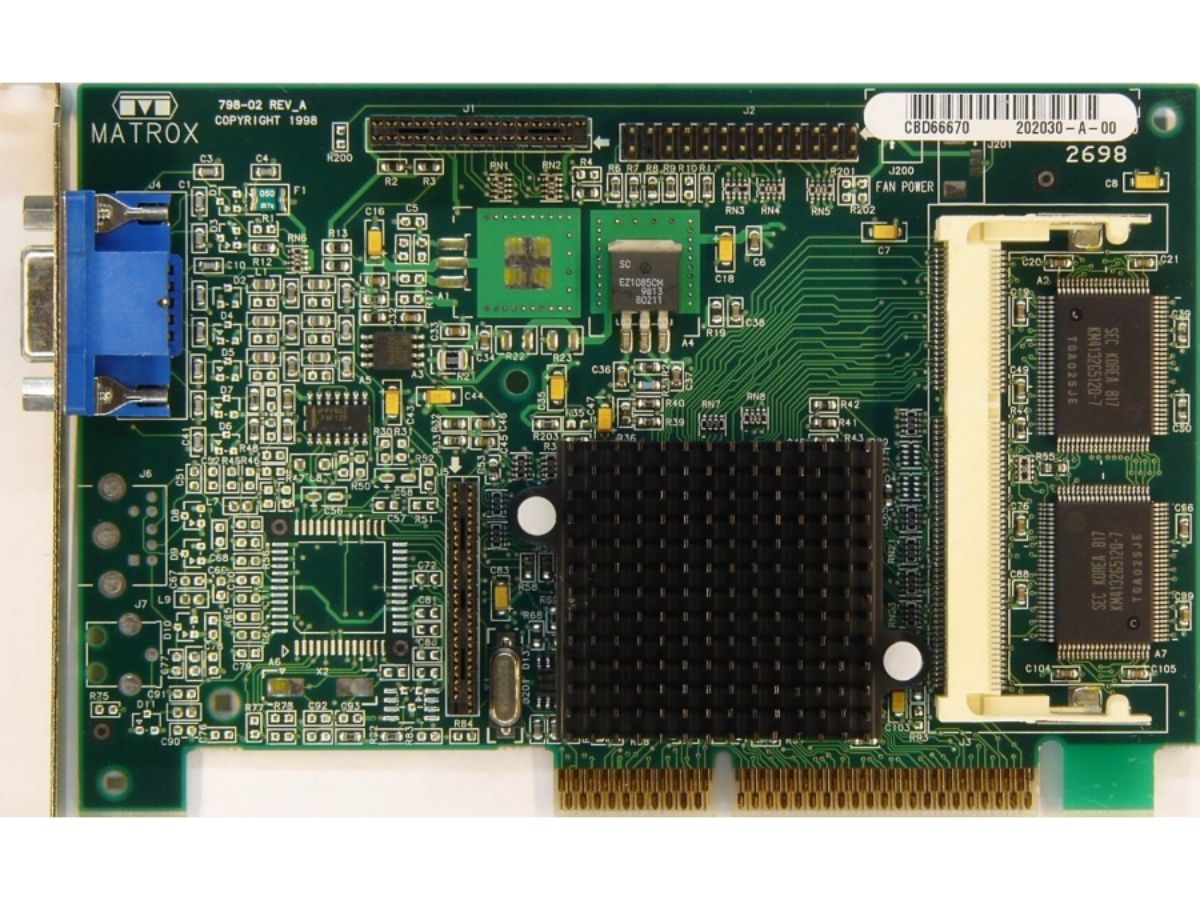

Matrox G200 & G250

Matrox's second 2D/3D graphics accelerator was far more successful; it offered several new features including full 32-bit color support, mipmapping, trilinear mipmap filtering, and anti-aliasing. The G200, released in 1998, used an internal 128-bit design with dual 64-bit unidirectional buses. The memory interface was 64 bits wide and accommodated between 8 and 16 MB of RAM. Although the G200 continued to employ a single pixel pipeline with just one TMU, Matrox's improvements allowed the card to far surpass its older Mystique. Because the company adopted 32-bit color at a time when the competition was mostly limited to 16-bit, the G200's image quality was often lauded for its lack of dithering artifacts.

The first Matrox G200 chips were built using 350 nm transistors, but Matrox later transitioned the design to a 250 nm process in 1999. This helped facilitate higher clock rates. Variants based on the 250 nm technology were sold as either G200A or G250. The G250 models offered the most aggressive frequencies, and thus the best performance.

Image Credit: VGAMuseum.info

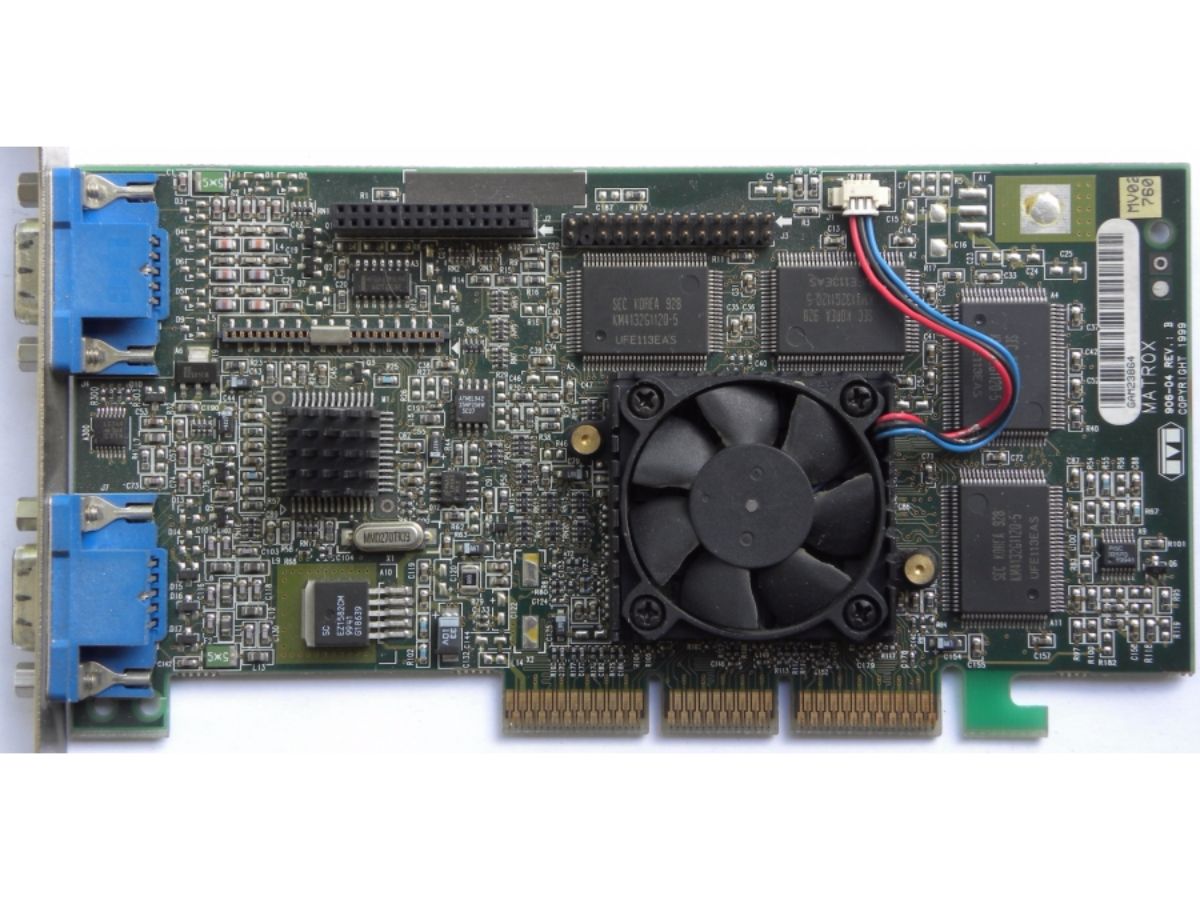

Matrox G400

The G400, released in 1999, was essentially a G200 graphics processor with twice as many resources. Instead of the 128-bit architecture complemented by two 64-bit buses, Matrox's G400 used a 256-bit design with two 128-bit unidirectional pathways. Matrox also added a second pixel pipeline with its own texture unit. The memory interface grew to 128 bits wide, and the maximum supported RAM doubled from 16 MB to 32 MB. Clock rates increased significantly, and the processor's feature set grew to be DirectX 6-compliant. This was also one of the earliest cards to enable simultaneous video output to two displays.

Unfortunately, the G400 suffered from rather serious driver issues upon its introduction, limiting performance in the first reviews. Over time, the company resolved its issues with a stable OpenGL ICD and improved DirectX compatibility.

Matrox manufactured the G400 on a 250 nm process, but later transitioned to 180 nm transistors. Those smaller dies went into G450 cards, which were less expensive than the original G400. Matrox didn't increase the G450's clock rate, however, so its impact was fairly limited. A later version known as G550 added even more gaming-oriented functionality.

Image Credit: VGAMuseum.info

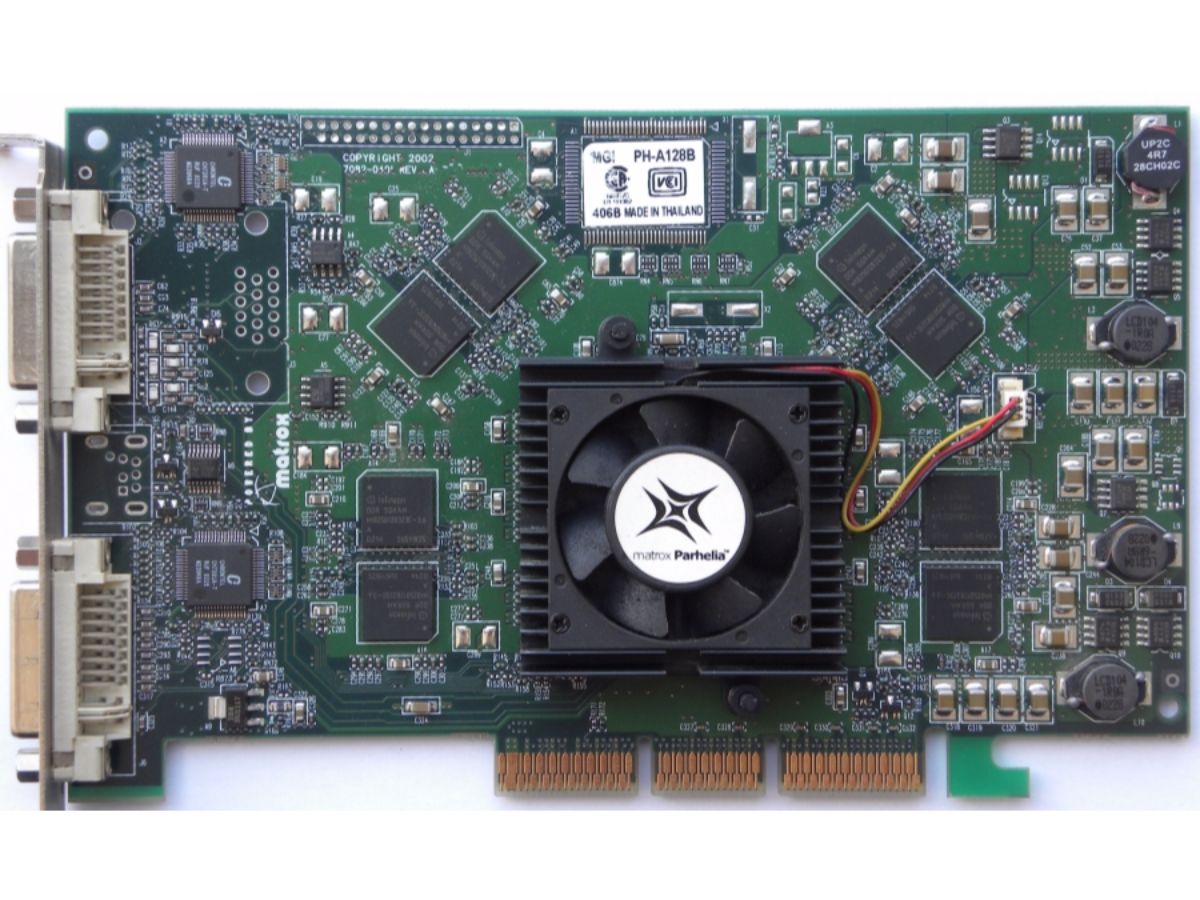

Matrox Parhelia-512

Matrox's final enthusiast-oriented graphics card was the Parhelia-512, released in 2002. Its design was rather ambitious, featuring four pixel pipelines, each with a vertex shader and four TMUs. The GPU was fed by a 256-bit ring bus connected to 128 MB or 256 MB of DDR memory. Matrox claimed that the ring bus topology allowed the 256-bit memory interface to operate as if it were 512 bits wide. The GPU typically ran at between 200 and 275 MHz, was fully compatible with DirectX 8.1, and had partial support for DirectX 9.

The Parhelia-512 far surpassed Matrox's older cards. However, it was slower than contemporary ATI and Nvidia GPUs. It was also rather expensive, and ultimately failed to gain much market share.

A less expensive version known as the Parhelia-LX was also produced, but it had half of the Parhelia-512's resources and wasn't particularly competitive. The full Parhelia-512 die moved to 90 nm manufacturing in 2007 and was re-released as a budget-oriented card with DDR2, but again, it didn't make much of an impact.

Following the Parhelia-512, Matrox exited the enthusiast graphics market. Though the company is still in business today, it focuses on more specialized applications.

Image Credit: VGAMuseum.info

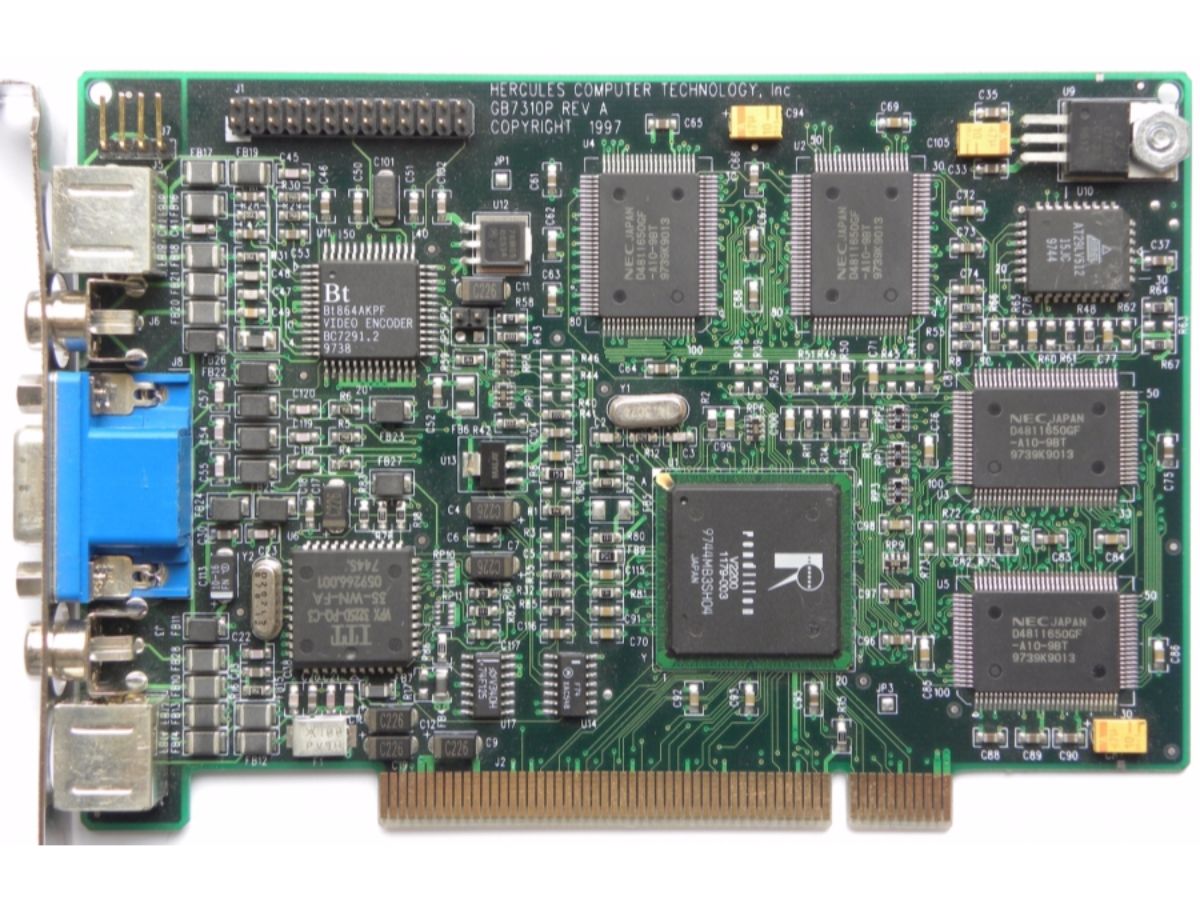

Rendition Vérité V1000

Rendition entered the market in 1996 with its Vérité V1000, which shipped with full 2D and 3D acceleration features. Back then, the Vérité was one of the fastest graphics cards you could find. It wasn't able to beat 3dfx's Voodoo, but it did offer more value, since the Voodoo card was limited to 3D gaming and required a companion 2D controller.

The graphics processor itself was based on a RISC design, and it connected to up to 4 MB of EDO RAM over a 64-bit bus. The card unfortunately ran into compatibility issues with several motherboards and its 2D performance trailed competing products. These factors prevented Rendition from taking a larger share of the market with its V1000.

Image Credit: VGAMuseum.info

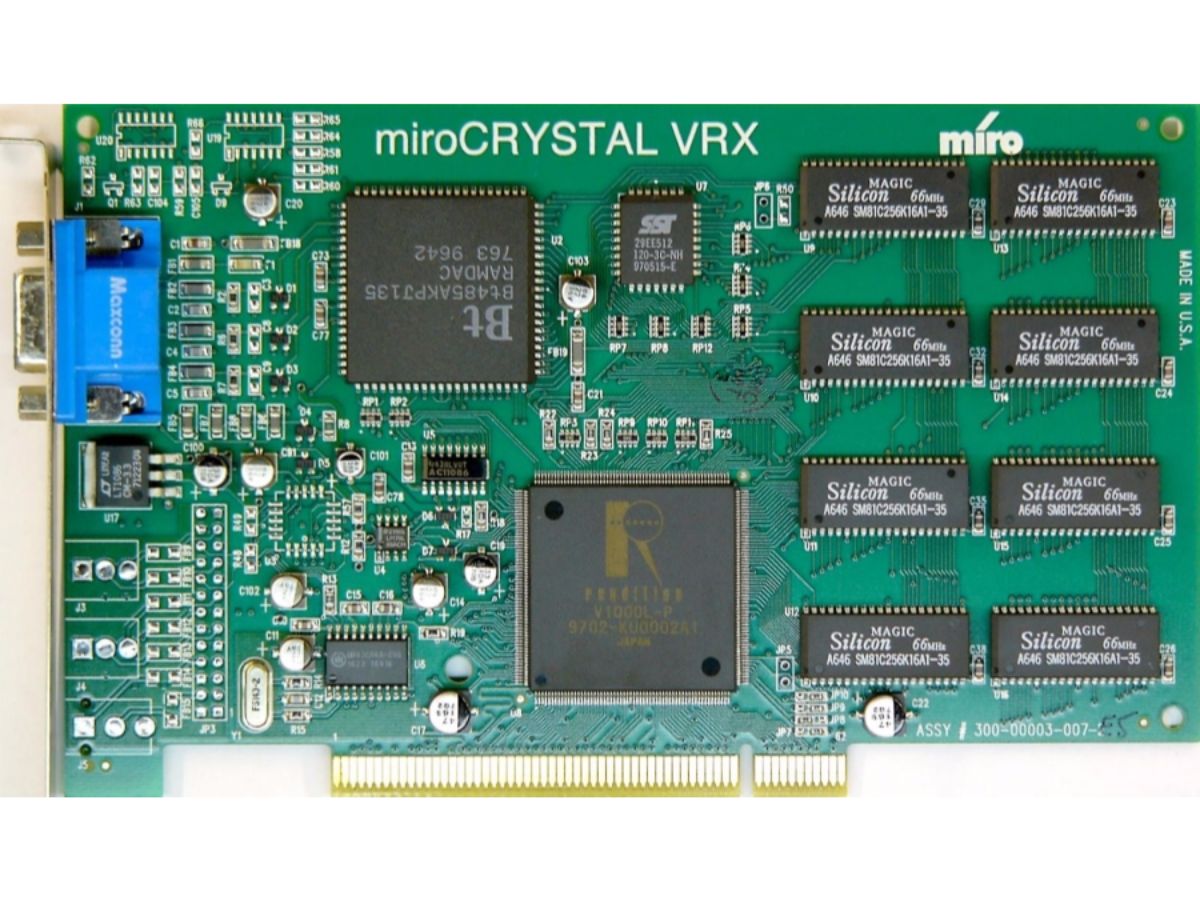

Rendition Vérité V2100 And V2200

Rendition's Vérité V2100 and V2200 were nearly identical to the V1000 at a hardware level. The company did improve the V1000's design, however, and nearly doubled its processor's fill rate. These cards operated at slightly higher clock rates and used faster memory, yielding modest performance improvements. The V2100 and V2200 differed from each other in two key areas: the latter offered higher frequencies and came with either 4 or 8 MB of SGRAM, while the former was slightly slower and limited to 4 MB of SGRAM.

After the release of its Vérité V2100 and V2200, Rendition attempted to design a new graphics processor. But the design was delayed several times and Rendition was eventually purchased by Micron.

Image Credit: VGAMuseum.info

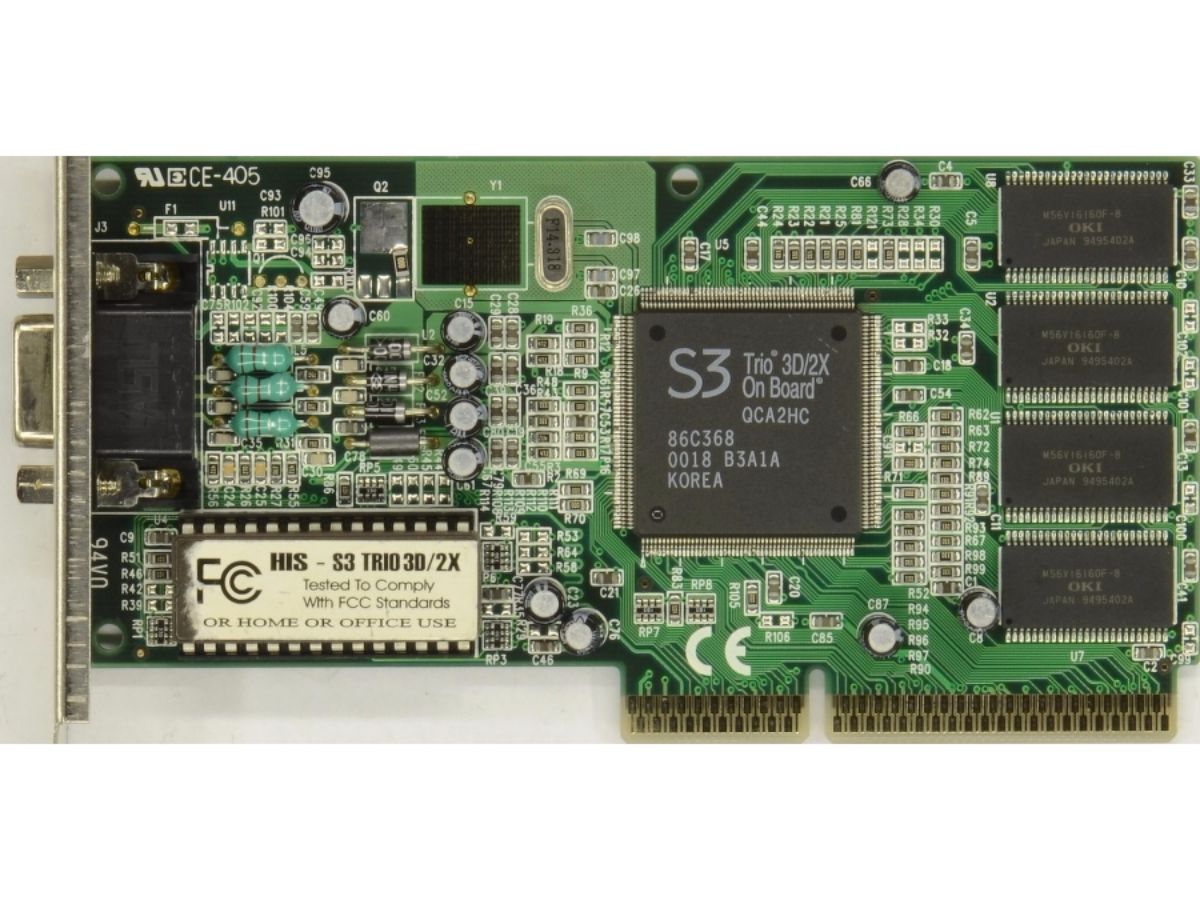

S3 Trio3D

S3 entered the graphics card market in the early 1990s. It produced 2D accelerators for several years, culminating in the Trio series. Although that family mostly consisted of 2D cards, its final incarnation was the Trio3D, a 3D-capable board with a 128-bit processing engine. It was complemented by up to 4 MB of RAM.

Image Credit: VGAMuseum.info

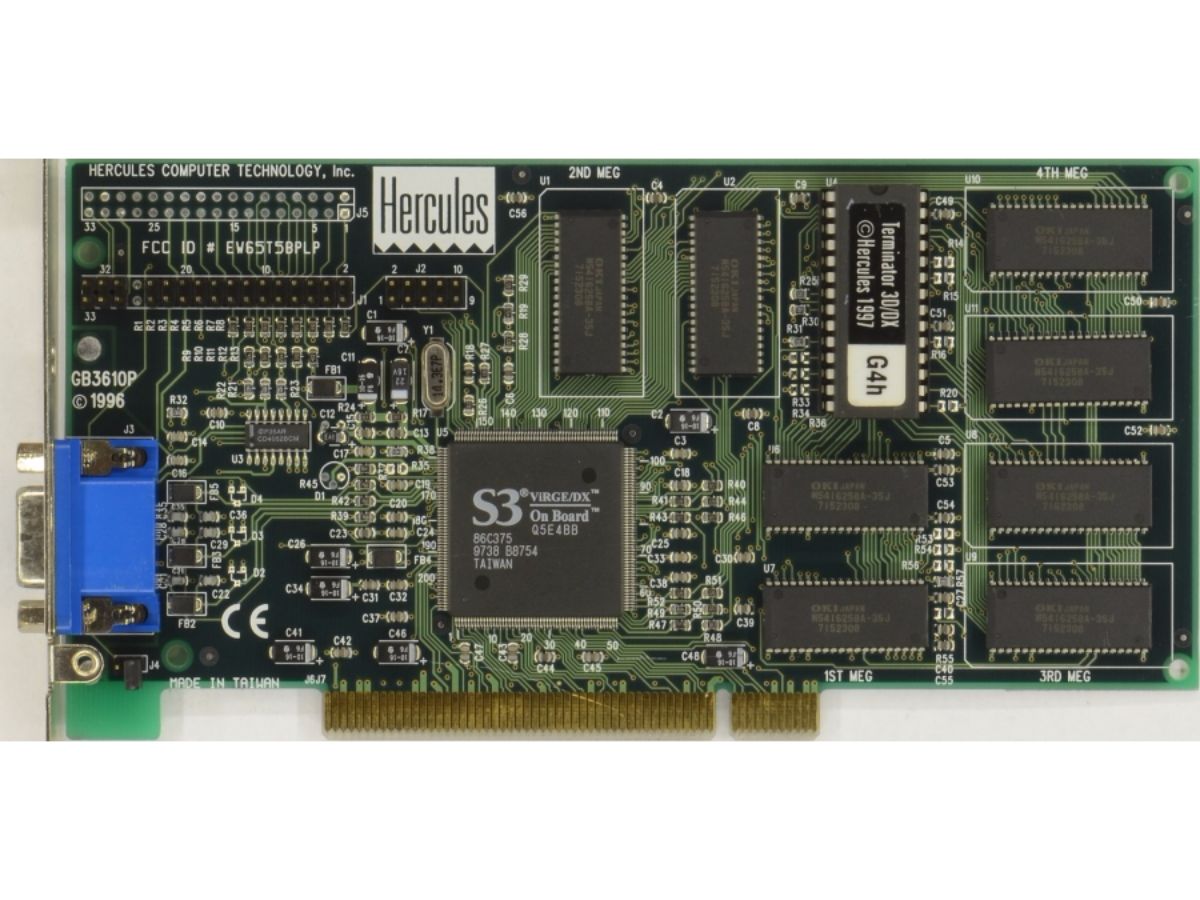

S3 ViRGE

S3 introduced its ViRGE line in 1995, a brand it continued to use until 1998. The ViRGE was capable of handling both 2D and 3D acceleration. Early models had access to either 2 or 4 MB of EDO RAM over a 64-bit bus, but later variants shipped with 8 MB. Unlike many graphics cards of this time, the ViRGE was a single-chip solution. This made it less expensive and easier to produce. But in order to fit all of the necessary hardware into one die, S3 devoted fewer resources to 3D processing. As a result, the ViRGE wasn't a very fast gaming card.

Although its 3D abilities were unimpressive, the ViRGE had outstanding 2D performance and it came to be known as one of the fastest 2D accelerators you could buy.

Image Credit: VGAMuseum.info

S3 Savage3D

The S3 Savage3D was introduced in 1998. It leveraged an entirely new design and possessed advanced features like trilinear filtering, a 24-bit Z-buffer, hardware-accelerated motion compensation and alpha blending for MPEG-2 video decoding, an integrated TV encoder, and texture compression. S3 implemented a dual-pipeline design. The pipelines were somewhat unusual in that one was used exclusively for rendering while the other was dedicated to texture processing. At most, the Savage3D could ship with 8 MB of RAM, which was less than competing cards.

Although the Savage3D was feature-rich, it was ultimately a failure. The Savage3D's performance lagged far behind its competitors, and S3 faced serious yield challenges that limited sales.

Image Credit: VGAMuseum.info

apache_lives Voodoo II section for 3Dfx doesn't seem right - it's talking about the Voodoo5 with a Voodoo5 picture...

IInuyasha74 18732147 said: Kyro is missing!

I left Kyro and all PowerVR based graphics solutions out, because PowerVR is not a fallen GPU manufacturer. Technically, a company could still use PowerVR graphics on a GPU add-on card or as an integrated graphics solution. Intel did this a few years ago with its Atom-based systems. PowerVR also has a long list of graphics processors it has developed over the years, more than all of the companies listed above combined. More than enough to warrant a separate piece of its own when time permits.

clonazepam I remember being so hyped about the Voodoo 6000 and its external power supply. Their marketing back then was fantastic. The box art was pretty amazing.

Realist9 I still remember the 3dfx voodoo2 and sli. When I finally got Jedi Knight Dark Forces II into "3d", it was awesome. Never will forget that.

clonazepam 18732836 said: I still remember the 3dfx voodoo2 and sli. When I finally got Jedi Knight Dark Forces II into "3d", it was awesome. Never will forget that.

A memory that really sticks out for me is when glide was out, and games had to be patched to opengl. We're talking about hundreds of MBs over dial-up.

I ended up selling my two Voodoo2s to an enthusiast around '99-00.

DoDidDont 3dLabs?? The company was a leader for years in the professional graphics market, and considered the "choice" brand for professionals. They brought many innovations that still exist today under different guises. I remember Nvidia buying up all the Realizm 800's after the company was sold and broken up by Creative. Can't believe their GPU support site is still up and running... Nvidia and ATi had nothing that could come anywhere near the performance of the Realizm 800 the year it was launched.

Fixadent 3Dfx and the Voodoo 2 are still legends in the world of GPUs.

I wish that 3Dfx still existed and competed with AMD and Nvidia.

Fixadent Back in the late 1990's, I was awed at the graphics of Quake 2 running on a voodoo 2.

LORD_ORION I had a Diamond Voodoo 1, Mech Warrior 2 3dfx edition was so damn good.

I also had a Voodoo 5.... Heretic II was so heli-good with AA.

The cards were expensive and quickly fell behind in performance though... so poor investments. :(

Anyone remember the 3dfx commercials? ;)

Like the one when they are making advanced medical equipment and then... everyone stop, we're going to use this tech to make video game cards instead.