Testing EVGA's GeForce GTX 1080 FTW2 With New iCX Cooler

Does a new heat sink translate to new success? EVGA complements its existing technology with some on-board sensors and gives enthusiasts access to asynchronous fan control.

EVGA GeForce GTX 1080 FTW2 With iCX

The iCX cooler is not completely new. Rather, it is comparable to EVGA’s existing ACX thermal solution, found on the FTW and SC models. But there’s one innovation we’re sure no other manufacturer shares: built-in temperature sensors with a matching microcontroller.

Unfortunately, we only received the iCX-equipped card on Wednesday afternoon, which limited the time we could spend with it. Do we dig into the tech or run loads of gaming benchmarks? We went with the former, and are glad we did.

EVGA aimed its latest at enthusiasts who want more control over individual temperatures. Thus, the company’s focus is more on the technology used to enable this, along with its Precision XOC software, and less on the heat sink itself. EVGA also implemented some incremental performance improvements, such as more (and thicker) thermal pads. But we aren’t expecting any miracles compared to the ACX design.
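To illustrate what asynchronous fan control can mean in practice, here is a minimal sketch in which each of two fans follows its own temperature curve, fed by a different sensor group. The two-fan split (fan 1 following the GPU, fan 2 following the hottest of the remaining board sensors), the sensor names, and the curve points are all assumptions for illustration, not EVGA’s firmware logic or Precision XOC behavior.

```python
from typing import Mapping, Sequence, Tuple

# Hypothetical fan curves: (temperature in °C, fan duty in %), ascending by temperature.
Curve = Sequence[Tuple[float, float]]

GPU_CURVE: Curve = [(30.0, 20.0), (60.0, 40.0), (75.0, 70.0), (85.0, 100.0)]
BOARD_CURVE: Curve = [(30.0, 20.0), (70.0, 45.0), (90.0, 80.0), (100.0, 100.0)]


def fan_duty(temp_c: float, curve: Curve) -> float:
    """Linearly interpolate fan duty from a curve, clamping below the first and above the last point."""
    if temp_c <= curve[0][0]:
        return curve[0][1]
    for (t0, d0), (t1, d1) in zip(curve, curve[1:]):
        if temp_c <= t1:
            return d0 + (d1 - d0) * (temp_c - t0) / (t1 - t0)
    return curve[-1][1]


def fan_duties(sensors: Mapping[str, float]) -> Tuple[float, float]:
    """Return (fan 1, fan 2) duties: fan 1 tracks "gpu", fan 2 the hottest other sensor.

    Requires a "gpu" entry and at least one additional (hypothetical) board sensor.
    """
    gpu_duty = fan_duty(sensors["gpu"], GPU_CURVE)
    board_temp = max(v for k, v in sensors.items() if k != "gpu")
    return gpu_duty, fan_duty(board_temp, BOARD_CURVE)


print(fan_duties({"gpu": 60.0, "mem1": 70.0, "pwm1": 90.0}))  # (40.0, 80.0)
```

With a cool GPU but a hot voltage regulator, the two fans end up at different speeds, which is the point of decoupling them.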

Meet the GeForce GTX 1080 FTW2 with iCX

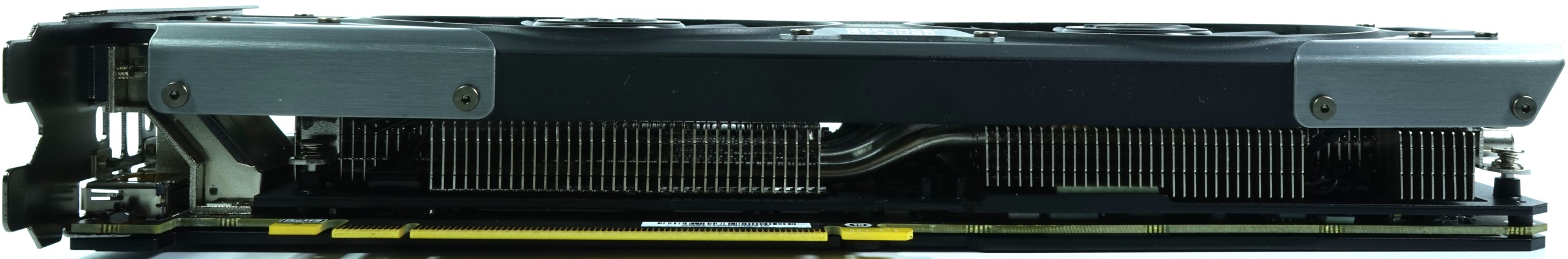

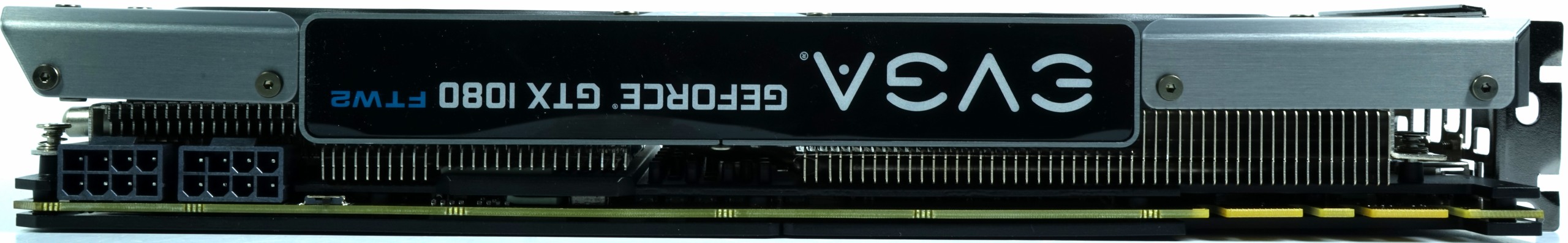

The new card weighs in at a hefty 37 ounces (1,050g), yet it’s still lighter than some competing 1080s. Its dimensions of 10.95 inches long, 4.92 inches tall, and 1.4 inches wide are about average for a two-slot card.

EVGA’s fan shroud consists of anthracite-colored plastic with aluminum highlights, illuminated by LEDs. A look at the bottom shows that EVGA again opts for vertically-oriented fins.

The top of the card is dominated by two eight-pin power connectors, a large panel with various LED indicators, and a back-lit logo.

At the end of the card, we see four 6mm heat pipes and two larger 8mm heat pipes. There is another, shorter 8mm heat pipe toward the front. The capillary action of these nickel-plated composite pipes works well in any orientation.


EVGA implemented a familiar array of display outputs, including one DVI-I, one HDMI, and three DisplayPort connectors.

Layout and Features

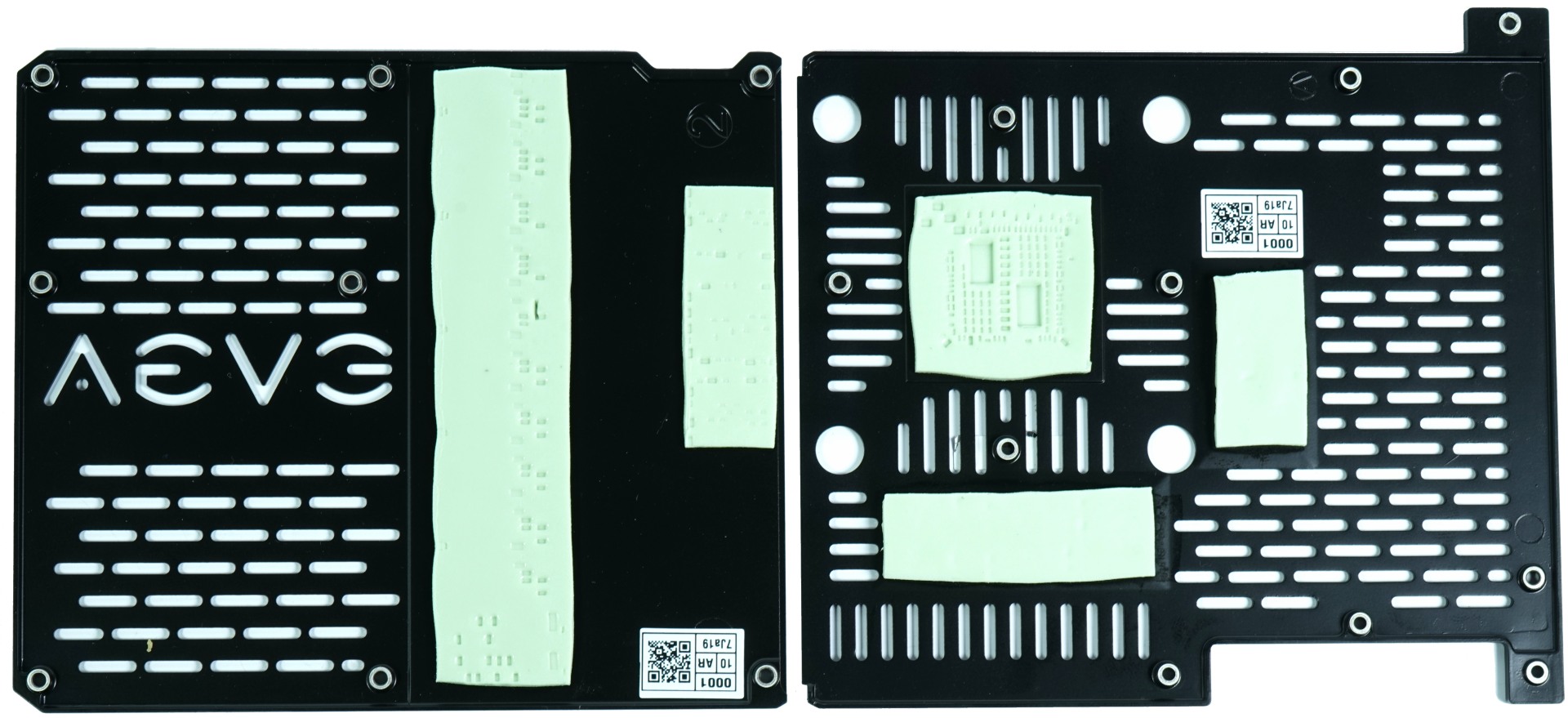

Initially, we didn’t see any differences between this card’s PCB and the ACX cooler-equipped version, but the design does diverge in places. To start, the newer model sports a two-part backplate with plenty of heat-conducting pads. This solves issues we uncovered in our Nvidia GeForce GTX 1080 Graphics Card Roundup, helping the plate actively contribute to the card’s cooling performance.

The GDDR5X modules come from Micron and are sold together with the GP104 processor to Nvidia’s board partners. Eight of these memory chips, operating at 1,251MHz, are connected to a 256-bit aggregate bus, yielding a theoretical maximum bandwidth of 320GB/s.
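The 320GB/s figure follows from simple arithmetic. This sketch assumes that the 1,251MHz clock is the one reported by tools such as GPU-Z and that GDDR5X moves eight bits per pin per reported clock, which gives the roughly 10Gbps per pin that Nvidia quotes for the GTX 1080. Eight 32-bit chips make up the 256-bit bus.

```python
def gddr5x_bandwidth_gbs(clock_mhz: float, bus_width_bits: int, transfers_per_clock: int = 8) -> float:
    """Theoretical peak bandwidth in GB/s (10^9 bytes per second)."""
    data_rate_mtps = clock_mhz * transfers_per_clock  # mega-transfers per second, per pin
    return data_rate_mtps * bus_width_bits / 8 / 1000  # bits -> bytes, then MB/s -> GB/s


CHIPS = 8
BITS_PER_CHIP = 32  # 8 x 32 bits = 256-bit aggregate bus
print(round(gddr5x_bandwidth_gbs(1251, CHIPS * BITS_PER_CHIP), 1))  # 320.3
```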

EVGA again employed a Texas Instruments INA3221 three-channel high-side current and bus voltage monitor. For additional protection, should something go seriously wrong, it also solders a fuse onto the PCB.

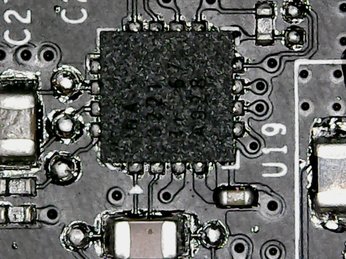

Power to the memory is provided by two phases controlled by an 81278 that doesn’t come from ON Semiconductor and is in a different package than we’re used to. This dual-phase synchronous buck controller facilitates phase interleaving and includes two low-dropout regulators. It also integrates gate drivers and the PWM VID interface.
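Why interleaving helps can be shown with an idealized model: two phases switched 180° apart partially cancel each other’s inductor ripple. This sketch assumes equal inductors, perfectly triangular ripple, and a duty cycle of 1.35V/12V, taking 1.35V as the memory rail and 12V as the input; those voltages are assumptions, not values measured on this card.

```python
def phase_ripple(phase: float, duty: float) -> float:
    """Idealized, normalized ripple of one buck phase (peak-to-peak = 1).

    phase is the position within one switching period, wrapped into [0, 1).
    """
    phase %= 1.0
    if phase < duty:  # high-side switch on: inductor current ramps up
        return -0.5 + phase / duty
    return 0.5 - (phase - duty) / (1.0 - duty)  # switch off: current ramps down


def interleaved_ripple(duty: float, phases: int = 2, samples: int = 8000) -> float:
    """Peak-to-peak ripple of the summed phase currents, relative to one phase's ripple."""
    total = [
        sum(phase_ripple(i / samples + k / phases, duty) for k in range(phases))
        for i in range(samples)
    ]
    return max(total) - min(total)


duty = 1.35 / 12.0
print(round(interleaved_ripple(duty), 3))           # simulated two-phase ripple
print(round((1 - 2 * duty) / (1 - duty), 3))        # closed form for two phases, duty < 0.5
```

At such a low duty cycle the cancellation is modest (roughly 13% less ripple), but the combined ripple also runs at twice the switching frequency, which eases output filtering.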

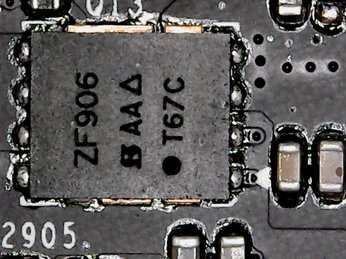

A Siliconix ZF906 dual-channel MOSFET, which unifies the high- and low-side MOSFETs, is used instead of ON Semiconductor’s NTMFD4C85N.

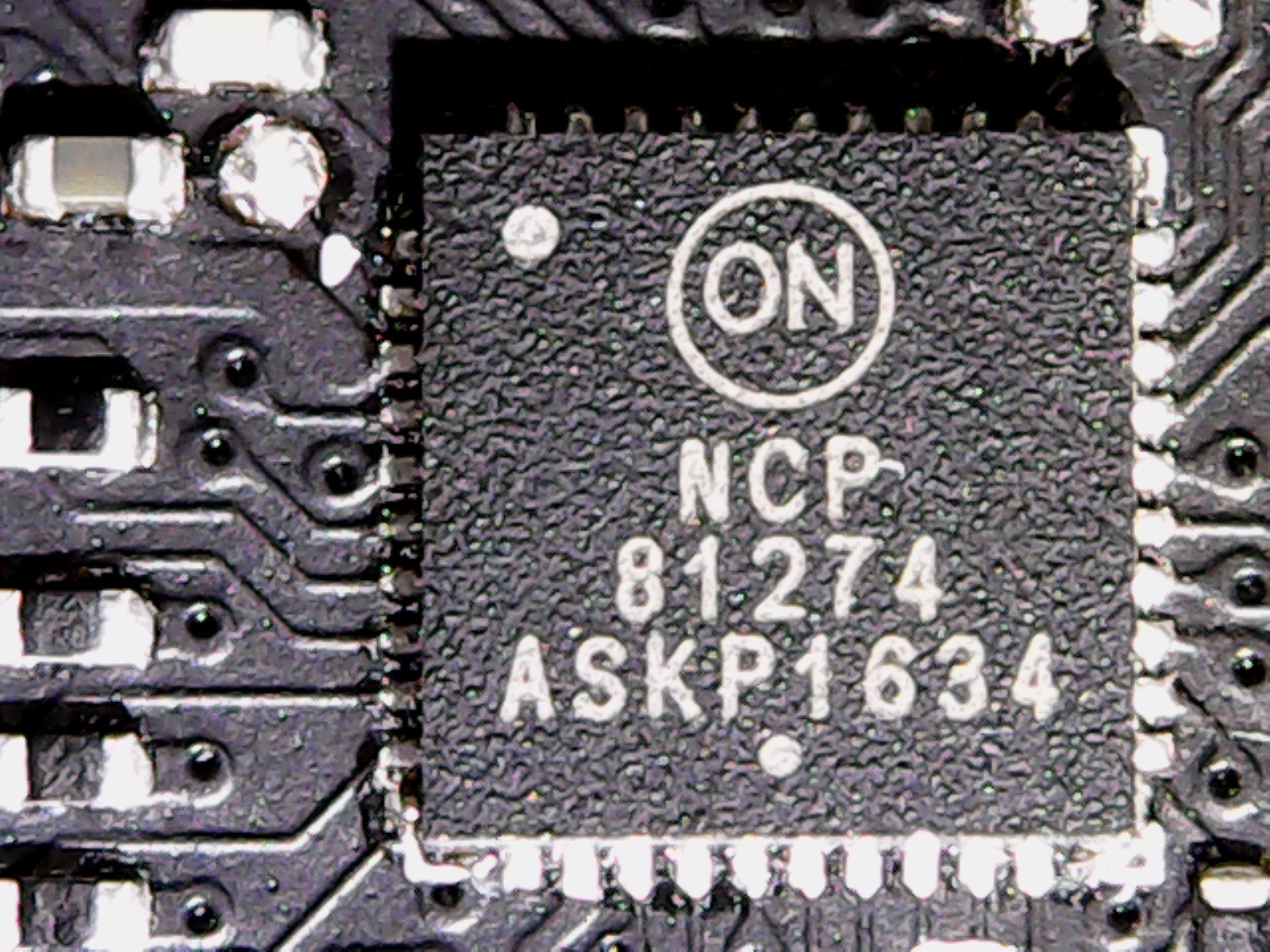

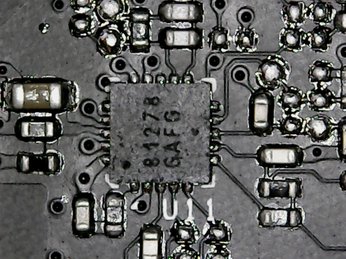

The 5+2-phase implementation we covered when we reviewed EVGA’s GeForce GTX 1080 FTW Gaming ACX 3.0 returns here, using an ON Semiconductor NCP81274 PWM controller that offers many more control options than the µP9511P on Nvidia’s reference design.

EVGA somewhat deceptively claimed the GPU gets 10 power phases, but there are really only five, each of which is split into two separate converter circuits. (This isn’t a new trick by any means.) It does help spread the current, and thus the heat, over a larger cooling area, though.
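The benefit of splitting each phase can be estimated with simple conduction-loss arithmetic. This is a minimal sketch assuming perfectly even current sharing and identical resistance per converter circuit, and ignoring switching losses; the 150A GPU current and 2mΩ per-circuit resistance are hypothetical round numbers, not measurements of this card.

```python
def split_current(total_amps: float, circuits: int, r_ohms: float) -> tuple[float, float, float]:
    """Return (amps per circuit, I^2*R loss per circuit in W, total conduction loss in W).

    Assumes the total current is shared perfectly evenly across identical circuits.
    """
    per_circuit = total_amps / circuits
    loss_each = per_circuit ** 2 * r_ohms
    return per_circuit, loss_each, loss_each * circuits


for n in (5, 10):
    amps, each, total = split_current(150.0, n, 0.002)
    print(f"{n:2d} circuits: {amps:5.1f} A, {each:4.2f} W each, {total:4.2f} W total")
```

Under these assumptions, doubling the circuits cuts the loss in each one to a quarter and halves the total, while spreading it over twice as many components. Equivalently, n identical paths in parallel present 1/n of a single path’s resistance.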

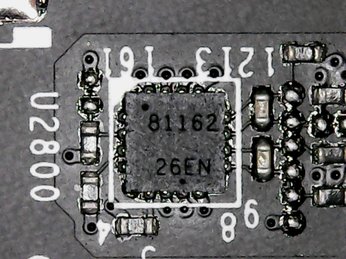

Furthermore, connecting each phase’s two converter circuits in parallel reduces the internal resistance. This is achieved with an NCP81162 current-balancing phase doubler, which also contains the gate and power drivers.

For voltage regulation, one highly integrated DG44E (instead of an NCP81382) is used per converter circuit, combining the high-side and low-side MOSFETs, as well as the Schottky diode, in a single chip.

Thanks to the doubling of converter circuits, the coils are significantly smaller. This is quite an advantage: because each circuit carries less current, thinner conductors can be used while retaining the same inductance.
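The link between current and coil size can be put into numbers through the energy an inductor has to store, E = ½·L·I². As a rough rule of thumb, the required core size grows with this stored energy, so halving the peak current per coil at the same inductance cuts it to a quarter. The 0.22µH inductance and the 150A total split over five or ten circuits are illustrative assumptions, and ripple on top of the average current is ignored.

```python
def inductor_energy_uj(inductance_uh: float, peak_amps: float) -> float:
    """Energy stored in an inductor, E = 1/2 * L * I^2, in microjoules (µH * A^2 = µJ)."""
    return 0.5 * inductance_uh * peak_amps ** 2


five = inductor_energy_uj(0.22, 150.0 / 5)   # hypothetical: 150 A over 5 circuits
ten = inductor_energy_uj(0.22, 150.0 / 10)   # hypothetical: 150 A over 10 circuits
print(round(five, 1), round(ten, 2), round(ten / five, 2))
```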


Igor Wallossek wrote a wide variety of hardware articles for Tom's Hardware, with a strong focus on technical analysis and in-depth reviews. His contributions have spanned a broad spectrum of PC components, including GPUs, CPUs, workstations, and PC builds. His insightful articles provide readers with detailed knowledge to make informed decisions in the ever-evolving tech landscape.


FormatC: Very similar to the ACX after the thermal mod and BIOS flash. And as I wrote: generally a little bit better :)

No idea where all the previous posts went. Horrible tech...

redgarl: My first GTX 1080 FTW exploded. These cards were having huge issues due to the cooler.

FormatC: I don't think any card can explode if you use it in a normal case with a good PSU. Normally ;)

The main problem is always the cooler philosophy. You can see three main solutions on the market:

Most common cooler types:

(1) Sandwich (like EVGA or MSI), with a large cooling plate/frame between the PCB and cooler and tons of thick thermal pads

(2) Cooler only, with a separate VRM cooler below the main cooler; memory mostly cooled via the heatsink

(3) An integrated, real heatsink for VRM/coils and a larger GPU heatsink/frame for direct memory cooling (Gigabyte, Palit, Zotac, Galax etc.)

I've been investigating these things for years and have visited a lot of factories in Asia and the HQs of the bigger manufacturers. I'm in contact with a lot of R&D people at these companies, and we have exchanged and discussed my data over a long time. I remember sitting with the PM and R&D team of Brand G in Taipei in 2013 to discuss the first Type 3 coolers, and it was good to see how R&D followed my suggestions:

These were the first coolers with integrated heatsinks for VRM and memory. Later, the concept was improved to include the coils. At the beginning, the problem was the stability of the heavy cards, so they moved to backplates. I was also in discussion with a few companies about using these backplates not only for marketing or stabilization but also for cooling. One of the first cards with thermal pads between PCB and backplate was Sapphire's R9 380X Nitro. Other companies copied this, and the cards with the most thermal pads are now the FTW with thermal mod and the FTW2. I reported the issues to EVGA in early August 2016, and we had to wait over three months to see the suggested solution on the market.

One of the problems stems from the split development/production process. The PCBs are mostly designed and produced by, or together with, a few big, specialized OEMs. But nobody runs a simulation first to detect possible (design-dependent) thermal hotspots. The cooler industry also works totally separately, and the data exchange is simply poor. Mostly they use (or get) only the main info about the PCB dimensions, holes, and component positions (especially heights), and nothing else. This may work if you're lucky, but the chance is 50:50. Other things, like strict cost-downs and useless discussions about a few washers or screws (yes, this is not a joke!), produce even more potential issues. Companies like EVGA are totally fabless, and it is a very hard job to keep all these OEMs and third-party vendors on the same page. In particular, the communication between the different OEMs is mostly bad or nonexistent.

Another problem is the equipment in the R&D departments and how it's used. When I see pseudo thermal cameras (in truth mostly cheap pyrometers with a fake graphical output, not real bolometers) and how the guys use them (wrong angle and distance, wrong or no emissivity factor, no calibrated paint), I'm not surprised by what happens every day. Heat is a real bitch, and density is her terrible sister. :D

For everyone interested in the development and production of VGA cards:

Over the years, I've collected a lot of material, pictures, and videos from inside the factories, and I'm now writing, step by step, an article about this industry, its projects, prototypes, and biggest fails. But I have to wait for all the permissions, because a few things are (or were) still secret, or I wasn't allowed to use them publicly. But I think it's worth publishing at the right moment :)


FormatC: The MX-2 is totally outdated, and its performance is really poor in direct comparison with current mid-class products. Its long-term stability (on a VGA card) is also nothing to rely on. The problem with using overly thick thermal grease to bridge bigger gaps (instead of pads) is drying out: the paste gets thinner and loses contact with the component or heatsink. The point of such products is a very thin film between heatsink and heatspreader/die, combined with higher pressure.

Over the weekend, I tested the OC stability of the memory modules. With the original ACX 3.0 or the iCX, I get no more than 100-150 MHz stable (tested with heavy scientific workloads). With a water block, I was able to OC the same modules by up to 300 MHz more with no errors, and with a lot of headroom. I'm not talking about gaming; some games run with much higher memory clocks, but that isn't really stable. It only seems so, and that's nothing to work with. :)

FormatC: Gelid GC Extreme or Thermal Grizzly Kryonaut. A lot better, and not bad for long-term projects. The Gelid must be warmed up a little bit; it's more comfortable without experience. :)

FormatC: Too dangerous without experience, and not that much better. And it's nearly impossible to clean it off later without issues.

Martell1977: I've used Arctic MX-4 on several CPUs and GPUs and in laptops. In fact, I just used some last night in a laptop that was hitting 100°C under load. I put some of the MX-4 on the CPU and on both sides of the thermal pad that was on the GPU, and the system runs at 58°C under load now.

FormatC: The MX-2 is entry level and the MX-4 mid-class, but both were developed years ago. The best bang for the buck is the Gelid GC Extreme (a lot of overclockers like it), but the handling is not so easy. The Kryonaut is a fresh high-performance product and easier to use (more liquid). It has only a short burn-in time, and the performance is perfect from the beginning. The older Arctic products are simply outdated, but good enough for cheaper CPUs. Not for VGA cards.

I tested it a few weeks ago, also here (how to improve VGA cooling):