Rome in Detail: A Closer Look at AMD's 64-Core 7nm EPYC CPUs

AMD continues to step up its CPU core game. Today the company announced its new EPYC server line-up, codenamed Rome, that scales up to 64 cores and 128 threads. This opens the possibility for AMD's enterprise customers to equip a single dual-socket motherboard with up to 128 cores and 256 threads.

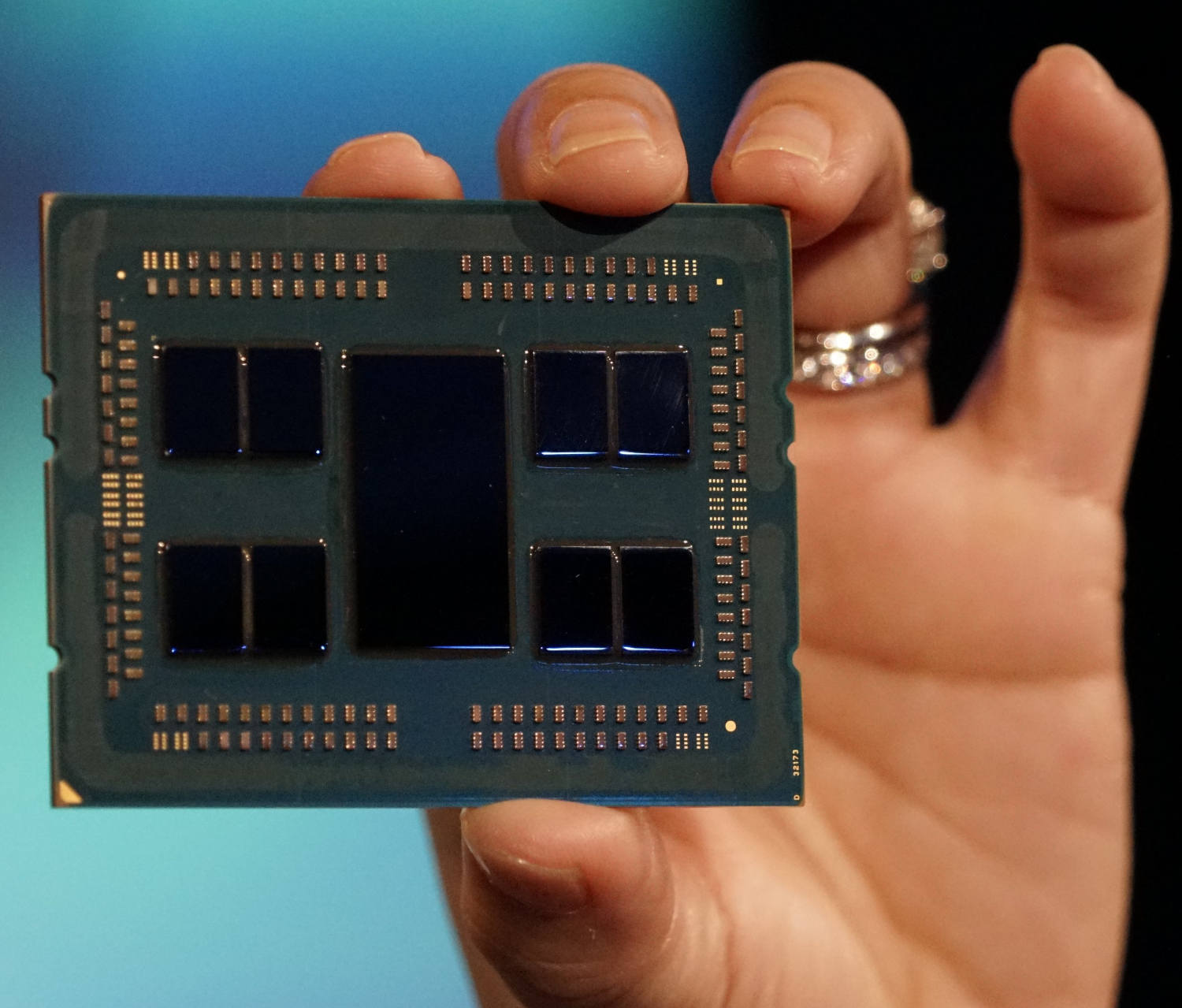

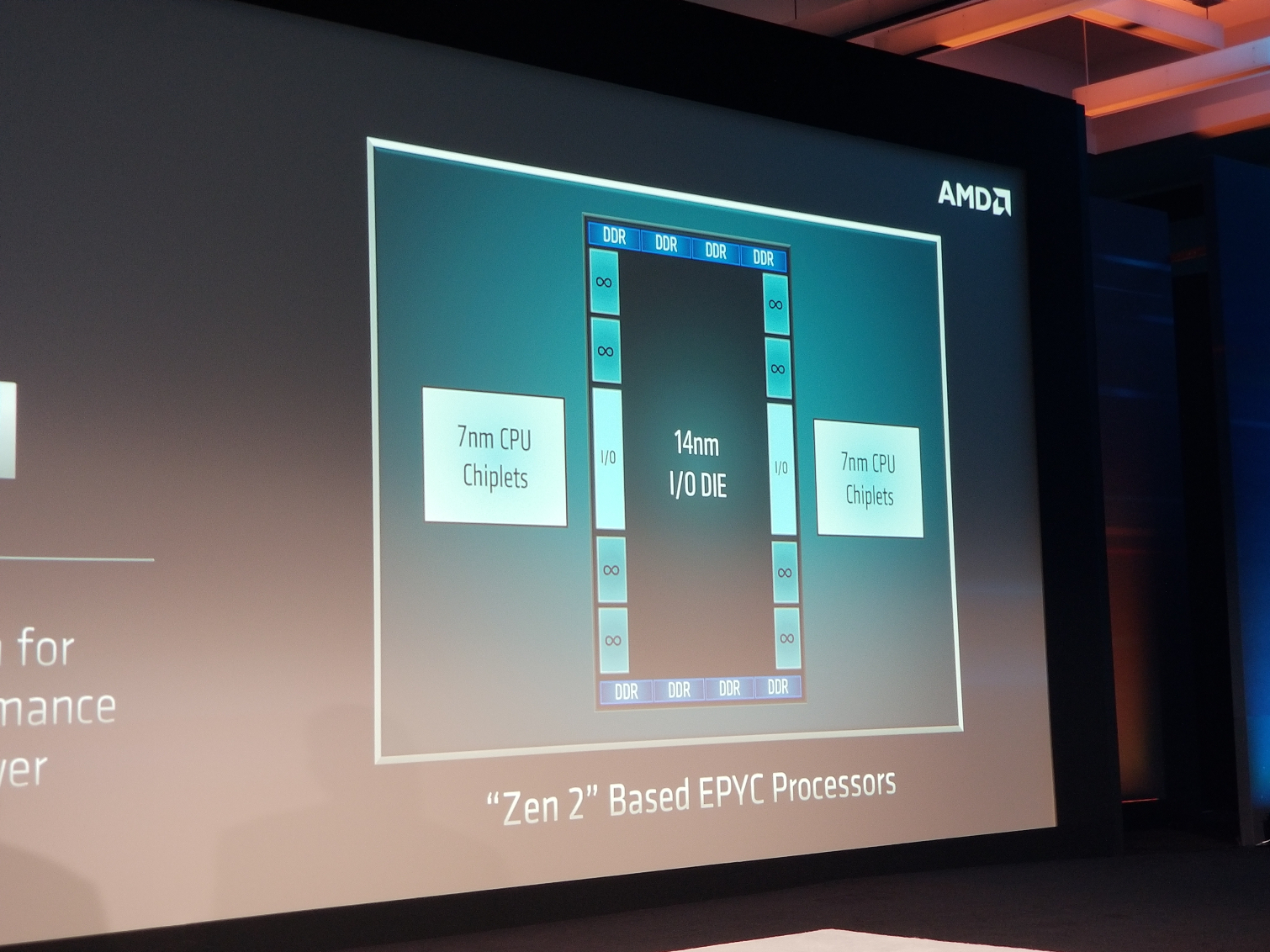

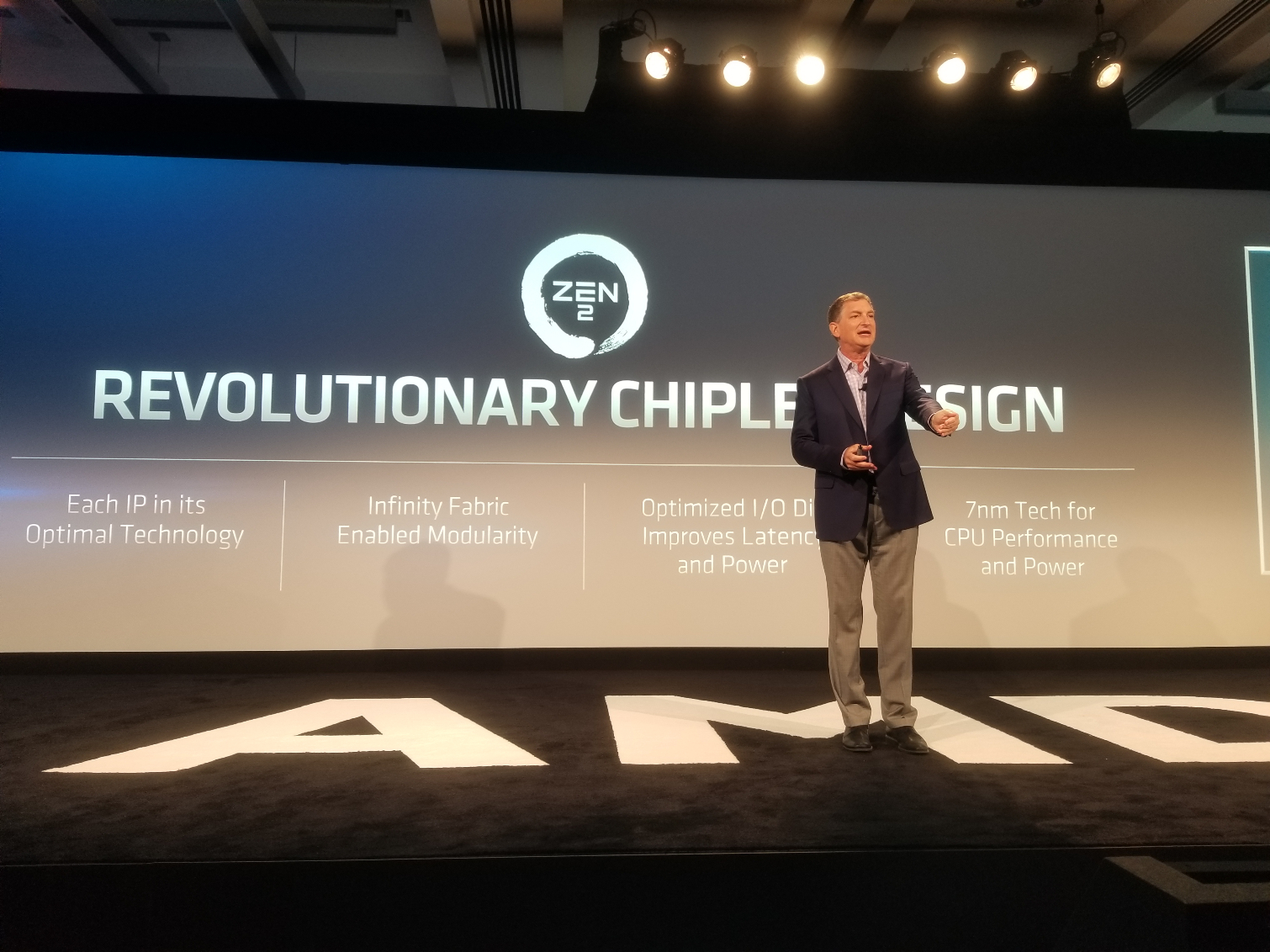

Shortly after unveiling its 7nm Radeon Instinct MI60 and MI50 accelerators at its Next Horizon event, AMD revealed the company's forthcoming EPYC 'Rome' processors. The new processors are built around the chipmaker's Zen 2 CPU microarchitecture. They feature a new "chiplet" design in which a 14nm I/O die sits at the center of the package, flanked by four 7nm CPU chiplets on each side, for eight chiplets in total. The chiplets connect to the I/O die via AMD's second-generation Infinity Fabric. Each chiplet carries up to eight cores and 16 threads.
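The headline core counts follow directly from the chiplet layout. The short Python sketch below works through the arithmetic; the constant and function names are ours for illustration, and the figures assume a fully enabled part with all chiplets populated.

```python
# Figures taken from AMD's announcement; names are illustrative only.
CHIPLETS_PER_SOCKET = 8   # four chiplets on each side of the I/O die
CORES_PER_CHIPLET = 8     # "up to eight cores" per chiplet
THREADS_PER_CORE = 2      # 16 threads per eight-core chiplet


def rome_totals(sockets: int = 1) -> tuple[int, int]:
    """Return (cores, threads) for a system with the given socket count."""
    cores = sockets * CHIPLETS_PER_SOCKET * CORES_PER_CHIPLET
    threads = cores * THREADS_PER_CORE
    return cores, threads


if __name__ == "__main__":
    for sockets in (1, 2):
        cores, threads = rome_totals(sockets)
        print(f"{sockets} socket(s): {cores} cores, {threads} threads")
```

A single socket works out to 64 cores and 128 threads, and a dual-socket board to 128 cores and 256 threads, matching the numbers AMD quoted.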

EPYC 'Rome' processors are equipped with an eight-channel DDR4 memory controller, which is now housed inside the I/O die itself. Thanks to this design, each chiplet can access memory with equal latency. The multi-core beasts can support up to 4TB of DDR4 memory per socket. They are also the first x86 processors to support the PCIe 4.0 standard, with up to 128 PCIe 4.0 lanes, which makes them an ideal companion for AMD's recently announced Instinct MI60 and MI50 accelerators that use a PCIe 4.0 x16 interface. However, AMD didn't specify whether the PCIe controller resides inside the I/O die. It's probably safe to assume that each chiplet has its own PCIe lanes.
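To put the PCIe 4.0 upgrade in perspective, the sketch below estimates theoretical one-direction link bandwidth from the per-lane signalling rates and line encodings defined by the PCIe specifications. The function name is ours, and the results ignore packet and protocol overhead, so real-world throughput will be somewhat lower.

```python
# Per-lane signalling rate in GT/s and line-encoding efficiency per PCIe generation.
PCIE_GENERATIONS = {
    "2.0": (5.0, 8 / 10),      # 8b/10b encoding
    "3.0": (8.0, 128 / 130),   # 128b/130b encoding
    "4.0": (16.0, 128 / 130),  # 128b/130b encoding
}


def pcie_bandwidth_gb_per_s(gen: str, lanes: int) -> float:
    """Theoretical bandwidth in GB/s for one direction of a PCIe link."""
    rate_gt, efficiency = PCIE_GENERATIONS[gen]
    return rate_gt * efficiency * lanes / 8  # divide by 8 to convert bits to bytes


if __name__ == "__main__":
    for gen, lanes in [("3.0", 16), ("4.0", 16), ("4.0", 128)]:
        bandwidth = pcie_bandwidth_gb_per_s(gen, lanes)
        print(f"PCIe {gen} x{lanes}: {bandwidth:.1f} GB/s per direction")
```

By this estimate a PCIe 4.0 x16 slot offers roughly 31.5 GB/s in each direction, double what PCIe 3.0 x16 provides.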

EPYC 'Rome' processors will work without hiccups on the existing 'Naples' server platform and the future 'Milan' platform aimed at processors based on the Zen 3 CPU microarchitecture. AMD has already started sampling EPYC 'Rome' processors with some of the company's biggest enterprise customers.

AMD hasn't revealed the pricing and availability of the EPYC 'Rome' line-up. Nevertheless, the processors are expected to be released in 2019, assuming there are no major setbacks.

Zhiye Liu is a news editor, memory reviewer, and SSD tester at Tom’s Hardware. Although he loves everything that’s hardware, he has a soft spot for CPUs, GPUs, and RAM.

milkod2001 Just wondering whether PCIe 4.0 will also bring faster M.2 SSDs, or whether they will stay the same. Also, what other real benefits are coming from PCIe 4.0?

RememberThe5th PCIe 4.0 should give more bandwidth, but current GPUs and M.2 drives still haven't reached the limit of PCIe 3.0 or 2.0. In short, not even close.

bit_user

Quoting the article: "Thanks to this improved design, each chiplet can access the memory with equal latency."

I don't want equal latency to come at the expense of low latency. The current EPYC architecture provides low latency for local memory accesses, which can be achieved through NUMA-aware apps, OS, and/or VM partitioning.

I hope AMD didn't just cede ground on one of EPYC's strengths.

bit_user

milkod2001 said: "Just wondering whether PCIe 4.0 will also bring faster M.2 SSDs, or whether they will stay the same. Also, what other real benefits are coming from PCIe 4.0?"

The main benefit for consumers (and we still don't know when it will arrive in a consumer platform) will be that you can do more with fewer PCIe lanes. So, splitting your GPU lanes to dual x8 will be virtually painless, as will splitting your M.2 to dual x2 connections.

Personally, I'm most interested in seeing full-rate 10 Gbps Ethernet on a little PCIe 4.0 x1 card.

RememberThe5th said: "PCIe 4.0 should give more bandwidth, but current GPUs and M.2 drives still haven't reached the limit of PCIe 3.0 or 2.0. In short, not even close."

Yes, they have exceeded what PCIe 2.0 can support, as that's < 2 GB/sec for a x4 link (or a x2 link @ PCIe 3.0).

RememberThe5th Well, I meant the x16 lanes. I haven't looked into M.2 and the x2/x4/x8 modes and their transfer rates, but it's good to know.

redgarl PCIe 4.0 seems to be important for AMD because of this:

https://images.anandtech.com/doci/13547/1541527920197687529224_575px.jpg

01:03PM EST - Infinity Fabric GPU to GPU at 100 GB/s per link

01:03PM EST - Without bridges or switches

01:03PM EST - Connected in a ring

01:03PM EST - Helps scaling multiGPU

RememberThe5th At least they didn't stick to the same "PCIE LANES" as Intel over the course of the years...

stdragon I can imagine the TDP being at least 200 watts under full load. But how much more than that? I mean, I get that 7nm is more efficient than 14nm at the transistor level, but there are also a lot more dies on that package. It will be very interesting to see the cooling strategies for a racked server that uses as few U's as possible.