AMD Embraces USB-C For Some Navi 21 Radeon GPUs

While Nvidia is killing off VirtualLink, AMD is seemingly implementing the USB-C port on some Big Navi models, which will probably compete with the best gaming graphics cards that are on the market.

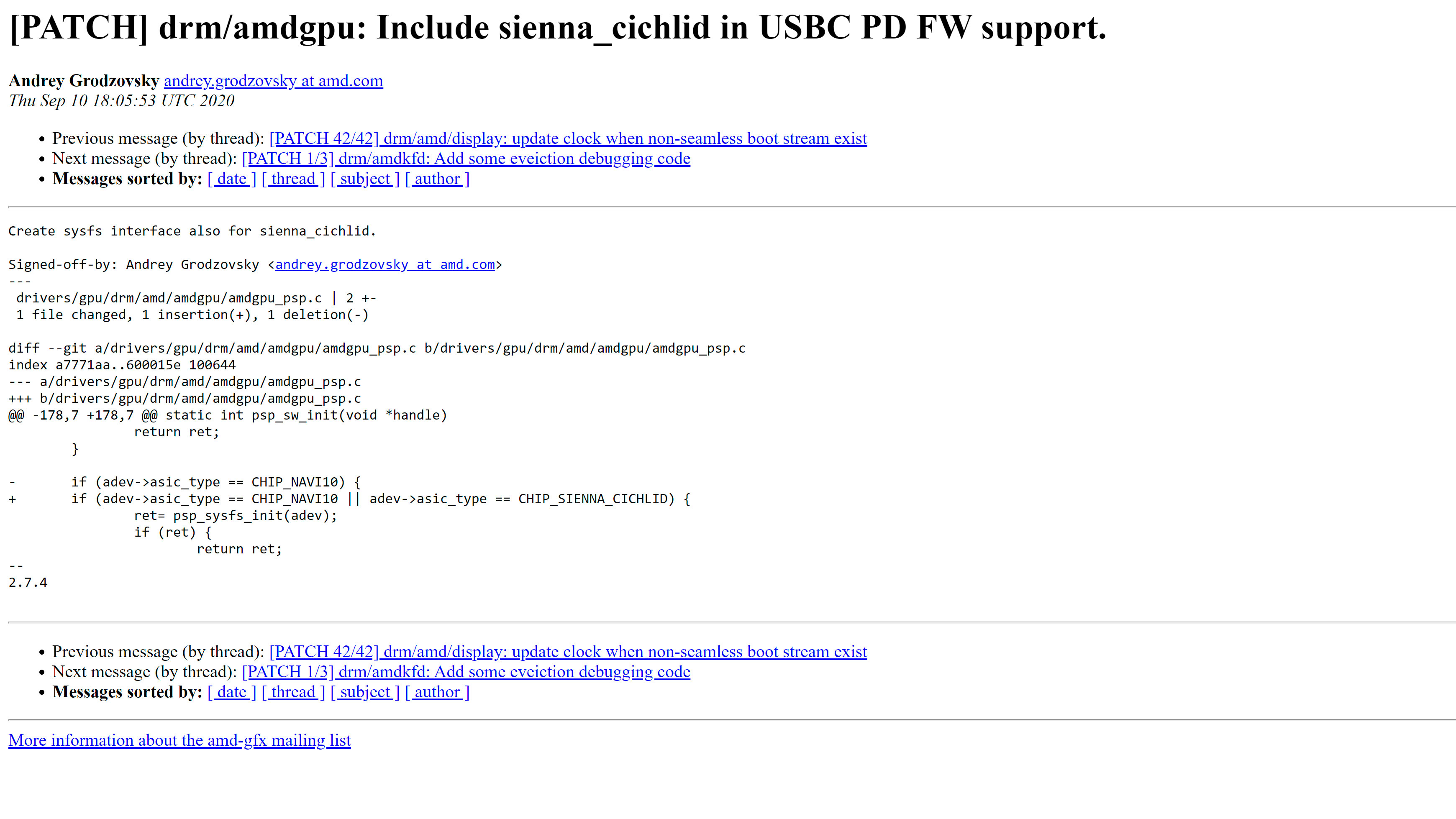

One eagle-eyed Redditor spotted a new patch for AMD's open-source AMDGPU driver for Linux that adds support for the USB-C interface. The patch mentions Sienna Cichlid, which is the rumored codename for AMD's Navi 21-based graphics card. It's not like it's the first time that AMD is incorporating USB-C on a graphics card either. The Radeon Pro W5700, which is powered by Navi 10, comes equipped with a USB-C port. However, this might be the first time that the chipmaker is putting one on a gaming graphics card.

Despite popular belief, the USB-C port on Nvidia's previous GeForce RTX 20-series (codename Turing) graphics cards wasn't some specialized interface for VirtualLink. It was just your everyday USB-C interface that Nvidia conveniently adopted to support VR headsets, a feature that never took off. In fact, you could use it like any other USB-C port to connect your headphones, external SSD enclosures or USB 3.0 hubs, charge your Android smartphone, etc.

AMD's reasons for embracing USB-C on Big Navi are unknown for the moment. With the proliferation of USB-C monitors in the last couple of years, the most obvious explanation would be to accommodate the new wave of monitors. Nevertheless, AMD will likely give us the low-down soon: the chipmaker has scheduled its Radeon RX 6000 announcement for October 28.


Zhiye Liu is a news editor, memory reviewer, and SSD tester at Tom’s Hardware. Although he loves everything that’s hardware, he has a soft spot for CPUs, GPUs, and RAM.


bigdragon

I think this is a good call by AMD. USB-C video output is becoming increasingly common on laptops and tablets. I'm also seeing it on more monitors. I look forward to having 1 cable solve everything for me instead of having a variety of cables and adaptors.

AMD's higher VRAM amount and USB-C support have really diminished my excitement for what Nvidia released. I expect graphics cards to go a minimum of 4 years, so forward-looking specifications are important to me.

jimmysmitty

> bigdragon said: I think this is a good call by AMD. USB-C video output is becoming increasingly common on laptops and tablets. I'm also seeing it on more monitors. I look forward to having 1 cable solve everything for me instead of having a variety of cables and adaptors.
>
> AMD's higher VRAM amount and USB-C support have really diminished my excitement for what Nvidia released. I expect graphics cards to go a minimum of 4 years, so forward-looking specifications are important to me.

It's eDP though, just using the USB-C port, as Intel has been pushing heavily to unify the standards. In fact, I think if Intel had their way everything would use Type-C and Thunderbolt.

I guess we will see what AMD's plans are for it. Not sure I see much benefit in it other than what Nvidia had planned but didn't pan out.

truerock

The only thing that bothers me about USB-C is that it supports 40 Gbps, which is OK for HDMI 2.1, but DisplayPort 2.0 runs at 80 Gbps.

I've been running 1080p at 60fps since 2012. I've been waiting to upgrade to 4K at 120fps and HDR10 color depth, and I'll need DisplayPort 2.0 to do that.

I assume DisplayPort 2.0 will start shipping at the end of this year, but Nvidia's newest GeForce RTX 3080 is not powerful enough to support DisplayPort 2.0.

I'm hoping that Nvidia's newest cards in 2021 will support DisplayPort 2.0.

Khahandran

> truerock said: The only thing that bothers me about USB-C is that it supports 40 Gbps, which is OK for HDMI 2.1, but DisplayPort 2.0 runs at 80 Gbps.
>
> I've been running 1080p at 60fps since 2012. I've been waiting to upgrade to 4K at 120fps and HDR10 color depth, and I'll need DisplayPort 2.0 to do that.
>
> I assume DisplayPort 2.0 will start shipping at the end of this year, but Nvidia's newest GeForce RTX 3080 is not powerful enough to support DisplayPort 2.0.
>
> I'm hoping that Nvidia's newest cards in 2021 will support DisplayPort 2.0.

DisplayPort 2.0 is next year. There are zero monitors supporting it because the scalers haven't been made yet. As a result you won't see it in GPUs either, whereas there are HDMI 2.1 TVs now, and some monitors launching within the next 6 months supporting it.

Also, full HDMI 2.1 at 48 Gbps (total max speed and not the actual usable speed) and CVT-RB monitor timings can support 4K HDR10 at 144 Hz at 4:4:4 without DSC. Even HDMI 2.0 or DP 1.4a can do that if you're willing to accept DSC or chroma subsampling.

Chung Leong

The port lets you add a small portable touch screen to a gaming PC. Not sure how useful that is.

spentshells

> Chung Leong said: The port lets you add a small portable touch screen to a gaming PC. Not sure how useful that is.

Would be fun to be able to tune on the fly, in game.

cryoburner

> AMD's reasons for embracing USB-C on Big Navi are unknown for the moment.

My best guess would be that AMD saw Nvidia adding it to their 20-series cards, and while it may have been too late to work into the 5000-series, incorporated it into their higher-end RDNA2 designs assuming that it might become a common feature moving forward. By the time Nvidia started getting rid of it, AMD probably already had it in the works and decided to go through with it anyway.

It would be funny if USB-C did become a standard on graphics cards in the future though, and Nvidia ended up including it again on their next-generation cards.

graylion

> Admin said: AMD adds support for USB-C on Sienna Cichlid (reportedly Navi 21) graphics card in AMDGPU driver for Linux.

Are there any cards out there that actually have the port?

cryoburner

> graylion said: Are there any cards out there that actually have the port?

As far as current generation consumer-focused cards go, you may be able to find a few models of the Radeon 7900 XT or XTX that support it. And of course, some older hardware from Nvidia's RTX 20 series and AMD's Radeon 6000 series does as well, but it seems like the port has largely been dropped by graphics card manufacturers. Perhaps it will make a reappearance as USB-C becomes more common for displays, but it added additional cost to cards, and they probably discovered that more people would rather have access to another DP or HDMI port in its place. If you just want to hook a USB-C display up to a desktop graphics card, then your best bet would likely be to get an adapter for one of those ports.

When Nvidia first put USB-C on their higher-end 20-series cards 5 years ago, the idea was that it would be used for wired VR headsets utilizing the VirtualLink standard, but that never really took off.