Arc A770 Beats RTX 3060 in Ray Tracing Performance, According to Intel Benchmarks

As promised, Intel has released a new video highlighting the ray tracing technology behind its Arc Alchemist graphics cards. While the Arc A770 has yet to prove itself, the looming graphics card aims to fight for a spot on the list of best graphics cards.

We've already seen how the Arc A380 measures up to the competition in ray tracing performance. However, the Arc A380 is the entry-level SKU with just eight ray tracing units (RTUs), whereas the Arc A770 bears the flagship crown and has 32 RTUs. The Arc A770 is much more interesting, especially since Intel's backing up its previous claims that Arc's ray tracing hardware is competitive with, or even a bit better than, Nvidia's second-generation RT cores inside Ampere.

That's quite the claim, though Intel does go on to provide some low-level details of its ray tracing hardware. Unlike AMD's Ray Accelerators in the Radeon RX 6000 series of GPUs, Arc has full ray tracing acceleration. AMD uses the texture units to do ray/box BVH intersections at a rate of 4 boxes per cycle. Intel does the BVH traversal in hardware and can do 12 ray/box intersections per cycle. Beyond that, both AMD and Intel can do 1 ray/triangle intersection per cycle, per core. Nvidia can do twice the ray/triangle intersections per RT core with Ampere, but it's not clear what the ray/box rate is for either Turing or Ampere.
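To put the quoted rates side by side, here is a minimal sketch in Python. It only uses the AMD and Intel figures stated above and leaves Nvidia out, since its ray/box rate is unknown. It assumes the 12 ray/box figure applies per RTU, and the "peak" number assumes every RTU is fully busy each clock, which real workloads won't achieve. The dictionary keys and function names are illustrative, not anything from Intel or AMD.

```python
# Per-unit, per-cycle intersection rates as quoted in the article.
RATES = {
    "amd_ray_accelerator": {"ray_box": 4, "ray_triangle": 1},
    "intel_rtu": {"ray_box": 12, "ray_triangle": 1},
}

A770_RTU_COUNT = 32  # from the article: the Arc A770 has 32 RTUs


def rate_ratio(a: str, b: str, kind: str) -> float:
    """Return how many times faster unit `a` is than unit `b` for one test kind."""
    return RATES[a][kind] / RATES[b][kind]


def peak_tests_per_cycle(unit: str, kind: str, unit_count: int) -> int:
    """Theoretical peak tests per clock, assuming every unit is fully busy."""
    return RATES[unit][kind] * unit_count


box_ratio = rate_ratio("intel_rtu", "amd_ray_accelerator", "ray_box")
triangle_ratio = rate_ratio("intel_rtu", "amd_ray_accelerator", "ray_triangle")
a770_peak_box = peak_tests_per_cycle("intel_rtu", "ray_box", A770_RTU_COUNT)

print(f"Intel vs. AMD ray/box rate per unit: {box_ratio:.1f}x")
print(f"Intel vs. AMD ray/triangle rate per unit: {triangle_ratio:.1f}x")
print(f"Arc A770 peak ray/box tests per cycle: {a770_peak_box}")
```

These are paper numbers only. They ignore clock speeds, unit counts on competing cards, and memory behavior, so they say nothing definitive about in-game frame rates.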

Intel details its BVH cache, which further speeds up BVH traversal. The RTUs also have a Thread Sorting Unit that takes the results of the ray tracing work and then groups them according to complexity to help maximize utilization of the GPU shader cores. It all sounds pretty good on paper, but how does it work in the real world of gaming?

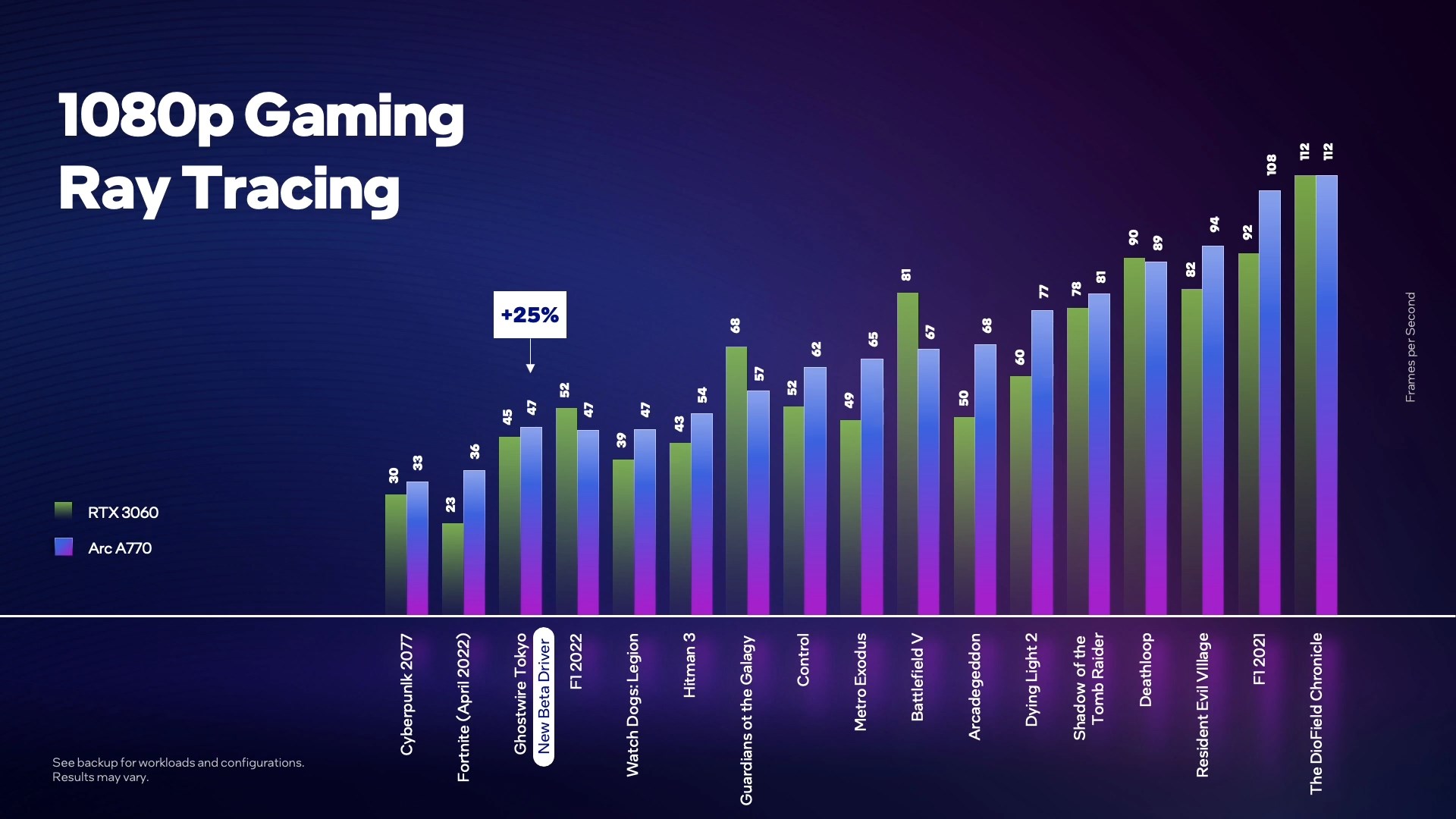

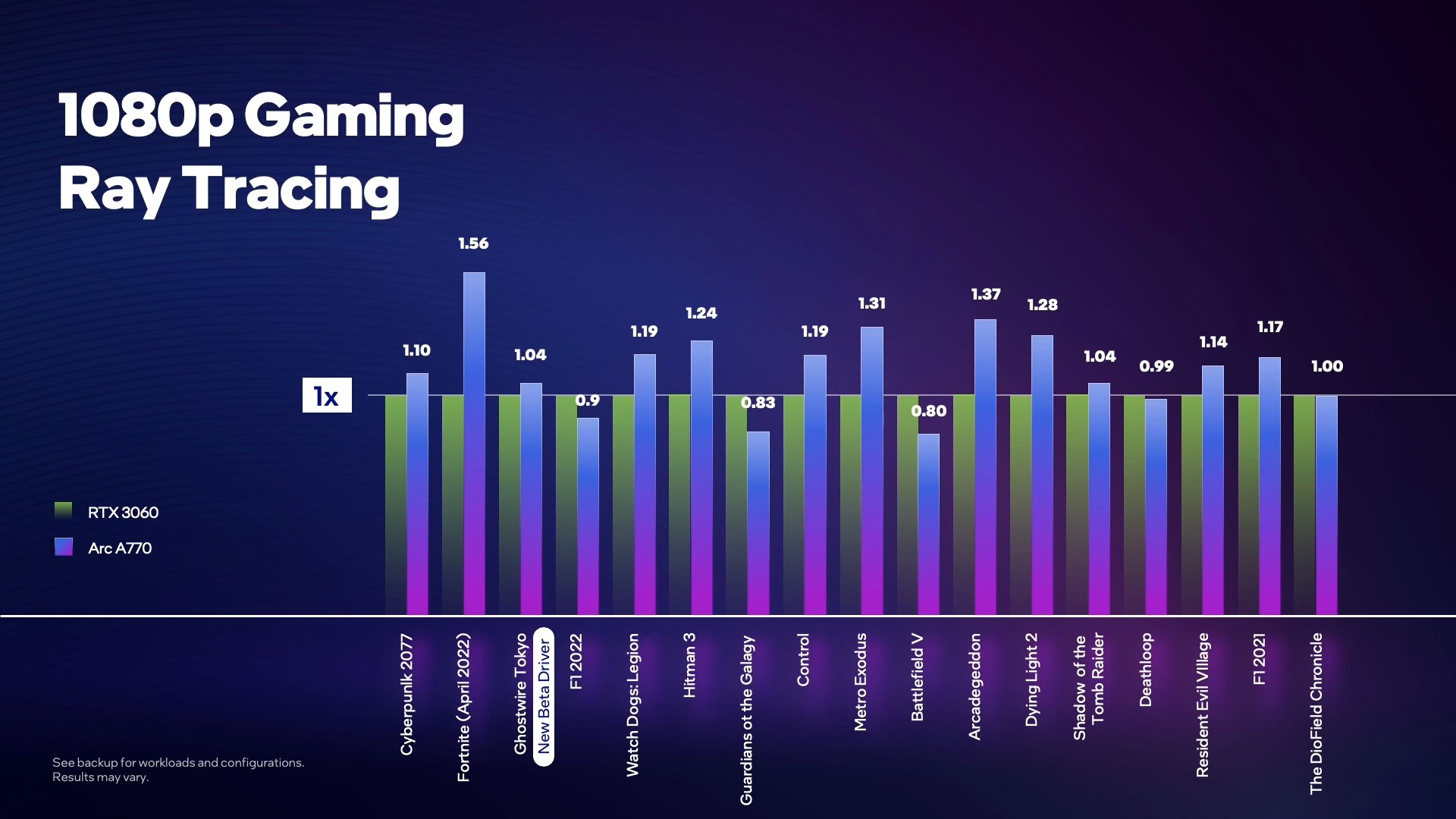

According to Intel's benchmarks, the Arc A770 delivered better ray tracing performance than the GeForce RTX 3060 at 1080p (1920x1080) on ultra settings. Alchemist outperformed Ampere by 1.04X to 1.56X. The Arc A770 lost to the GeForce RTX 3060 in only four titles: F1 2022, Guardians of the Galaxy, Battlefield V, and Deathloop. Intel's graphics card tied with the GeForce RTX 3060 in The DioField Chronicle.

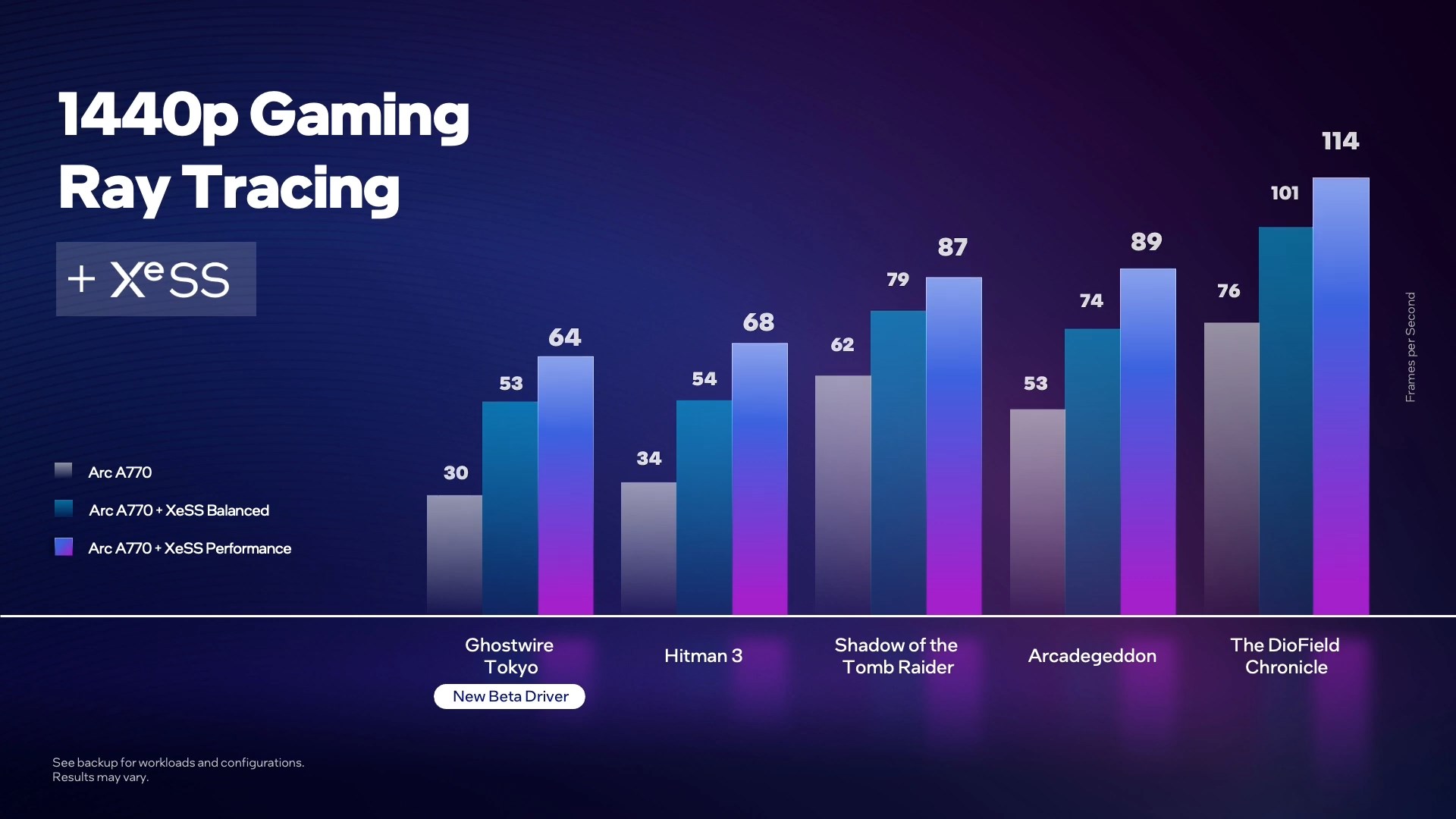

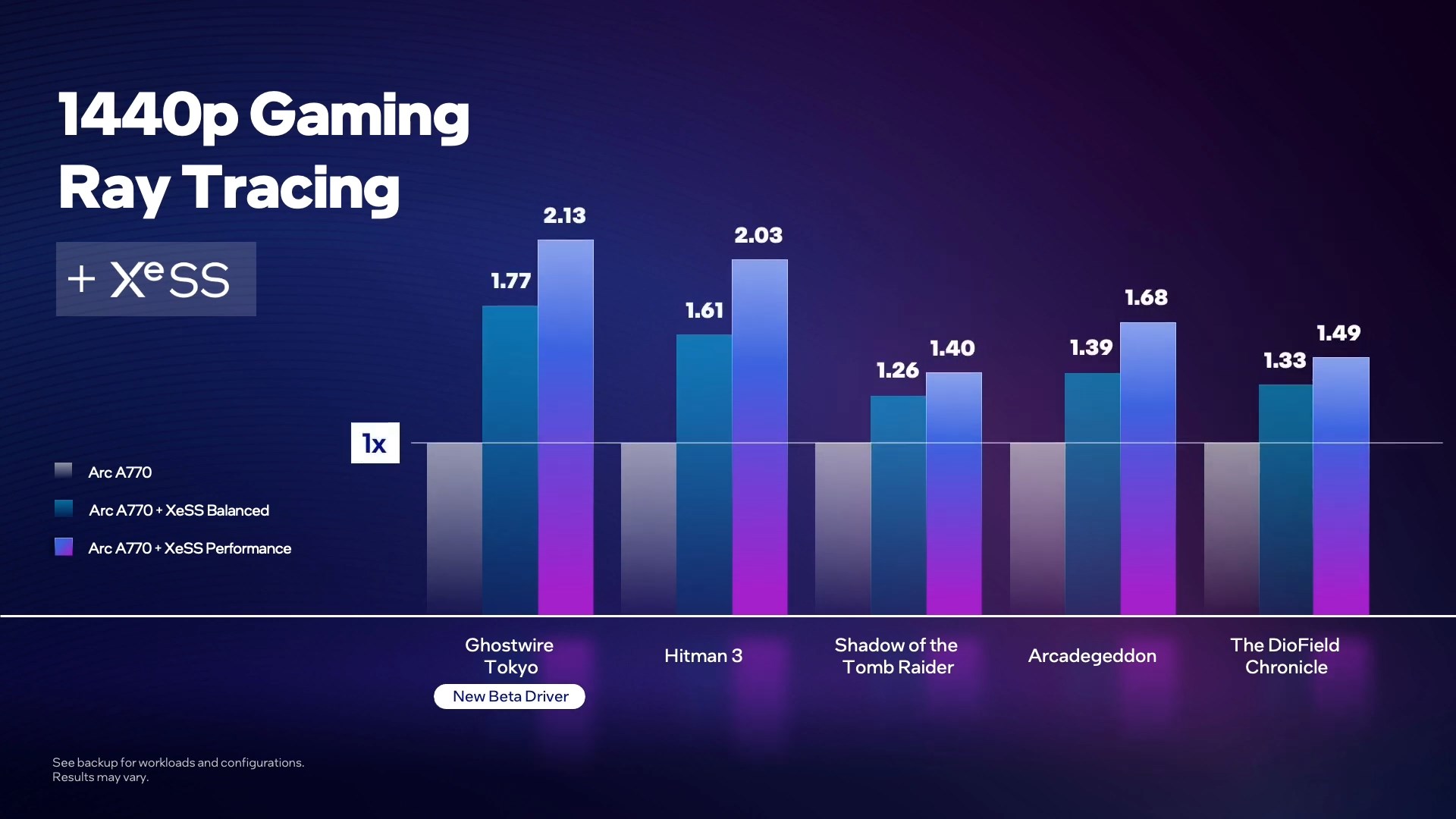

Ray tracing taxes the graphics card at any resolution, and performance drops further as you increase the resolution. That's where Intel's XeSS AI upscaling technology comes in to save Arc. Intel used the highest possible settings for its XeSS 1440p tests with ray tracing configured to the maximum.

The results showed that the Arc A770 struggled to deliver frame rates above 40 FPS in titles such as Ghostwire Tokyo and Hitman 3. However, with XeSS enabled on a balanced preset, the Arc A770 showed 1.77X higher performance in Ghostwire Tokyo and 1.61X in Hitman 3. That's with the balanced preset, though. So if you value performance over eye candy, the XeSS performance setting can help boost performance further.

With XeSS on the performance preset, the Arc A770 offered over 2X better frame rates in Ghostwire Tokyo and Hitman 3. The graphics card also showed visible performance uplifts (up to 1.68X) in the other tested titles, such as Shadow of the Tomb Raider, Arcadegeddon, and The DioField Chronicle.
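As a rough illustration of what those multipliers mean in frame rates, the Python sketch below applies them to a native baseline. The 40 FPS baseline is a hypothetical stand-in, since Intel's results are only described here as struggling to exceed 40 FPS. The 2.0 performance-preset figure is a lower bound taken from "over 2X", not a measured value.

```python
def projected_fps(native_fps: float, uplift: float) -> float:
    """Scale a native frame rate by an upscaling uplift multiplier."""
    return native_fps * uplift


# Hypothetical baseline: the article only says native results stayed around or below 40 FPS.
NATIVE_FPS = 40.0

# Balanced-preset uplifts quoted in the article.
BALANCED_UPLIFT = {"Ghostwire Tokyo": 1.77, "Hitman 3": 1.61}

# "Over 2X" on the performance preset, treated here as a lower bound.
PERFORMANCE_MIN_UPLIFT = 2.0

balanced = {
    title: round(projected_fps(NATIVE_FPS, uplift), 1)
    for title, uplift in BALANCED_UPLIFT.items()
}
performance_floor = projected_fps(NATIVE_FPS, PERFORMANCE_MIN_UPLIFT)

for title, fps in balanced.items():
    print(f"{title}: about {fps} FPS with XeSS balanced")
print(f"Performance preset: at least {performance_floor} FPS")
```

With a lower real baseline, the projected numbers shrink proportionally, so treat these as an upper-end illustration rather than expected results.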


Barring any further delays, the Arc A770 should arrive on the retail market before the end of the year. Intel has kept a tight lip on the graphics card's pricing. However, new clues suggest that the Arc A770 could cost around the $400 mark. That would make sense, considering the official MSRP for the RTX 3060 is only $330. The RTX 3060 isn't selling at that price, at least not yet, but with Ada Lovelace on the horizon, we could see GPU prices continue to fall.

Zhiye Liu is a news editor, memory reviewer, and SSD tester at Tom’s Hardware. Although he loves everything that’s hardware, he has a soft spot for CPUs, GPUs, and RAM.


-Fran- Out of curiosity... Has nVidia or AMD/ATI ever done so much marketing for one of their own GPU releases in this manner?

I personally don't recall them doing this, at all, ever. I understand Intel needs to keep some of the interest alive, but... What good does it do if they just don't release the darn cards and let independent reviewers do it? This is now officially bordering the pathetic.

Regards.

PiranhaTech

They generally have, at least AMD does as far as I know. AMD's charts have been pretty good, but gamers looking for a card know to be careful about them. I haven't heard many tech publications say they are shady outside the obvious marketing wankery (showing where they are ahead and not too many where they are behind)

-Fran- said: Out of curiosity... Has nVidia or AMD/ATI ever done so much marketing for one of their own GPU releases in this manner?

I personally don't recall them doing this, at all, ever. I understand Intel needs to keep some of the interest alive, but... What good does it do if they just don't release the darn cards and let independent reviewers do it? This is now officially bordering the pathetic.

Regards.

It's hard to tell if Intel will live up to their claims.

chalabam The 3060 has real driver support, CUDA, Tensorflow, a lot of goodies I do not use due to not wanting to log into nvidia, and a lot of stuff that Intel-ShortTermSupportAndAHistoryOfAbandoningVideoCards doesn't.

jkflipflop98

-Fran- said: Out of curiosity... Has nVidia or AMD/ATI ever done so much marketing for one of their own GPU releases in this manner?

I personally don't recall them doing this, at all, ever. I understand Intel needs to keep some of the interest alive, but... What good does it do if they just don't release the darn cards and let independent reviewers do it? This is now officially bordering the pathetic.

Regards.

Oh you kids.

There was an era back when. . .

https://www.youtube.com/watch?v=1NWUqIhB04I&ab_channel=LaMazmorraAbandon

-Fran-

I think you and some others missed the point of the question.

jkflipflop98 said: Oh you kids.

There was an era back when. . .

https://www.youtube.com/watch?v=1NWUqIhB04I&ab_channel=LaMazmorraAbandon

When has AMD or nVidia (or 3DFX, Matrox, VIA, ImTech, Apple, Samsung, Qualcomm, etc) done this type of specialized marketing "hype" for a product that has, supposedly, already launched (kind of) and has been delayed for over a year? We already know about ARC, that it exists and Intel is kind of, maybe, producing them in some quantities, but they keep talking about it and just not releasing it? When has this ever happened before in semiconductor history? All I can remember is pre-launch hype and there's plenty of ads during and slightly after release, but nothing this specialized to "keep the hype building". The closest I have in mind are Fury (AMD's flop) and Bulldozer, but mostly pre-hype until they crashed and burned. nVidia with Fermi and the FX5K series (see signature). None of them kept trying to hype those products after they launched and burned to a crisp and instead rushed the next gen of products (except Bulldozer, kind of... Piledriver fixed things a tad, but mostly same crap until Ryzen).

I don't know if some of you still believe Intel, but I for one am starting to get kind of bored and annoyed at the empty hype. Maybe it's just me, I guess.

Regards.

cryoburner

Alchemist outperformed Ampere between 1.04X to 1.56X.

1.56X RT performance might sound impressive until you realize they are talking about running Fortnite at a "cinematic" 36fps. : P

The Arc A770 is much more interesting, especially since Intel's backing up its previous claims that Arc is competitive or even a bit better than Nvidia's second-generation RT cores inside Ampere.

Considering they have suggested the A770 should be competitive with a 3060 Ti in modern APIs, falling back closer to 3060-level performance in RT implies that their RT performance is a bit worse than Nvidia's, not better. Their chart suggests the A770 may get around 10% better RT performance than a 3060 on average, but the 3060 Ti tends to get around 30% more RT performance than a 3060. The RT performance does look competitive, but not quite at the same level as far as the performance hit from enabling it is concerned. Of course, if they price the card like a 3060, that could still be a win.

There's also the question of how well XeSS will compare to DLSS, considering most of these games will require upscaling for optimal performance with RT. If DLSS can get away with a lower render resolution for similar output quality, that could easily eat up that ~10% difference between the 3060 and A770 in RT.

However, new clues suggest that the Arc A770 could cost around the $400 mark. That would make sense, considering the official MSRP for the RTX 3060 is only $330.

I would suspect it to be priced less than $400. If they are directly comparing it to the 3060 here, then they will likely price it to be competitive with the 3060. They have stated that the cards will be priced according to their performance in "tier 3" games on older APIs, and the A770 will probably perform more like a 3060 in those titles, even if it can be competitive with the 3060 Ti in newer APIs (at least when RT isn't involved).

Nvidia did a lot of pre-release hype for their 20-series when they knew the only real selling points were going to be RT and DLSS that were not going to be usable in actual games for many months after launch. That Star Wars: Reflections demo was showcased 6 months before the cards came out. So far I haven't seen Intel spending millions hiring Lucasfilm to make a promotional tech demo for their cards. : P

-Fran- said: Out of curiosity... Has nVidia or AMD/ATI ever done so much marketing for one of their own GPU releases in this manner?

I personally don't recall them doing this, at all, ever. I understand Intel needs to keep some of the interest alive, but... What good does it do if they just don't release the darn cards and let independent reviewers do it? This is now officially bordering the pathetic.

Obviously, Intel most likely planned their cards to release earlier in the year, but decided they needed to spend more time on drivers than they originally expected. So their promotion of the cards got dragged out a bit. And of course, unlike AMD and Nvidia, Intel is a new entrant into the market. So it's only natural that they will need more promotion than their competitors to get the word out there about their new cards.

And the delays ultimately don't matter that much, so long as they release the cards at competitive price points. Intel is likely willing to sell the hardware at-cost to help them establish a presence in the market, and delaying the release until the drivers are in a mostly-usable state should make for a better first impression than if they released them with broken drivers half a year ago. Having the cards mostly get scooped up by mining operations and resellers probably wouldn't have helped them establish much of a presence in the market either. Considering the still-inflated prices of mid-range graphics hardware, they still have an opportunity to make a good impression in this range.

OriginFree

chalabam said: The 3060 has real driver support, CUDA, Tensorflow, a lot of goodies I do not use due to not wanting to log into nvidia, and a lot of stuff that Intel-ShortTermSupportAndAHistoryOfAbandoningVideoCards doesn't.

Yes this. I have zero doubts that Intel could outperform AMD & Nvidia on the hardware front if they want to (and they accept taking a loss to gain market share) but the drivers. Oh god, the drivers. Won't you please think of the drivers. /simpsons

I'd be happy if Intel could roll out 3060 and slower competition en masse and focus on getting the drivers / secondary software up to snuff for the 1st or 2nd gen. I'm ok waiting till 2024/2025 for real top of the line competition but what use is a 4090TI killer if the drivers suck and will never get fixed?