Tachyum announces questionable 96-Core Prodigy-based AI workstation — $5,000 system also supposedly includes 1TB of memory

Tachyum to offer Prodigy ATX Platform.

In a specially issued whitepaper, Tachyum on Friday announced its Prodigy ATX Platform, an artificial intelligence-oriented workstation based on a cut-down version of its Prodigy universal processor, which has yet to tape out. The company plans to sell the unit for $5,000 and claims that the machine will democratize access to large language models with billions (or trillions) of parameters. However, the company hasn't disclosed when the product will become available.

The Prodigy ATX Platform is based on a 96-core Prodigy processor (which is projected to be volume-produced on TSMC's 5nm node in 2024) that runs at up to 5.70 GHz. The CPU is said to come with only half of its die enabled to reduce power consumption and improve yield, which helps to reduce costs and make the platform more accessible, Tachyum said.

Speaking of the processor, we should mention that Prodigy's release, initially set for 2020 after a 2019 tape-out, has been pushed back repeatedly (to 2021, 2022, and then 2023) amid ever more extravagant performance claims without any demonstrated prototypes. The company's latest plan pegs the Prodigy processor's launch for the second half of 2024 without elaborating, which could mean as late as December 2024.

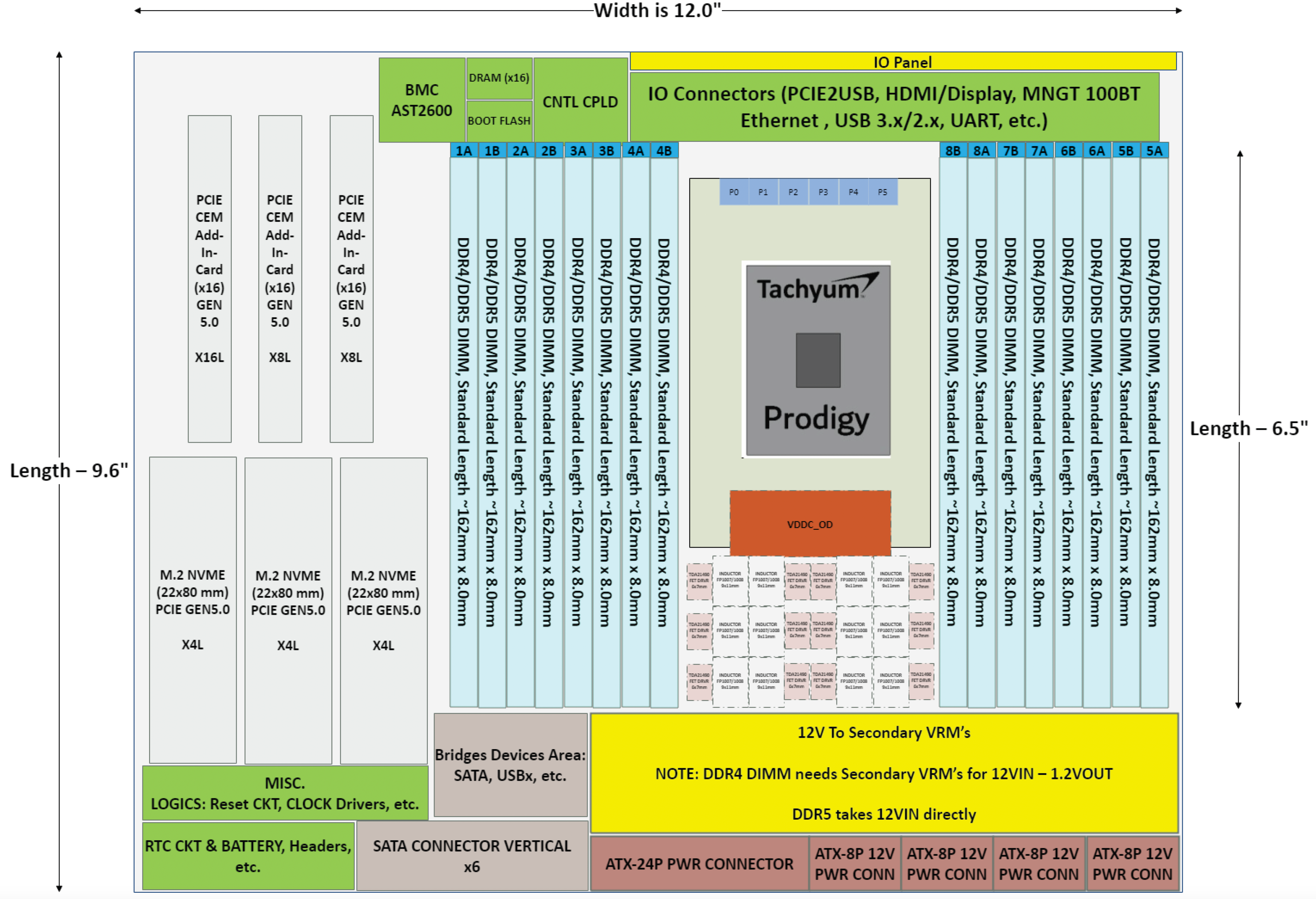

The machine is expected to come equipped with 1TB of DDR5-6400 SDRAM using 16 memory modules and offering a peak bandwidth of 819.2 GB/s. The system is expected to feature three PCIe 5.0 x16 slots, three M.2-2280 NVMe slots with a PCIe 5.0 x4 interface, and SATA connectors for SSDs and HDDs.
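The quoted bandwidth can be reproduced with a quick back-of-the-envelope calculation. The sketch below assumes that each of the 16 modules sits on its own 64-bit (8-byte) DDR5 channel, which the article does not state, and uses the 64GB module size from the cost estimate later in this article. The function names are illustrative.

```python
# Peak theoretical DRAM bandwidth = channels x transfer rate x bytes per transfer.
# Assumption (not stated in the article): one 64-bit (8-byte) channel per module.

def peak_bandwidth_gb_s(channels: int, mega_transfers_per_s: int,
                        bytes_per_transfer: int = 8) -> float:
    """Return peak bandwidth in decimal GB/s."""
    return channels * mega_transfers_per_s * bytes_per_transfer / 1000


def total_capacity_gb(modules: int, gb_per_module: int) -> int:
    """Return total installed memory in GB."""
    return modules * gb_per_module


bandwidth = peak_bandwidth_gb_s(channels=16, mega_transfers_per_s=6400)
capacity = total_capacity_gb(modules=16, gb_per_module=64)

print(f"{bandwidth:.1f} GB/s, {capacity} GB")  # prints "819.2 GB/s, 1024 GB"
```

Under that assumption the numbers line up with the announced 819.2 GB/s and 1TB. This is a theoretical peak; sustained bandwidth in real workloads would be lower.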

Being an ATX box, the system promises to offer all the I/O connectors that one comes to expect from such a machine, including USB, HDMI, and Ethernet. In addition, the motherboard will feature Aspeed's AST2600 baseboard management controller.

For now, Tachyum has released a block diagram of its Prodigy ATX Platform motherboard and an image of an empty grey PC chassis with its name on it.

The focus of the Prodigy ATX Platform is on using pre-trained models for inference and doing so more efficiently thanks to the architectural peculiarities of the processor. Tachyum says that a trillion-parameter model requires 2.04 TB of memory using FP8. With Tachyum's 4-bit TAI sparse format with 4-bit weights, the company says memory requirements drop dramatically to 765 GB, which makes it possible to support even larger models within the 1TB limit of the system's memory.
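These memory figures can be put in context with simple arithmetic. The sketch below computes only raw weight storage (parameters times bits per weight). The article does not break down what Tachyum's 2.04 TB and 765 GB figures include, so the gap between raw weights and the quoted numbers is left unexplained here. All names are illustrative.

```python
def weight_storage_gb(parameters: float, bits_per_weight: int) -> float:
    """Raw weight storage in decimal GB, ignoring activations, caches and metadata."""
    return parameters * bits_per_weight / 8 / 1e9


ONE_TRILLION = 1e12

raw_fp8_gb = weight_storage_gb(ONE_TRILLION, 8)   # 1000.0 GB of raw 8-bit weights
raw_4bit_gb = weight_storage_gb(ONE_TRILLION, 4)  # 500.0 GB of raw 4-bit weights

# Figures quoted by Tachyum for a trillion-parameter model (from the article).
claimed_fp8_gb = 2040.0
claimed_tai_gb = 765.0

# Ratio between the two quoted figures.
claimed_reduction = claimed_tai_gb / claimed_fp8_gb  # 0.375

# Does the quoted 4-bit figure fit in the system's 1TB (taken here as 1000 GB)?
fits_in_system = claimed_tai_gb <= 1000.0

print(raw_fp8_gb, raw_4bit_gb, claimed_reduction, fits_in_system)
```

Both quoted figures sit well above the raw weight sizes, which suggests they include more than the weights alone, though the source does not say what.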


Tachyum stated that a system powered by a single 96-core Prodigy processor with 1TB of RAM can run inference on a ChatGPT4 model with 1.7 trillion parameters, 'whereas it requires 52 Nvidia H100 GPUs to run the same thing at significantly higher cost and power consumption.'

"Generative AI will be widely used far faster than anyone originally anticipated," Dr. Radoslav Danilak, founder and CEO of Tachyum, said in a press release. "In a year or two, AI will be a required component on websites, chatbots and other critical productivity components to ensure a good user experience. Prodigy's powerful AI capabilities enable LLMs to run much easier and more cost-effectively than existing CPU + GPGPU-based systems, empowering organizations of all sizes to compete in AI initiatives that otherwise would be dominated by the largest players in their industry."

If Tachyum manages to popularize its Prodigy platform, then it can indeed change the rules of the game on the AI front, provided that it can supply enough processors. However, given the lack of working silicon, it is unclear whether this actually can happen. Furthermore, it is unclear when exactly Tachyum will start mass production of its Prodigy processors, as well as when it plans to make them available in volume.

As far as the Prodigy ATX Platform is concerned, we have some reasonable doubts about its economic viability for the company. Sixteen 64GB RDIMMs at $240 per unit cost $3,840. A highly custom multi-layer motherboard (we are talking about 12 to 16 layers here) with an advanced voltage regulator module could end up at $500, since relatively low-volume batches of motherboards are expensive. A 2000W PSU costs around $300, and a decent chassis with reliable coolers runs about $150. Combined, even without Tachyum's Prodigy processor, the system ends up at about $4,800.

Of course, since the company purchases things in volume, that will end up at a much lower cost, but since the Prodigy silicon is probably expensive too, we can only wonder whether the platform designed for inference will be an economically feasible product for Tachyum. And, of course, there's the growing skepticism surrounding the company's oft-delayed launch schedule.
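The component estimate above can be tallied as follows. This is a minimal sketch using only the retail-level prices quoted in this article; the dictionary keys and variable names are illustrative.

```python
# Estimated component costs in USD, as quoted in the article (processor excluded).
components = {
    "16 x 64GB RDIMM at $240 each": 16 * 240,
    "custom multi-layer motherboard": 500,
    "2000W PSU": 300,
    "chassis and cooling": 150,
}

subtotal = sum(components.values())  # 4790, which the article rounds to about $4,800
target_price = 5000
headroom = target_price - subtotal   # 210 left for the CPU, assembly and margin

for name, cost in components.items():
    print(f"{name}: ${cost:,}")
print(f"Subtotal without CPU: ${subtotal:,}")
print(f"Left under the ${target_price:,} price: ${headroom:,}")
```

At these prices, roughly $210 would remain for the processor, assembly, and margin, which is why volume pricing matters so much to the platform's viability.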

Anton Shilov is a contributing writer at Tom’s Hardware. Over the past couple of decades, he has covered everything from CPUs and GPUs to supercomputers and from modern process technologies and latest fab tools to high-tech industry trends.


Findecanor

Cautiously interested.

Whenever a tech company uses the word "democratize" about its product, that's often a red flag.

But is the CPU any good ... as a CPU?

bit_user

The fatal error Tachyum made was to try to be everything to everyone - a master of all trades. Had they focused on just a couple key niches, that might've been enough for them to have shipped product, by now, and got their legs under them. As it is, I'm sure the only way they're hanging on is by government subsidies and no-bid contracts.

> Tachyum stated that a system powered by a single 96-core Prodigy processor with 1TB of RAM can run inference on a ChatGPT4 model with 1.7 trillion parameters, 'whereas it requires 52 Nvidia H100 GPUs to run the same thing at significantly higher cost and power consumption.'

The key detail he's glossing over is that running it on a Prodigy won't have nearly the same throughput as using H100s. He's really just relying on the memory capacity his CPU can support to establish that wild differential. But, if you just needed more memory capacity, it would seem to make much more sense to go with one of the x86 processors. With 256 cores, I expect the most cost effective option would currently be AMD's EPYC 9754 (2P).

> As far as the Prodigy ATX Platform is concerned, we have some reasonable doubts about its economic viability for the company.

Thank you for doing this analysis. $5k sounded awfully cheap, to me.

This would be one kickstarter I wouldn't even be tempted by!

bit_user

> brandonjclark said: The Duke 'Nuke-Em of processors?

I think you mean:

https://en.wikipedia.org/wiki/Duke_Nukem_Forever

...which was first demo'd in 1998 and didn't ship until 2011.

I played the original Duke Nukem', which came out around the time Quake launched. Duke Nukem' was more advanced than Doom, but couldn't hold a candle to the graphics & gameplay in Quake. However, it was funny and irreverent enough to have still had an impact.

Neilbob

> bit_user said: I played the original Duke Nukem', which came out around the time Quake launched.

Duke Nukem 1 > 2 > 3D, which I think is the one you mean. But I'll let you off :)

I remember the (initial) insane hype around Duke Nukem Forever. It was quite similar to Daikatana, and both games ended up having a lot in common: they both ended up being complete and utter trash. Good times.

As for this, to me it seems like it has hints of them trying to leap on the AI bandwagon just to drum up some kind of interest in the product (though I admittedly don't know enough of the details to be making any kind of assessment).

bit_user

> vanadiel007 said: I am thinking vaporware. It would be very cheap considering the specs on paper.

I think it'll probably ship, just late and with reduced specs/higher price. Software support is going to be a big wildcard, also.

jlake3

> Findecanor said: Cautiously interested.
>
> Whenever a tech company uses the word "democratize" about its product, that's often a red flag.
>
> But is the CPU any good ... as a CPU?

It's a proprietary ISA, so it's sort of a guess at this stage what that core count and clockspeed will translate to in actual practice, and whether software will be able to take full advantage of all the numbers they've thrown out. Doing a little searching, apparently branch prediction is not looking to be as good as Intel/AMD/ARM and they've scaled up FP32 performance more than they have memory bandwidth, so there may be bottlenecks depending on what you do and how you do it.

...also a question whether people will write software to run on it, given its status as vaporware. If you can't buy it and no one writes anything for it, it's not a good CPU, it's just a good whitepaper.

Supposedly it will run unmodified X86, ARM, or RISC-V binaries at "similar to running native" through using a higher clockspeed to offset a predicted 30-40% translation overhead... but somehow that feels both dubious and unimpressive?